The panorama of synthetic intelligence, significantly in pure language processing (NLP), is present process a transformative shift with the introduction of the Byte Latent Transformer (BLT), and Meta’s newest analysis paper spills some beans about the identical. This progressive structure, developed by researchers at Meta AI, challenges the standard reliance on tokenization in giant language fashions (LLMs), paving the best way for extra environment friendly and sturdy language processing. This overview explores the BLT’s key options, benefits, and implications for the way forward for NLP, as a primer for the daybreak the place in all probability tokens could be changed for good.

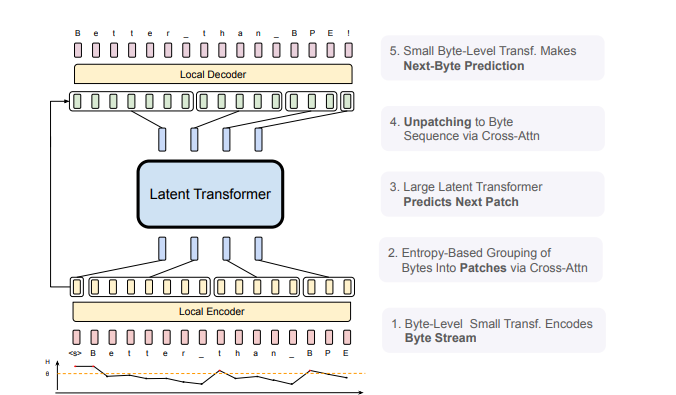

Determine 1: BLT Structure: Comprised of three modules, a light-weight Native Encoder that encodes enter bytes into patch representations, a computationally costly Latent Transformer over patch representations, and a light-weight Native Decoder to decode the following patch of bytes.

The Tokenization Downside

Tokenization has been a cornerstone in getting ready textual content knowledge for language mannequin coaching, changing uncooked textual content into a set set of tokens. Nonetheless, this methodology presents a number of limitations:

- Language Bias: Tokenization can create inequities throughout totally different languages, usually favoring these with extra sturdy token units.

- Noise Sensitivity: Mounted tokens battle to precisely characterize noisy or variant inputs, which may degrade mannequin efficiency.

- Restricted Orthographic Understanding: Conventional tokenization usually overlooks nuanced linguistic particulars which can be essential for complete language understanding.

Introducing the Byte Latent Transformer

The BLT addresses these challenges by processing language instantly on the byte stage, eliminating the necessity for a set vocabulary. As an alternative of predefined tokens, it makes use of a dynamic patching mechanism that teams bytes primarily based on their complexity and predictability, measured by entropy. This permits the mannequin to allocate computational sources extra successfully and concentrate on areas the place deeper understanding is required.

Key Technical Improvements

- Dynamic Byte Patching: The BLT dynamically segments byte knowledge into patches tailor-made to their data complexity, enhancing computational effectivity.

- Three-Tier Structure:

- Light-weight Native Encoder: Converts byte streams into patch representations.

- Giant World Latent Transformer: Processes these patch-level representations.

- Light-weight Native Decoder: Interprets patch representations again into byte sequences.

Key Benefits of the BLT

- Improved Effectivity: The BLT structure considerably reduces computational prices throughout each coaching and inference by dynamically adjusting patch sizes, resulting in as much as a 50% discount in floating-point operations (FLOPs) in comparison with conventional fashions like Llama 3.

- Robustness to Noise: By working instantly with byte-level knowledge, the BLT reveals enhanced resilience to enter noise, making certain dependable efficiency throughout numerous duties.

- Higher Understanding of Sub-word Buildings: The byte-level strategy permits for capturing intricate particulars of language that token-based fashions could miss, significantly useful for duties requiring deep phonological and orthographic understanding.

- Scalability: The structure is designed to scale successfully, accommodating bigger fashions and datasets with out compromising efficiency.

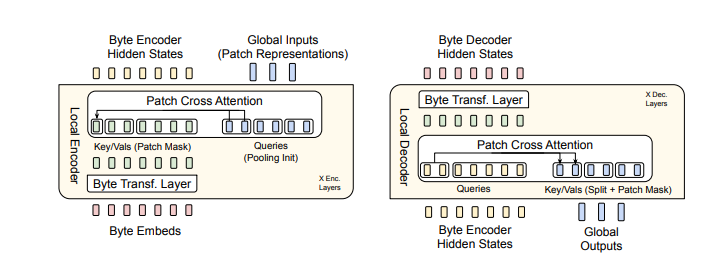

Determine 2: BLT makes use of byte n-gram embeddings together with a cross-attention mechanism to boost the circulation of data between the Latent Transformer and the byte-level modules (see Determine 5). In distinction to fixed-vocabulary tokenization, BLT dynamically organizes bytes into patches, thereby sustaining entry to byte-level data.

Experimental Outcomes

In depth experiments have demonstrated that the BLT matches or exceeds the efficiency of established tokenization-based fashions whereas using fewer sources. As an example:

- On the HellaSwag noisy knowledge benchmark, Llama 3 achieved 56.9% accuracy, whereas the BLT reached 64.3%.

- In character-level understanding duties like spelling and semantic similarity benchmarks, it achieved near-perfect accuracy charges.

These outcomes underscore the BLT’s potential as a compelling different in NLP functions.

Actual-World Implications

The introduction of the BLT opens thrilling prospects for:

- Extra environment friendly AI coaching and inference processes.

- Improved dealing with of morphologically wealthy languages.

- Enhanced efficiency on noisy or variant inputs.

- Larger fairness in multilingual language processing.

Limitations and Future Work

Regardless of its groundbreaking nature, researchers acknowledge a number of areas for future exploration:

- Improvement of end-to-end realized patching fashions.

- Additional optimization of byte-level processing strategies.

- Investigation into scaling legal guidelines particular to byte-level transformers.

Conclusion

The Byte Latent Transformer marks a major development in language modeling by transferring past conventional tokenization strategies. Its progressive structure not solely enhances effectivity and robustness but additionally redefines how AI can perceive and generate human language. As researchers proceed to discover its capabilities, we anticipate thrilling developments in NLP that may result in extra clever and adaptable AI methods. In abstract, the BLT represents a paradigm shift in language processing-one that might redefine AI’s capabilities in understanding and producing human language successfully.

The put up Revolutionizing Language Fashions: The Byte Latent Transformer (BLT) appeared first on Datafloq.