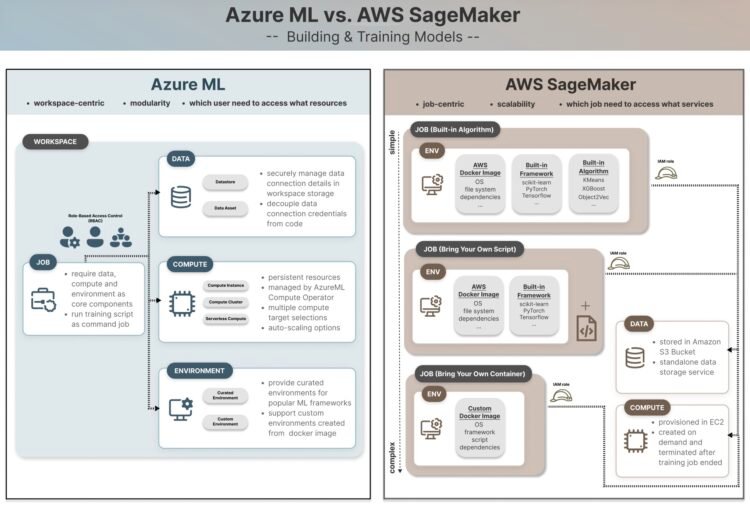

(AWS) are the world’s two largest cloud computing platforms, offering database, community, and compute sources at international scale. Collectively, they maintain about 50% of the worldwide enterprise cloud infrastructure providers market—AWS at 30% and Azure at 20%. Azure ML and AWS SageMaker are machine studying providers that allow knowledge scientists and ML engineers to develop and handle the whole ML lifecycle, from knowledge preprocessing and have engineering to mannequin coaching, deployment, and monitoring. You’ll be able to create and handle these ML providers in AWS and Azure by console interfaces, or cloud CLI, or software program improvement kits (SDK) in your most popular programming language – the strategy mentioned on this article.

Azure ML & AWS SageMaker Coaching Jobs

Whereas they provide comparable high-level functionalities, Azure ML and AWS SageMaker have elementary variations that decide which platform most accurately fits you, your staff, or your organization. Firstly, contemplate the ecosystem of the present knowledge storage, compute sources, and monitoring providers. As an example, if your organization’s knowledge primarily sits in an AWS S3 bucket, then SageMaker could develop into a extra pure selection for creating your ML providers, because it reduces the overhead of connecting to and transferring knowledge throughout totally different cloud suppliers. Nonetheless, this doesn’t imply that different elements are usually not price contemplating, and we are going to dive into the main points of how Azure ML differs from AWS SageMaker in a standard ML state of affairs—coaching and constructing fashions at scale utilizing jobs.

Though Jupyter notebooks are priceless for experimentation and exploration in an interactive improvement workflow on a single gadget, they don’t seem to be designed for productionization or distribution. Coaching jobs (and different ML jobs) develop into important within the ML workflow at this stage by deploying the duty to a number of cloud cases with a view to run for an extended time, and course of extra knowledge. This requires organising the information, code, compute cases and runtime environments to make sure constant outputs when it’s now not executed on one native machine. Consider it just like the distinction between creating a dinner recipe (Jupyter pocket book) and hiring a catering staff to cook dinner it for 500 clients (ML job). It wants everybody within the catering staff to entry the identical elements, recipe and instruments, following the identical cooking process.

Now that we perceive the significance of coaching jobs, let’s take a look at how they’re outlined in Azure ML vs. SageMaker in a nutshell.

Outline Azure ML coaching job

from azure.ai.ml import command

job = command(

code=...

command=...

setting=...

compute=...

)

ml_client.jobs.create_or_update(job)Create SageMaker coaching job estimator

from sagemaker.estimator import Estimator

estimator = Estimator(

image_uri=...

function=...

instance_type=...

)

estimator.match(training_data_s3_location)We’ll break down the comparability into following dimensions:

- Mission and Permission Administration

- Information storage

- Compute

- Setting

Partially 1, we are going to begin with evaluating the high-level venture setup and permission administration, then speak about storing and accessing the information required for mannequin coaching. Half 2 will talk about varied compute choices underneath each cloud platforms, and the right way to create and handle runtime environments for coaching jobs.

Mission and Permission Administration

Let’s begin by understanding a typical ML workflow in a medium-to-large staff of knowledge scientists, knowledge engineers, and ML engineers. Every member could concentrate on a selected function and accountability, and assigned to a number of tasks. For instance, a knowledge engineer is tasked with extracting knowledge from the supply and storing it in a centralized location for knowledge scientists to course of. They don’t must spin up compute cases for working coaching jobs. On this case, they might have learn and write entry to the information storage location however don’t essentially want entry to create GPU cases for heavy workloads. Relying on knowledge sensitivity and their function in an ML venture, staff members want totally different ranges of entry to the information and underlying cloud infrastructure. We’re going to discover how two cloud platforms construction their sources and providers to steadiness the necessities of staff collaboration and accountability separation.

Azure ML

Mission administration in Azure ML is Workspace-centric, beginning by making a Workspace (underneath your Azure subscription ID and useful resource group) for storing related useful resource and property, and shared throughout the venture staff for collaboration.

Permissions to entry and handle sources are granted on the user-level primarily based on their roles – i.e. role-based entry management (RBAC). Generic roles in Azure embrace proprietor, contributor and reader. ML specialised roles embrace AzureML Information Scientist and AzureML Compute Operator, which is accountable for creating and managing compute cases as they’re usually the biggest value factor in an ML venture. The aims of organising an Azure ML Workspace is to create a contained environments for storing knowledge, compute, mannequin and different sources, in order that solely customers throughout the Workspace are given related entry to learn or edit the information property, use present or create new compute cases primarily based on their duties.

Within the code snippet under, we hook up with the Azure ML workspace by MLClient by passing the workspace’s subscription ID, useful resource group and the default credential – Azure follows the hierarchical construction Subscription > Useful resource Group > Workspace.

Upon workspace creation, related providers like an Azure Storage Account (shops metadata and artifacts and may retailer coaching knowledge) and an Azure Key Vault (shops secrets and techniques like usernames, passwords, and credentials) are additionally instantiated routinely.

from azure.ai.ml import MLClient

from azure.id import DefaultAzureCredential

subscription_id = ''

resource_group = ''

workspace = ''

# Connect with the workspace

credential = DefaultAzureCredential()

ml_client = MLClient(credential, subscription, resource_group, workspace) When builders run the code throughout an interactive improvement session, the workspace connection is authenticated by the developer’s private credentials. They might have the ability to create a coaching job utilizing the command ml_client.jobs.create_or_update(job) as demonstrated under. To detach private account credentials within the manufacturing setting, it is suggested to make use of a service principal account to authenticate for automated pipelines or scheduled jobs. Extra info could be discovered on this article “Authenticate in your workspace utilizing a service principal”.

# Outline Azure ML coaching job

from azure.ai.ml import command

job = command(

code=...

command=...

setting=...

compute=...

)

ml_client.jobs.create_or_update(job)AWS SageMaker

Roles and permissions in SageMaker are designed primarily based on a very totally different precept, primarily utilizing “Roles” in AWS Id Entry Administration (IAM) service. Though IAM permits creating user-level (or account-level) entry just like Azure, AWS recommends granting permissions on the job-level all through the ML lifecycle. On this manner, your private AWS permissions are irrelevant at runtime and SageMaker assumes a job (i.e. SageMaker execution function) to entry related AWS providers, comparable to S3 bucket, SageMaker Coaching Pipeline, compute cases for executing the job.

For instance, here’s a fast peek of organising an Estimator with the SageMaker execution function for working the Coaching Job.

import sagemaker

from sagemaker.estimator import Estimator

# Get the SageMaker execution function

function = sagemaker.get_execution_role()

# Outline the estimator

estimator = Estimator(

image_uri=image_uri,

function=function, # assume the SageMaker execution function throughout runtime

instance_type="ml.m5.xlarge",

instance_count=1,

)

# Begin coaching

estimator.match("s3://my-training-bucket/prepare/")

It implies that we will arrange sufficient granularity to grant function permissions to run solely coaching jobs within the improvement setting however not touching the manufacturing setting. For instance, the function is given entry to an S3 bucket that holds take a look at knowledge and is blocked from the one which holds manufacturing knowledge, then the coaching job that assumes this function gained’t have the possibility to overwrite the manufacturing knowledge by chance.

Permission Administration in AWS is a complicated area by itself, and I gained’t fake I can totally clarify this subject. I like to recommend studying this text for extra greatest practices from AWS official documentation “Permissions administration“.

What does this imply in follow?

- Azure ML: Azure’s Function Based mostly Entry Management (RBAC) matches corporations or groups that handle which consumer must entry what sources. Extra intuitive to grasp and helpful for centralized consumer entry management.

- AWS SageMaker AI: AWS matches programs that care about which job must entry what providers. Decouple particular person consumer permissions with job execution for higher automation and MLOps practices. AWS matches for giant knowledge science staff with granular job and pipeline definitions and remoted environments.

Reference

Information Storage

You’ll have the query — can I retailer the information within the working listing? Not less than that’s been my query for a very long time, and I imagine the reply continues to be sure in case you are experimenting or prototyping utilizing a easy script or pocket book in an interactive improvement setting. However knowledge storage location is necessary to contemplate within the context of making ML jobs.

Since code runs in a cloud-managed setting or a docker container separate out of your native listing, any domestically saved knowledge can’t be accessed when executing pipelines and jobs in SageMaker or Azure ML. This requires centralized, managed knowledge storage providers. In Azure, that is dealt with by a storage account throughout the Workspace that helps datastores and knowledge property.

Datastores include connection info, whereas knowledge property are versioned snapshots of knowledge used for coaching or inference. AWS, however, depends closely on S3 buckets as centralized storage areas that allow safe, sturdy, cross-region entry throughout totally different accounts, and customers can entry knowledge by its distinctive URI path.

Azure ML

Azure ML treats knowledge as hooked up sources and property within the Workspaces, with one storage account and 4 built-in datastores routinely created upon the instantiation of every Workspace with a view to retailer information (in Azure File Share) and datasets (in Azure Blob Storage).

Since datastores securely maintain knowledge connection info and routinely deal with the credential/id behind the scene, it decouples knowledge location and entry permission from the code, in order that the code to stay unchanged even when the underlying knowledge connection adjustments. Datastores could be accessed by their distinctive URI. Right here’s an instance of making an Enter object with the kind uri_file by passing the datastore path.

# create coaching knowledge utilizing Datastore

training_data=Enter(

kind="uri_file",

path="",

) Then this knowledge can be utilized because the coaching knowledge for an AutoML classification job.

classification_job = automl.classification(

compute='aml-cluster',

training_data=training_data,

target_column_name='Survived',

primary_metric='accuracy',

)Information Asset is one other choice to entry knowledge in an ML job, particularly when it’s useful to maintain observe of a number of knowledge variations, so knowledge scientists can determine the right knowledge snapshots getting used for mannequin constructing or experimentations. Right here is an instance code for creating an Enter object with AssetTypes.URI_FILE kind by passing the information asset path “azureml:my_train_data:1” (which incorporates the information asset identify + model quantity) and utilizing the mode InputOutputModes.RO_MOUNT for learn solely entry. Yow will discover extra info within the documentation “Entry knowledge in a job”.

# creating coaching knowledge utilizing Information Asset

training_data = Enter(

kind=AssetTypes.URI_FILE,

path="azureml:my_train_data:1",

mode=InputOutputModes.RO_MOUNT

)AWS SageMaker

AWS SageMaker is tightly built-in with Amazon S3 (Easy Storage Service) for ML workflows, in order that SageMaker coaching jobs, inference endpoints, and pipelines can course of enter knowledge from S3 buckets and write output knowledge again to them. You might discover that making a SageMaker managed job setting (which shall be mentioned in Half 2) requires S3 bucket location as a key parameter, alternatively a default bucket shall be created if unspecified.

In contrast to Azure ML’s Workspace-centric datastore strategy, AWS S3 is a standalone knowledge storage service that gives scalable, sturdy, and safe cloud storage that may be shared throughout different AWS providers and accounts. This gives extra flexibility for permission administration on the particular person folder degree, however on the similar time requires explicitly granting the SageMaker execution function entry to the S3 bucket.

On this code snippet, we use estimator.match(train_data_uri)to suit the mannequin on the coaching knowledge by passing its S3 URI straight, then generates the output mannequin and shops it on the specified S3 bucket location. Extra situations could be discovered of their documentation: “Amazon S3 examples utilizing SDK for Python (Boto3)”.

import sagemaker

# Outline S3 paths

train_data_uri = ""

output_folder_uri = " What does it imply in follow?

- Azure ML: use Datastore to handle knowledge connections, which handles the credential/id info behind the scene. Due to this fact, this strategy decouples knowledge location and entry permission from the code, permitting the code stay unchanged when the underlying connection adjustments.

- AWS SageMaker: use S3 buckets as the first knowledge storage service for managing enter and output knowledge of SageMaker jobs by their URI paths. This strategy requires specific permission administration to grant the SageMaker execution function entry to the required S3 bucket.

Reference

Take-Residence Message

Examine Azure ML and AWS SageMaker for scalable mannequin coaching, specializing in venture setup, permission administration, and knowledge storage patterns, so groups can higher align platform decisions with their present cloud ecosystem and most popular MLOps workflows.

Partially 1, we examine the high-level venture setup and permission administration, storing and accessing the information required for mannequin coaching. Half 2 will talk about varied compute choices underneath each cloud platforms, and the creation and administration of runtime environments for coaching jobs.