of the universe (made by one of the most iconic singers ever) says this:

Wish I could go back

And change these years

I’m going through changes

Black Sabbath – Changes

This song is extremely powerful and talks about how quickly life can change right in front of you.

The song is about a broken heart and a love story. However, it also reminds me a lot of the changes my job as a Data Scientist has undergone over the last 10 years of my career:

- When I started studying Physics, the only thing I thought of when someone said “Transformer” was Optimus Prime. Machine Learning for me was all about Linear Regression, SVM, Random Forest, and so on… [2016]

- When I did my Master’s Degree in Big Data and Physics of Complex Systems, I first heard of “BERT” and various Deep Learning technologies that seemed very promising at the time. The first GPT models came out, and they looked very interesting, although nobody expected them to be as powerful as they are today. [2018-2020]

- Fast forward to my life now as a full-time Data Scientist. Today, if you don’t know what GPT stands for and have never read “Attention Is All You Need”, you have very few chances of passing a Data Science System Design interview. [2021 – today]

When people say that the tools and the everyday life of someone working with data are substantially different from 10 (or even 5) years ago, I agree all the way. What I don’t agree with is the idea that the tools used in the past should be erased just because everything now seems solvable with GPT, LLMs, or Agentic AI.

The goal of this article is to consider a single task: classifying the love/hate/neutral intent of a Tweet. In particular, we will do it with traditional Machine Learning, Deep Learning, and Large Language Models.

We’ll do this hands-on, using Python, and we will describe why and when to use each approach. Hopefully, after this article, you will have learned that:

- The tools used in the early days should still be considered, studied, and at times adopted.

- Latency, Accuracy, and Cost should be evaluated when choosing the best algorithm for your use case.

- Changes in the Data Scientist world are necessary and should be embraced without fear 🙂

Let’s get started!

1. The Use Case

The case we are dealing with is something widely adopted in Data Science/AI applications: sentiment analysis. Given a text, we want to extract the “feeling” of its author. This is very useful whenever you want to gather the feedback behind a review of an object, a movie, an item you are recommending, and so on…

In this blog post, we are using a very “famous” sentiment analysis example: classifying the feeling behind a tweet. Because I wanted more control, we will not work with organic tweets scraped from the web (where labels are uncertain). Instead, we will use content generated by Large Language Models, which we can control.

This approach also lets us tune the difficulty and variety of the problem and observe how different techniques react.

- Easy case: the love tweets sound like postcards, the hate ones are blunt, and the neutral messages talk about weather and coffee. If a model struggles here, something else is off.

- Harder case: still love, hate, and neutral, but now we inject sarcasm, mixed tones, and subtle hints that demand attention to context. We also have less data, so the training set is smaller.

- Extra Hard case: we move to five emotions (love, hate, anger, disgust, envy), so the model has to parse richer, more layered sentences. Moreover, we have zero labelled entries, so we cannot do any training.

I have generated the data and put each file in a specific folder of the public GitHub repository I created for this project [data].

Our goal is to build a smart classification system that can efficiently grasp the sentiment behind the tweets. But how do we do it? Let’s figure it out.

2. System Design

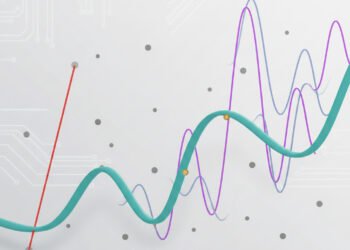

A picture that is always extremely helpful to keep in mind is the following:

Accuracy, cost, and scale in a Machine Learning system form a triangle. You can only fully optimize two of them at the same time.

You can have a very accurate model that scales very well to millions of entries, but it won’t be fast. You can have a fast model that scales to millions of entries, but it won’t be that accurate. You can have an accurate and fast model, but it won’t scale very well.

These considerations are abstracted from the specific problem, but they help guide which ML system to design. We’ll come back to this.

Also, the complexity of our model should be proportional to the size of our training set. In general, we try to avoid a decrease in the training set error that comes at the cost of an increase in the test set error (the famous overfitting).

We don’t want to be in the Underfitting or Overfitting area. Let me explain why.

In simple terms, underfitting happens when your model is too simple to learn the real pattern in your data. It’s like trying to draw a straight line through a spiral. Overfitting is the opposite: the model learns the training data too well, including all the noise, so it performs great on what it has already seen but poorly on new data. The sweet spot is the middle ground, where your model understands the structure without memorizing it.
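The following illustration is not part of the original tweet experiment. It makes the two failure modes concrete by fitting polynomials of increasing degree to noisy samples of a sine curve. The data is synthetic and the degrees are arbitrary. For each degree, we compare the training and test errors:

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)


def make_data(n):
    """Noisy samples of sin(3x) on [-1, 1]."""
    x = rng.uniform(-1, 1, n)
    return x, np.sin(3 * x) + rng.normal(scale=0.2, size=n)


def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return float(np.mean((y_true - y_pred) ** 2))


x_train, y_train = make_data(20)   # small training set
x_test, y_test = make_data(200)    # larger held-out set

results = {}
for degree in (1, 4, 15):
    # Polynomial.fit maps x onto [-1, 1] internally, which keeps high degrees numerically stable.
    model = Polynomial.fit(x_train, y_train, degree)
    results[degree] = (mse(y_train, model(x_train)), mse(y_test, model(x_test)))
    print(f"degree={degree:2d}  train MSE={results[degree][0]:.3f}  test MSE={results[degree][1]:.3f}")
```

The straight line (degree 1) has a high error on both sets, which is underfitting. Degree 15 nearly interpolates the 20 training points, so its training error is tiny while its test error is typically much larger, which is overfitting. A moderate degree sits in the sweet spot.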

We’ll come back to this one as well.

3. Easy Case: Traditional Machine Learning

We open with the friendliest scenario: a highly structured dataset of 1,000 tweets that we generated and labelled. The three classes (positive, neutral, negative) are balanced on purpose, the language is very explicit, and every row lives in a clean CSV.

Let’s start with a simple import block.
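The original import block isn’t reproduced here. Below is a plausible minimal version, assuming the pipeline relies on pandas and scikit-learn. Using `LinearSVC` as the SVM implementation is our assumption.

```python
# Assumed minimal imports for the TF-IDF baselines (pandas + scikit-learn).
import pandas as pd
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC
```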

Let’s see what the dataset looks like:
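The dataset preview itself isn’t shown here. A small helper like the one below would print the first rows and confirm that the classes are balanced. It assumes hypothetical `text` and `label` column names and a placeholder file path.

```python
import pandas as pd


def inspect_dataset(path, text_col="text", label_col="label"):
    """Load a tweets CSV, print a preview, and return the frame and class counts.

    The column names are assumptions; rename them to match the actual file.
    """
    df = pd.read_csv(path)
    print(df.head())
    # Missing tweets would make the TF-IDF vectorizer raise later, so count them up front.
    print(f"missing tweets: {df[text_col].isna().sum()}")
    counts = df[label_col].value_counts()
    print(counts)
    return df, counts


# Hypothetical usage (placeholder file name, not the repository's actual path):
# df, counts = inspect_dataset("easy_tweets.csv")
```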

Now, we expect that this won’t scale to millions of rows, because the dataset is too structured to be diverse. However, we can build a very fast and accurate method for this tiny, specific use case. Let’s start with the modeling. There are three main points to consider:

- We are doing a train/test split with 20% of the dataset in the test set.

- We are going to use a TF-IDF approach to turn the tweets into vectors. TF-IDF stands for Term Frequency–Inverse Document Frequency. It is a classic technique that transforms text into numbers by giving each word a weight based on how important it is in a document compared to the whole dataset.

- We will combine this approach with two ML models from scikit-learn: Logistic Regression and Support Vector Machines. Logistic Regression is simple and interpretable, and it is often used as a strong baseline for text classification. Support Vector Machines focus on finding the best boundary between classes and usually perform very well when the data isn’t too noisy.
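The three points above map naturally onto scikit-learn pipelines. Here is a minimal sketch, assuming the tweets and labels are already in two Python lists. `train_tfidf_baselines` is a hypothetical helper name. `LinearSVC` stands in for the SVM because the article doesn’t specify a kernel.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC


def train_tfidf_baselines(texts, labels, test_size=0.2, seed=42):
    """Fit TF-IDF + Logistic Regression and TF-IDF + linear SVM; return test accuracies."""
    # Stratified 80/20 split keeps the class balance the same in both sets.
    X_train, X_test, y_train, y_test = train_test_split(
        texts, labels, test_size=test_size, stratify=labels, random_state=seed
    )
    models = {
        "logreg": make_pipeline(TfidfVectorizer(), LogisticRegression(max_iter=1000)),
        "svm": make_pipeline(TfidfVectorizer(), LinearSVC()),
    }
    scores = {}
    for name, model in models.items():
        # The vectorizer is fitted inside the pipeline on the training split only,
        # so no vocabulary or IDF statistics leak from the test set.
        model.fit(X_train, y_train)
        scores[name] = accuracy_score(y_test, model.predict(X_test))
    return scores
```

Wrapping the vectorizer and the classifier in one pipeline means the TF-IDF weights are learned from the training split only, which keeps the test accuracy honest.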

And the performance is really good for both models.

For this very simple case, with a consistent dataset of 1,000 rows, a traditional approach gets the job done. There is no need for billion-parameter models like GPT.

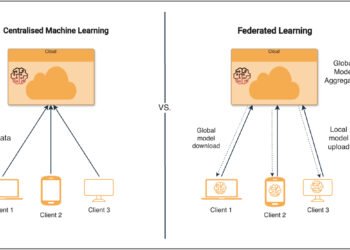

4. Hard Case: Deep Learning

The second dataset is still synthetic, but it is designed to be annoying on purpose. The labels remain love, hate, and neutral, yet the tweets lean on sarcasm, mixed tone, and backhanded compliments. On top of that, the training pool is smaller while the validation slice stays large, so the models work with less evidence and more ambiguity.

Now that we have this ambiguity, we need to bring out the bigger guns. Deep Learning embedding models keep strong accuracy and still scale well in these cases (remember the triangle and the error versus complexity plot!). In particular, Deep Learning embedding models learn the meaning of words from their context instead of treating them as isolated tokens.

For this blog post, we will use BERT, one of the most famous embedding models out there. Let’s first import some libraries:

… and some helpers:
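The imports and helper functions aren’t shown in the text. One common way to turn BERT into a sentence embedder is to average the token vectors of the last hidden layer while skipping padding. The sketch below assumes the Hugging Face `transformers` library, the public `bert-base-uncased` checkpoint, and mean pooling. None of these choices are confirmed by the original code, and `mean_pool` and `embed_texts` are hypothetical helper names.

```python
import numpy as np


def mean_pool(token_embeddings, attention_mask):
    """Average token vectors per sentence, ignoring padding positions (mask == 0)."""
    mask = attention_mask[..., None].astype(token_embeddings.dtype)  # (batch, tokens, 1)
    summed = (token_embeddings * mask).sum(axis=1)
    counts = np.clip(mask.sum(axis=1), 1e-9, None)  # avoid division by zero
    return summed / counts


def embed_texts(texts, model_name="bert-base-uncased", batch_size=32):
    """Return one fixed-size vector per text from a pretrained BERT encoder.

    Assumes `torch` and `transformers` are installed; they are imported lazily
    so that `mean_pool` can be used without them.
    """
    import torch
    from transformers import AutoModel, AutoTokenizer

    texts = list(texts)
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()
    vectors = []
    with torch.no_grad():  # inference only, no gradients needed
        for start in range(0, len(texts), batch_size):
            batch = tokenizer(
                texts[start:start + batch_size],
                padding=True, truncation=True, max_length=128, return_tensors="pt",
            )
            output = model(**batch)
            vectors.append(
                mean_pool(output.last_hidden_state.numpy(), batch["attention_mask"].numpy())
            )
    return np.vstack(vectors)
```

Mean pooling is only one option. Using the `[CLS]` token vector is another common choice, and which one works better depends on the data.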

Thanks to these functions, we can quickly compare our embedding model against the TF-IDF approach.
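For a fair comparison of the two representations, the sketch below trains the same Logistic Regression on TF-IDF features and on embeddings, then reports the F1 score for each class. Per-class scores are what expose a weakness on a single label. `compare_features` is a hypothetical name. `embed_fn` is any function that returns one vector per text, such as a BERT embedding helper.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score


def compare_features(train_texts, y_train, test_texts, y_test, embed_fn):
    """Fit one classifier per representation and return per-class F1 scores."""
    labels = sorted(set(y_train) | set(y_test))

    def per_class_f1(X_train, X_test):
        clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)
        scores = f1_score(y_test, clf.predict(X_test), labels=labels, average=None, zero_division=0)
        return {label: float(s) for label, s in zip(labels, scores)}

    # Sparse bag-of-words baseline: the vocabulary comes from the training split only.
    tfidf = TfidfVectorizer()
    results = {
        "tfidf": per_class_f1(tfidf.fit_transform(train_texts), tfidf.transform(test_texts))
    }
    # Dense embeddings fed to the very same classifier.
    results["embedding"] = per_class_f1(
        np.asarray(embed_fn(list(train_texts))), np.asarray(embed_fn(list(test_texts)))
    )
    return results
```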

As we can see, the TF-IDF model clearly underperforms on the positive labels, while the embedding model (BERT) keeps a high accuracy.

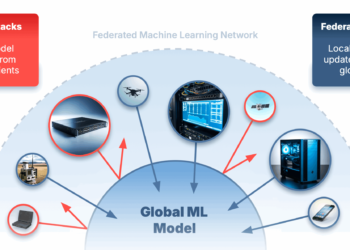

5. Extra Hard Case: LLM Agent

Okay, now let’s make things VERY hard:

- We only have 100 rows.

- We assume we don’t know the labels, which means we cannot train any machine learning model.

- We have 5 labels: envy, hate, love, disgust, anger.

We cannot train anything, but we still want to perform our classification, so we must adopt a method that already “knows” these categories. Large Language Models, which learned them during pretraining, are the perfect example of such a method.

Note that using LLMs for the other two cases would be like shooting a fly with a cannon. Here, however, it makes perfect sense: the task is hard, and because we have no training set, we cannot train a model of our own.

In this case, we get accuracy at a large scale. However, the API takes some time, so we have to wait a second or two before the response comes back (remember the triangle!).

Let’s import some libraries:

And this is the classification API call:
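The original API call isn’t reproduced here. As a hedged sketch, here is zero-shot classification through an OpenAI-style chat completions client. The model name `gpt-4o-mini`, the prompt wording, and the helper names are our assumptions, not the author’s setup. The client is passed in as an argument, so any object exposing `chat.completions.create` will work:

```python
LABELS = ["envy", "hate", "love", "disgust", "anger"]


def build_messages(tweet, labels=LABELS):
    """Zero-shot prompt: no examples, only the list of allowed emotions."""
    system = (
        "You are an emotion classifier for tweets. Reply with exactly one word "
        "from this list: " + ", ".join(labels) + "."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"Tweet: {tweet}\nEmotion:"},
    ]


def parse_label(reply, labels=LABELS):
    """Map the model's free-text reply onto an allowed label, or None."""
    cleaned = reply.strip().lower().strip(".!\"' ")
    if cleaned in labels:
        return cleaned
    # Fall back to the first label (in list order) that appears anywhere in the reply.
    for label in labels:
        if label in cleaned:
            return label
    return None


def classify_tweet(client, tweet, model="gpt-4o-mini", labels=LABELS):
    """Send one tweet to the chat completions endpoint and parse the answer."""
    response = client.chat.completions.create(
        model=model,  # assumed model name
        messages=build_messages(tweet, labels),
        temperature=0,
    )
    return parse_label(response.choices[0].message.content, labels)
```

With the official `openai` package, `client = OpenAI()` reads the key from the `OPENAI_API_KEY` environment variable. `classify_tweet(client, tweet)` then returns one of the five labels, or `None` when the reply can’t be mapped. Setting `temperature=0` makes the answers more repeatable, though not strictly deterministic.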

And we can see that the LLM does an amazing classification job:

6. Conclusions

Over the past decade, the role of the Data Scientist has changed as dramatically as the technology itself. This might suggest simply using the most powerful tools out there, but in many cases that is NOT the best route.

Instead of reaching for the biggest model first, we examined one problem through a simple lens: accuracy, latency, and cost.

In particular, here is what we did, step by step:

- We defined our use case as tweet sentiment classification, aiming to detect love, hate, or neutral intent. We designed three datasets of increasing difficulty: a clean one, a sarcastic one, and a zero-training one.

- We tackled the easy case using TF-IDF with Logistic Regression and SVM. The tweets were clean and direct, and both models performed almost perfectly.

- We moved to the hard case, where sarcasm, mixed tone, and subtle context made the task more complex. We used BERT embeddings to capture meaning beyond individual words.

- Finally, for the extra hard case with no training data, we used a Large Language Model to classify emotions directly through zero-shot learning.

Each step showed how the right tool depends on the problem. Traditional ML is fast and reliable when the data is structured. Deep Learning models help when meaning hides between the lines. LLMs are powerful when you have no labels or need broad generalization.

7. Before you head out!

Thank you again for your time. It means a lot ❤️

My name is Piero Paialunga, and I’m this guy here:

I’m originally from Italy, hold a Ph.D. from the University of Cincinnati, and work as a Data Scientist at The Trade Desk in New York City. I write about AI, Machine Learning, and the evolving role of data scientists both here on TDS and on LinkedIn. If you liked the article and want to know more about machine learning and follow my studies, you can:

A. Follow me on LinkedIn, where I publish all my stories

B. Follow me on GitHub, where you can see all my code

C. For questions, you can send me an email at piero.paialunga@hotmail