or fine-tuned an LLM, you’ve probably hit a wall on the final step: the Cross-Entropy Loss.

The culprit is the logit bottleneck. To predict the next token, we project a hidden state into a massive vocabulary space. For Llama 3 (128,256 tokens), the weight matrix alone is over 525 million parameters. While that’s only ~1GB in bfloat16, the intermediate logit tensor is the real issue. For large batches, it can easily exceed 80GB of VRAM just to compute a single scalar loss.

Optimising this layer is how libraries like Unsloth and Liger-Kernel achieve such massive memory reductions. In this article, we’ll build a fused Linear + Cross Entropy kernel from scratch in Triton. We’ll derive the math and implement a tiled forward and backward pass that slashes peak memory usage by 84%.

Note on Performance: This implementation is primarily educational. We prioritise mathematical clarity and readable Triton code by using global atomic operations. While it solves the memory bottleneck, matching production-grade speeds would require significantly more complex implementations which are out of scope for this article.

This post is part of my Triton series. We’ll be using concepts like tiling and online softmax that we’ve covered previously. If these sound unfamiliar, I recommend catching up there first!

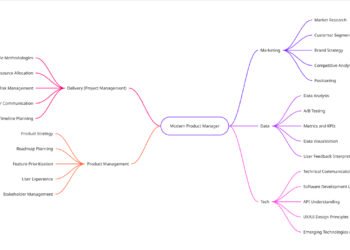

The Logit Bottleneck

To get us started, let’s put some more numbers on the logit bottleneck. We consider an input matrix X with shape [NxD], a weight matrix W with shape [DxV] and a logit matrix Y=X@W with shape [NxV]. In the context of an LLM, N would be the sequence length multiplied by the batch size (i.e. the total number of tokens in the batch), D the size of the hidden state and V the vocabulary size.

For a Llama3 8B model, we would have a context window of 8192 tokens, a hidden state with 4096 dimensions and a vocabulary size of 128,256 tokens. Using a modest batch size of 8, we get N = 8192x8 = 65,536.

This results in the Y matrix having shape [NxV]=[65,536x128,256], or roughly 8.4 billion elements. In bfloat16, this would take up 16.8GB of memory. However, if we follow best practices and use float32 for the loss calculation to ensure numerical stability, the requirements double to 33.6GB.
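The arithmetic above is easy to reproduce. The snippet below is a small sketch using only the figures quoted in the text; it assumes 1GB = 10^9 bytes, and the helper name `logits_gb` is made up for illustration.

```python
# Back-of-the-envelope size of the logit matrix Y = X @ W.
# All values are the ones quoted in the text; GB means 10**9 bytes here.
context_len = 8192
batch_size = 8
vocab_size = 128_256

n_tokens = context_len * batch_size   # N
n_logits = n_tokens * vocab_size      # number of elements in Y, shape [N, V]

BYTES_PER_ELEMENT = {"bfloat16": 2, "float32": 4}


def logits_gb(dtype: str) -> float:
    """Memory needed to materialise Y in the given dtype, in GB."""
    return n_logits * BYTES_PER_ELEMENT[dtype] / 1e9


for dtype in BYTES_PER_ELEMENT:
    print(f"{dtype}: {logits_gb(dtype):.1f} GB")
```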

To put this number in perspective, we would also need around 16GB of memory to hold the weights of Llama3 8B in bfloat16. On most GPUs, this leaves no space for the large overhead of the optimiser states (e.g. Adam’s moments) and other activations, resulting in the infamous PyTorch OOM error.

Typically, this problem is dealt with by using:

- Gradient accumulation: Use a smaller batch size and accumulate gradients over multiple batches between each optimiser step, emulating a larger batch size while holding less data in memory.

- Activation checkpointing: PyTorch stores all intermediate activations for reuse in the backward pass; checkpointing clears these activations and recomputes them on-the-fly during the backward pass. This leads to large memory savings but increases training time since the number of required forward passes is doubled.

- Micro-batching the loss: Instead of computing the loss over the N dimension at once, we can slice it and accumulate the loss over smaller chunks of size n < N. Now, we only hold a slice of size [n, V] in memory at a time.

- Mixed precision training: Using half precision during training gives a 2x memory reduction and significant speedups on Tensor Cores.

While these solutions seem attractive, they all have significant drawbacks: gradient accumulation and activation checkpointing slow down training, mixed precision can be unstable, and micro-batching requires (slow) PyTorch-level iteration. Moreover, even though n is chosen to be smaller than N, the vocabulary size remains huge in comparison.

More importantly, these solutions don’t address the problem we have dealt with repeatedly throughout this series: data movement. Indeed, we’re still wasting time by writing billions of logits to VRAM only to read them back milliseconds later.

The Kernel Solution

As we’ll see in a minute, the forward and backward pass of the cross-entropy loss involve dot products, matrix multiplication and a softmax. As we learned in this series, these are all operations that can be tiled efficiently. In other words, we can perform them iteratively while only holding a small piece of the inputs in memory at any time.

Furthermore, cross-entropy is generally preceded by a matrix multiplication: the linear projection from the hidden state into the vocabulary space. This is a great opportunity for operator fusion: fusing multiple operations inside a single kernel, resulting in large speedups and potential memory gains.

In the following sections, we’ll take a look at how to derive and efficiently fuse the forward and backward passes through a kernel combining a linear layer with cross-entropy.

As mentioned in the last article, Triton kernels don’t natively register in PyTorch’s autograd. Therefore, we need to derive the gradient ourselves, a wonderful occasion to brush up on some calculus 😉

The math behind Fused Linear Cross-Entropy

Definition and Forward Pass

In this section, we derive the mathematical expression for our Fused Linear Cross-Entropy layer to see how it naturally lends itself to tiling.

For two discrete probability distributions p and q, cross-entropy is defined as:
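In standard notation, with $i$ running over the vocabulary (the symbols $H$ and $i$ are choices made here), the definition reads:

$$
H(p, q) = -\sum_{i} p_i \log q_i
$$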

In our context, p is the one-hot vector representing the target token, while q is the model’s distribution over the vocabulary. We obtain q by applying a softmax to the logits l, themselves the outputs of the preceding linear layer.

Since p is non-zero only for a single target token y, the summation collapses. We can then substitute the numerically stable softmax (as discussed in the last article) to derive the final expression:
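Written out as a reconstruction from the definitions above (here $m = \max_j l_j$ denotes the maximum logit and $V$ the vocabulary size), the collapsed sum and its stable form are:

$$
H(p, q) = -\log q_y = -\log \frac{e^{l_y}}{\sum_{j=1}^{V} e^{l_j}} = -l_y + m + \log \sum_{j=1}^{V} e^{l_j - m}
$$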

By substituting the logits l with the linear layer x . w, we see that the forward pass boils down to three main quantities:

- The target logit x . w_y.

- The log-sum-exp (LSE) of all dot products.

- The global maximum logit used for numerical stability.

Thanks to the online softmax algorithm, we can compute these quantities without ever materialising the full vocabulary in memory. Instead of an O(V) memory bottleneck, we iterate over the hidden dimension D and the vocabulary V in small tiles (D_block and V_block). This transforms the calculation into an O(1) register problem.

To parallelise this effectively, we launch one GPU program per row of the input matrix. Each program independently executes the following steps:

- Pre-compute the target logit: Perform a tiled dot product between the current row of X and the column of W associated with token Y.

- Online reduction: Iterate through the hidden and vocabulary blocks to:

1. Track the running maximum (m)

2. Update the running sum of exponentials (d) using the online softmax formula:
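One common way of writing this update, for a new tile $T$ of logits $l_j = x \cdot w_j$ (the subscripts old/new and the tile symbol $T$ are notation assumed here), is:

$$
m_{\text{new}} = \max\left(m_{\text{old}}, \max_{j \in T} l_j\right), \qquad
d_{\text{new}} = d_{\text{old}} \, e^{m_{\text{old}} - m_{\text{new}}} + \sum_{j \in T} e^{l_j - m_{\text{new}}}
$$

After the last tile, the log-sum-exp is $m + \log d$, and the loss for the row is $m + \log d - x \cdot w_y$.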

Now that we have a better understanding of the forward pass, let’s take a look at the derivation of the backward pass.

Backward Pass

Notation

To derive our gradients efficiently, we’ll use Einstein notation and the Kronecker delta.

In Einstein notation, repeated indices are implicitly summed over. For example, a standard matrix multiplication Y = X@W simplifies from a verbose summation to a clean index pairing:
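For $Y = XW$, the two forms can be written as follows (the index names $i, j, k$ are chosen here to match the derivation further down):

$$
Y_{ij} = \sum_{k} X_{ik} W_{kj} \quad \longrightarrow \quad Y_{ij} = X_{ik} W_{kj}
$$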

The Kronecker delta (δ_ij) is used alongside this notation to handle identity logic. It is equal to 1 if i=j and 0 otherwise. As we’ll see, this is particularly useful for collapsing indices during differentiation.

Matrix Multiplication

In this section, we derive the back-propagated gradients for matrix multiplication. We assume the existence of an upstream gradient coming from a scalar loss ℓ.

To determine how it back-propagates through matrix multiplication, we apply the chain rule to the inputs x and the weight matrix w. Here y represents the multiplication’s outputs:
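In index notation, with $m, n$ indexing the entry we differentiate with respect to and repeated indices summed, the chain rule can be sketched as (a reconstruction consistent with the steps that follow):

$$
\frac{\partial \ell}{\partial x_{mn}} = \frac{\partial \ell}{\partial y_{ij}} \frac{\partial y_{ij}}{\partial x_{mn}}, \qquad
\frac{\partial \ell}{\partial w_{mn}} = \frac{\partial \ell}{\partial y_{ij}} \frac{\partial y_{ij}}{\partial w_{mn}}
$$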

We start by deriving the partial derivatives of y with respect to x, following these steps:

- Express y in terms of x and w.

- Notice that w is a constant with respect to the derivative of x, so we can pull it out of the derivative.

- Express the fact that the partial derivative of x_ik with respect to x_mn is 1 only when i=m and k=n using the Kronecker delta.

- Notice that ẟ_kn enforces k=n, therefore w_kj * ẟ_kn reduces to w_nj.
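Following the four steps listed above one equality at a time, the derivation can be written as (a reconstruction, not the original figure):

$$
\frac{\partial y_{ij}}{\partial x_{mn}}
= \frac{\partial (x_{ik} w_{kj})}{\partial x_{mn}}
= w_{kj} \frac{\partial x_{ik}}{\partial x_{mn}}
= w_{kj} \, \delta_{im} \delta_{kn}
= w_{nj} \, \delta_{im}
$$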

Then, we consider the full expression and obtain the gradient. We derive the last step by noticing once again that ∂ℓ/∂y_ij * ẟ_im reduces to ∂ℓ/∂y_mj.
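Plugging the partial derivative $\partial y_{ij} / \partial x_{mn} = w_{nj}\,\delta_{im}$ into the chain rule gives, as a sketch in the same index notation:

$$
\frac{\partial \ell}{\partial x_{mn}}
= \frac{\partial \ell}{\partial y_{ij}} \, w_{nj} \, \delta_{im}
= \frac{\partial \ell}{\partial y_{mj}} \, w_{nj}
$$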

However, matrix notation is conceptually closer to our Triton kernel. We therefore rewrite this expression as a matrix multiplication by using the identity X_ij = [X^T]_ji:
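Applying that identity to $w_{nj}$ yields the familiar matrix form (a reconstruction; $\partial \ell / \partial Y$ denotes the matrix of upstream gradients):

$$
\frac{\partial \ell}{\partial x_{mn}} = \frac{\partial \ell}{\partial y_{mj}} \, [W^T]_{jn}
\quad \Longleftrightarrow \quad
\frac{\partial \ell}{\partial X} = \frac{\partial \ell}{\partial Y} \, W^T
$$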

We follow the exact same steps to derive the gradient with respect to W:
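Mirroring the steps used for $x$, with $x$ now treated as the constant, the partial derivative can be sketched as:

$$
\frac{\partial y_{ij}}{\partial w_{mn}}
= x_{ik} \frac{\partial w_{kj}}{\partial w_{mn}}
= x_{ik} \, \delta_{km} \delta_{jn}
= x_{im} \, \delta_{jn}
$$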

Then, the back-propagated gradient follows:
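In index notation, using $\partial y_{ij} / \partial w_{mn} = x_{im}\,\delta_{jn}$, this reads (again a reconstruction):

$$
\frac{\partial \ell}{\partial w_{mn}}
= \frac{\partial \ell}{\partial y_{ij}} \, x_{im} \, \delta_{jn}
= x_{im} \, \frac{\partial \ell}{\partial y_{in}}
$$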

Which is equivalent to the matrix notation:
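Using $x_{im} = [X^T]_{mi}$, the matrix form of the weight gradient can be written as:

$$
\frac{\partial \ell}{\partial W} = X^T \, \frac{\partial \ell}{\partial Y}
$$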

Cross-Entropy

In this section, we’ll focus on cross-entropy applied to discrete probability distributions. Considering a tensor of j logits, with a label y, the cross-entropy is computed as follows:
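With $x$ denoting the vector of logits (the name $\mathrm{CE}$ is notation assumed here), the standard expression is:

$$
\mathrm{CE}(x, y) = -\log \frac{e^{x_y}}{\sum_{j} e^{x_j}} = -x_y + \log \sum_{j} e^{x_j}
$$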

Where x_y corresponds to the logit associated with the label.

Once again, we are interested in the partial derivative of any output i with respect to any input k. Because of the normalising factor, every element i affects the value of every other element. The partial derivative is therefore obtained by defining the function piecewise depending on the value of i:
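Writing $i$ for the index of the logit we differentiate with respect to (an assumption about the original indexing), the two cases can be sketched as:

$$
\frac{\partial\, \mathrm{CE}}{\partial x_i} =
\begin{cases}
\mathrm{softmax}(x)_i - 1 & \text{if } i = y \\
\mathrm{softmax}(x)_i & \text{otherwise}
\end{cases}
$$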

Summing both cases, we obtain the gradient:
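Using the Kronecker delta to cover both the target and non-target cases in one line (a reconstruction in this section's notation):

$$
\frac{\partial\, \mathrm{CE}}{\partial x_i} = \mathrm{softmax}(x)_i - \delta_{iy}
$$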

And in matrix notation:
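Stacking the components into a vector gives the following form, where $y_{\text{one hot}}$ is the one-hot encoding of the label:

$$
\nabla_x \mathrm{CE} = \mathrm{softmax}(x) - y_{\text{one hot}}
$$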

Where y_{one hot} is a vector of zeros with the entry corresponding to the label set to 1. This result tells us that the gradient is simply the difference between the prediction and the ground truth.

Fused Linear Cross-Entropy

Combining the linear projection with cross-entropy in a single expression, we get:
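For a single row $x$, and using $w_j$ for the $j$-th column of $W$ as in the forward pass (the subscript $y$ is the target token index), the combined expression can be sketched as:

$$
\ell = \mathrm{CE}(xW, y) = -x \cdot w_y + \log \sum_{j} e^{x \cdot w_j}
$$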

Thanks to the chain rule, deriving the gradient of this expression boils down to multiplying the gradients we computed previously:
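Combining the matrix-multiplication and cross-entropy results from the previous sections gives, as a reconstruction:

$$
\frac{\partial \ell}{\partial x} = \frac{\partial \ell}{\partial y} \, W^T = \left(\mathrm{softmax}(y) - y_{\text{one hot}}\right) W^T, \qquad
\frac{\partial \ell}{\partial W} = x^T \, \frac{\partial \ell}{\partial y} = x^T \left(\mathrm{softmax}(y) - y_{\text{one hot}}\right)
$$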

Where x and y refer to the inputs and outputs of the linear layer respectively and w to the associated weight matrix.

Note: in a batched setting, we’ll need to reduce the W gradients over the batch dimension. Typically, we use a sum or mean reduction.
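A quick way to gain confidence in the closed-form gradients of the fused layer (softmax minus one-hot, multiplied by W^T for the input and by x^T for the weights) is to compare them with central finite differences. The sketch below does this in plain Python for a single row; the function names, toy values and step size `eps` are illustrative assumptions, not part of the kernel.

```python
import math


def loss(x, W, label):
    """Cross-entropy of the logits x @ W for one row (W has shape [D, V])."""
    D, V = len(W), len(W[0])
    logits = [sum(x[d] * W[d][v] for d in range(D)) for v in range(V)]
    m = max(logits)
    return m + math.log(sum(math.exp(l - m) for l in logits)) - logits[label]


def analytic_grads(x, W, label):
    """Closed-form gradients: g = softmax - one_hot, dx = g @ W^T, dW = x^T @ g."""
    D, V = len(W), len(W[0])
    logits = [sum(x[d] * W[d][v] for d in range(D)) for v in range(V)]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    g = [e / total for e in exps]
    g[label] -= 1.0
    dx = [sum(g[v] * W[d][v] for v in range(V)) for d in range(D)]
    dW = [[x[d] * g[v] for v in range(V)] for d in range(D)]
    return dx, dW


x = [0.2, -0.5, 1.0]
W = [[0.1, -0.3, 0.5, 0.0],
     [0.7, 0.2, -0.4, 0.3],
     [-0.6, 0.4, 0.1, -0.2]]
label = 2
eps = 1e-6  # finite-difference step


def fd_x(d):
    xp, xm = x[:], x[:]
    xp[d] += eps
    xm[d] -= eps
    return (loss(xp, W, label) - loss(xm, W, label)) / (2 * eps)


def fd_w(d, v):
    Wp, Wm = [r[:] for r in W], [r[:] for r in W]
    Wp[d][v] += eps
    Wm[d][v] -= eps
    return (loss(x, Wp, label) - loss(x, Wm, label)) / (2 * eps)


dx, dW = analytic_grads(x, W, label)
err_dx = max(abs(dx[d] - fd_x(d)) for d in range(len(x)))
err_dW = max(abs(dW[d][v] - fd_w(d, v))
             for d in range(len(x)) for v in range(len(W[0])))
print(err_dx, err_dW)  # both should be tiny compared to the gradient values
```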

Kernel Implementation

With the theory established, we can implement the fused kernel in Triton. Since cross-entropy is typically the final layer in a language model, we can combine the forward and backward passes into a single kernel. This fusion offers two advantages: it minimises the overhead of multiple kernel launches and significantly improves data locality by keeping intermediate values on-chip.

We’ll analyse the kernel step-by-step from the perspective of a single program instance, which, in our parallelisation strategy, handles one specific row of the input matrix.

1. Setup and Target Logit Pre-computation

The initial phase involves standard Triton setup:

- Program Identification: We use tl.program_id to determine which row of the input matrix the current program is responsible for.

- Parameter Initialisation: We define tiles using D_BLOCK and V_BLOCK and initialise the running maximum (m) and sum (d) required for the online softmax algorithm.

- Pointer Arithmetic: We calculate the base memory addresses for our tensors. Pointers for X (input) and dX (gradient) are offset using the row stride so each program accesses its unique token vector. Conversely, the W (weight) pointer remains at the base address because every program must eventually iterate through the entire vocabulary space.

- Masking and Early Exit: We define an ignore_index (defaulting to -100). If a program encounters this label (e.g. for padding tokens), it terminates early with a loss of 0 to save cycles.

2. Computing the Target Logit

Before the main loop, we must isolate the target logit x . w_y. We iterate over the hidden dimension D in D_BLOCK chunks, performing a dot product between the input row X and the specific column of W corresponding to the ground-truth label Y.

Because W is a 2D matrix, calculating the pointers for these specific column tiles requires precise stride manipulation. The illustration below helps visualise how we “jump” through memory to extract only the necessary weights for the target token.

Once the tiles are loaded, we cast them to float32 to ensure numerical stability and add their dot product to an accumulator variable before moving to the next iteration.

Here’s the code so far:
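As a plain-Python stand-in for the Triton listing (a sketch of the idea, not the kernel itself), the tiled target-logit loop can be emulated as follows. W is stored as a flat row-major buffer so the stride arithmetic is visible; `target_logit_tiled` and the toy values are hypothetical.

```python
# Plain-Python emulation of the tiled target-logit computation for one row.
# W is a flat, row-major [D, V] buffer so that the stride arithmetic is explicit.

def target_logit_tiled(x_row, w_flat, V, label, d_block):
    D = len(x_row)
    stride_d, stride_v = V, 1   # row-major strides of W[D, V]
    acc = 0.0                   # accumulator (float64 here; the kernel uses float32)
    for d_start in range(0, D, d_block):
        d_end = min(d_start + d_block, D)   # plays the role of the boundary mask
        for d in range(d_start, d_end):
            # Jump stride_d elements per step to walk down column `label`.
            acc += x_row[d] * w_flat[d * stride_d + label * stride_v]
    return acc


D, V = 6, 4
x_row = [0.5, -1.0, 2.0, 0.0, 1.5, -0.5]
w_flat = [0.1 * (d - v) for d in range(D) for v in range(V)]  # W[d][v] = 0.1 * (d - v)
label = 2

tiled = target_logit_tiled(x_row, w_flat, V, label, d_block=4)
reference = sum(x_row[d] * (0.1 * (d - label)) for d in range(D))
print(tiled, reference)
```

Only D elements of W are ever touched here, one column's worth, regardless of the vocabulary size.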

Next, we execute the forward pass, which processes the vocabulary space in two nested stages:

- Tiled Logit Computation: We compute the logits for a V_BLOCK at a time. This is achieved by iterating over the vocabulary dimension V (outer loop) and the hidden dimension D (inner loop). Within the inner loop, we load a tile of X and a block of W, accumulating their partial dot products into a high-precision register.

- Online Softmax Update: Once the full dot product for a logit tile is finalised, we don’t store it to VRAM. Instead, we immediately update our running statistics: the maximum value m and the running sum of exponentials d using the online softmax formula. By doing this “on the fly”, we ensure that we only ever hold a small V_BLOCK of logits in the GPU’s registers at any given moment.

Following these iterations, the final values of m and d are used to reconstruct the LSE. The final scalar loss for the row is then computed by subtracting the target logit (x . w_y) from this LSE value.

Here’s a visual representation of the forward pass:

Here’s the code for the forward pass:
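As a plain-Python stand-in for the Triton listing (a sketch under simplifying assumptions, not the kernel itself), the two nested stages can be emulated for one row as below. The function names and toy data are hypothetical; the point is that only one V_BLOCK of logits exists at any time, and the result matches a naive computation.

```python
import math


def fused_forward_row(x_row, W, label, d_block, v_block):
    """Loss for one row without holding all V logits at once (W has shape [D, V])."""
    D, V = len(W), len(W[0])
    target = sum(x_row[d] * W[d][label] for d in range(D))

    m = float("-inf")   # running maximum
    d_sum = 0.0         # running sum of exponentials, relative to m
    for v0 in range(0, V, v_block):                  # outer loop: vocabulary tiles
        cols = range(v0, min(v0 + v_block, V))
        logits = [0.0] * len(cols)                   # a single V_BLOCK of logits
        for d0 in range(0, D, d_block):              # inner loop: hidden-dim tiles
            for d in range(d0, min(d0 + d_block, D)):
                for i, v in enumerate(cols):
                    logits[i] += x_row[d] * W[d][v]
        # Online softmax update: rescale the old sum, then add the new tile.
        m_new = max(m, max(logits))
        d_sum = d_sum * math.exp(m - m_new) + sum(math.exp(l - m_new) for l in logits)
        m = m_new

    lse = m + math.log(d_sum)
    return lse - target


def naive_loss(x_row, W, label):
    """Reference that materialises every logit."""
    D, V = len(W), len(W[0])
    logits = [sum(x_row[d] * W[d][v] for d in range(D)) for v in range(V)]
    m = max(logits)
    return m + math.log(sum(math.exp(l - m) for l in logits)) - logits[label]


x_row = [0.3, -1.2, 0.7, 2.0, -0.4]
W = [[math.sin(d * 7 + v) for v in range(7)] for d in range(5)]
label = 3

tiled = fused_forward_row(x_row, W, label, d_block=2, v_block=3)
naive = naive_loss(x_row, W, label)
print(tiled, naive)
```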

We are now down to the last part of the kernel: the backward pass. Our goal is to compute the gradients with respect to X and W using the expression we derived earlier:

To remain memory-efficient, we once again process the vocabulary in tiles using a two-stage approach:

1. Recomputing Normalised Probabilities (P): Because we didn’t store the full logit matrix during the forward pass, we must recompute the activations for each tile. By reusing the Log-Sum-Exp calculated in the forward pass, we can normalise these activations on-the-fly. Subtracting the one-hot ground-truth label Y (i.e. subtracting 1 at the position of the target token) within this tile gives us a local chunk of the logit gradient, P.

2. Gradient Accumulation: With a tile of P in hand, we calculate the partial gradients. For dX, we perform a dot product with blocks of W^T; for dW, we multiply by tiles of X^T. To safely aggregate these values across the entire batch, we use Triton’s tl.atomic_add.

This operation acts as a thread-safe +=, ensuring that different programs updating the same weight gradient don’t overwrite one another.

Here are some more details on the implementation:

- The Stride Swap: When computing P . W_T, we don’t actually need to physically transpose the huge W matrix in memory. Instead, we invert the shapes and strides in W’s block pointer to read the rows of W as columns of W^T. This results in a “free” transpose that saves both time and VRAM.

- Numerical Precision: It’s worth noting that while X and W might be in bfloat16, the accumulation of dW and dX through atomic_add is usually performed in float32 to prevent the build-up of tiny rounding errors across thousands of rows.

- Contention Note: While atomic_add is essential for dW (because every program updates the same weights), dX is private to each program, meaning there is zero contention between program IDs for that specific tensor.

- Atomic Add Masking: atomic_add doesn’t support block pointers. Therefore, we implement the pointer and mask logic for dW explicitly.
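The stride swap mentioned in the list above can be illustrated without any GPU code. The sketch below views a flat row-major buffer through a (shape, strides) pair and obtains the transpose by swapping both, leaving the data untouched; the `read` helper and the tiny matrix are made up for illustration.

```python
# A [D, V] matrix stored row-major in a flat buffer, viewed through (shape, strides).

def read(buf, strides, i, j):
    """Element (i, j) of the view described by `strides`."""
    return buf[i * strides[0] + j * strides[1]]


D, V = 2, 3
w_flat = [1, 2, 3,
          4, 5, 6]                      # W = [[1, 2, 3], [4, 5, 6]]
w_shape, w_strides = (D, V), (V, 1)

# Transposed view: swap shape and strides, never move the data.
wt_shape, wt_strides = w_shape[::-1], w_strides[::-1]

w_t = [[read(w_flat, wt_strides, i, j) for j in range(wt_shape[1])]
       for i in range(wt_shape[0])]
print(w_t)  # [[1, 4], [2, 5], [3, 6]]
```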

The following figure is a representation of the backward pass for one iteration of the outer loop (i.e. one block along V and all blocks along D):

Here’s the full code for the backward pass:
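As a plain-Python stand-in for the Triton listing (a sketch, not the kernel itself), the recompute-and-accumulate structure can be emulated as below. It assumes a sum reduction over the batch, computes the LSE with a helper instead of reusing the forward pass, and uses `+=` where the kernel would use tl.atomic_add; all names and toy data are hypothetical.

```python
import math


def lse_row(x, W):
    """Log-sum-exp of x @ W (stands in for the value kept from the forward pass)."""
    D, V = len(W), len(W[0])
    logits = [sum(x[d] * W[d][v] for d in range(D)) for v in range(V)]
    m = max(logits)
    return m + math.log(sum(math.exp(l - m) for l in logits))


def fused_backward(X, W, labels, v_block):
    N, D, V = len(X), len(W), len(W[0])
    dX = [[0.0] * D for _ in range(N)]
    dW = [[0.0] * V for _ in range(D)]        # shared by every row
    for n in range(N):                        # one "program" per row
        x, lse = X[n], lse_row(X[n], W)
        for v0 in range(0, V, v_block):       # one vocabulary tile at a time
            for v in range(v0, min(v0 + v_block, V)):
                logit = sum(x[d] * W[d][v] for d in range(D))  # recomputed, never stored
                p = math.exp(logit - lse)     # softmax probability
                if v == labels[n]:
                    p -= 1.0                  # subtract the one-hot label
                for d in range(D):
                    dX[n][d] += p * W[d][v]   # contribution to P @ W^T
                    dW[d][v] += x[d] * p      # emulates tl.atomic_add on dW
    return dX, dW


def reference(X, W, labels):
    """Untiled closed form: G = softmax - one_hot, dX = G @ W^T, dW = X^T @ G."""
    N, D, V = len(X), len(W), len(W[0])
    G = []
    for n in range(N):
        logits = [sum(X[n][d] * W[d][v] for d in range(D)) for v in range(V)]
        m = max(logits)
        exps = [math.exp(l - m) for l in logits]
        total = sum(exps)
        g = [e / total for e in exps]
        g[labels[n]] -= 1.0
        G.append(g)
    dX = [[sum(G[n][v] * W[d][v] for v in range(V)) for d in range(D)] for n in range(N)]
    dW = [[sum(X[n][d] * G[n][v] for n in range(N)) for v in range(V)] for d in range(D)]
    return dX, dW


N, D, V = 3, 4, 5
X = [[math.cos(n * D + d) for d in range(D)] for n in range(N)]
W = [[math.sin(d * V + v) for v in range(V)] for d in range(D)]
labels = [0, 3, 4]

dX, dW = fused_backward(X, W, labels, v_block=2)
dX_ref, dW_ref = reference(X, W, labels)
max_diff_dX = max(abs(dX[n][d] - dX_ref[n][d]) for n in range(N) for d in range(D))
max_diff_dW = max(abs(dW[d][v] - dW_ref[d][v]) for d in range(D) for v in range(V))
print(max_diff_dX, max_diff_dW)
```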

This concludes the implementation of our kernel! The full code including the kernel and benchmark script is available here.

Memory Benchmark

Finally, we compare our kernel with the PyTorch baseline using hyperparameters inspired by Llama3 and an A100 GPU. Specifically, we consider a sequence length of S=16,384, a batch size of B=1 and an embedding dimension of D=4096; the vocabulary size is set to V=128,256.

As expected, the PyTorch baseline allocates a massive intermediate tensor to store the activations, resulting in a peak memory usage of 36.02GB. In comparison, our Triton kernel reduces the peak memory usage by 84% by allocating only 5.04GB using D_BLOCK=64 and V_BLOCK=64!

Using even smaller block sizes would allow for further memory gains at the cost of efficiency.

Atomic Limitations and Production Scaling

In this article, we focused on the technical and mathematical intuition behind fused Linear Cross-Entropy kernels. We used atomic operations like tl.atomic_add to keep the code minimal and readable. However, while our kernel successfully slashed memory usage by a staggering 86%, the Triton kernel is significantly slower than native PyTorch.

Unfortunately, the same atomic operations which make this kernel easier to write and comprehend come at the cost of a massive traffic jam, since thousands of threads try to modify the same memory address at once. Generally, tl.atomic_add is performant when contention is low. In our current implementation, we have:

- High Contention: For the weight gradient, every single program in the batch (up to 16,384 in our test) is trying to update the same memory tiles simultaneously. The hardware must serialise these updates, forcing thousands of threads to wait in line.

- Numerical Non-associativity: In computers, floating-point addition is non-associative. Rounding errors can accumulate differently depending on the order of operations, which is why correctness checks might pass on a T4 but fail on an A100: the latter has more streaming multiprocessors (SMs) performing more concurrent, non-deterministic additions.

Note on Precision: On Ampere and newer architectures, the TF32 format can further contribute to these discrepancies. For strict numerical parity, one should set allow_tf32=False or use higher precision types during the accumulation steps.
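The non-associativity point is easy to demonstrate on a CPU. The sketch below uses ordinary float64 values and an explicit accumulation loop (the helper `accumulate` is made up for illustration) to show that the same numbers summed in a different order give a different result, which is what happens when atomic adds land in a non-deterministic order.

```python
# Floating-point addition is not associative: grouping changes the result.
a, b, c = 0.1, 0.2, 0.3
left = (a + b) + c
right = a + (b + c)
print(left == right)  # False


def accumulate(values):
    """Sequential += in the given order, like atomic adds arriving one by one."""
    total = 0.0
    for v in values:
        total += v
    return total


# Same four values, two arrival orders.
order_a = [1e16, 1.0, -1e16, 1.0]   # the first 1.0 is absorbed by 1e16
order_b = [1e16, -1e16, 1.0, 1.0]   # the large terms cancel first
print(accumulate(order_a), accumulate(order_b))  # 1.0 2.0
```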

Path to Production

To move beyond this educational implementation and towards a production-ready kernel (I recommend looking at the Liger-Kernel implementation), one could implement several optimisations:

- Replacing dX Atomics: Since each program “owns” its row of X, we can use simple register accumulation followed by a tl.store, eliminating atomics for the input gradients entirely.

- A dedicated dW Kernel: To optimise the computation of dW, production kernels often use a different grid strategy where each program handles a block of W and iterates through the batch dimension, accumulating gradients locally before a single global write.

- Micro-batching: Advanced implementations, such as those in the Liger-Kernel library, process the sequence by blocks along the N dimension, making the memory scaling constant in the sequence length rather than linear. This enables the use of much larger batch sizes at a reduced memory cost.

Conclusion

This concludes our deep dive into fused linear cross-entropy kernels. Thanks for reading all the way through, and I hope this article gave you both the intuition and the practical understanding needed to build on these ideas and explore them further.

If you found this useful, consider sharing the article; it genuinely helps support the time and effort that goes into producing this work. And as always, feel free to contact me if you have questions, thoughts, or ideas for follow-ups.

Until next time! 👋