I’ll show how one can build a convolutional neural network capable of distinguishing between cancer types using a simple PyTorch classifier. The data and code used for training are publicly available, and the training can be done on a personal computer, possibly even on a CPU.

Cancer is an unfortunate side effect of our cells accumulating information errors over the course of our lives, leading to uncontrolled growth. As researchers, we study the patterns of these errors in order to understand the disease better. Seen from a data scientist’s perspective, the human genome is a roughly three-billion-letter-long string with letters A, C, G, T (i.e. 2 bits of information per letter). A copying error or an external event can remove, insert, or change a letter, causing a mutation and potentially a disruption of the genomic function.
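To make the 2-bits-per-letter picture concrete, here is a minimal sketch that packs a DNA string into an integer, models a single-letter change, and estimates the raw size of a three-billion-letter genome. The assignment of bit patterns to letters and all function names are arbitrary choices made for illustration, not a standard encoding.

```python
# Illustrative 2-bit code; which bit pattern belongs to which letter is arbitrary.
CODE = {"A": 0b00, "C": 0b01, "G": 0b10, "T": 0b11}
LETTERS = "ACGT"

def pack(seq: str) -> int:
    """Pack a DNA string into an integer, 2 bits per letter."""
    value = 0
    for letter in seq:
        value = (value << 2) | CODE[letter]
    return value

def unpack(value: int, length: int) -> str:
    """Recover a DNA string of the given length from its packed form."""
    letters = []
    for _ in range(length):
        letters.append(LETTERS[value & 0b11])
        value >>= 2
    return "".join(reversed(letters))

def substitute(seq: str, pos: int, letter: str) -> str:
    """Model a point mutation: change the letter at a 0-indexed position."""
    return seq[:pos] + letter + seq[pos + 1:]

seq = "GATTACA"
packed = pack(seq)
mutated = substitute(seq, 2, "C")

# Raw size of a three-billion-letter genome at 2 bits per letter, in megabytes.
genome_megabytes = 3_000_000_000 * 2 / 8 / 1_000_000
print(unpack(packed, len(seq)), mutated, genome_megabytes)
```

At 2 bits per letter the whole genome fits into roughly 750 MB before any compression.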

However, individual errors almost never lead to cancer development. The human body has multiple mechanisms to prevent cancer from developing, including dedicated proteins, the so-called tumor suppressors. A list of necessary conditions, the so-called “hallmarks of cancer”, has to be met for a cell to be able to sustain growth.

Therefore, changes to individual letters of the DNA are usually insufficient to cause self-sustained proliferative growth. The vast majority of mutation-mediated cancers (as opposed to other sources of cancer, for example the HPV virus) also exhibit copy number (CN) changes. These are large-scale events, often adding or removing millions of DNA bases at a time.

These massive changes to the structure of the genome lead to the loss of genes that would prevent the cancer from forming, while accumulating genes that promote cell growth. By sequencing the DNA of these cells, we can identify these changes, which quite often happen in regions specific to the cancer type. Copy number values for each allele can be derived from sequencing data using copy number callers.

Processing the Copy Number Profiles

One of the advantages of working with copy number (CN) profiles is that they are not biometric and can therefore be published without access restrictions. This allows us to accumulate data over time from multiple studies to build datasets of sufficient size. However, the data coming from different studies is not always directly comparable, as it may be generated using different technologies, have different resolutions, or be pre-processed in different ways.

To obtain the data and jointly process and visualize them, we will be using the tool CNSistent, developed as part of the work of the Institute for Computational Cancer Biology of the University Clinic, Cologne, Germany.

First we clone the repository with the data and check out the version used in this text:

git clone [email protected]:schwarzlab/cnsistent.git

cd cnsistent

git checkout v0.9.0

Because the data we will be using are inside the repository (~1 GB of data), it takes a few minutes to download. For cloning, both Git and Git LFS have to be present on the system.

Inside the repository is a requirements.txt file that lists all the dependencies, which can be installed using pip install -r requirements.txt.

(Creating a virtual environment first is recommended.) Once the requirements are installed, CNSistent can be installed by running pip install -e . in the same folder. The -e flag installs the package from its source directory, which is necessary for access to the data through the API.

The repository contains raw data from three datasets: TCGA, PCAWG, and TRACERx. These first need to be pre-processed. This can be done by running the script bash ./scripts/data_process.sh.

Now we have the processed datasets and can load them using the CNSistent data utility library:

import cns.data_utils as cdu
samples_df, cns_df = cdu.main_load("imp")
print(cns_df.head())

Producing the following result:

|    | sample_id | chrom | start    | end      | major_cn | minor_cn |
|---:|:------------|:--------|---------:|---------:|-----------:|-----------:|
| 0 | SP101724 | chr1 | 0 | 27256755 | 2 | 2 |
| 1 | SP101724 | chr1 | 27256755 | 28028200 | 3 | 2 |
| 2 | SP101724 | chr1 | 28028200 | 32976095 | 2 | 2 |
| 3 | SP101724 | chr1 | 32976095 | 33354394 | 5 | 2 |
| 4 | SP101724 | chr1 | 33354394 | 33554783 | 3 | 2 |

This table shows the copy number data with the following columns:

- sample_id: the identifier of the sample,
- chrom: the chromosome,
- start: the start position of the segment (0-indexed, inclusive),
- end: the end position of the segment (0-indexed, exclusive),
- major_cn: the number of copies of the major allele (the larger of the two),
- minor_cn: the number of copies of the minor allele (the smaller of the two).

On the first line we can therefore see a segment stating that sample SP101724 has 2 copies of the major allele and 2 copies of the minor allele (4 in total) in the region of chromosome 1 from 0 to 27.26 megabases.
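As a quick illustration of how to read these coordinates, the sketch below re-types the five rows printed above into a small pandas dataframe and derives the segment length and the total copy number. The helper columns length and total_cn are names introduced here for illustration only.

```python
import pandas as pd

# The five segments printed above, re-typed by hand for illustration.
cns_df = pd.DataFrame({
    "sample_id": ["SP101724"] * 5,
    "chrom": ["chr1"] * 5,
    "start": [0, 27256755, 28028200, 32976095, 33354394],
    "end": [27256755, 28028200, 32976095, 33354394, 33554783],
    "major_cn": [2, 3, 2, 5, 3],
    "minor_cn": [2, 2, 2, 2, 2],
})

# With half-open coordinates the length is simply end - start.
cns_df["length"] = cns_df["end"] - cns_df["start"]
# The total copy number is the sum over both alleles.
cns_df["total_cn"] = cns_df["major_cn"] + cns_df["minor_cn"]

print(cns_df[["start", "end", "length", "total_cn"]])
```

Because the end position is exclusive, each segment starts exactly where the previous one ends, with no overlap and no off-by-one gap.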

The second dataframe we loaded, samples_df, contains the metadata for the samples. For our purposes only the type is important. We can examine the available types by running:

import matplotlib.pyplot as plt
# Count the samples of each cancer type and show them as a bar chart
type_counts = samples_df["type"].value_counts()
plt.figure(figsize=(10, 6))
type_counts.plot(kind='bar')
plt.ylabel('Count')
plt.xticks(rotation=90)
plt.show()

In the table shown above, we can observe a potential problem with the data: the lengths of the individual segments are not uniform. The first segment is 27.26 megabases long, while the second is only 0.77 megabases long. This is a problem for the neural network, which expects the input to be of a fixed size.

We could technically take all existing breakpoints and create segments between all breakpoints in the dataset, the so-called minimum consistent segmentation. This would, however, result in a huge number of segments: a quick check using len(cns_df["end"].unique()) shows that there are 823652 unique breakpoints.
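The idea of segmenting at every breakpoint can be sketched in a few lines of plain Python. This is a toy version on made-up coordinates for two hypothetical samples, assuming every sample covers the region without gaps; it is not how CNSistent implements it.

```python
# Toy segments for two hypothetical samples on one chromosome: (start, end).
segments = {
    "sample_A": [(0, 40), (40, 100)],
    "sample_B": [(0, 25), (25, 70), (70, 100)],
}

def consistent_segmentation(segments_by_sample):
    """Split the region at every breakpoint seen in any sample."""
    breakpoints = set()
    for sample_segments in segments_by_sample.values():
        for start, end in sample_segments:
            breakpoints.update((start, end))
    ordered = sorted(breakpoints)
    # Consecutive breakpoints delimit the new half-open segments.
    return list(zip(ordered[:-1], ordered[1:]))

merged = consistent_segmentation(segments)
print(merged)
```

Every additional sample can only add breakpoints, never remove them, which is why the number of segments grows so quickly on a dataset with thousands of samples.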

Alternatively, we can use CNSistent to create a new segmentation using a binning algorithm. This will create segments of a fixed size, which can be used as input to the neural network. In our work we have found 1–3 megabase segments to provide the best trade-off between accuracy and overfitting. We first create the segmentation and then apply it to obtain new CNS data using the following Bash script:

threads=8
cns segment whole --out "./out/segs_3MB.bed" --split 3000000 --remove gaps --filter 300000
for dataset in TRACERx PCAWG TCGA_hg19;
do
    cns aggregate ./out/${dataset}_cns_imp.tsv --segments ./out/segs_3MB.bed --out ./out/${dataset}_bin_3MB.tsv --samples ./out/${dataset}_samples.tsv --threads $threads
done

The loop processes each dataset separately, while maintaining the same segmentation. The --threads flag is used to speed up the process by running the aggregation in parallel; adjust the value according to the number of cores available.

The --remove gaps --filter 300000 arguments will remove regions of low mappability (aka gaps) and filter out segments shorter than 300 Kb. The --split 3000000 argument will create segments of 3 Mb.
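To give an intuition for what binning and aggregation do, here is a toy sketch in plain Python. It cuts a chromosome into fixed-size bins, drops a tail that is too short, and assigns each bin the length-weighted mean copy number of the segments overlapping it. The length-weighted mean and the function names make_bins and aggregate are assumptions made for this illustration; the rule CNSistent actually applies may differ, and gap removal is not modelled.

```python
def make_bins(chrom_length, bin_size, min_size):
    """Cut [0, chrom_length) into fixed-size bins, dropping a too-short tail."""
    bins = []
    for start in range(0, chrom_length, bin_size):
        end = min(start + bin_size, chrom_length)
        if end - start >= min_size:
            bins.append((start, end))
    return bins

def aggregate(segments, bins):
    """Length-weighted mean copy number of (start, end, cn) segments per bin."""
    values = []
    for bin_start, bin_end in bins:
        covered = 0
        weighted = 0.0
        for seg_start, seg_end, cn in segments:
            overlap = min(bin_end, seg_end) - max(bin_start, seg_start)
            if overlap > 0:
                covered += overlap
                weighted += overlap * cn
        # Bins not covered by any segment get NaN.
        values.append(weighted / covered if covered else float("nan"))
    return values

# Toy chromosome of 10 units, bins of 4 units, tails shorter than 3 dropped.
bins = make_bins(chrom_length=10, bin_size=4, min_size=3)
# Toy copy-number segments with uneven lengths.
segments = [(0, 2, 2), (2, 6, 4), (6, 10, 1)]
binned = aggregate(segments, bins)
print(bins, binned)
```

Whatever the original segment boundaries were, every sample ends up with one value per bin, which is exactly the fixed-size input the network needs.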

Non-small-cell Lung Carcinoma

In this text we will focus on the classification of non-small-cell lung carcinoma, which accounts for about 85% of all lung cancers, specifically on the distinction between adenocarcinoma and squamous-cell carcinoma. It is important to differentiate between the two, as their treatment regimes differ, and new methods give hope for non-invasive detection from blood samples or nasal swabs.

We will use the segments produced above and load them using a provided utility function. Since we are classifying between two types of cancer, we can filter the samples to include only the relevant types, LUAD (adenocarcinoma) and LUSC (squamous cell carcinoma), and plot the first three samples:

import cns
import cns.data_utils as cdu
samples_df, cns_df = cdu.main_load("3MB")
# Keep only the two lung cancer types
samples_df = samples_df.query("type in ['LUAD', 'LUSC']")
cns_df = cns.select_CNS_samples(cns_df, samples_df)
# Keep only the autosomes
cns_df = cns.only_aut(cns_df)
cns.fig_lines(cns.cns_head(cns_df, n=3))

Major and minor copy number segments in 3MB bins for the first three samples. In this case all three samples come from multi-region sequencing of the same patient, demonstrating how heterogeneous cancer cells may be even within a single tumor.

Convolutional Neural Network Model

Running the code requires Python 3 with PyTorch 2+ installed and a Bash-compatible shell. An NVIDIA GPU is recommended for faster training, but not necessary.

First we outline a convolutional neural community with three layers:

import torch.nn as nn

class CNSConvNet(nn.Module):
    def __init__(self, num_classes):
        super(CNSConvNet, self).__init__()
        # Three convolution + ReLU + max-pooling blocks
        self.conv_layers = nn.Sequential(
            nn.Conv1d(in_channels=2, out_channels=16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool1d(kernel_size=2),
            nn.Conv1d(in_channels=16, out_channels=32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool1d(kernel_size=2),
            nn.Conv1d(in_channels=32, out_channels=64, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool1d(kernel_size=2)
        )
        # Two fully connected layers with dropout in between
        self.fc_layers = nn.Sequential(
            nn.LazyLinear(128),
            nn.ReLU(),
            nn.Dropout(0.5),
            nn.Linear(128, num_classes)
        )

    def forward(self, x):
        x = self.conv_layers(x)
        # Flatten (batch, channels, length) to (batch, channels * length)
        x = x.view(x.size(0), -1)
        x = self.fc_layers(x)
        return x

This is a boilerplate deep CNN with 2 input channels, one for each allele, and three convolutional layers using a 1D kernel of size 3 and the ReLU activation function. The convolutional layers are followed by max pooling layers with a kernel size of 2. Convolution is traditionally used for edge detection, which is useful for us as we are interested in changes in the copy number, i.e. the edges of the segments.

The output of the convolutional layers is then flattened and passed through two fully connected layers with dropout. The LazyLinear layer connects the output of the 64 stacked channels into one layer of 128 nodes, without us needing to calculate how many nodes there are at the end of the convolution. This is where most of our parameters are, so we also apply dropout to prevent overfitting.
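The size that LazyLinear infers can also be worked out by hand, which helps when estimating the parameter count. The sketch below applies the standard output-length formula for 1D convolution and pooling (with dilation 1) to the three blocks of the model. The input length of 1000 bins is an arbitrary example value, not the number of bins in the dataset, and the function names are introduced here for illustration.

```python
def conv1d_out_length(length, kernel_size, padding=0, stride=1):
    """Output length of a 1D convolution or pooling layer (dilation 1)."""
    return (length + 2 * padding - kernel_size) // stride + 1

def flattened_size(num_bins, channels=64, num_blocks=3):
    """Number of inputs the first fully connected layer receives."""
    length = num_bins
    for _ in range(num_blocks):
        # Conv1d(kernel_size=3, padding=1) keeps the length unchanged.
        length = conv1d_out_length(length, kernel_size=3, padding=1)
        # MaxPool1d(kernel_size=2) uses stride 2 by default, halving the length.
        length = conv1d_out_length(length, kernel_size=2, stride=2)
    return channels * length

# 1000 is an arbitrary example bin count, not the size of the real input.
num_inputs = flattened_size(1000)
# Weights plus biases of a linear layer with 128 outputs.
fc_parameters = num_inputs * 128 + 128
print(num_inputs, fc_parameters)
```

For this example input the first fully connected layer alone holds over a million parameters, far more than the three convolutional layers combined.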

Training the Model

First we have to convert the dataframes to Torch tensors. We use a utility function bins_to_features, which creates a 3D feature array of the format (samples, alleles, segments). In the process we also split the data into training and testing sets in a 4:1 ratio:

import torch
from torch.utils.data import TensorDataset, DataLoader
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import train_test_split

# Convert data to features and labels
features, samples_list, columns_df = cns.bins_to_features(cns_df)

# Convert data to Torch tensors
X = torch.FloatTensor(features)
label_encoder = LabelEncoder()
y = torch.LongTensor(label_encoder.fit_transform(samples_df.loc[samples_list]["type"]))

# Train/test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Create dataloaders
train_loader = DataLoader(TensorDataset(X_train, y_train), batch_size=32, shuffle=True)
test_loader = DataLoader(TensorDataset(X_test, y_test), batch_size=32, shuffle=False)

We can now train the model using the following training loop with 20 epochs. The Adam optimizer and the cross-entropy loss are typically used for classification tasks, so we use them here as well:

# Set up the model, loss, and optimizer
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model = CNSConvNet(num_classes=len(label_encoder.classes_)).to(device)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

# Training loop
num_epochs = 20
for epoch in range(num_epochs):
    model.train()
    running_loss = 0.0
    for inputs, labels in train_loader:
        inputs, labels = inputs.to(device), labels.to(device)
        # Clear gradients
        optimizer.zero_grad()
        # Forward pass
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        # Backward pass and optimize
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
    # Print statistics
    print(f'Epoch {epoch+1}/{num_epochs}, Loss: {running_loss/len(train_loader):.4f}')

This concludes the training. Afterwards, we can evaluate the model and plot the confusion matrix:

import numpy as np
import matplotlib.pyplot as plt
from sklearn.metrics import confusion_matrix
import seaborn as sns

# Loop over batches in the test set and collect predictions
model.eval()
y_true = []
y_pred = []
with torch.no_grad():
    for inputs, labels in test_loader:
        inputs, labels = inputs.to(device), labels.to(device)
        outputs = model(inputs)
        y_true.extend(labels.cpu().numpy())
        # The predicted class is the one with the highest score
        y_pred.extend(outputs.argmax(dim=1).cpu().numpy())

# Calculate accuracy and confusion matrix
accuracy = (np.array(y_true) == np.array(y_pred)).mean()
cm = confusion_matrix(y_true, y_pred)

# Plot the confusion matrix
plt.figure(figsize=(3, 3), dpi=200)
sns.heatmap(cm, annot=True, fmt='d', cmap='Blues', xticklabels=label_encoder.classes_, yticklabels=label_encoder.classes_)
plt.xlabel('Predicted')
plt.ylabel('True')
plt.title('Confusion Matrix, accuracy={:.2f}'.format(accuracy))
plt.savefig("confusion_matrix.png", bbox_inches='tight')

The training process takes about 7 seconds in total on an NVIDIA RTX 4090 GPU.

Conclusion

We have developed an efficient and accurate classifier of lung cancer subtype from copy number data. As we have shown, such models transfer well to new studies and sources of sequence data.

Mass-scale AI is often justified, among other things, as a “solution to cancer”. However, as in this article, small models with classical approaches usually serve their purpose well. Some even argue that the actual obstacle for machine learning in biology and medicine is not solving problems, but actually making an impact for patients.

Still, machine learning has been able to largely solve at least one major puzzle of computational biology, reinvigorating the focus on machine learning for cancer. With some luck, we might be able to look back at the next decade as the time we finally “solved” cancer.

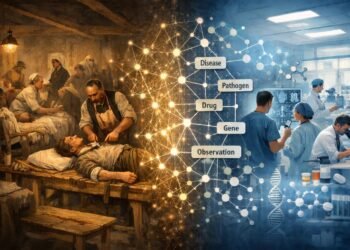

Bonus: Cell2Sentence

The foundation models of today often contain information, such as which genes have high copy numbers in which cancers, as part of their training set. This thought led to the creation of LLM-based approaches, like Cell2Sentence, where a dataset is converted to natural language and submitted to an LLM.

We can utilize this approach here. First we convert a random LUSC sample to a ranked list of genes, based on their copy number value:

import cns
import cns.data_utils as cdu
samples_df, cns_df = cdu.main_load("COSMIC")
cns_df = cns.add_total_cn(cns_df)
lusc_df = cns.select_cns_by_type(cns_df, samples_df, "LUSC")
lusc_one_df = cns.cns_head(lusc_df, 1)
# Gene names ordered from the highest to the lowest total copy number
rank_list = lusc_one_df.sort_values(by="total_cn", ascending=False)["name"].to_list()

Then we convert the list of genes to a natural language query:

query = "Below is a list of COSMIC {gene_names} ordered by descending total copy number in a cell. Your task is to identify the cancer type of that sample.\n\n"
query += "{gene_names}: " + " ".join(rank_list) + "\n\n"
query += "Answer:"
print(query)

Submitted to ChatGPT 5, the model correctly identifies the LUSC cancer:

Based on the gene copy-number profile you listed, the strongest clue is the massive amplification of 11q13 (CCND1, FADD) together with SOX2, PIK3CA, TP63, EGFR, FGFR1, MYC, CCND1/CCND2/CCND3, CDK6, CDK4. This is a very characteristic pattern of squamous cell carcinoma of the lung (LUSC):

CCND1 amplification is one of the most frequent events in LUSC.

SOX2 amplification (3q26) is a defining driver of lung squamous carcinoma, rarely seen in adenocarcinoma.

TP63 amplification is again highly specific to squamous tumors.

Co-amplifications of PIK3CA, FGFR1, EGFR, MYC match this profile.

The broad set of genes amplified (many regulators of squamous differentiation and RTK/RAS/PI3K signaling) reflects typical copy-number–driven LUSC biology.

By contrast, lung adenocarcinomas usually show KRAS, EGFR, ALK, ROS1, MET exon 14, ERBB2 point mutations/fusions and have fewer widespread squamous-type CNAs.

Answer: Lung squamous cell carcinoma (LUSC).

However, generating this output for one sample takes longer than classifying the whole dataset with our model, and it would cost around $200 in API fees to classify our whole dataset.