Ground truth is rarely perfect. From scientific measurements to the human annotations used to train deep learning models, ground truth always contains some amount of error. ImageNet, arguably the most well-curated image dataset, has 0.3% errors in human annotations. So how can we evaluate predictive models using such erroneous labels?

In this article, we explore how to account for errors in test data labels and estimate a model’s “true” accuracy.

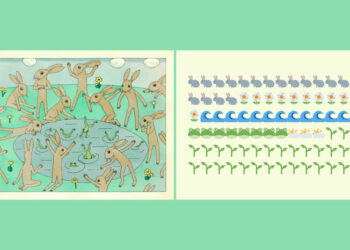

Example: image classification

Let’s say there are 100 images, each containing either a cat or a dog. The images are labeled by human annotators who are known to have 96% accuracy (Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ). If we train an image classifier on some of this data and find that it has 90% accuracy on a hold-out set (Aᵐᵒᵈᵉˡ), what is the “true” accuracy of the model (Aᵗʳᵘᵉ)? A couple of observations first:

- Within the 90% of predictions that the model got “right,” some examples may have been incorrectly labeled, meaning both the model and the ground truth are wrong. This artificially inflates the measured accuracy.

- Conversely, within the 10% of “incorrect” predictions, some may actually be cases where the model is right and the ground truth label is wrong. This artificially deflates the measured accuracy.

Given these complications, how much can the true accuracy vary?

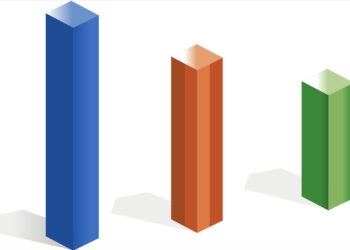

Range of true accuracy

The true accuracy of our model depends on how its errors correlate with the errors in the ground truth labels. If our model’s errors perfectly overlap with the ground truth errors (i.e., the model is wrong in exactly the same way as the human labelers), its true accuracy is:

Aᵗʳᵘᵉ = 0.90 - (1 - 0.96) = 86%

Alternatively, if our model is wrong in exactly the opposite way from the human labelers (perfect negative correlation), its true accuracy is:

Aᵗʳᵘᵉ = 0.90 + (1 - 0.96) = 94%

Or more generally:

Aᵗʳᵘᵉ = Aᵐᵒᵈᵉˡ ± (1 - Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ)
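The range can be computed with a small helper. This is a minimal sketch: the function name `true_accuracy_bounds` and its parameter names are illustrative, and clipping the result to [0, 1] is an added safeguard (an accuracy cannot fall outside that interval), not something the formula itself states.

```python
def true_accuracy_bounds(a_model: float, a_groundtruth: float) -> tuple[float, float]:
    """Return (lower, upper) bounds on the true accuracy."""
    label_error = 1.0 - a_groundtruth
    # Lower bound: every label error coincides with an "agreeing" prediction.
    lower = max(0.0, a_model - label_error)
    # Upper bound: every label error coincides with a "disagreeing" prediction.
    upper = min(1.0, a_model + label_error)
    return lower, upper


lower, upper = true_accuracy_bounds(0.90, 0.96)
print(f"{lower:.2f} to {upper:.2f}")  # 0.86 to 0.94
```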

It’s important to note that the model’s true accuracy can be either lower or higher than its reported accuracy, depending on the correlation between model errors and ground truth errors.

Probabilistic estimate of true accuracy

In some cases, label inaccuracies are spread randomly across the examples rather than systematically biased toward certain labels or regions of the feature space. If the model’s inaccuracies are independent of the inaccuracies in the labels, we can derive a more precise estimate of its true accuracy.

When we measure Aᵐᵒᵈᵉˡ (90%), we’re counting cases where the model’s prediction matches the ground truth label. This can happen in two scenarios:

- Both the model and the ground truth are correct. This happens with probability Aᵗʳᵘᵉ × Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ.

- Both the model and the ground truth are wrong (in the same way). This happens with probability (1 - Aᵗʳᵘᵉ) × (1 - Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ).

Under independence, we can express this as:

Aᵐᵒᵈᵉˡ = Aᵗʳᵘᵉ × Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ + (1 - Aᵗʳᵘᵉ) × (1 - Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ)

Rearranging the terms, we get:

Aᵗʳᵘᵉ = (Aᵐᵒᵈᵉˡ + Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ - 1) / (2 × Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ - 1)

In our example, that equals (0.90 + 0.96 - 1) / (2 × 0.96 - 1) = 93.5%, which is within the range of 86% to 94% that we derived above.
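The same calculation as a short Python sketch. The function name `true_accuracy_independent` is illustrative, and the guard against a ground truth accuracy of exactly 0.5 is an added assumption: at that value the denominator is zero and the labels carry no information about the true class.

```python
def true_accuracy_independent(a_model: float, a_groundtruth: float) -> float:
    """Estimate true accuracy, assuming two classes and independent errors."""
    denominator = 2.0 * a_groundtruth - 1.0
    if abs(denominator) < 1e-12:
        # Labels that are right only half the time make the estimate undefined.
        raise ValueError("a_groundtruth of 0.5 makes the estimate undefined")
    return (a_model + a_groundtruth - 1.0) / denominator


estimate = true_accuracy_independent(0.90, 0.96)
print(f"{estimate:.3f}")  # 0.935
```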

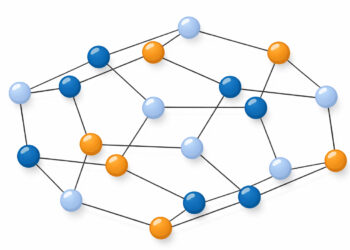

The independence paradox

Plugging in Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ as 0.96 from our example, we get

Aᵗʳᵘᵉ = (Aᵐᵒᵈᵉˡ - 0.04) / 0.92. Let’s plot this below.

Strange, isn’t it? If we assume that the model’s errors are uncorrelated with the ground truth errors, then its true accuracy Aᵗʳᵘᵉ always lies above the 1:1 line when the reported accuracy is greater than 0.5. This holds true even if we vary Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ.
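One way to see why is to subtract the reported accuracy from the independence estimate and factor the result. In this sketch, A_m and A_g are shorthand introduced here for Aᵐᵒᵈᵉˡ and Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ:

```latex
\begin{aligned}
A^{\text{true}} - A_m
  &= \frac{A_m + A_g - 1}{2A_g - 1} - A_m \\
  &= \frac{A_m + A_g - 1 - 2A_g A_m + A_m}{2A_g - 1} \\
  &= \frac{(2A_m - 1)(1 - A_g)}{2A_g - 1}
\end{aligned}
```

For any ground truth accuracy between 0.5 and 1, both (1 - A_g) and (2A_g - 1) are positive, so the sign of the gap follows (2A_m - 1): positive when the reported accuracy exceeds 0.5, zero at exactly 0.5, and negative below it.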

Error correlation: why models often struggle where humans do

The independence assumption is crucial but often doesn’t hold in practice. If some images of cats are very blurry, or some small dogs look like cats, then the ground truth errors and the model errors are likely to be correlated. This causes Aᵗʳᵘᵉ to be closer to the lower bound (Aᵐᵒᵈᵉˡ - (1 - Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ)) than to the upper bound.
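A small simulation can make this concrete. The sketch below is an illustration under stated assumptions, not a reproduction of any experiment: it uses two classes, a 4% label error rate, and hypothetical parameters `p_wrong_if_label_right` and `p_wrong_if_label_wrong` that control how often the model errs on correctly and incorrectly labeled examples. Setting them equal gives independent errors; raising the second makes the model repeat the labelers' mistakes.

```python
import random


def simulate(n, label_err, p_wrong_if_label_right, p_wrong_if_label_wrong, seed=0):
    """Return (measured_accuracy, true_accuracy) for simulated two-class data."""
    rng = random.Random(seed)
    agree_with_label = 0
    truly_correct = 0
    for _ in range(n):
        label_wrong = rng.random() < label_err
        p_wrong = p_wrong_if_label_wrong if label_wrong else p_wrong_if_label_right
        model_wrong = rng.random() < p_wrong
        # With two classes, prediction and label match when both are right
        # or both are wrong.
        agree_with_label += model_wrong == label_wrong
        truly_correct += not model_wrong
    return agree_with_label / n, truly_correct / n


# Independent errors: the model is wrong 6.5% of the time regardless of the label.
independent = simulate(200_000, 0.04, 0.065, 0.065)
# Correlated errors: the model is wrong on 90% of the mislabeled examples.
correlated = simulate(200_000, 0.04, 0.10, 0.90)
print("independent (measured, true):", independent)
print("correlated  (measured, true):", correlated)
```

With these settings both runs should report a measured accuracy near 0.90, but the true accuracy should be near 0.935 in the independent case and near 0.868 (0.96 × 0.90 + 0.04 × 0.10) in the correlated case, close to the 0.86 lower bound.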

More generally, model errors tend to be correlated with ground truth errors when:

- Both humans and models struggle with the same “difficult” examples (e.g., ambiguous images, edge cases)

- The model has learned the same biases present in the human labeling process

- Certain classes or examples are inherently ambiguous or challenging for any classifier, human or machine

- The labels themselves are generated from another model

- There are too many classes (and thus too many different ways of being wrong)

Best practices

The true accuracy of a model can differ significantly from its measured accuracy. Understanding this difference is crucial for proper model evaluation, especially in domains where obtaining perfect ground truth is impossible or prohibitively expensive.

When evaluating model performance with imperfect ground truth:

- Conduct targeted error analysis: Examine examples where the model disagrees with the ground truth to identify potential ground truth errors.

- Consider the correlation between errors: If you suspect correlation between model and ground truth errors, the true accuracy is likely closer to the lower bound (Aᵐᵒᵈᵉˡ - (1 - Aᵍʳᵒᵘⁿᵈᵗʳᵘᵗʰ)).

- Obtain multiple independent annotations: Having multiple annotators can help estimate ground truth accuracy more reliably.
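As one hedged illustration of the last point, the same independence reasoning used earlier can turn an agreement rate between two annotators into a rough accuracy estimate. This sketch rests on strong simplifying assumptions that are not from the text above: two classes, two annotators with the same accuracy a > 0.5, and independent errors, so that the agreement rate equals a² + (1 - a)². The function name is illustrative.

```python
import math


def annotator_accuracy_from_agreement(agreement: float) -> float:
    """Estimate per-annotator accuracy from two annotators' agreement rate.

    Assumes two classes, equal accuracy a > 0.5 for both annotators, and
    independent errors, so that agreement = a**2 + (1 - a)**2.
    """
    if not 0.5 <= agreement <= 1.0:
        raise ValueError("agreement must lie in [0.5, 1] under these assumptions")
    # Solve 2a^2 - 2a + (1 - agreement) = 0 and keep the root above 0.5.
    return (1.0 + math.sqrt(2.0 * agreement - 1.0)) / 2.0


print(f"{annotator_accuracy_from_agreement(0.9232):.2f}")  # 0.96
```

If annotator errors are themselves correlated (for example, on the same ambiguous images), the agreement rate is inflated and this estimate will overstate ground truth accuracy.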

Conclusion

In summary, we learned that:

- The range of possible true accuracy depends on the error rate in the ground truth

- When errors are independent, the true accuracy is often higher than measured for models better than random chance

- In real-world scenarios, errors are rarely independent, and the true accuracy is likely closer to the lower bound