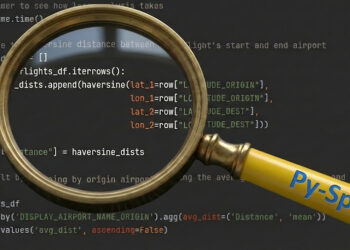

Loading the Florence-2 model and a sample image

After installing and importing the required libraries (as demonstrated in the accompanying Colab notebook), we begin by loading the Florence-2 model, its processor, and the input image of a camera:

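```python
import torch
from PIL import Image
from transformers import AutoModelForCausalLM, AutoProcessor

# Load model and processor (trust_remote_code is required for Florence-2):
model_id = 'microsoft/Florence-2-large'
model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True, torch_dtype='auto').eval().cuda()
processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)

# Load image (img_path is the path to the camera photo):
image = Image.open(img_path)
```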

Auxiliary Functions

In this tutorial, we use several auxiliary functions. The most important is the core function run_example, which generates a response from the Florence-2 model.

The run_example function combines the task prompt with any additional text input (if provided) into a single prompt. The processor then tokenizes the prompt and preprocesses the image into input_ids and pixel_values, which serve as inputs to the model. The magic happens in the model.generate step, where the model's response is generated. Here's a breakdown of some key parameters:

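- max_new_tokens=1024: Sets the maximum number of tokens the model may generate, allowing for detailed responses.

- do_sample=False: Disables sampling, so the same input always yields the same response.

- num_beams=3: Uses beam search, keeping the 3 highest-scoring partial sequences at each step instead of only the single most likely token, so that several candidate sequences are explored to find the best overall output.

- early_stopping=False: Controls when beam search stops. With False, Hugging Face's generate applies a heuristic and stops once it is very unlikely to find better candidates (True would stop as soon as num_beams finished candidates exist). In either case, generation never exceeds max_new_tokens.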
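To make do_sample=False and num_beams more concrete, here is a toy, self-contained beam search over a made-up next-token table. It is a simplified sketch, not Hugging Face's implementation: it ranks sequences by raw summed log-probability (no length penalty) and ignores early_stopping. The vocabulary and probabilities in NEXT are invented purely for illustration.

```python
import math

# Made-up next-token probabilities, keyed by the previous token (illustration only).
NEXT = {
    "<s>": {"a": 0.5, "the": 0.4, "</s>": 0.1},
    "a": {"black": 0.7, "camera": 0.3},
    "the": {"camera": 0.9, "black": 0.1},
    "black": {"camera": 1.0},
    "camera": {"</s>": 1.0},
}


def beam_search(num_beams, max_new_tokens):
    """Deterministic beam search (no sampling) over the toy NEXT table."""
    beams = [(["<s>"], 0.0)]  # (token sequence, summed log-probability)
    finished = []
    for _ in range(max_new_tokens):
        # Expand every live beam by every possible next token.
        candidates = [
            (tokens + [tok], score + math.log(p))
            for tokens, score in beams
            for tok, p in NEXT[tokens[-1]].items()
        ]
        candidates.sort(key=lambda c: c[1], reverse=True)
        # Keep the num_beams best unfinished sequences; set aside completed ones.
        beams = []
        for tokens, score in candidates:
            if tokens[-1] == "</s>":
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
            if len(beams) == num_beams:
                break
        if not beams:
            break
    finished.extend(beams)  # sequences still open when the length limit is hit
    return max(finished, key=lambda c: c[1])


greedy_tokens, greedy_score = beam_search(num_beams=1, max_new_tokens=10)
beam_tokens, beam_score = beam_search(num_beams=3, max_new_tokens=10)
print(greedy_tokens, round(math.exp(greedy_score), 2))  # ['<s>', 'a', 'black', 'camera', '</s>'] 0.35
print(beam_tokens, round(math.exp(beam_score), 2))      # ['<s>', 'the', 'camera', '</s>'] 0.36
```

With these toy numbers, greedy decoding (num_beams=1) commits to the locally best first token and ends with "a black camera" (probability 0.35), while num_beams=3 keeps "the" alive and finds the more probable "the camera" (0.36).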

Finally, the model's output is decoded and post-processed with processor.batch_decode and processor.post_process_generation to produce the final response, a dictionary keyed by the task prompt, which run_example returns.

```python
def run_example(image, task_prompt, text_input=''):
    # Combine the task token with optional extra text (e.g. phrases or a region string)
    prompt = task_prompt + text_input
    # Tokenize the prompt and preprocess the image; floating-point inputs are cast to fp16 on the GPU
    inputs = processor(text=prompt, images=image, return_tensors="pt").to('cuda', torch.float16)
    generated_ids = model.generate(
        input_ids=inputs["input_ids"].cuda(),
        pixel_values=inputs["pixel_values"].cuda(),
        max_new_tokens=1024,
        do_sample=False,
        num_beams=3,
        early_stopping=False,
    )
    # Keep special tokens: post-processing needs them (e.g. location tokens) to parse the output
    generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]
    parsed_answer = processor.post_process_generation(
        generated_text,
        task=task_prompt,
        image_size=(image.width, image.height)
    )
    return parsed_answer
```
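The post-processing step is easiest to picture on a concrete string. Florence-2 writes coordinates as special location tokens; the sketch below assumes boxes appear as a text label followed by four `<loc_N>` tokens (x1, y1, x2, y2) on a 1000-bin grid, and maps each bin back to the center of its pixel range. `parse_boxes` is a hypothetical helper that only approximates what post_process_generation does for box-producing tasks; it is not the processor's code.

```python
import re

# Assumption: a box is "label<loc_x1><loc_y1><loc_x2><loc_y2>", each N a bin index in 0..999.
BOX_PATTERN = re.compile(r"([^<>]+)((?:<loc_\d+>){4})")


def parse_boxes(generated_text, image_size, num_bins=1000):
    """Turn 'label + four <loc_N> tokens' text into pixel boxes (hypothetical helper)."""
    width, height = image_size
    sizes = (width, height, width, height)
    result = {"bboxes": [], "labels": []}
    for label, loc_tokens in BOX_PATTERN.findall(generated_text):
        bins = [int(n) for n in re.findall(r"<loc_(\d+)>", loc_tokens)]
        # Map each bin index back to the center of the pixel range it covers.
        box = [(b + 0.5) * size / num_bins for b, size in zip(bins, sizes)]
        result["bboxes"].append(box)
        result["labels"].append(label.strip())
    return result


# Made-up decoder output; the [^<>] label pattern skips special tokens such as </s> and <s>.
text = "</s><s>camera<loc_100><loc_200><loc_300><loc_400>lens<loc_0><loc_0><loc_999><loc_999></s>"
print(parse_boxes(text, image_size=(1000, 500)))
# {'bboxes': [[100.5, 100.25, 300.5, 200.25], [0.5, 0.25, 999.5, 499.75]], 'labels': ['camera', 'lens']}
```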

Additionally, we use auxiliary functions to visualize the results (draw_bbox, draw_ocr_bboxes and draw_polygons) and to convert between bounding box formats (convert_bbox_to_florence-2 and convert_florence-2_to_bbox). These can be explored in the attached Colab notebook.

Florence-2 can perform a variety of visual tasks. Let's explore some of its capabilities, starting with image captioning.

1. Caption Generation Tasks:

1.1 Generate Captions

Florence-2 can generate image captions at various levels of detail, using the '<CAPTION>', '<DETAILED_CAPTION>' or '<MORE_DETAILED_CAPTION>' task prompts.

print (run_example(picture, task_prompt=''))

# Output: 'A black digicam sitting on high of a picket desk.'print (run_example(picture, task_prompt=''))

# Output: 'The picture exhibits a black Kodak V35 35mm movie digicam sitting on high of a picket desk with a blurred background.'

print (run_example(picture, task_prompt=''))

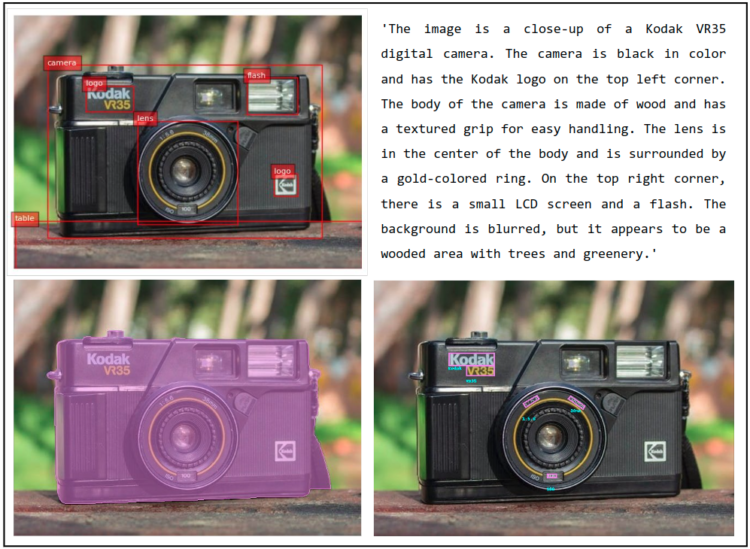

# Output: 'The picture is a close-up of a Kodak VR35 digital digicam. The digicam is black in colour and has the Kodak brand on the highest left nook. The physique of the digicam is made from wooden and has a textured grip for simple dealing with. The lens is within the middle of the physique and is surrounded by a gold-colored ring. On the highest proper nook, there's a small LCD display screen and a flash. The background is blurred, but it surely seems to be a wooded space with bushes and greenery.'

The model accurately describes the image and its surroundings, and even identifies the camera's brand and model, demonstrating its OCR ability. However, the '<MORE_DETAILED_CAPTION>' output contains minor inconsistencies (for example, it calls the film camera digital and describes its body as made of wood), which is expected from a zero-shot model.

1.2 Generate Caption for a Given Bounding Box

Florence-2 can generate captions for specific regions of an image defined by bounding boxes. For this, it takes the bounding box location as input. You can extract the region's category with '<REGION_TO_CATEGORY>' or a description with '<REGION_TO_DESCRIPTION>'.

For your convenience, I added a widget to the Colab notebook that lets you draw a bounding box on the image, along with code to convert it to the Florence-2 format.
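The conversion itself is simple arithmetic. Below is a minimal sketch of turning a pixel box into a Florence-2 region string, assuming 1000 bins per axis and floor quantization clamped to [0, 999]. `bbox_to_loc_str` is a hypothetical stand-in for the notebook's conversion helper, not its actual code.

```python
import math


def bbox_to_loc_str(bbox, image_size, num_bins=1000):
    """Convert a pixel box [x1, y1, x2, y2] into four '<loc_N>' tokens (hypothetical helper)."""
    width, height = image_size
    sizes = (width, height, width, height)
    # Scale each coordinate to the bin grid, floor it, and clamp to the valid bin range.
    bins = [min(max(math.floor(c * num_bins / s), 0), num_bins - 1) for c, s in zip(bbox, sizes)]
    return "".join(f"<loc_{b}>" for b in bins)


box_str = bbox_to_loc_str([100, 50, 300, 200], image_size=(1000, 500))
print(box_str)  # <loc_100><loc_100><loc_300><loc_400>
# With the tutorial's model loaded, the string would be passed as text_input, e.g.:
# run_example(image, '<REGION_TO_CATEGORY>', text_input=box_str)
```

Clamping matters at the right and bottom edges: a coordinate equal to the image width or height would otherwise map to bin 1000, which is outside the assumed 0..999 range.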

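```python
task_prompt = '<REGION_TO_CATEGORY>'
box_str = ''  # fill in: the region as four <loc_N> tokens, e.g. from the Colab widget
results = run_example(image, task_prompt, text_input=box_str)
# Output: 'camera lens'

task_prompt = '<REGION_TO_DESCRIPTION>'
box_str = ''  # fill in: the same region as four <loc_N> tokens
results = run_example(image, task_prompt, text_input=box_str)
# Output: 'camera'
```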

In this case, '<REGION_TO_CATEGORY>' identified the lens, while '<REGION_TO_DESCRIPTION>' was less specific. However, this behavior may vary with different images.

2. Object Detection Tasks:

2.1 Generate Bounding Boxes and Text for Objects

Florence-2 can identify densely packed regions in the image and provide their bounding box coordinates along with their labels or captions. To extract bounding boxes with labels, use the '<OD>' task prompt:

```python
results = run_example(image, task_prompt='<OD>')
draw_bbox(image, results['<OD>'])
```

To extract bounding boxes with captions, use the '<DENSE_REGION_CAPTION>' task prompt:

```python
results = run_example(image, task_prompt='<DENSE_REGION_CAPTION>')
draw_bbox(image, results['<DENSE_REGION_CAPTION>'])
```

2.2 Text-Grounded Object Detection

Florence-2 can also perform text-grounded object detection. Given specific object names or descriptions as input, Florence-2 detects bounding boxes around the specified objects.

```python
task_prompt = '<CAPTION_TO_PHRASE_GROUNDING>'
results = run_example(image, task_prompt, text_input="lens. camera. table. logo. flash.")
draw_bbox(image, results[task_prompt])
```
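When the list of objects comes from code rather than a hand-typed string, a small sketch like the one below can build the period-separated phrase input and regroup the returned boxes by phrase. It assumes the grounding result has the form {'bboxes': [...], 'labels': [...]} with one label per box; both helper names are hypothetical, and the result dictionary in the example is made up.

```python
from collections import defaultdict


def build_grounding_text(phrases):
    """Join object names into the period-separated form used above (hypothetical helper)."""
    return " ".join(f"{p}." for p in phrases)


def group_boxes_by_label(result):
    """Collect every returned box under its phrase; one phrase may match several boxes."""
    grouped = defaultdict(list)
    for box, label in zip(result["bboxes"], result["labels"]):
        grouped[label].append(box)
    return dict(grouped)


print(build_grounding_text(["lens", "camera", "table", "logo", "flash"]))
# lens. camera. table. logo. flash.

# Made-up result in the assumed {'bboxes': [...], 'labels': [...]} format:
fake = {"bboxes": [[1, 2, 3, 4], [5, 6, 7, 8], [9, 9, 9, 9]], "labels": ["lens", "camera", "lens"]}
print(group_boxes_by_label(fake))
# {'lens': [[1, 2, 3, 4], [9, 9, 9, 9]], 'camera': [[5, 6, 7, 8]]}
```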

3. Segmentation Tasks:

Florence-2 can also generate segmentation polygons grounded by text ('<REFERRING_EXPRESSION_SEGMENTATION>') or by bounding boxes ('<REGION_TO_SEGMENTATION>'):

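```python
task_prompt = '<REFERRING_EXPRESSION_SEGMENTATION>'
results = run_example(image, task_prompt, text_input="camera")
draw_polygons(image, results[task_prompt])

task_prompt = '<REGION_TO_SEGMENTATION>'
results = run_example(image, task_prompt, text_input="")  # fill in: the region as four <loc_N> tokens
draw_polygons(image, results[task_prompt])
```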
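Beyond drawing, the polygons can be measured directly. This sketch assumes the segmentation result has the form {'polygons': [...], 'labels': [...]}, where each instance is a list of polygons and each polygon is a flat [x1, y1, x2, y2, ...] coordinate list; it computes areas with the shoelace formula. The result dictionary in the example is made up, and the value is a polygon area, not a rasterized pixel count.

```python
def polygon_area(flat):
    """Shoelace area of one polygon given as a flat [x1, y1, x2, y2, ...] list."""
    points = list(zip(flat[0::2], flat[1::2]))
    twice_area = 0.0
    # Pair each vertex with the next one, wrapping around to close the polygon.
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def instance_areas(result):
    """Total polygon area per instance (an instance may consist of several polygons)."""
    return [sum(polygon_area(p) for p in polys) for polys in result["polygons"]]


# Made-up result in the assumed format: one instance made of a square and a triangle.
fake = {"polygons": [[[0, 0, 10, 0, 10, 10, 0, 10], [20, 20, 30, 20, 30, 30]]], "labels": [""]}
print(instance_areas(fake))  # [150.0]
```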

4. OCR Tasks:

Florence-2 demonstrates strong OCR capabilities. It can extract text from an image with the '<OCR>' task prompt, and extract both the text and its location with '<OCR_WITH_REGION>':

```python
task_prompt = '<OCR_WITH_REGION>'
results = run_example(image, task_prompt)
draw_ocr_bboxes(image, results[task_prompt])
```
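Text regions come back as four-point quadrilaterals rather than axis-aligned boxes. The sketch below assumes the OCR-with-region result has the form {'quad_boxes': [[x1, y1, ..., x4, y4], ...], 'labels': [...]}; it converts each quad to an enclosing box and orders the strings with a crude top-to-bottom, left-to-right heuristic. The helper names and the example data are hypothetical.

```python
def quad_to_bbox(quad):
    """Axis-aligned [x1, y1, x2, y2] box enclosing a 4-point quad [x1, y1, ..., x4, y4]."""
    xs, ys = quad[0::2], quad[1::2]
    return [min(xs), min(ys), max(xs), max(ys)]


def ocr_reading_order(result):
    """Return recognized strings sorted by top edge, then left edge (a crude heuristic)."""
    items = [(quad_to_bbox(q), text) for q, text in zip(result["quad_boxes"], result["labels"])]
    items.sort(key=lambda item: (item[0][1], item[0][0]))
    return [text for _, text in items]


# Made-up result in the assumed format:
fake = {
    "quad_boxes": [[10, 50, 60, 50, 60, 70, 10, 70], [12, 10, 80, 12, 80, 30, 12, 28]],
    "labels": ["BOTTOM", "TOP"],
}
print(quad_to_bbox(fake["quad_boxes"][1]))  # [12, 10, 80, 30]
print(ocr_reading_order(fake))  # ['TOP', 'BOTTOM']
```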

Florence-2 is a versatile Vision-Language Model (VLM), capable of handling multiple vision tasks within a single model. Its zero-shot performance is impressive across tasks such as image captioning, object detection, segmentation and OCR. While Florence-2 performs well out of the box, additional fine-tuning can further adapt the model to new tasks or improve its performance on unique, custom datasets.