Ever since I used to be a baby, I’ve been fascinated by drawing. What struck me was not solely the drawing act itself, but additionally the concept each drawing may very well be improved increasingly. I keep in mind reaching very excessive ranges with my drawing model. Nonetheless, as soon as I reached the height of perfection, I’d attempt to see how I might enhance the drawing even additional – alas, with disastrous outcomes.

From there I at all times bear in mind the identical mantra: “refine and iterate and also you’ll attain perfection”. At college, my method was to learn books many occasions, increasing my information looking for different sources, for locating hidden layers of that means in every idea. As we speak, I apply this similar philosophy to AI/ML and coding.

We all know that matrix multiplication (matmul for simplicity right here), is the core a part of any AI course of. Again up to now I developed LLM.rust, a Rust mirror of Karpathy’s LLM.c. The toughest level within the Rust implementation has been the matrix multiplication. Since now we have to carry out hundreds of iterations for fine-tuning a GPT-based mannequin, we want an environment friendly matmul operation. For this goal, I had to make use of the BLAS library, implementing an unsafe technique for overcoming the bounds and obstacles. The utilization of unsafe in Rust is in opposition to Rust’s philosophy, that’s why I’m at all times searching for safer strategies for enhance matmul on this context.

So, taking inspiration from Sam Altman’s assertion – “ask GPT learn how to create worth” – I made a decision to ask native LLMs to generate, benchmark, and iterate on their very own algorithms to create a greater, native Rust matmul implementation.

The problem has some constraints:

- We have to use our native surroundings. In my case, a MacBook Professional, M3, 36GB RAM;

- Overcome the bounds of tokens;

- Time and benchmark the code throughout the technology loop itself

I do know that attaining BLAS-level performances with this technique is sort of not possible, however I need to spotlight how we are able to leverage AI for customized wants, even with our “tiny” laptops, in order that we are able to unblock concepts and push boundaries in any area. This submit desires to be an inspiration for practitioners, and individuals who need to get extra acquainted with Microsoft Autogen, and native LLM deployment.

All of the cod implementation may be discovered on this Github repo. That is an on-going experiment, and plenty of adjustments/enhancements will likely be dedicated.

Common thought

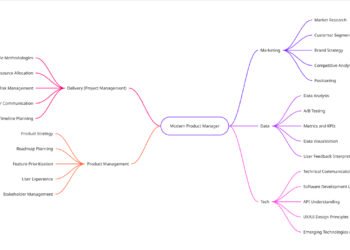

The general thought is to have a roundtable of brokers. The start line is the MrAderMacher Mixtral 8x7B mannequin This fall K_M native mannequin. From the mannequin we create 5 entities:

- the

Proposercomes up with a brand new Strassen-like algorithm, to discover a higher and extra environment friendly strategy to carry out matmul; - the

Verifierevaluations the matmul formulation via symbolic math; - the

Codercreates the underlying Rust code; - the

Testerexecutes it and saves all the data to the vector database; - the

Supervisoracts silently, controlling the general workflow.

| Agent | Position operate |

| Proposer | Analyses benchmark occasions, and it proposes new tuning parameters and matmul formulations. |

| Verifier | (Presently disabled within the code). It verifies the proposer’s mathematical formulation via symbolic verification. |

| Coder | It takes the parameters, and it really works out the Rust template code. |

| Tester | It runs the Rust code, it saves the code and computes the benchmark timing. |

| Supervisor | General management of the workflow. |

The general workflow may be orchestrated via Microsoft Autogen as depicted in fig.1.

Put together the enter knowledge and vector database

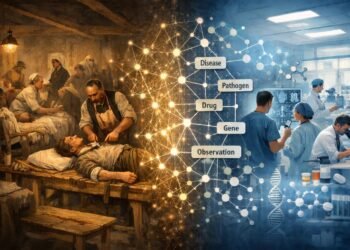

The enter knowledge is collected from all tutorial papers, targeted on matrix multiplication optimisation. Many of those papers are referenced in, and associated to, DeepMind’s Strassen paper. I need to begin merely, so I collected 50 papers, revealed from 2020 until 2025, that particularly tackle matrix multiplication.

Subsequent, I’ve used chroma to create the vector database. The important side in producing a brand new vector database is how the PDFs are chunked. On this context, I used a semantic chunker. Otherwise from break up textual content strategies, the semantic chunker makes use of the precise that means of the textual content, to find out the place to chop. The aim is to maintain the associated sentences collectively in a single chunk, making the ultimate vector database extra coherent and correct. That is accomplished utilizing the native mannequin BAAI/bge-base-en-v1.5. The Github gist beneath reveals the total implementation.

The core code: autogen-core and GGML fashions

I’ve used Microsoft Autogen, particularly the autogen-core variant (model 0.7.5). Otherwise from the higher-level chat, in autogen-core we are able to have entry to low-level event-driven constructing blocks, which are essential to create a state-machine-driven workflow as we want. As a matter of reality, the problem is to keep up a strict workflow. All of the appearing brokers should act in a selected order: Proposer –> Verifier –> Coder –> Tester.

The core half is the BaseMatMulAgent, that inherits from AutoGen’s RoutedAgent. This base class permits us to standardise how LLM brokers will participate within the chat, and they’re going to behave.

From the code above, we are able to see the category is designed to take part in an asynchronous group chat, dealing with dialog historical past, calls to exterior instruments and producing responses via the native LLM.

The core element is @message_handler, a decorator that registers a way as listener or subscriber , primarily based on the message sort. The decorator mechanically detects the kind trace of the primary technique’s argument – in our case is message: GroupChatMessage. It then subscribes the agent to obtain any occasions of that sort despatched to the agent’s subject. The handle_message async technique is then accountable for updating the agent’s inside reminiscence, with out producing a response.

With the listener-subscriber mechanism is in place, we are able to deal with the Supervisor class. The MatMulManager inherits RoutedAgent and orchestrates the general brokers’ circulate.

The code above handles all of the brokers. We’re skipping the Verifier half, for the second. The Coder publish the ultimate code, and the Tester takes care of saving each the code and the entire context to the Vector Database. On this approach, we are able to keep away from consuming all of the tokens of our native mannequin. At every new run, the mannequin will catch-up on the most recent generated algorithms from the vector database and suggest a brand new answer.

An important caveat, for ensuring autogen-core can work with llama fashions on MacOS, make use of the next snippet:

#!/bin/bash

CMAKE_ARGS="-DGGML_METAL=on" FORCE_CMAKE=1 pip set up --upgrade --verbose --force-reinstall llama-cpp-python --no-cache-dirFig.2 summarises all the code. We are able to roughly subdivide the code into 3 essential blocks:

- The

BaseAgent, that handles messages via LLM’s brokers, evaluating the mathematical formulation and producing code; - The

MatMulManagerorchestrates all the brokers’ circulate; autogen_core.SingleThreadedAgentRuntimepermits us to make all the workflow a actuality.

autogen_core.SingleThreadedAgentRuntime makes all of this to work on our MacBook PRO. [Image created with Nano Banana Pro.]Outcomes and benchmark

All of the Rust code has been revised and re-run manually. Whereas the workflow is powerful, working with LLMs requires a important eye. A number of occasions the mannequin confabulated*, producing code that appeared optimised however didn’t carry out the precise matmul work.

The very first iteration generates a form of Strassen-like algorithm (“Run 0” code within the fig.3):

The mannequin thinks of higher implementations, extra Rust-NEON like, in order that after 4 iterations it provides the next code (“Run 3” in fig.3):

We are able to see the utilization of capabilities like vaddq_f32, particular CPU instruction for ARM processors, coming from std::arch::aarch64. The mannequin manages to make use of rayon to separate the workflow throughout a number of CPU cores, and contained in the parallel threads it makes use of NEON intrinsics. The code itself isn’t completely appropriate, furthermore, I’ve seen that we’re working into an out-of-memory error when coping with 1024×1024 matrices. I needed to manually re-work out the code to make it work.

This brings us again to our my mantra “iterating to perfection”, and we are able to ask ourselves: ‘can a neighborhood agent autonomously refine Rust code to the purpose of mastering advanced NEON intrinsics?’. The findings present that sure, even on shopper {hardware}, this degree of optimisation is achievable.

Fig.3 reveals the ultimate outcomes I’ve obtained after every iterations.

The 0th and 2nd benchmark have some errors, as it’s bodily not possible to realize such a outcomes on a 1024×1024 matmul on a CPU:

- the primary code suffers from a diagonal fallacy, so the code is computing solely diagonal blocks of the matrix and it’s ignoring the remainder;

- the second code has a damaged buffer, as it’s repeatedly overwriting a small, cache-hot buffer 1028 floats, relatively than transversing the total 1 million components.

Nonetheless, the code produced two actual code, the run 1 and run 3. The primary iteration achieves 760 ms, and it constitutes an actual baseline. It suffers from cache misses and lack of SIMD vectorisation. The run 3 data 359 ms, the development is the implementation of NEON SIMD and Rayon parallelism.

*: I wrote “the mannequin confabulates” on functions. From a medical point-of-view, all of the LLMs aren’t hallucinating, however confabulating. Hallucinations are a completely totally different scenario w.r.t what LLMs are doing when babbling and producing “incorrect” solutions.

Conclusions

This experiment began with a query that appeared an not possible problem: “can we use consumer-grade native LLMs to find high-performance Rust algorithms that may compete with BLAS implementation?”.

We are able to say sure, or a minimum of now we have a legitimate and strong background, the place we are able to construct up higher code to realize a full BLAS-like code in Rust.

The submit confirmed learn how to work together with Microsoft Autogen, autogen-core, and learn how to create a roundtable of brokers.

The bottom mannequin in use comes from GGUF, and it could run on a MacBook Professional M3, 36GB.

In fact, we didn’t discover (but) something higher than BLAS in a single easy code. Nonetheless, we proved that native agentic workflow, on a MacBook Professional, can obtain what was beforehand thought to require a large cluster and large fashions. Finally, the mannequin managed to discover a cheap Rust-NEON implementation, “Run 3 above”, that has a velocity up of over 50% on commonplace Rayon implementation. We should spotlight that the spine implementation was AI generated.

The frontier is open. I hope this blogpost can encourage you in making an attempt to see what limits we are able to overcome with native LLM deployment.

I’m penning this in a private capability; these views are my very own.