BERT is a transformer-based model for NLP tasks that was released by Google in 2018. It has proven useful for a wide range of NLP tasks. In this article, we’ll review the architecture of BERT and how it is trained. Then, you’ll learn about some of its variants that were released later.

Let’s get started.

BERT Models and Its Variants.

Photo by Nastya Dulhiier. Some rights reserved.

Overview

This article is divided into two parts; they are:

- Architecture and Training of BERT

- Variations of BERT

Architecture and Training of BERT

BERT is an encoder-only model. Its architecture is shown in the figure below.

The BERT structure

While BERT uses a stack of transformer blocks, its key innovation is in how it is trained.

According to the original paper, the primary training objective is to predict the masked words in the input sequence. This is a masked language model (MLM) task. The input to the model is a sequence of tokens in the format:

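[CLS] <sentence_1> [SEP] <sentence_2> [SEP]

where <sentence_1> and <sentence_2> are two sentences (or spans of text), [CLS] is a special token at the start of the sequence, and [SEP] marks the end of each sentence. The [CLS] token serves as a placeholder at the beginning, and it is where the model learns the representation of the entire sequence.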
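As a minimal sketch, packing two already-tokenized sentences into this format can be written in plain Python. Real BERT tokenizers split text into WordPiece sub-word units and map them to integer IDs; here the tokens are plain strings, and `build_bert_input` is a hypothetical helper that only shows where the special tokens go and how each position gets a segment label (0 for the first sentence, 1 for the second).

```python
def build_bert_input(tokens_a, tokens_b):
    """Pack two tokenized sentences into the [CLS] ... [SEP] ... [SEP] format.

    Returns the packed tokens and a segment label (0 or 1) per position.
    """
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    # [CLS], sentence 1, and the first [SEP] are segment 0;
    # sentence 2 and the final [SEP] are segment 1
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


tokens, segment_ids = build_bert_input(["the", "cat", "sat"], ["it", "was", "tired"])
print(tokens)       # ['[CLS]', 'the', 'cat', 'sat', '[SEP]', 'it', 'was', 'tired', '[SEP]']
print(segment_ids)  # [0, 0, 0, 0, 0, 1, 1, 1, 1]
```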

Unlike common LLMs, BERT is not a causal model. It can see the entire sequence, and the output at any position depends on both the left and right context. This makes BERT suitable for NLP tasks such as part-of-speech tagging. The model is trained by minimizing the loss metric:

$$\text{loss} = \text{loss}_{\text{MLM}} + \text{loss}_{\text{NSP}}$$

The first term is the loss for the masked language model (MLM) task and the second term is the loss for the next sentence prediction (NSP) task. In particular,

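- MLM task: Any token in <sentence_1> or <sentence_2> can be selected for masking, and the model should predict the original token at each selected position. In the original paper, 15% of the tokens are selected, and each selected token is handled in one of three ways:

  - The token is replaced with the [MASK] token (80% of the time). The model should recognize this special token and predict the original token.

  - The token is replaced with a random token from the vocabulary (10% of the time). The model should identify this replacement and predict the original token.

  - The token is unchanged (10% of the time), and the model should predict that it is unchanged.

- NSP task: The model is supposed to predict whether <sentence_2> is the actual sentence that follows <sentence_1>. In half of the training samples it is, and in the other half it is a random sentence from the corpus. This prediction is made from the output at the [CLS] token at the beginning of the sequence.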
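The masking rule can be sketched in Python. The 15% selection rate and the 80/10/10 split follow the BERT paper; for simplicity, this sketch selects each position independently with probability `mask_prob`, which is an approximation of the original procedure. The function name `mask_tokens`, the `SPECIAL_TOKENS` set, and the toy vocabulary are illustrative assumptions, not part of any library.

```python
import random

SPECIAL_TOKENS = {"[CLS]", "[SEP]"}


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """BERT-style masking on a list of string tokens (simplified sketch).

    Returns the corrupted tokens and a dict {position: original token}
    holding the MLM prediction targets.
    """
    if rng is None:
        rng = random.Random()
    corrupted = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        # special tokens are never selected; other tokens with probability mask_prob
        if tok in SPECIAL_TOKENS or rng.random() >= mask_prob:
            continue
        targets[i] = tok
        r = rng.random()
        if r < 0.8:
            corrupted[i] = "[MASK]"           # 80%: replace with [MASK]
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: leave the token unchanged
    return corrupted, targets


rng = random.Random(0)
tokens = ["[CLS]", "the", "cat", "sat", "[SEP]", "it", "was", "tired", "[SEP]"]
vocab = ["the", "cat", "sat", "it", "was", "tired", "dog", "ran"]
corrupted, targets = mask_tokens(tokens, vocab, rng=rng)
```

Note that the targets record the original token even when it was left unchanged, so the model is scored on every selected position, not only on the visible [MASK] tokens.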

Hence, the training data contains not only the text but also additional labels. Each training sample contains:

- A sequence of masked tokens: [CLS] <sentence_1> [SEP] <sentence_2> [SEP], with some tokens replaced according to the rules above

- Segment labels (0 or 1) to distinguish between the first and second sentences

- A boolean label indicating whether <sentence_2> actually follows <sentence_1>

- A list of masked positions and their corresponding original tokens

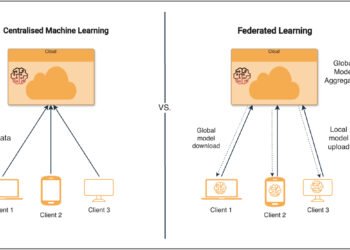
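Given these labels, the combined loss $\text{loss}_{\text{MLM}} + \text{loss}_{\text{NSP}}$ can be sketched with NumPy. The MLM term is the cross-entropy of the vocabulary predictions at the masked positions only, and the NSP term is a two-class cross-entropy on the prediction made at the [CLS] position. The array shapes, the function names, and the convention that index 1 of the NSP output means "is next" are assumptions for illustration; the logits are random placeholders standing in for model outputs.

```python
import numpy as np


def log_softmax(logits):
    # subtract the row maximum for numerical stability
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def bert_pretraining_loss(mlm_logits, masked_positions, masked_token_ids,
                          nsp_logits, is_next):
    """Sketch of loss = loss_MLM + loss_NSP for one training sample.

    mlm_logits:       (seq_len, vocab_size) scores at every position
    masked_positions: indices of the positions selected for masking
    masked_token_ids: original token IDs at those positions
    nsp_logits:       (2,) scores from the [CLS] position; index 1 means "is next"
    is_next:          the boolean NSP label
    """
    # MLM term: mean cross-entropy over the masked positions only
    log_probs = log_softmax(mlm_logits[masked_positions])
    loss_mlm = -log_probs[np.arange(len(masked_positions)), masked_token_ids].mean()
    # NSP term: two-class cross-entropy on the [CLS] prediction
    loss_nsp = -log_softmax(nsp_logits)[int(is_next)]
    return loss_mlm + loss_nsp


rng = np.random.default_rng(0)
mlm_logits = rng.normal(size=(9, 100))  # 9 positions, toy vocabulary of 100 tokens
nsp_logits = rng.normal(size=2)
loss = bert_pretraining_loss(mlm_logits, [2, 6], [17, 42], nsp_logits, True)
print(loss)
```

With all-zero logits, the model is maximally uncertain, and the loss reduces to $\log(\text{vocab size}) + \log 2$, which is a handy sanity check.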

This training approach teaches the model to analyze the entire sequence and understand each token in context. As a result, BERT excels at understanding text but is not trained for text generation. For example, BERT can extract relevant portions of text to answer a question, but cannot rewrite the answer in a different tone. This training with the MLM and NSP objectives is called pre-training, after which the model can be fine-tuned for specific applications.

BERT pre-training and fine-tuning. Figure from the BERT paper.
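One common way to fine-tune BERT for a sequence-level task, such as sentiment classification, is to feed the final hidden state at the [CLS] position into a small task-specific output layer and train it together with the pre-trained weights. The NumPy sketch below shows only the forward pass of such a head. The hidden states are random stand-ins for the encoder output, and `W`, `b`, `classify_from_cls`, and the three classes are hypothetical. The released BERT models also pass the [CLS] vector through a small "pooler" layer first, which is omitted here.

```python
import numpy as np


def classify_from_cls(hidden_states, W, b):
    """Turn the [CLS] output vector into class probabilities.

    hidden_states: (seq_len, d) encoder outputs; [CLS] is at position 0.
    W, b: hypothetical task-specific weights of shape (d, C) and (C,).
    """
    logits = hidden_states[0] @ W + b
    exp = np.exp(logits - logits.max())  # numerically stable softmax
    return exp / exp.sum()


rng = np.random.default_rng(0)
seq_len, d, num_classes = 9, 768, 3
hidden_states = rng.normal(size=(seq_len, d))      # stand-in for BERT's output
W = rng.normal(scale=0.02, size=(d, num_classes))  # new, randomly initialized head
b = np.zeros(num_classes)
probs = classify_from_cls(hidden_states, W, b)
print(probs.shape)  # (3,)
```

Only the [CLS] vector reaches the head. This is why pre-training pushes a summary of the whole sequence into that position.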

Variations of BERT

BERT consists of $L$ stacked transformer blocks. Key hyperparameters of the model include the size of the hidden dimension $d$ and the number of attention heads $h$. The original base BERT model has $L = 12$, $d = 768$, and $h = 12$, while the large model has $L = 24$, $d = 1024$, and $h = 16$.
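To see how $L$ and $d$ translate into model size, here is a rough parameter count in Python. It relies on details not stated above, taken from the published BERT configuration: a 30,522-token WordPiece vocabulary, 512 position embeddings, 2 segment embeddings, a feed-forward width of $4d$, and a pooler layer on the [CLS] output. The MLM and NSP output heads are not counted. The number of heads $h$ does not appear because splitting the attention projections into $h$ heads of size $d/h$ leaves the total size unchanged.

```python
def bert_param_count(L, d, vocab_size=30522, max_positions=512,
                     n_segments=2, ffn_mult=4):
    """Approximate parameter count of a BERT encoder plus pooler.

    Assumed details (not stated in the text): WordPiece vocabulary size,
    position/segment embedding sizes, and a feed-forward width of 4*d.
    The pre-training MLM/NSP output heads are not counted.
    """
    ffn = ffn_mult * d
    # token + position + segment embeddings, plus a LayerNorm (scale and shift)
    embeddings = (vocab_size + max_positions + n_segments) * d + 2 * d
    # Q, K, V, and output projections, each a d x d matrix with a bias;
    # splitting them into h heads does not change this count
    attention = 4 * (d * d + d)
    # two-layer feed-forward network: d -> ffn -> d, with biases
    feed_forward = (d * ffn + ffn) + (ffn * d + d)
    # two LayerNorms per block, each with a scale and a shift vector
    block = attention + feed_forward + 2 * (2 * d)
    # pooler: a d x d dense layer applied to the [CLS] output
    pooler = d * d + d
    return embeddings + L * block + pooler


print(f"{bert_param_count(12, 768):,}")   # 109,482,240
print(f"{bert_param_count(24, 1024):,}")  # 335,141,888
```

These totals are consistent with the roughly 110M and 340M parameters reported in the BERT paper. In the base model, the token embedding table alone (30,522 × 768) holds about 23.4M of them.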

Since BERT’s success, several variations have been developed. The simplest is RoBERTa, which keeps the same architecture but uses Byte-Pair Encoding (BPE) instead of WordPiece for tokenization. RoBERTa trains on a larger dataset with larger batch sizes and more epochs. The training uses only the MLM loss, without the NSP loss. This showed that the original BERT model was under-trained: improved training techniques and more data can enhance performance without increasing model size.

ALBERT (“A Lite BERT”) is a lighter version of BERT with fewer parameters. It introduces two techniques to reduce model size. The first is factorized embedding: the embedding matrix transforms input integer tokens into smaller embedding vectors, which a projection matrix then transforms into the larger final embedding vectors used by the transformer blocks. This can be understood as:

$$
M = \begin{bmatrix}
m_{11} & m_{12} & \cdots & m_{1N} \\
m_{21} & m_{22} & \cdots & m_{2N} \\
\vdots & \vdots & \ddots & \vdots \\
m_{d1} & m_{d2} & \cdots & m_{dN}
\end{bmatrix}
= N M' = \begin{bmatrix}
n_{11} & n_{12} & \cdots & n_{1k} \\
n_{21} & n_{22} & \cdots & n_{2k} \\
\vdots & \vdots & \ddots & \vdots \\
n_{d1} & n_{d2} & \cdots & n_{dk}
\end{bmatrix}
\begin{bmatrix}
m'_{11} & m'_{12} & \cdots & m'_{1N} \\
m'_{21} & m'_{22} & \cdots & m'_{2N} \\
\vdots & \vdots & \ddots & \vdots \\
m'_{k1} & m'_{k2} & \cdots & m'_{kN}
\end{bmatrix}
$$

Here, $N$ is the $d \times k$ projection matrix and $M'$ is the $k \times N$ embedding matrix with the smaller dimension size $k$. With a slight abuse of notation, $N$ also denotes the vocabulary size, that is, the number of columns. When a token is input, the embedding matrix serves as a lookup table for the corresponding embedding vector. The model still operates on the larger dimension size $d > k$, but with the projection matrix, the total number of parameters is $dk + kN = k(d+N)$. This is drastically smaller than the $dN$ parameters of a full embedding matrix when $k$ is sufficiently small.
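A small NumPy sketch makes the factorization concrete. The sizes are assumptions chosen to resemble ALBERT’s setup (a vocabulary of 30,000 tokens, $k = 128$, $d = 768$), and the matrices are random rather than trained. Following the notation above, `M_small` plays the role of $M'$ (shape $k \times N$) and `proj` is the $d \times k$ projection matrix.

```python
import numpy as np

vocab_size, d, k = 30_000, 768, 128  # assumed sizes for illustration
rng = np.random.default_rng(0)

M_small = rng.normal(size=(k, vocab_size))  # M': one k-dim column per token
proj = rng.normal(size=(d, k))              # projection matrix (d x k)


def embed(token_ids):
    """Look up k-dim embeddings, then project them up to d dimensions."""
    small = M_small[:, token_ids]  # (k, n_tokens): table lookup
    return proj @ small            # (d, n_tokens)


vectors = embed([5, 42, 999])
print(vectors.shape)  # (768, 3)

full_params = d * vocab_size              # a full d x N embedding matrix
factorized_params = k * (d + vocab_size)  # d*k + k*N
print(f"{full_params:,} vs {factorized_params:,}")  # 23,040,000 vs 3,938,304
```

The full product $N M'$ is never materialized. Each token’s $d$-dimensional vector is computed on demand, so only the two smaller matrices need to be stored.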

The second technique is cross-layer parameter sharing. While BERT uses a stack of transformer blocks that are identical in design, ALBERT enforces that they are also identical in parameters. Essentially, the model processes the input sequence through the same transformer block $L$ times instead of through $L$ different blocks. This greatly reduces the number of parameters, though not the amount of computation, since the block is still applied $L$ times, while only slightly degrading model performance.
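The difference between BERT-style stacking and ALBERT-style sharing can be sketched in NumPy. To keep the example short, each "transformer block" is replaced by a toy residual feed-forward layer. This stand-in is an assumption, not the real attention-plus-feed-forward block. The point is that the shared model applies one set of weights $L$ times, so it stores one block’s parameters instead of $L$ while still performing $L$ block computations.

```python
import numpy as np

rng = np.random.default_rng(0)
L, d = 12, 64  # toy sizes


def make_block():
    # toy stand-in for a transformer block: one weight matrix and a bias
    return {"W": rng.normal(scale=0.02, size=(d, d)), "b": np.zeros(d)}


def apply_block(block, x):
    # residual connection around a ReLU feed-forward layer
    return x + np.maximum(0.0, x @ block["W"] + block["b"])


def n_params(blocks):
    return sum(p.size for blk in blocks for p in blk.values())


bert_blocks = [make_block() for _ in range(L)]  # L independent blocks
albert_block = make_block()                     # one block shared by all layers

x = rng.normal(size=(5, d))  # 5 token vectors
h_bert = x
for blk in bert_blocks:
    h_bert = apply_block(blk, h_bert)

h_albert = x
for _ in range(L):
    h_albert = apply_block(albert_block, h_albert)  # same weights every time

print(n_params(bert_blocks), n_params([albert_block]))  # 49920 4160
```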

DistilBERT uses the same architecture as BERT but is trained through distillation. A larger teacher model is first trained to perform well, then a smaller student model is trained to mimic the teacher’s output. The DistilBERT paper claims the student model achieves 97% of the teacher’s performance with only 60% of the parameters.

In DistilBERT, the student and teacher models have the same dimension size and number of attention heads, but the student has half the number of transformer layers. The student is trained to match its layer outputs to the teacher’s layer outputs. The loss metric combines three components:

- Language modeling loss: The original MLM loss metric used in BERT

- Distillation loss: KL divergence between the softmax outputs of the student model and the teacher model

- Cosine distance loss: Cosine distance between the hidden states of each layer in the student model and the corresponding layer in the teacher model (every other teacher layer, since the student has half as many)
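The three loss components can be sketched in NumPy. Following the DistilBERT paper, the teacher and student softmax outputs are softened with a temperature $T$. The value of $T$, the $T^2$ scaling (a common convention in this style of distillation), the weights used to combine the three terms, and all array shapes below are illustrative assumptions. The logits and hidden states are random stand-ins for model outputs. For brevity, the cosine term compares a single pair of hidden-state matrices; under the layer pairing described above, it would be applied to each paired student and teacher layer.

```python
import numpy as np


def log_softmax(z, T=1.0):
    # temperature-scaled log-softmax, shifted by the max for stability
    z = z / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def mlm_loss(student_logits, target_ids):
    # ordinary cross-entropy against the true tokens at temperature 1
    log_p = log_softmax(student_logits)
    return -np.mean(log_p[np.arange(len(target_ids)), target_ids])


def distillation_loss(student_logits, teacher_logits, T=2.0):
    # KL(teacher || student) between temperature-softened distributions,
    # averaged over positions and scaled by T**2
    log_p_t = log_softmax(teacher_logits, T)
    log_p_s = log_softmax(student_logits, T)
    kl = np.sum(np.exp(log_p_t) * (log_p_t - log_p_s), axis=-1)
    return np.mean(kl) * T**2


def cosine_distance_loss(student_hidden, teacher_hidden):
    # 1 - cosine similarity for each token's hidden-state vector, averaged
    dot = np.sum(student_hidden * teacher_hidden, axis=-1)
    norms = (np.linalg.norm(student_hidden, axis=-1)
             * np.linalg.norm(teacher_hidden, axis=-1))
    return np.mean(1.0 - dot / norms)


rng = np.random.default_rng(0)
n_tokens, vocab_size, d = 6, 50, 32  # toy sizes
student_logits = rng.normal(size=(n_tokens, vocab_size))
teacher_logits = rng.normal(size=(n_tokens, vocab_size))
student_hidden = rng.normal(size=(n_tokens, d))
teacher_hidden = rng.normal(size=(n_tokens, d))
targets = rng.integers(0, vocab_size, size=n_tokens)

# hypothetical weights for combining the three terms
w_mlm, w_kd, w_cos = 1.0, 1.0, 1.0
total = (w_mlm * mlm_loss(student_logits, targets)
         + w_kd * distillation_loss(student_logits, teacher_logits)
         + w_cos * cosine_distance_loss(student_hidden, teacher_hidden))
print(total)
```

The distillation and cosine terms both fall to zero when the student reproduces the teacher exactly. The MLM term keeps the student anchored to the true tokens.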

These multiple loss components provide additional guidance during distillation, resulting in better performance than training the student model independently.


Abstract

This article covered BERT’s architecture and training approach, including the MLM and NSP objectives. It also presented several important variations: RoBERTa (improved training), ALBERT (parameter reduction), and DistilBERT (knowledge distillation). These models offer different trade-offs between performance, size, and computational efficiency for various NLP applications.