10 Python One-Liners for Calculating Model Feature Importance

Image by Editor

Understanding machine learning models is a vital aspect of building trustworthy AI systems. The understandability of such models rests on two basic properties: explainability and interpretability. The former refers to how well we can describe a model's "innards" (i.e. how it operates and looks internally), while the latter concerns how easily humans can understand the captured relationships between input features and predicted outputs. The difference between them is subtle, but there is a powerful bridge connecting both: feature importance.

This article unveils 10 simple but effective Python one-liners to calculate model feature importance from different perspectives, helping you understand not only how your machine learning model behaves, but also why it made the prediction(s) it did.

1. Built-in Feature Importance in Decision Tree-based Models

Tree-based models like random forests and XGBoost ensembles allow you to easily obtain a list of feature-importance weights using an attribute like:

    importances = model.feature_importances_

Note that model must already hold a trained (fitted) model. The result is an array containing the importance of each feature, but if you want a more self-explanatory version, this code enhances the previous one-liner by incorporating the feature names for a dataset like iris, all in one line.

    print("Feature importances:", list(zip(iris.feature_names, model.feature_importances_)))
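For context, here is a minimal end-to-end sketch of the one-liner above. It assumes scikit-learn is installed; the choice of RandomForestClassifier, the number of trees, and the fixed random_state are illustrative assumptions, not part of the original recipe.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

# Load iris and fit a small forest; random_state is fixed only for repeatability.
iris = load_iris()
model = RandomForestClassifier(n_estimators=100, random_state=0)
model.fit(iris.data, iris.target)

# Impurity-based importances: one non-negative weight per feature, summing to 1.
importances = model.feature_importances_
for name, weight in zip(iris.feature_names, importances):
    print(f"{name}: {weight:.3f}")
```

Because these weights are normalized to sum to 1, they are best read as relative shares rather than absolute effect sizes.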

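2. Coefficients in Linear Models

Simpler linear models like linear regression and logistic regression also expose feature weights via learned coefficients. In scikit-learn, a classifier such as logistic regression stores coef_ with one row per class (a single row in the binary case), so coef_[0] selects the first row of weights directly and neatly. Remove the positional index to obtain all rows, or when coef_ is already one-dimensional, as in single-target linear regression: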

    importances = abs(model.coef_[0])
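A short sketch of this idea on a binary classification problem follows. The breast cancer dataset, the StandardScaler step, and the pipeline are assumptions added for illustration; standardizing first is a common precaution, since raw coefficient magnitudes are only comparable when the features share a scale.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

data = load_breast_cancer()
X, y = data.data, data.target

# Standardize so coefficient magnitudes are comparable across features.
pipeline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
pipeline.fit(X, y)
model = pipeline.named_steps["logisticregression"]

# Binary classification: coef_ has shape (1, n_features), so [0] is the full weight vector.
importances = abs(model.coef_[0])
top = max(zip(data.feature_names, importances), key=lambda pair: pair[1])
print("Largest |coefficient|:", top)
```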

3. Sorting Features by Importance

Similar to the enhanced version of number 1 above, this useful one-liner ranks features by their importance values in descending order, giving a quick glimpse of which features are the strongest or most influential contributors to model predictions.

    sorted_features = sorted(zip(features, importances), key=lambda x: x[1], reverse=True)
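To see the ranking step in isolation, the sketch below applies it to a handful of made-up values. The feature names and weights are hypothetical and exist only to show the mechanics.

```python
# Hypothetical names and weights, used only to show the ranking step.
features = ["age", "income", "tenure", "clicks"]
importances = [0.10, 0.45, 0.05, 0.40]

# Pair each name with its weight, then sort by the weight (index 1), largest first.
sorted_features = sorted(zip(features, importances), key=lambda x: x[1], reverse=True)

for rank, (name, weight) in enumerate(sorted_features, start=1):
    print(f"{rank}. {name}: {weight:.2f}")
```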

4. Model-Agnostic Permutation Importance

Permutation importance is an additional approach to measuring a feature's importance: shuffle the feature's values and analyze how much a metric of the model's performance (e.g. accuracy or error) degrades. Accordingly, this model-agnostic one-liner from scikit-learn measures the performance drop caused by randomly shuffling each feature's values.

    from sklearn.inspection import permutation_importance
    result = permutation_importance(model, X, y).importances_mean
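A slightly fuller sketch of the same call is shown below. The train/test split, the random forest, and the n_repeats and random_state values are assumptions chosen for illustration; scoring on held-out data is a common choice because it reflects what the model relies on when generalizing.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True, as_frame=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0, stratify=y)

model = RandomForestClassifier(random_state=0).fit(X_train, y_train)

# Shuffle each column n_repeats times on the held-out split and record the score drop.
result = permutation_importance(model, X_test, y_test, n_repeats=10, random_state=0)

for name, mean, std in zip(X.columns, result.importances_mean, result.importances_std):
    print(f"{name}: {mean:.3f} +/- {std:.3f}")
```

The returned object also exposes importances_std and the raw per-repeat importances, which help judge whether a small mean drop is distinguishable from noise.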

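5. Mean Loss of Accuracy in Cross-Validation Permutations

This is a compact one-liner to test permutations in the context of cross-validation, analyzing how shuffling each feature impacts model performance across K folds. Note that the resulting list holds the mean cross-validated score after each shuffle, so lower values point to more important features; subtract each value from the score obtained on the unshuffled data to express it as a loss.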

    import numpy as np
    from sklearn.model_selection import cross_val_score
    # .values passes a plain array, so pandas cannot realign the shuffled column by index
    importances = [cross_val_score(model, X.assign(**{f: np.random.permutation(X[f].values)}), y).mean() for f in X.columns]
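The sketch below turns the shuffled scores into an actual loss by subtracting them from a baseline. The dataset, the model, cv=5, and the seeded generator are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

X, y = load_iris(return_X_y=True, as_frame=True)
model = RandomForestClassifier(n_estimators=50, random_state=0)
rng = np.random.default_rng(0)  # seeded generator so the shuffles are repeatable

# Mean cross-validated accuracy with all features intact.
baseline = cross_val_score(model, X, y, cv=5).mean()

# For each feature, shuffle its column, re-run cross-validation, and record the loss.
drops = {
    f: baseline - cross_val_score(
        model, X.assign(**{f: rng.permutation(X[f].to_numpy())}), y, cv=5
    ).mean()
    for f in X.columns
}

for name, drop in sorted(drops.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {drop:+.3f}")
```

Unlike permutation_importance, this variant refits the model in every fold on data where the column is already shuffled, so it measures how much the model loses when it cannot learn from that feature at all.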

6. Permutation Importance Visualizations with ELI5

ELI5, short for "Explain like I'm 5 (years old)", is, in the context of Python machine learning, a library for crystal-clear explainability. It provides a lightly interactive HTML view of feature importances, making it particularly useful in notebooks and suitable for trained linear or tree models alike.

    import eli5
    eli5.show_weights(model, feature_names=features)

7. Global SHAP Feature Importance

SHAP is a popular and powerful library for digging deeper into model feature importance. It can be used to calculate mean absolute SHAP values (the feature-importance indicators in SHAP) for each feature, all under a theoretically grounded measurement approach. The SHAP framework itself is model-agnostic, although the TreeExplainer used here is specialized for tree-based models.

    import numpy as np
    import shap
    shap_values = shap.TreeExplainer(model).shap_values(X)
    importances = np.abs(shap_values).mean(0)
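To make "mean absolute SHAP value" more concrete without depending on the shap package, here is a NumPy-only sketch for the special case of a linear model. It relies on the known closed form for linear models with features treated as independent, where the contribution of feature j on row i is coef_j * (x_ij - mean_j). The diabetes dataset and the variable names are assumptions for illustration; this is not how TreeExplainer computes its values.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression

X, y = load_diabetes(return_X_y=True, as_frame=True)
model = LinearRegression().fit(X, y)

# Closed-form contributions for a linear model (features treated as independent):
# contribution of feature j on row i = coef_j * (x_ij - mean_j).
shap_like = (X - X.mean()).to_numpy() * model.coef_
expected_value = model.predict(X).mean()

# Global importance: mean absolute contribution per feature.
importances = np.abs(shap_like).mean(0)

# Additivity check: base value plus contributions reproduces each prediction.
reconstructed = expected_value + shap_like.sum(axis=1)
print(np.allclose(reconstructed, model.predict(X)))
```

The additivity check at the end mirrors the property that makes SHAP values interpretable: every prediction decomposes into a base value plus one contribution per feature.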

8. Summary Plot of SHAP Values

Unlike global SHAP feature importances, the summary plot shows not only the global importance of features in a model, but also their direction, helping you see how feature values push predictions upward or downward.

    shap.summary_plot(shap_values, X)

Let's look at a visual example of the result obtained:

9. Single-Prediction Explanations with SHAP

One particularly attractive aspect of SHAP is that it helps explain not only the overall model behavior and feature importances, but also how features specifically influence a single prediction. In other words, we can decompose an individual prediction, explaining how and why the model yielded that specific output.

    shap.force_plot(shap.TreeExplainer(model).expected_value, shap_values[0], X.iloc[0])

10. Model-Agnostic Feature Importance with LIME

LIME is an alternative library to SHAP that generates local surrogate explanations. Rather than using one or the other, these two libraries complement each other well, helping better approximate feature importance around individual predictions. This example does so for a previously trained logistic regression model.

    from lime.lime_tabular import LimeTabularExplainer
    # explain_instance expects a 1-D NumPy array, hence .values on the selected row
    exp = LimeTabularExplainer(X.values, feature_names=features).explain_instance(X.iloc[0].values, model.predict_proba)
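The idea of a local surrogate can be sketched without the lime package, as below. This is a simplified illustration, not LIME's actual implementation: the Gaussian perturbations, the exponential kernel, the kernel width, the Ridge surrogate, and the sample count are all assumptions made for this example.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression, Ridge
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)).fit(X, y)

rng = np.random.default_rng(0)
x0 = X[0]                  # the instance to explain
scale = X.std(axis=0)      # per-feature spread used to size the perturbations

# 1. Sample points around x0; Z holds the offsets in standardized units.
Z = rng.normal(size=(2000, X.shape[1]))
neighbours = x0 + Z * scale

# 2. Query the black-box model for the probability of class 1.
target = model.predict_proba(neighbours)[:, 1]

# 3. Weight samples by proximity to x0 with an exponential kernel.
distances = np.linalg.norm(Z, axis=1)
kernel_width = 0.75 * np.sqrt(X.shape[1])  # assumed width, not tuned
weights = np.exp(-(distances ** 2) / kernel_width ** 2)

# 4. Fit a weighted linear surrogate; its coefficients are the local explanation.
surrogate = Ridge(alpha=1.0).fit(Z, target, sample_weight=weights)
local_importances = np.abs(surrogate.coef_)
print(local_importances.round(3))
```

The surrogate is only trusted near x0: the kernel weights make distant samples count for little, which is what makes the explanation local rather than global.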

Wrapping Up

This article unveiled 10 effective Python one-liners to help better understand, explain, and interpret machine learning models with a focus on feature importance. With the aid of these tools, how your model works on the inside no longer has to be a mysterious black box.

10 Python One-Liners for Calculating Mannequin Characteristic Significance

Picture by Editor

Understanding machine studying fashions is a crucial facet of constructing reliable AI techniques. The understandability of such fashions rests on two fundamental properties: explainability and interpretability. The previous refers to how properly we are able to describe a mannequin’s “innards” (i.e. the way it operates and appears internally), whereas the latter issues how simply people can perceive the captured relationships between enter options and predicted outputs. As we are able to see, the distinction between them is delicate, however there’s a highly effective bridge connecting each: characteristic significance.

This text unveils 10 easy however efficient Python one-liners to calculate mannequin characteristic significance from completely different views — serving to you perceive not solely how your machine studying mannequin behaves, but in addition why it made the prediction(s) it did.

1. Constructed-in Characteristic Significance in Resolution Tree-based Fashions

Tree-based fashions like random forests and XGBoost ensembles will let you simply acquire a listing of feature-importance weights utilizing an attribute like:

|

importances = mannequin.feature_importances_ |

Word that mannequin ought to comprise a skilled mannequin a priori. The result’s an array containing the significance of options, however if you’d like a extra self-explanatory model, this code enhances the earlier one-liner by incorporating the characteristic names for a dataset like iris, multi functional line.

|

print(“Characteristic importances:”, record(zip(iris.feature_names, mannequin.feature_importances_))) |

2. Coefficients in Linear Fashions

Easier linear fashions like linear regression and logistic regression additionally expose characteristic weights through discovered coefficients. It is a approach to acquire the primary of them instantly and neatly (take away the positional index to acquire all weights):

|

importances = abs(mannequin.coef_[0]) |

3. Sorting Options by Significance

Much like the improved model of no 1 above, this convenient one-liner can be utilized to rank options by their significance values in descending order: a superb glimpse of which options are the strongest or most influential contributors to mannequin predictions.

|

sorted_features = sorted(zip(options, importances), key=lambda x: x[1], reverse=True) |

4. Mannequin-Agnostic Permutation Significance

Permutation significance is an extra method to measure a characteristic’s significance — specifically, by shuffling its values and analyzing how a metric used to measure the mannequin’s efficiency (e.g. accuracy or error) decreases. Accordingly, this model-agnostic one-liner from scikit-learn is used to measure efficiency drops because of randomly shuffling a characteristic’s values.

|

from sklearn.inspection import permutation_importance consequence = permutation_importance(mannequin, X, y).importances_mean |

5. Imply Lack of Accuracy in Cross-Validation Permutations

That is an environment friendly one-liner to check permutations within the context of cross-validation processes — analyzing how shuffling every characteristic impacts mannequin efficiency throughout Okay folds.

|

import numpy as np from sklearn.model_selection import cross_val_score importances = [(cross_val_score(model, X.assign(**{f: np.random.permutation(X[f])}), y).imply()) for f in X.columns] |

6. Permutation Significance Visualizations with Eli5

Eli5 — an abbreviated type of “Clarify like I’m 5 (years previous)” — is, within the context of Python machine studying, a library for crystal-clear explainability. It gives a mildly visually interactive HTML view of characteristic importances, making it notably helpful for notebooks and appropriate for skilled linear or tree fashions alike.

|

import eli5 eli5.show_weights(mannequin, feature_names=options) |

7. International SHAP Characteristic Significance

SHAP is a well-liked and highly effective library to get deeper into explaining mannequin characteristic significance. It may be used to calculate imply absolute SHAP values (feature-importance indicators in SHAP) for every characteristic — all underneath a model-agnostic, theoretically grounded measurement method.

|

import numpy as np import shap shap_values = shap.TreeExplainer(mannequin).shap_values(X) importances = np.abs(shap_values).imply(0) |

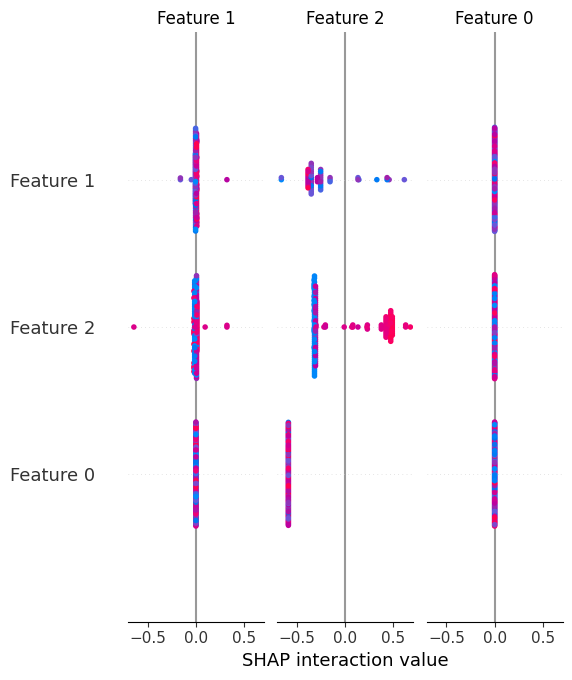

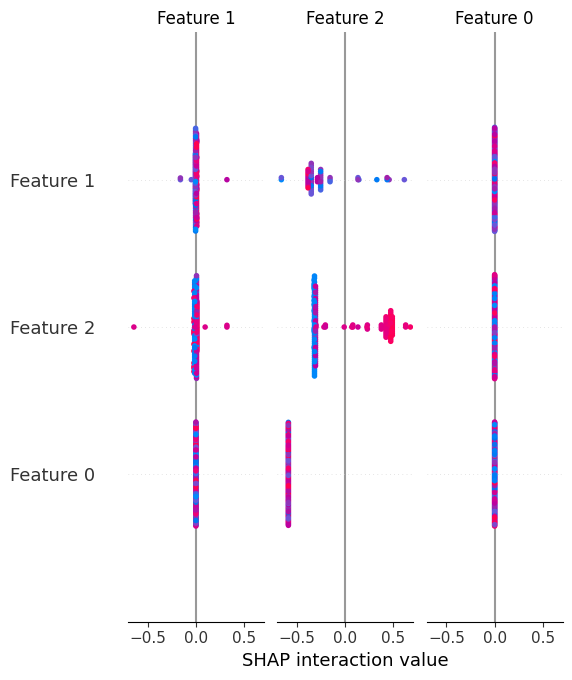

8. Abstract Plot of SHAP Values

In contrast to world SHAP characteristic importances, the abstract plot gives not solely the worldwide significance of options in a mannequin, but in addition their instructions, visually serving to perceive how characteristic values push predictions upward or downward.

|

shap.summary_plot(shap_values, X) |

Let’s have a look at a visible instance of consequence obtained:

9. Single-Prediction Explanations with SHAP

One notably engaging facet of SHAP is that it helps clarify not solely the general mannequin conduct and have importances, but in addition how options particularly affect a single prediction. In different phrases, we are able to reveal or decompose a person prediction, explaining how and why the mannequin yielded that particular output.

|

shap.force_plot(shap.TreeExplainer(mannequin).expected_value, shap_values[0], X.iloc[0]) |

10. Mannequin-Agnostic Characteristic Significance with LIME

LIME is another library to SHAP that generates native surrogate explanations. Moderately than utilizing one or the opposite, these two libraries complement one another properly, serving to higher approximate characteristic significance round particular person predictions. This instance does so for a beforehand skilled logistic regression mannequin.

|

from lime.lime_tabular import LimeTabularExplainer exp = LimeTabularExplainer(X.values, feature_names=options).explain_instance(X.iloc[0], mannequin.predict_proba) |

Wrapping Up

This text unveiled 10 efficient Python one-liners to assist higher perceive, clarify, and interpret machine studying fashions with a concentrate on characteristic significance. Comprehending how your mannequin works from the within is not a mysterious black field with assistance from these instruments.