of my Machine Learning Advent Calendar.

Before closing this series, I want to sincerely thank everyone who followed it, shared feedback, and supported it, especially the Towards Data Science team.

Ending this calendar with Transformers is not a coincidence. The Transformer is not just a fancy name. It is the backbone of modern Large Language Models.

There is a lot to say about RNNs, LSTMs, and GRUs. They played a key historical role in sequence modeling. But today, modern LLMs are overwhelmingly based on Transformers.

The name Transformer itself marks a rupture. From a naming perspective, the authors could have chosen something like Attention Neural Networks, in line with Recurrent Neural Networks or Convolutional Neural Networks. As a Cartesian mind, I would have appreciated a more consistent naming structure. But naming aside, the conceptual shift introduced by Transformers fully justifies the distinction.

Transformers can be used in different ways. Encoder architectures are commonly used for classification. Decoder architectures are used for next-token prediction, and therefore for text generation.

In this article, we will focus on one core idea only: how the attention matrix transforms input embeddings into something more meaningful.

In the previous article, we introduced 1D Convolutional Neural Networks for text. We saw that a CNN scans a sentence using small windows and reacts when it recognizes local patterns. This approach is already very powerful, but it has a clear limitation: a CNN only looks locally.

Today, we go one step further.

The Transformer answers a fundamentally different question.

What if every word could look at all the other words at once?

1. The same word in two different contexts

To understand why attention is needed, we will start with a simple idea.

We will use two different input sentences, both containing the word mouse, but used in different contexts.

In the first input, mouse appears in a sentence with cat. In the second input, mouse appears in a sentence with keyboard.

At the input stage, we deliberately use the same embedding for the word “mouse” in both cases. This is important. At this stage, the model does not know which meaning is intended.

The embedding for mouse contains both:

- a strong animal component

- a strong tech component

This ambiguity is intentional. Without context, mouse could refer to an animal or to a computer device.

All the other words provide clearer signals. Cat is strongly animal. Keyboard is strongly tech. Words like “and” or “are” mainly carry grammatical information. Words like friends and useful are weakly informative on their own.

At this point, nothing in the input embeddings allows the model to decide which meaning of mouse is correct.
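To make the ambiguity concrete, here is a minimal plain-Python sketch with hypothetical 3-dimensional embeddings whose axes are assumed to be [animal, tech, grammar]. The numbers, and the two sentences they are meant for (assumed here to be “cat and mouse are friends” and “keyboard and mouse are useful”), are illustrative assumptions, not the values from the Excel file. Because mouse has equal animal and tech components, its cosine similarity to cat and to keyboard is identical.

```python
import math

# Hypothetical 3-D embeddings with assumed axes [animal, tech, grammar].
# Values are illustrative only, not the article's spreadsheet values.
EMBEDDINGS = {
    "cat":      [3.0, 0.0, 0.0],  # strongly animal
    "keyboard": [0.0, 3.0, 0.0],  # strongly tech
    "mouse":    [2.0, 2.0, 0.0],  # ambiguous: strong animal AND strong tech
    "and":      [0.0, 0.0, 1.0],  # grammatical
    "are":      [0.0, 0.0, 1.0],  # grammatical
    "friends":  [0.5, 0.0, 0.0],  # weakly informative
    "useful":   [0.0, 0.5, 0.0],  # weakly informative
}


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# One lookup table for both sentences: "mouse" gets the same vector everywhere.
sim_cat = cosine(EMBEDDINGS["mouse"], EMBEDDINGS["cat"])
sim_keyboard = cosine(EMBEDDINGS["mouse"], EMBEDDINGS["keyboard"])
print(f"mouse vs cat: {sim_cat:.3f}, mouse vs keyboard: {sim_keyboard:.3f}")
```

Both similarities print as 0.707: with these toy values, the input embedding alone gives no reason to prefer one meaning over the other.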

In the next chapter, we will see how the attention matrix performs this transformation, step by step.

2. Self-attention: how context is injected into embeddings

2.1 Self-attention, not just attention

We first clarify what kind of attention we are using here. This chapter focuses on self-attention.

Self-attention means that each word looks at all the words of the same input sequence, including itself.

In this simplified example, we make an additional pedagogical choice. We assume that Queries and Keys are directly equal to the input embeddings. In other words, there are no learned weight matrices for Q and K in this chapter.

This is a deliberate simplification. It allows us to focus entirely on the attention mechanism, without introducing extra parameters. Similarity between words is computed directly from their embeddings.

Conceptually, this means:

Q = Input

K = Input

The Value vectors, which in this simplified version are also taken directly from the input embeddings, are used later to propagate information to the output.

In real Transformer models, Q, K, and V are all obtained through learned linear projections. These projections add flexibility, but they do not change the logic of attention itself. The simplified version shown here captures the core idea.

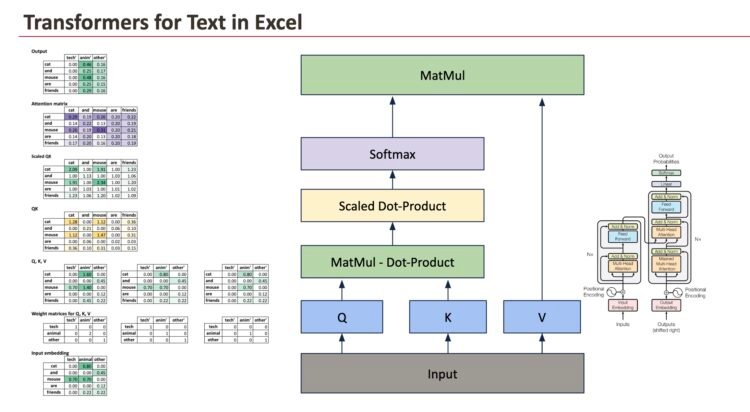

Here is the complete picture that we will break down.

2.2 From input embeddings to raw attention scores

We start from the input embedding matrix, where each row corresponds to a word and each column corresponds to a semantic dimension.

The first operation is to compare every word with every other word. This is done by computing dot products between Queries and Keys.

Because Queries and Keys are equal to the input embeddings in this example, this step reduces to computing dot products between input vectors.

All dot products are computed at once using a matrix multiplication:

Scores = Input × Inputᵀ

Each cell of this matrix answers a simple question: how similar are these two words, given their embeddings?

At this stage, the values are raw scores. They are not probabilities, and they do not yet have a direct interpretation as weights.
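The score computation can be sketched in plain Python with no libraries. The embeddings are the same hypothetical [animal, tech, grammar] values as before, for the assumed sentence “cat and mouse are friends”. With Q = K = Input, the score matrix is the input multiplied by its own transpose, so it is symmetric.

```python
# Hypothetical embeddings, assumed axes [animal, tech, grammar], for the
# assumed sentence "cat and mouse are friends" (illustrative values only).
words = ["cat", "and", "mouse", "are", "friends"]
X = [
    [3.0, 0.0, 0.0],  # cat
    [0.0, 0.0, 1.0],  # and
    [2.0, 2.0, 0.0],  # mouse
    [0.0, 0.0, 1.0],  # are
    [0.5, 0.0, 0.0],  # friends
]


def transpose(m):
    """Swap rows and columns of a list-of-lists matrix."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Plain-Python matrix product a x b."""
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]


# Chapter-2 simplification: Q = K = Input, so Scores = Input x Input^T.
Q = K = X
scores = matmul(Q, transpose(K))

for word, row in zip(words, scores):
    print(f"{word:>8}: {row}")
```

The mouse row is [6.0, 0.0, 8.0, 0.0, 1.0]: its largest score is with itself, followed by cat, while the grammatical words score 0.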

2.3 Scaling and normalization

Raw dot products can grow large as the embedding dimension increases. To keep values in a stable range, the scores are divided by the square root of the embedding dimension d.

ScaledScores = Scores / √d

This scaling step is not conceptually deep, but it is practically important. It prevents the next step, the softmax, from becoming too sharp.
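The √d factor follows from a standard back-of-the-envelope argument. Assume, as an idealization rather than a property of real embeddings, that the d components of a query q and a key k are independent random variables with mean 0 and variance 1:

```latex
\begin{aligned}
q \cdot k &= \sum_{i=1}^{d} q_i k_i, \\
\operatorname{Var}(q_i k_i) &= \mathbb{E}[q_i^2]\,\mathbb{E}[k_i^2] - \big(\mathbb{E}[q_i]\,\mathbb{E}[k_i]\big)^2 = 1 \cdot 1 - 0 = 1, \\
\operatorname{Var}(q \cdot k) &= \sum_{i=1}^{d} \operatorname{Var}(q_i k_i) = d, \\
\operatorname{Var}\!\left(\frac{q \cdot k}{\sqrt{d}}\right) &= \frac{d}{d} = 1.
\end{aligned}
```

Without the division, typical raw scores have a standard deviation of √d, so for large d a few entries dominate the softmax and each row collapses toward a one-hot vector.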

Once scaled, a softmax is applied row by row. This converts the scaled scores into positive values that sum to one in each row.

The result is the attention matrix.

And attention is all you need.

Each row of this matrix describes how much attention a given word pays to every word in the sentence, including itself.
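Scaling and the row-wise softmax can be added on top of those scores. The sketch below keeps the same hypothetical embeddings and the Q = K = Input simplification. It uses the common trick of subtracting each row's maximum before calling math.exp, which avoids overflow without changing the softmax result.

```python
import math

# Same hypothetical setup: assumed axes [animal, tech, grammar] for the
# assumed sentence "cat and mouse are friends" (illustrative values only).
words = ["cat", "and", "mouse", "are", "friends"]
X = [[3.0, 0.0, 0.0], [0.0, 0.0, 1.0], [2.0, 2.0, 0.0], [0.0, 0.0, 1.0], [0.5, 0.0, 0.0]]


def softmax(row):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(X):
    """Scaled dot-product scores with Q = K = X, then a row-wise softmax."""
    d = len(X[0])
    scores = [[sum(a * b for a, b in zip(qi, kj)) for kj in X] for qi in X]
    scaled = [[s / math.sqrt(d) for s in row] for row in scores]
    return [softmax(row) for row in scaled]


A = attention_weights(X)
for word, row in zip(words, A):
    print(f"{word:>8}: " + " ".join(f"{w:.3f}" for w in row))
```

Every printed row sums to one. In the mouse row, most of the weight stays on mouse itself, cat receives clearly more than friends, and the grammatical words receive almost nothing.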

2.4 Interpreting the attention matrix

The attention matrix is the central object of self-attention.

For a given word, its row in the attention matrix answers the question: when updating this word, which other words matter, and how much?

For example, the row corresponding to mouse assigns higher weights to words that are semantically related in the current context. In the sentence with cat and friends, mouse attends more to animal-related words. In the sentence with keyboard and useful, it attends more to technical words.

The mechanism is the same in both cases. Only the surrounding words change the outcome.
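Comparing the mouse row across the two assumed sentences shows the same mechanism producing different rankings. The helper attention_row is a hypothetical name for this sketch; it computes one row of the attention matrix with Q = K = Input and scaling by √d, using the same illustrative embeddings.

```python
import math

# Hypothetical embeddings, assumed axes [animal, tech, grammar]; illustrative only.
EMB = {
    "cat": [3.0, 0.0, 0.0], "keyboard": [0.0, 3.0, 0.0], "mouse": [2.0, 2.0, 0.0],
    "and": [0.0, 0.0, 1.0], "are": [0.0, 0.0, 1.0],
    "friends": [0.5, 0.0, 0.0], "useful": [0.0, 0.5, 0.0],
}


def attention_row(sentence, target):
    """Attention weights of `target` over every word in `sentence` (Q = K = Input)."""
    X = [EMB[w] for w in sentence]
    q = EMB[target]
    d = len(q)
    scaled = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in X]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return {w: e / total for w, e in zip(sentence, exps)}


# Assumed example sentences (a hypothetical reconstruction of the two inputs).
animal_sentence = ["cat", "and", "mouse", "are", "friends"]
tech_sentence = ["keyboard", "and", "mouse", "are", "useful"]

for sentence in (animal_sentence, tech_sentence):
    row = attention_row(sentence, "mouse")
    # Most-attended word other than "mouse" itself
    top = max((w for w in row if w != "mouse"), key=row.get)
    print(" ".join(sentence), "->", top, f"{row[top]:.3f}")
```

With these illustrative values, the most-attended neighbor of mouse is cat in the first sentence and keyboard in the second, with the same weight in both because the toy embeddings are symmetric.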

2.5 From attention weights to output embeddings

The attention matrix itself is not the final result. It is a set of weights.

To produce the output embeddings, we combine these weights with the Value vectors.

Output = Attention × V

In this simplified example, the Value vectors are taken directly from the input embeddings. Each output word vector is therefore a weighted average of the input vectors, with weights given by the corresponding row of the attention matrix.

For a word like mouse, this means that its final representation becomes a mixture of:

- its own embedding

- the embeddings of the words it attends to most

This is the precise moment where context is injected into the representation.

At the end of self-attention, the ambiguity is resolved, at least partially.

The word mouse no longer has the same representation in both sentences. Its output vector reflects its context. In one case, it behaves like an animal. In the other, it behaves like a technical object.

Nothing in the embedding table changed. What changed is how information was combined across words.

This is the core idea of self-attention, and the foundation on which Transformer models are built.

If we now compare the two examples, cat and mouse on the left and keyboard and mouse on the right, the effect of self-attention becomes explicit.

In both cases, the input embedding of mouse is the same. Yet the final representation differs. In the sentence with cat, the output embedding of mouse is dominated by the animal dimension. In the sentence with keyboard, the technical dimension becomes more prominent. The difference comes entirely from how attention redistributed weights across words before mixing the values.
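The full Output = Attention × V step can be checked end to end. This sketch assumes Q = K = V = Input, as in this chapter, along with the same hypothetical embeddings and assumed sentences. It prints the output vector of mouse in each sentence.

```python
import math

# Hypothetical embeddings, assumed axes [animal, tech, grammar]; illustrative only.
EMB = {
    "cat": [3.0, 0.0, 0.0], "keyboard": [0.0, 3.0, 0.0], "mouse": [2.0, 2.0, 0.0],
    "and": [0.0, 0.0, 1.0], "are": [0.0, 0.0, 1.0],
    "friends": [0.5, 0.0, 0.0], "useful": [0.0, 0.5, 0.0],
}


def self_attention(X):
    """Simplified self-attention from this chapter: Q = K = V = X, no learned weights."""
    d = len(X[0])
    outputs = []
    for q in X:
        # Scaled dot products of this word with every word, then a stable softmax
        scaled = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in X]
        m = max(scaled)
        exps = [math.exp(s - m) for s in scaled]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Output = weighted average of the value vectors (here, the input vectors)
        outputs.append([sum(w * v[j] for w, v in zip(weights, X)) for j in range(d)])
    return outputs


mouse_outputs = {}
for sentence in (["cat", "and", "mouse", "are", "friends"],
                 ["keyboard", "and", "mouse", "are", "useful"]):
    X = [EMB[w] for w in sentence]
    mouse_out = self_attention(X)[sentence.index("mouse")]
    mouse_outputs[sentence[0]] = mouse_out
    print(" ".join(sentence), "-> mouse =", [round(x, 2) for x in mouse_out])
```

The input vector of mouse is [2.0, 2.0, 0.0] in both cases. After attention, its animal coordinate exceeds its tech coordinate in the cat sentence, and the reverse holds in the keyboard sentence.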

This comparison highlights the role of self-attention: it does not change words in isolation, but reshapes their representations by taking the full context into account.

3. Learning how to mix information

3.1 Introducing learned weights for Q, K, and V

Until now, we have focused on the mechanics of self-attention itself. We now introduce an important ingredient: learned weights.

In a real Transformer, Queries, Keys, and Values are not taken directly from the input embeddings. Instead, they are produced by learned linear transformations.

For the input matrix (one row per word embedding), the model computes:

Q = Input × W_Q

K = Input × W_K

V = Input × W_V

These weight matrices are learned during training.

At this stage, we usually keep the same dimensionality. The input embeddings, Q, K, V, and the output embeddings all have the same number of dimensions. This makes the role of attention easier to understand: it modifies representations without changing the space they live in.
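The learned version only adds three matrix multiplications in front of the same mechanism. In the sketch below, W_Q, W_K and W_V are random stand-ins for trained weights (an assumption: no training loop is shown), all of size d × d so that dimensionality is preserved. Using identity matrices for all three recovers the simplified version of chapter 2.

```python
import math
import random


def matmul(a, b):
    """Plain-Python matrix product a x b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols):
    """Random stand-in for a trained weight matrix (hypothetical initialisation)."""
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def attention(X, W_Q, W_K, W_V):
    """Scaled dot-product self-attention with projections W_Q, W_K, W_V."""
    Q, K, V = matmul(X, W_Q), matmul(X, W_K), matmul(X, W_V)
    d_k = len(K[0])  # scale by the key dimension (equal to d here)
    scores = [[sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K] for q in Q]
    weights = [softmax(row) for row in scores]
    return matmul(weights, V), weights


# Hypothetical embeddings [animal, tech, grammar] for "cat and mouse are friends".
X = [[3.0, 0.0, 0.0], [0.0, 0.0, 1.0], [2.0, 2.0, 0.0], [0.0, 0.0, 1.0], [0.5, 0.0, 0.0]]
d = len(X[0])

random.seed(0)
W_Q, W_K, W_V = random_matrix(d, d), random_matrix(d, d), random_matrix(d, d)
out, weights = attention(X, W_Q, W_K, W_V)
print("output shape:", len(out), "x", len(out[0]))  # square projections keep d

# Identity projections give Q = K = V = Input: the simplified chapter-2 case.
identity = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
out_simple, _ = attention(X, identity, identity, identity)
print("mouse (identity weights):", [round(x, 2) for x in out_simple[2]])
```

Whatever the weight values, the output keeps the 5 × 3 shape of the input here, because every projection is square.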

Conceptually, these weights allow the model to decide:

- which aspects of a word matter for comparison (Q and K)

- which aspects of a word should be transmitted to others (V)

3.2 What the model actually learns

The attention mechanism itself is fixed. Dot products, scaling, softmax, and matrix multiplications always work the same way. Within the attention layer, what the model actually learns are the projection matrices.

By adjusting the Q and K weights, the model learns how to measure relationships between words for a given task. By adjusting the V weights, it learns what information should be propagated when attention is high. The structure defines how information flows, while the weights define what information flows.

Because the attention matrix depends only on Q and K, it is partially interpretable. We can inspect which words attend to which others and observe patterns that often align with syntax or semantics.

This becomes clear when comparing the same word in two different contexts. In both examples, the word mouse starts with exactly the same input embedding, containing both an animal and a tech component. On its own, it is ambiguous.

What changes is not the word, but where it directs its attention. In the sentence with cat and friends, attention emphasizes animal-related words. In the sentence with keyboard and useful, attention shifts toward technical words. The mechanism and the weights are identical in both cases, yet the output embeddings differ. The difference comes entirely from how the learned projections interact with the surrounding context.

This is precisely why the attention matrix is interpretable: it reveals which relationships the model has learned to treat as meaningful for the task.

3.3 Changing the dimensionality on purpose

Nothing, however, forces Q, K, and V to have the same dimensionality as the input. The only constraint is that Q and K must share a dimension, so that their dot products are defined.

The Value projection, in particular, can map embeddings into a space of a different size. When this happens, the output embeddings inherit the dimensionality of the Value vectors.

This is not a theoretical curiosity. It is exactly what happens in real models, especially in multi-head attention. Each head operates in its own subspace, usually with a smaller dimension, and the results are then concatenated into a larger representation.
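Here is a small sketch of both ideas: a single head whose Value projection has a different width from Q and K, and two heads working in 2-dimensional subspaces whose outputs are concatenated. The input, the sizes (d_model = 4, two heads, a Value width of 6) and the random weights are all illustrative assumptions. Real multi-head attention usually also applies a learned output projection after the concatenation, which is omitted here.

```python
import math
import random


def matmul(a, b):
    """Plain-Python matrix product a x b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols):
    """Random stand-in for a trained weight matrix."""
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def attention_head(X, W_Q, W_K, W_V):
    """One attention head; the output width equals the number of columns of W_V."""
    Q, K, V = matmul(X, W_Q), matmul(X, W_K), matmul(X, W_V)
    d_k = len(K[0])
    scores = [[sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K] for q in Q]
    return matmul([softmax(row) for row in scores], V)


random.seed(42)
n_tokens, d_model, n_heads = 5, 4, 2  # illustrative sizes
d_head = d_model // n_heads           # each head works in a 2-D subspace
X = [[random.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(n_tokens)]

# A single head whose Value projection (width 6) differs from Q and K (width 2)
wide = attention_head(X, random_matrix(d_model, d_head),
                      random_matrix(d_model, d_head), random_matrix(d_model, 6))
print("value width 6 ->", len(wide), "x", len(wide[0]))  # 5 x 6

# Two heads in 2-D subspaces, concatenated back to d_model features per token
heads = [attention_head(X, random_matrix(d_model, d_head), random_matrix(d_model, d_head),
                        random_matrix(d_model, d_head)) for _ in range(n_heads)]
concat = [[x for head in heads for x in head[i]] for i in range(n_tokens)]
print("per head:", len(heads[0]), "x", len(heads[0][0]))  # 5 x 2
print("concatenated:", len(concat), "x", len(concat[0]))  # 5 x 4
```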

So attention can do two things:

- mix information across words

- reshape the space in which this information lives

This flexibility is a large part of why Transformers scale so well.

They do not rely on fixed features. They learn:

- how to compare words

- how to route information

- how to project meaning into different spaces

The attention matrix controls where information flows.

The learned projections control what information flows and how it is represented.

Together, they form the core mechanism behind modern language models.

Conclusion

This Advent Calendar was built around a simple idea: understanding machine learning models by looking at how they actually transform data.

Transformers are a fitting way to close this journey. They do not rely on fixed rules or local patterns, but on learned relationships between all parts of a sequence. Through attention, they turn static embeddings into contextual representations, which is the foundation of modern language models.

Thanks again to everyone who followed this series, shared feedback, and supported it, especially the Towards Data Science team.

Merry Christmas 🎄

All the Excel files are available through this Kofi link. Your support means a lot to me. The price will increase during the month, so early supporters get the best value.

Discount codes are hidden in the articles from Day 19 to Day 24. Find them and pick the one you prefer.