Enhancing LLM decision-making: integrating language agent tree search with GPT-4o for superior problem-solving

Giant Language Fashions (LLMs) have demonstrated distinctive talents in performing pure language duties that contain complicated reasoning. Consequently, these fashions have developed to perform as brokers able to planning, strategising, and fixing complicated issues. Nonetheless, challenges persist with regards to making choices underneath uncertainty, the place outcomes will not be deterministic, or when adaptive decision-making is required in altering environments, particularly in multi-step situations the place every step influences the subsequent. We’d like extra superior capabilities…

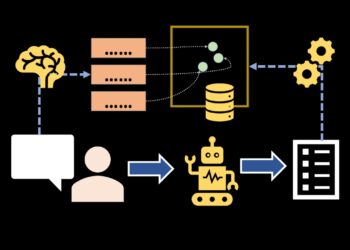

That is the place GPT-4’s superior reasoning capabilities and Language Agent Tree Search (LATS) come collectively to deal with these challenges. LATS incorporates a dynamic, tree-based search methodology that enhances the reasoning capabilities of GPT-4O. By integrating Monte Carlo Tree Search (MCTS) with LLMs, LATS unifies reasoning, appearing, and planning, making a extra deliberate and adaptive problem-solving framework. This highly effective mixture permits for improved decision-making and extra sturdy dealing with of complicated duties, setting a brand new commonplace within the deployment of language fashions as autonomous brokers.

Is “search” the lacking piece in GenAI drawback fixing?

Computational drawback fixing will be broadly outlined as “search by means of a combinatorial drawback area”, represented as a tree. Depth-First Search (DFS) and Breadth-First Search (BFS) are basic strategies for exploring such answer areas. A notable instance of the ability of deep search is AlphaGo’s “Transfer 37,” which showcased how revolutionary, human-surpassing options can emerge from in depth exploration.

Not like conventional strategies that observe predefined paths, LLMs can dynamically generate new branches inside the answer area by predicting potential outcomes, methods, or actions based mostly on context. This functionality permits LLMs to not solely navigate but in addition broaden the issue area, making them exceptionally highly effective in conditions the place the issue construction will not be totally identified, is constantly evolving, or is extremely complicated.

Inference-time Reasoning with Meta Technology Algorithms (MGA)

Scaling compute throughout coaching is broadly recognised for its potential to enhance mannequin efficiency. The advantages of scaling compute throughout inference stay under-explored. MGA’s supply a novel method by amplifying computational assets throughout inference…

Not like conventional token-level era strategies, meta-generation algorithms make use of higher-order management constructions corresponding to planning, loops with a number of mannequin calls, self-reflection, job decomposition, and dynamic conditioning. These mechanisms permit the mannequin to execute duties end-to-end, mimicking higher-level cognitive processes sometimes called Methods-2 pondering.

Due to this fact one-way meta era algorithms might improve LLM reasoning by integrating search into the era course of. Throughout inference, MGA’s dynamically discover a broader answer area, permitting the mannequin to motive by means of potential outcomes and adapt methods in real-time. By producing a number of paths and evaluating their viability, meta era algorithms allow LLMs to simulate deeper, extra complicated reasoning akin to conventional search strategies. This method not solely expands the mannequin’s potential to generate novel insights but in addition improves decision-making in situations with incomplete or evolving data.

Strategies like Tree of Ideas (ToT), and Graph of Thought (GoT) are employed to navigate combinatorial answer areas effectively.

- ToT (2*) allows hierarchical decision-making by structuring potential outcomes as tree branches, facilitating exploration of a number of paths.

- GoT (6*)maps complicated relationships between concepts, permitting the mannequin to dynamically regulate and optimize its reasoning path.

- CoT (5*) gives step-by-step reasoning that hyperlinks sequential ideas, bettering the coherence and depth of the era.

Within the Tree of Ideas (ToT) method, conventional strategies like Depth-First Search (DFS) or Breadth-First Search (BFS) can navigate this tree, however they’re computationally costly as a result of they discover every doable path systematically & exhaustively.

Monte Carlo Tree Search (MCTS) is an enchancment on this by simulating completely different outcomes for actions and updating the tree based mostly on these simulations. It makes use of a “choice” course of the place it picks determination nodes utilizing a method that balances exploration (making an attempt new paths) and exploitation (selecting identified good paths). That is guided by a system referred to as Higher Confidence Certain (UCB).

The UCB system has two key elements:

- Exploration Time period: This represents the potential reward of selecting a node and is calculated by means of simulations.

- Exploitation Time period: This decreases the deeper you go right into a sure path, that means that if a path is over-explored, the algorithm might shift to a less-explored path even when it appears much less promising initially.

By choosing nodes utilizing UCB, simulating outcomes (rewards) with LLMs, and back-propagating the rewards up the tree, MCTS successfully balances between exploring new methods and exploiting identified profitable ones.

The second a part of the UCB system is the ‘exploitation time period,’ which decreases as you discover deeper into a selected path. This lower might lead the choice algorithm to change to a different path within the determination tree, even when that path has a decrease speedy reward, as a result of the exploitation time period stays increased when that path is much less explored.

Node choice with UCB, reward calculations with LLM simulations and backpropagation are the essence of MCTS.

An Implementation — Monetary Choice Making…

For the sake of demonstration we are going to use LATS to resolve the difficult drawback of developing with the optimum funding technique in todays macroeconomic local weather. We’ll feed LLM with the macro-economic statu susing the “IMF World Financial Outlook Report” because the context merely summarising the doc. RAG will not be used. Under is an instance as to how LATS searches by means of the answer area…

Iteration 1:

- Choice: We begin on the root node, and since that is the primary LATS iteration, we are going to choose all preliminary determination nodes generated by the LLM (A, B, and C nodes) and simulate their outcomes.

- Simulation & Backpropagation: Subsequent LLM “simulates” every technique based mostly on the context it has and assigns the next “rewards” — funding returns — to every “node”.

- Technique A: $5,000

- Technique B: $7,000

- Technique C: $4,000

3. Enlargement: Primarily based on the choice, Technique B has the very best UCB1 worth (since all nodes are on the identical depth), so we broaden solely Technique B by simulating its little one nodes.

Iteration 2:

- Choice: Since B1 & B2 methods will not be simulated, there’s a tie by way of their UCB scores and each nodes can be simulated.

- Simulate Each Nodes:

- Simulate B1: LLM predicts a return of $8,500 for B1.

- Simulate B2: LLM predicts a return of $7,500 for B2.

3. Backpropagation:

After every simulation, outcomes of the simulation are back-propagated up the tree, updating the values of the father or mother nodes. This step ensures that the influence of the brand new data is mirrored all through the tree.

Updating Technique B’s Worth: Technique B now must mirror the outcomes of B1 and B2. One frequent method is to common the rewards of B1 and B2 to replace Technique B’s worth. Now, Technique B has an up to date worth of $8,000 based mostly on the outcomes of its little one nodes.

4. Recalculate UCB Scores:

After backpropagation, the UCB scores for all nodes within the tree are recalculated. This recalculation makes use of the up to date values (common rewards) and go to counts, guaranteeing that every node’s UCB1 rating precisely displays each its potential reward and the way a lot it has been explored.

UCB(s) = (exploration/reward time period)+ (exploitation time period)

Be aware once more the exploitation time period decreases for all nodes on a path that’s continously explored deeper.

5. Subsequent choice & simulation:

B1 is chosen for additional enlargement (because it has the upper reward) into little one nodes:

- B1a: “Put money into AI corporations”

- B1b: “Put money into inexperienced tech”

6. Backpropagation:

B1 reward up to date as (9200 + 6800) / 2 = 8000

B reward up to date as (8000 + 7500) / 2 = 7750

7.UCB Calculation:

Following backpropagation UCB values of all nodes are recalculated. Assume that because of the decaying exploration issue, B2 now has a better UCB rating than each B1a and B1b. This might happen if B1 has been extensively explored, lowering the exploration time period for its youngsters. As an alternative of constant to broaden B1’s youngsters, the algorithm shifts again to discover B2, which has develop into extra enticing on account of its unexplored potential i.e. increased exploitation worth.

This instance illustrates how MCTS can dynamically regulate its search path based mostly on new data, guaranteeing that the algorithm stays environment friendly and centered on essentially the most promising methods because it progresses.

An Implementation with Azure OpenAI GPT-4o

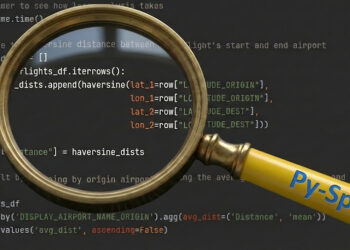

Subsequent we are going to construct a “monetary advisor” utilizing GPT-4o, implementing LATS. (Please check with the Github repo right here for the code.)

(For an correct evaluation I’m utilizing the IMF World Financial Outlook report from July, 24 as my LLM context for simulations i.e. for producing little one nodes and for assigning rewards to determination nodes …)

Right here is how the code runs…

The code leverages the graphviz library to visually symbolize the choice tree generated throughout the execution of the funding technique simulations. Choice tree is simply too broad and can’t match right into a single image therefore I’ve added snippets as to how the tree seems under. You will discover a pattern determination tree within the github repo right here…

Under is the optimum technique inferred by LATS…

Optimum Technique Abstract: The optimum funding technique is structured round a number of key steps influenced by the IMF report. Here is a concise abstract of every step and its significance:

1. **Diversification Throughout Geographies and Sectors:**

- **Geographic Diversification:** This includes spreading investments throughout areas to mitigate danger and faucet into completely different progress potentials. Superior economies just like the U.S. stay important on account of their sturdy shopper spending and resilient labor market, however the portfolio ought to embody cautious weighting to handle dangers. Concurrently, rising markets in Asia, corresponding to India and Vietnam, are highlighted for his or her increased progress potential, offering alternatives for increased returns.

- **Sector Diversification:** Incorporating investments in sectors like inexperienced vitality and sustainability displays the rising world emphasis on renewable vitality and environmentally pleasant applied sciences. This additionally aligns with regulatory modifications and shopper preferences, creating future progress alternatives.

2. **Inexperienced Power and Sustainability:**

- Investing in inexperienced vitality demonstrates foresight into the worldwide shift towards lowering carbon footprints and reliance on fossil fuels. That is vital on account of elevated governmental helps, corresponding to subsidies and coverage incentives, that are prone to propel progress inside this sector.

3. **Fintech and E-Commerce:**

- Allocating capital in the direction of fintech and e-commerce corporations capitalizes on the digital transformation accelerated by the worldwide shift in the direction of digital platforms. This sector is predicted to develop on account of elevated adoption of on-line companies and digital cost methods, thus presenting promising funding alternatives.

Conclusion:

By integrating LATS, we harness the reasoning capabilities of LLMs to simulate and consider potential methods dynamically. This mix permits for the development of determination timber that not solely symbolize the logical development of choices but in addition adapt to altering contexts and insights, supplied by the LLM by means of simulations and reflections.

(Until in any other case famous, all pictures are by the creator)

References:

[1] Language Agent Tree Search: Unifying Reasoning, Appearing, and Planning in Language Fashions by Zhou et al

[2] Tree of Ideas: Deliberate Downside Fixing with Giant Language Fashions by Yao et al

[3] The Panorama of Rising AI Agent Architectures for Reasoning, Planning, and Instrument Calling: A Survey by Tula Masterman, Mason Sawtell, Sandi Besen, and Alex Chao

[4] From Decoding to Meta-Technology: Inference-time Algorithms for Giant Language Fashions” by Sean Welleck, Amanda Bertsch, Matthew Finlayson, Hailey Schoelkopf*, Alex Xie, Graham Neubig, Ilia Kulikov, and Zaid Harchaoui.

[5] Chain-of-Thought Prompting Elicits Reasoning in Giant Language Fashions by Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Brian Ichter, Fei Xia, Ed H. Chi, Quoc V. Le, and Denny Zhou

[7] Graph of Ideas: Fixing Elaborate Issues with Giant Language Fashions by Maciej Besta, Nils Blach, Ales Kubicek, Robert Gerstenberger, Michał Podstawski, Lukas Gianinazzi, Joanna Gajda, Tomasz Lehmann, Hubert Niewiadomski, Piotr Nyczyk, and Torsten Hoefler.

[8] From Decoding to Meta-Technology: Inference-time Algorithms for Giant Language Fashions” by Sean Welleck, Amanda Bertsch, Matthew Finlayson, Hailey Schoelkopf, Alex Xie, Graham Neubig, Ilia Kulikov, and Zaid Harchaoui.