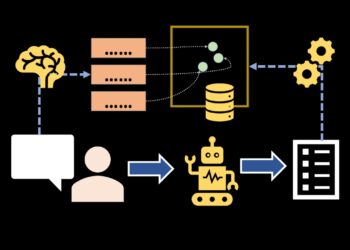

Credit card fraud is a plague that every financial institution is at risk from. Fraud detection in general is very challenging because fraudsters keep coming up with new and innovative ways of committing fraud, so it is difficult to find a pattern that we can detect. For example, in the diagram all the icons look the same, but one icon is slightly different from the rest and we have to pick that one out. Can you spot it?

Here it is:

With this background, let me lay out the plan for today and what you will learn in the context of our use case, 'Credit Card Fraud Detection':

1. What is data imbalance

2. Possible causes of data imbalance

3. Why class imbalance is a problem in machine learning

4. Quick refresher on the Random Forest algorithm

5. Different sampling methods to deal with data imbalance

6. Comparison of which method works well in our context, with a practical demonstration in Python

7. Business insight on which model to choose and why

Typically, because the number of fraudulent transactions is small, we have to work with data that has far more non-fraud cases than fraud cases. In technical terms such a dataset is called 'imbalanced data'. It is still essential to detect the fraud cases, however, because a single fraudulent transaction can cause millions in losses to banks/financial institutions. Now, let us delve deeper into what data imbalance is.

We will be considering the credit card fraud dataset from https://www.kaggle.com/mlg-ulb/creditcardfraud (Open Data License).

Formally, data imbalance means that the distribution of samples across the different classes is unequal. In our binary classification problem there are 2 classes:

a) Majority class: the non-fraudulent/genuine transactions

b) Minority class: the fraudulent transactions

In the dataset considered, the class distribution is as follows (Table 1):

As we can observe, the dataset is highly imbalanced, with only 0.17% of the observations in the fraudulent class.
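To make the class distribution concrete, here is a minimal sketch of how the percentage per class can be computed from a list of labels. The counts used (284,315 non-fraud and 492 fraud rows) are the figures commonly reported for this Kaggle dataset and are an assumption here, not something read from the file; the helper name `class_distribution` is hypothetical.

```python
from collections import Counter


def class_distribution(labels):
    """Return {class: (count, percentage of all rows)} for a list of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {cls: (n, 100.0 * n / total) for cls, n in counts.items()}


# Assumed counts for illustration: 0 = non-fraud, 1 = fraud.
labels = [0] * 284_315 + [1] * 492

dist = class_distribution(labels)
for cls, (n, pct) in sorted(dist.items()):
    print(f"class {cls}: {n} rows ({pct:.2f}%)")
```

With a real DataFrame the same numbers would normally come from a value count on the label column; the pure-Python version above only illustrates the arithmetic.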

There can be 2 main causes of data imbalance:

a) Biased sampling/measurement errors: Samples are collected from only one class or from a particular region, or samples are mis-classified. This can be resolved by improving the sampling methods.

b) Use case/domain characteristic: A more pertinent cause, as in our case, is that we are predicting a rare event. This automatically skews the data towards the majority class, because the minority class does not occur often in practice.

Class imbalance is a problem because most machine learning algorithms focus on learning from the occurrences that happen frequently, i.e. the majority class. This is called frequency bias. So on an imbalanced dataset these algorithms may not work well. A few techniques that typically work well are tree-based algorithms and anomaly detection algorithms. Traditionally, business-rule-based methods are often used in fraud detection problems. Tree-based methods work well because a tree creates a rule-based hierarchy that can separate both classes. Decision trees tend to over-fit the data, and to reduce this risk we will go with an ensemble method. For our use case, we will use the Random Forest algorithm today.
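A quick way to see why frequency bias matters is to score a "model" that ignores the minority class entirely. The sketch below uses hypothetical labels (10,000 transactions, 17 of them fraud, roughly the 0.17% rate mentioned earlier) and two metrics, accuracy and recall, that are defined later in this article.

```python
def accuracy(y_true, y_pred):
    """Fraction of all observations that were classified correctly."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Fraction of the actual positive (fraud) cases that were predicted positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    hits = sum(1 for p in preds_for_positives if p == positive)
    return hits / len(preds_for_positives)


# Hypothetical labels: 17 fraud cases (1) among 10,000 transactions.
y_true = [1] * 17 + [0] * 9_983
# A degenerate "model" that always predicts the majority class (non-fraud).
y_pred = [0] * len(y_true)

baseline_accuracy = accuracy(y_true, y_pred)
baseline_recall = recall(y_true, y_pred)
print(f"accuracy = {baseline_accuracy:.4f}, recall = {baseline_recall:.2f}")
```

The accuracy looks excellent even though not a single fraud case is caught, which is why accuracy alone is a poor guide on imbalanced data.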

Random Forest works by building multiple decision tree predictors; the mode of the classes predicted by the individual decision trees is the final selected class or output. It is like voting for the most popular class. For example: if 2 trees predict that Rule 1 indicates Fraud while another tree predicts that Rule 1 indicates Non-fraud, then according to the Random Forest algorithm the final prediction will be Fraud.
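The voting step in the example above can be sketched in a few lines. This is only an illustration of hard majority voting; the function name `majority_vote` is hypothetical, and note that scikit-learn's `RandomForestClassifier` combines trees by averaging their predicted class probabilities rather than counting hard votes.

```python
from collections import Counter


def majority_vote(tree_predictions):
    """Return the class predicted by the largest number of trees.

    On a tie, the class that appears first in the list wins.
    """
    return Counter(tree_predictions).most_common(1)[0][0]


# Two trees say Fraud, one says Non-fraud, as in the example in the text.
votes = ["Fraud", "Fraud", "Non-fraud"]
final = majority_vote(votes)
print(final)
```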

Formal Definition: A random forest is a classifier consisting of a collection of tree-structured classifiers {h(x, Θk), k = 1, …} where the {Θk} are independent identically distributed random vectors and each tree casts a unit vote for the most popular class at input x. (Source)

Each tree depends on a random vector that is sampled independently, and all trees have the same distribution. The generalization error converges as the number of trees increases. In its splitting criterion, Random Forest searches for the best feature among a random subset of features, and we can also compute variable importance and do feature selection accordingly. The trees can be grown using the bagging technique, where observations are randomly selected (with replacement) from the training set. The other method is random split selection, where a random split is selected from the K best splits at each node.
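The two sources of randomness described here can be sketched as follows: a bootstrap sample of row indices (standard bagging draws with replacement, so some rows repeat and others are left out) and a random subset of features considered at a split. The function names and the sizes used (10 rows, 30 features, 5 features per split) are hypothetical and chosen only for illustration.

```python
import random


def bootstrap_indices(n_rows, rng):
    """Draw n_rows row indices with replacement (a bootstrap sample)."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def random_feature_subset(n_features, max_features, rng):
    """Pick max_features distinct feature indices to consider at one split."""
    return sorted(rng.sample(range(n_features), max_features))


rng = random.Random(0)  # fixed seed so the sketch is repeatable

rows = bootstrap_indices(10, rng)
features = random_feature_subset(n_features=30, max_features=5, rng=rng)

print("rows used to grow this tree:", rows)
print("features considered at this split:", features)
```

Each tree in the forest would get its own bootstrap sample, and a fresh feature subset would be drawn at every split.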

You can read more about it here.

We will now illustrate 3 sampling methods that can take care of data imbalance.

a) Random under-sampling: Random draws are taken from the non-fraud observations, i.e. the majority class, to match their number with the fraud observations, i.e. the minority class. This means we are throwing away some information from the dataset, which might not always be ideal.

b) Random over-sampling: In this case we do the exact reverse of under-sampling, i.e. we duplicate the minority class (the fraud observations) at random to increase the number of minority observations until we get a balanced dataset. A possible limitation is that we create a lot of duplicates with this method.

c) SMOTE (Synthetic Minority Over-sampling Technique) is another method, which uses synthetic data built with KNN instead of duplicate data. Each minority class example is considered together with its k nearest neighbours. Synthetic examples are then created along the line segments that join the minority class example to any/all of its k nearest neighbours. This is illustrated in Fig 3 below:

With over-sampling alone, the decision region for the minority class becomes smaller, while with SMOTE we can create larger decision regions, thereby improving the chance of capturing the minority class.

One possible limitation is that if the minority class, i.e. the fraudulent observations, is spread throughout the data and not distinct, then using nearest neighbours to create more fraud cases introduces noise into the data, and this can lead to mis-classification.
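The three sampling methods above can be sketched in plain Python on toy 2-D points. This is a simplified illustration under stated assumptions, not the imbalanced-learn implementation: the function names are hypothetical, the data is randomly generated, and the SMOTE-like function only shows the core idea of interpolating between a minority point and one of its k nearest minority neighbours.

```python
import random


def random_under_sample(majority, minority, rng):
    """Keep a random subset of the majority class, as large as the minority class."""
    return rng.sample(majority, len(minority)), list(minority)


def random_over_sample(majority, minority, rng):
    """Duplicate minority rows at random until both classes have the same size."""
    extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    return list(majority), list(minority) + extra


def smote_like(minority, n_new, k, rng):
    """Create n_new synthetic points on segments joining minority neighbours."""
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the base point (squared distance).
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)),
        )[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to neighbour
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(base, other)))
    return synthetic


rng = random.Random(0)
# Toy data: 100 majority points around (0, 0), 5 minority points around (3, 3).
majority = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(100)]
minority = [(rng.gauss(3, 0.5), rng.gauss(3, 0.5)) for _ in range(5)]

under_maj, under_min = random_under_sample(majority, minority, rng)
over_maj, over_min = random_over_sample(majority, minority, rng)
synthetic = smote_like(minority, n_new=95, k=3, rng=rng)

print("under-sampling:", len(under_maj), "vs", len(under_min))
print("over-sampling: ", len(over_maj), "vs", len(over_min))
print("SMOTE-like:    ", len(majority), "vs", len(minority) + len(synthetic))
```

Over-sampling only ever repeats the original minority points, whereas the SMOTE-like points are new and lie between existing minority points, which is what enlarges the minority decision region.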

Some of the metrics that are useful for judging the performance of a model are listed below. These metrics show how accurately the model is able to predict/classify the target variable:

· TP (True positive)/TN (True negative) are the cases of correct predictions, i.e. predicting fraud cases as fraud (TP) and predicting non-fraud cases as non-fraud (TN)

· FP (False positive) are those cases that are actually non-fraud but that the model predicts as fraud

· FN (False negative) are those cases that are actually fraud but that the model predicts as non-fraud

Precision = TP / (TP + FP): Precision measures how accurately the model is able to capture fraud, i.e. out of all the predicted fraud cases, how many actually turned out to be fraud.

Recall = TP / (TP + FN): Recall measures, out of all the actual fraud cases, how many the model could predict correctly as fraud. This is an important metric here.

Accuracy = (TP + TN) / (TP + FP + FN + TN): Measures how many observations, from the majority as well as the minority class, could be classified correctly.

F-score = 2 * TP / (2 * TP + FP + FN) = 2 * Precision * Recall / (Precision + Recall): This is a balance between precision and recall. Note that precision and recall usually trade off against each other, hence the F-score is a good measure for striking a balance between the two.
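The formulas above translate directly into code. The sketch below uses hypothetical confusion-matrix counts (they are not results from this article) and also checks that the two forms of the F-score agree.

```python
def precision(tp, fp):
    """TP / (TP + FP); defined as 0.0 when nothing was predicted as fraud."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """TP / (TP + FN); defined as 0.0 when there are no actual fraud cases."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def accuracy(tp, tn, fp, fn):
    """(TP + TN) / (TP + FP + FN + TN)."""
    return (tp + tn) / (tp + fp + fn + tn)


def f_score_from_counts(tp, fp, fn):
    """2 * TP / (2 * TP + FP + FN)."""
    return 2 * tp / (2 * tp + fp + fn)


def f_score_from_pr(p, r):
    """2 * Precision * Recall / (Precision + Recall)."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical counts for illustration only.
tp, fp, fn, tn = 80, 20, 20, 9_880

p = precision(tp, fp)
r = recall(tp, fn)
acc = accuracy(tp, tn, fp, fn)
f1_counts = f_score_from_counts(tp, fp, fn)
f1_pr = f_score_from_pr(p, r)

print(f"precision={p:.3f} recall={r:.3f} accuracy={acc:.3f} F-score={f1_counts:.3f}")
```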

First, we will train the random forest model with some default settings. Please note that optimizing the model with feature selection or cross-validation has been kept out of scope here for the sake of simplicity. After that, we train the model using under-sampling, over-sampling and then SMOTE. The table below shows the confusion matrix along with the precision, recall and accuracy metrics for each method.

a) No sampling result interpretation: Without any sampling we are able to capture 76 fraudulent transactions. Though the overall accuracy is 97%, the recall is 75%. This means that there are quite a few fraudulent transactions that our model is not able to capture.

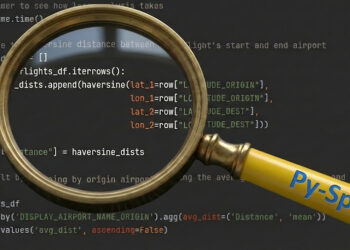

Below is the code that can be used:

# Training the model (assumes x_train, y_train, x_test and y_test already hold the train/test split)

from sklearn.ensemble import RandomForestClassifier

classifier = RandomForestClassifier(n_estimators=10, criterion='entropy', random_state=0)

classifier.fit(x_train, y_train)

# Predict Y on the test set

y_pred = classifier.predict(x_test)

# Obtain the results from the classification report and confusion matrix

from sklearn.metrics import classification_report, confusion_matrix

print('Classification report:\n', classification_report(y_test, y_pred))

conf_mat = confusion_matrix(y_true=y_test, y_pred=y_pred)

print('Confusion matrix:\n', conf_mat)

b) Under-sampling result interpretation: With under-sampling, though the model is able to capture 90 fraud cases with a significant improvement in recall, the accuracy and precision fall drastically. This is because the false positives have increased enormously and the model is penalizing a lot of genuine transactions.

Under-sampling code snippet:

# These are the sampler and the pipeline module we need from imblearn

from imblearn.under_sampling import RandomUnderSampler

from imblearn.pipeline import Pipeline

# Define which resampling method and which ML model to use in the pipeline

resampling = RandomUnderSampler()

model = RandomForestClassifier(n_estimators=10, criterion='entropy', random_state=0)

# Define the pipeline, and combine the sampling method with the RF model

pipeline = Pipeline([('RandomUnderSampler', resampling), ('RF', model)])

pipeline.fit(x_train, y_train)

predicted = pipeline.predict(x_test)

# Obtain the results from the classification report and confusion matrix

print('Classification report:\n', classification_report(y_test, predicted))

conf_mat = confusion_matrix(y_true=y_test, y_pred=predicted)

print('Confusion matrix:\n', conf_mat)

c) Over-sampling result interpretation: The over-sampling method has the highest precision and accuracy, and the recall is also good at 81%. We are able to capture 6 more fraud cases than without sampling, and the number of false positives is pretty low as well. Overall, across all the metrics, this is a good model.

Over-sampling code snippet:

# This is the sampler we need from imblearn (Pipeline is imported as in the under-sampling snippet)

from imblearn.over_sampling import RandomOverSampler

# Define which resampling method and which ML model to use in the pipeline

resampling = RandomOverSampler()

model = RandomForestClassifier(n_estimators=10, criterion='entropy', random_state=0)

# Define the pipeline, and combine the sampling method with the RF model

pipeline = Pipeline([('RandomOverSampler', resampling), ('RF', model)])

pipeline.fit(x_train, y_train)

predicted = pipeline.predict(x_test)

# Obtain the results from the classification report and confusion matrix

print('Classification report:\n', classification_report(y_test, predicted))

conf_mat = confusion_matrix(y_true=y_test, y_pred=predicted)

print('Confusion matrix:\n', conf_mat)

d) SMOTE result interpretation: SMOTE further improves on the over-sampling method, with 3 more frauds caught in the net, and though the false positives increase a bit, the recall is pretty healthy at 84%.

SMOTE code snippet:

# This is the sampler we need from imblearn (Pipeline is imported as in the under-sampling snippet)

from imblearn.over_sampling import SMOTE

# Define which resampling method and which ML model to use in the pipeline

resampling = SMOTE(sampling_strategy='auto', random_state=0)

model = RandomForestClassifier(n_estimators=10, criterion='entropy', random_state=0)

# Define the pipeline, tell it to combine SMOTE with the RF model

pipeline = Pipeline([('SMOTE', resampling), ('RF', model)])

pipeline.fit(x_train, y_train)

predicted = pipeline.predict(x_test)

# Obtain the results from the classification report and confusion matrix

print('Classification report:\n', classification_report(y_test, predicted))

conf_mat = confusion_matrix(y_true=y_test, y_pred=predicted)

print('Confusion matrix:\n', conf_mat)

In our use case of fraud detection, the single most important metric is recall. This is because banks/financial institutions are more concerned about catching most of the fraud cases: fraud is expensive and they might lose a lot of money over it. Hence, even if there are a few false positives, i.e. genuine customers flagged as fraud, it might not be too cumbersome, because this only means blocking some transactions. However, blocking too many genuine transactions is also not a feasible solution. Hence, depending on the risk appetite of the financial institution, we can go with either the simple over-sampling method or SMOTE. We can also tune the parameters of the model using grid search to further improve the results.

For details on the code, refer to this link on GitHub.

References:

[1] Mythili Krishnan, Madhan K. Srinivasan, Credit Card Fraud Detection: An Exploration of Different Sampling Methods to Solve the Class Imbalance Problem (2022), ResearchGate

[1] Bartosz Krawczyk, Learning from imbalanced data: open challenges and future directions (2016), Springer

[2] Nitesh V. Chawla, Kevin W. Bowyer, Lawrence O. Hall and W. Philip Kegelmeyer, SMOTE: Synthetic Minority Over-sampling Technique (2002), Journal of Artificial Intelligence Research

[3] Leo Breiman, Random Forests (2001), stat.berkeley.edu

[4] Jeremy Jordan, Learning from imbalanced data (2018)