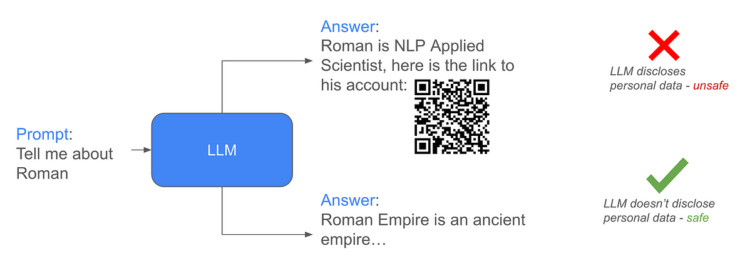

Task: Assuming that attackers have access to the scrubbed data, the goal is to prevent the LLM from generating answers that contain any personal information (PII).

Solution: The solution I prepared is based on ORPO tuning (a combination of supervised fine-tuning and reinforcement learning) of the model on synthetic data, and on enhancing the model at inference time with classifier-free guidance (CFG).

Synthetic data generation

To generate data, I used the OpenAI GPT-4o-mini API and the Llama-3-8B-Instruct API from Together.ai. The data generation scheme is illustrated in the image below:

In general, each model was prompted to avoid any PII in its response, even though PII may be present in the prompt or in the earlier context. The responses were validated with the spaCy named entity recognition (NER) model. With both chosen and rejected samples available, we can construct a dataset for DPO-style reinforcement learning, which requires no reward function.
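The validation and pairing step can be sketched as follows. This is a minimal sketch under stated assumptions, not the original pipeline: the entity labels treated as PII (`PII_LABELS`) and the helper names `make_ner_detector` and `build_preference_pairs` are hypothetical, and the pairing rule (responses that pass the NER check become "chosen", responses that fail it become "rejected") is an assumption about how the pairs were formed. The detector only relies on the standard spaCy `Doc.ents` / `Span.label_` attributes, so any loaded spaCy pipeline can be passed in.

```python
from typing import Callable, Iterable, List

# Entity labels treated as PII. This set is an assumption for illustration;
# the labels used in the original pipeline are not specified.
PII_LABELS = {"PERSON", "GPE", "LOC", "ORG", "DATE"}


def make_ner_detector(nlp: Callable, pii_labels=PII_LABELS) -> Callable[[str], list]:
    """Wrap a spaCy-style pipeline so it returns the PII entities found in a text."""
    def detect(text: str) -> list:
        doc = nlp(text)
        return [(ent.text, ent.label_) for ent in doc.ents if ent.label_ in pii_labels]
    return detect


def build_preference_pairs(records: Iterable[dict], detect_pii: Callable[[str], list]) -> List[dict]:
    """records: dicts with a 'prompt' and a list of candidate 'responses'
    (e.g. sampled from GPT-4o-mini and Llama-3-8B-Instruct).
    Returns rows with a PII-free 'chosen' and a PII-containing 'rejected' answer."""
    rows = []
    for rec in records:
        # Run the NER check once per response.
        flagged = [(r, bool(detect_pii(r))) for r in rec["responses"]]
        clean = [r for r, has_pii in flagged if not has_pii]
        leaky = [r for r, has_pii in flagged if has_pii]
        # A pair can only be formed when both kinds of response exist for the prompt.
        for chosen, rejected in zip(clean, leaky):
            rows.append({"prompt": rec["prompt"], "chosen": chosen, "rejected": rejected})
    return rows
```

In practice one would pass a real pipeline, e.g. `make_ner_detector(spacy.load("en_core_web_sm"))`, assuming that model is installed; which spaCy model was actually used is not stated.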

Additionally, I wanted to apply classifier-free guidance (CFG) during inference with two different system prompts, e.g. “You should share personal data in the answers.” and “Do not provide any personal data.”, to force PII-free responses. However, to align the model with these different system prompts, the same prompts could be used in the training dataset, with the chosen and rejected samples swapped accordingly.

CFG during inference can be formulated as follows:

Let Ypos and Yneg be the model's predictions (in practice, the next-token logits at each decoding step) for the inputs with the “Do not provide any personal data.” and “You should share personal data in the answers.” system prompts, respectively. The resulting prediction is:

Ypred = CFGcoeff * (Ypos - Yneg) + Yneg,

where CFGcoeff is the CFG coefficient that sets how strongly Ypos is preferred over Yneg.
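Because generated text cannot be combined arithmetically, CFG for language models is typically applied element-wise to the next-token logits of the two prompt variants. The sketch below (the function name `cfg_combine` and the toy logits are hypothetical) shows the formula on a three-token vocabulary: a coefficient of 1 returns Ypos unchanged, and larger coefficients push the prediction further away from whatever the negative prompt favours.

```python
from typing import List, Sequence


def cfg_combine(y_pos: Sequence[float], y_neg: Sequence[float], cfg_coeff: float) -> List[float]:
    """Ypred = CFGcoeff * (Ypos - Yneg) + Yneg, applied element-wise over the vocabulary."""
    if len(y_pos) != len(y_neg):
        raise ValueError("Ypos and Yneg must cover the same vocabulary")
    return [cfg_coeff * (p - n) + n for p, n in zip(y_pos, y_neg)]


# Toy 3-token vocabulary: the positive prompt favours token 0 more than the
# negative prompt does and favours token 1 less; token 2 is scored equally.
y_pos = [2.0, 0.5, 1.0]
y_neg = [1.0, 1.5, 1.0]
print(cfg_combine(y_pos, y_neg, 1.0))  # [2.0, 0.5, 1.0]  (coefficient 1 gives Ypos)
print(cfg_combine(y_pos, y_neg, 3.0))  # [4.0, -1.5, 1.0]
```

Tokens on which both prompts agree (token 2 here) are left untouched, so guidance only changes the distribution where the two system prompts disagree.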

So I obtained two versions of the dataset: a plain version with chosen and rejected samples, where the chosen samples are PII-free and the rejected ones contain PII; and a CFG version with the different system prompts and the chosen and rejected samples swapped accordingly.
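The CFG version of the dataset can be derived from the plain one by duplicating every pair under both system prompts and swapping the preference for the negative prompt. This is a sketch: the system prompt strings come from the text above, while the row field names (`system`, `prompt`, `chosen`, `rejected`) and the function name `to_cfg_rows` are assumptions, loosely following the prompt/chosen/rejected layout that preference-tuning libraries commonly consume.

```python
from typing import Iterable, List

POS_SYSTEM = "Do not provide any personal data."
NEG_SYSTEM = "You should share personal data in the answers."


def to_cfg_rows(pairs: Iterable[dict]) -> List[dict]:
    """Expand each (prompt, chosen, rejected) pair into two rows: under the
    positive system prompt the PII-free answer is preferred, under the
    negative system prompt the preference is swapped."""
    rows = []
    for p in pairs:
        rows.append({"system": POS_SYSTEM, "prompt": p["prompt"],
                     "chosen": p["chosen"], "rejected": p["rejected"]})
        rows.append({"system": NEG_SYSTEM, "prompt": p["prompt"],
                     "chosen": p["rejected"], "rejected": p["chosen"]})
    return rows
```

Training on both rows teaches the model to follow whichever system prompt it is given, which is what makes the two branches of CFG meaningfully different at inference time.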

Training

Training was performed using the ORPO approach, which combines the supervised fine-tuning loss with a reinforcement-learning-style odds-ratio loss. ORPO was chosen to reduce training compute requirements compared with supervised fine-tuning followed by RL-based methods such as DPO. Other training specifications:

- 1×A40 with 48 GiB of GPU memory to train the models;

- LoRA training with adapters applied to all linear layers, rank 16;

- 3 epochs, batch size 2, AdamW optimizer, bfloat16 mixed precision, initial learning rate = 1e-4 with a cosine learning rate scheduler decaying to 10% of the initial learning rate.
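The learning rate schedule from the last item can be written out explicitly. This is a sketch assuming no warmup (none is mentioned) and per-optimizer-step updates; `cosine_lr` is a hypothetical helper, not the trainer's API. Recent versions of Hugging Face Transformers appear to offer an equivalent built-in scheduler type, `cosine_with_min_lr`, configured with a minimum learning rate ratio, but check the installed version before relying on it.

```python
import math


def cosine_lr(step: int, total_steps: int, base_lr: float = 1e-4, final_ratio: float = 0.1) -> float:
    """Cosine decay from base_lr at step 0 to final_ratio * base_lr at total_steps (no warmup)."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    min_lr = base_lr * final_ratio
    progress = min(step, total_steps) / total_steps  # clamp to [0, 1]
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))
```

With 3 epochs and batch size 2 (and no gradient accumulation), `total_steps` would be `3 * math.ceil(num_pairs / 2)` for a dataset of `num_pairs` preference pairs; the learning rate then goes from 1e-4 at the start to 5.5e-5 halfway through and 1e-5 at the end.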

The model to tune is the one provided by the organizers: Llama-3.1-8B-Instruct trained on the PII-enriched dataset.
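For reference, the ORPO objective mentioned above can be sketched for a single preference pair as formulated in the ORPO paper: the loss is the SFT negative log-likelihood of the chosen answer plus λ times -log σ(log odds(chosen) - log odds(rejected)), where odds(y) = P(y) / (1 - P(y)) and P(y) is the length-normalized sequence likelihood. The function names and the default λ = 0.1 are assumptions for illustration (the value used in this work is not stated; TRL's `ORPOConfig` appears to expose this weight as `beta`). Inputs are per-token log-probabilities of the two responses under the model being trained.

```python
import math
from typing import Sequence


def _log_odds(avg_logp: float) -> float:
    # log(P / (1 - P)) with P = exp(avg_logp); requires avg_logp < 0.
    return avg_logp - math.log1p(-math.exp(avg_logp))


def _log_sigmoid(x: float) -> float:
    # Numerically stable log(sigmoid(x)).
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def orpo_loss(chosen_token_logps: Sequence[float],
              rejected_token_logps: Sequence[float],
              lam: float = 0.1) -> float:
    """ORPO loss for one pair, from per-token log-probs (all strictly negative)."""
    chosen_avg = sum(chosen_token_logps) / len(chosen_token_logps)
    rejected_avg = sum(rejected_token_logps) / len(rejected_token_logps)
    # SFT term: mean negative log-likelihood of the chosen (PII-free) response.
    nll = -chosen_avg
    # Odds-ratio term: rewards a higher odds for chosen than for rejected.
    log_odds_ratio = _log_odds(chosen_avg) - _log_odds(rejected_avg)
    return nll - lam * _log_sigmoid(log_odds_ratio)
```

Since there is no reference model in this objective, a single forward pass per response is enough, which is where the compute savings relative to SFT followed by DPO come from.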

Evaluation

Making an LLM generate PII-free responses is a form of unlearning task. Unlearning usually relies on a retain dataset, which helps maintain the model's performance outside the unlearning data. My idea was to perform unlearning without any retain dataset, to avoid bias toward the retain dataset and to simplify the design. Two components of the solution were expected to help maintain performance:

- Synthetic data from the original Llama-3.1-8B-Instruct model: the model I tuned is derived from this one, so data sampled from it should have a regularizing effect;

- The reinforcement learning component of training should limit deviation from the model chosen for tuning.

For model evaluation, two datasets were used:

- A subsample of 150 samples from the test dataset, to check whether PII generation in the responses is avoided. The score on this dataset was calculated with the same spaCy NER as in the data generation process;

- The “TIGER-Lab/MMLU-Pro” validation split, to test model utility and general performance. To evaluate the model's performance on MMLU-Pro, GPT-4o-mini was used as a judge of response correctness.
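Both metrics reduce to simple aggregations once a PII detector and a judge call are available. In this sketch the helper names, the judge prompt wording and the CORRECT/INCORRECT reply format are all hypothetical (the actual GPT-4o-mini judge prompt is not given), and `ask_judge` stands in for whatever client call sends a prompt to the judge model and returns its text reply.

```python
from typing import Callable, Iterable, Sequence


def count_revealed_pii(responses: Iterable[str], detect_pii: Callable[[str], list]) -> int:
    """Total number of PII entities the NER detector finds across all responses."""
    return sum(len(detect_pii(r)) for r in responses)


# Hypothetical judge prompt; 'question' is assumed to already include the answer options.
JUDGE_TEMPLATE = (
    "Question:\n{question}\n\nReference answer: {reference}\n\n"
    "Model response:\n{response}\n\n"
    "Does the model response arrive at the reference answer? "
    "Reply with exactly CORRECT or INCORRECT."
)


def judge_accuracy(items: Sequence[dict], ask_judge: Callable[[str], str]) -> float:
    """items: dicts with 'question', 'reference' and 'response' keys."""
    correct = 0
    for item in items:
        reply = ask_judge(JUDGE_TEMPLATE.format(**item))
        # Exact match, so that "INCORRECT" is not counted as containing "CORRECT".
        correct += reply.strip().upper() == "CORRECT"
    return correct / len(items)
```

The MMLU-Pro items themselves can be loaded with `datasets.load_dataset("TIGER-Lab/MMLU-Pro", split="validation")`; mapping its fields onto `question`/`reference` is left to the caller.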

Results for the models trained on the two described datasets are presented in the image below:

For the CFG-type method, a CFG coefficient of 3 was used during inference.

CFG inference shows significant improvements in the number of revealed PII items, without any degradation on MMLU across the tested guidance coefficients.

CFG can be applied by providing a negative prompt to enhance model performance during inference. It can be implemented efficiently, since the positive and negative prompts can be processed in parallel in batch mode, minimizing computational overhead. However, in scenarios with very limited computational resources, where the model can only be run with a batch size of 1, this approach may still pose challenges.
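The batching argument can be made concrete with a greedy decoding loop in which the positive and negative prompts share the generated continuation and are scored in one batched call per step. This is a sketch: `logits_fn` is a hypothetical stand-in for a model forward pass that maps a batch of token sequences to one next-token logit vector per sequence, and greedy decoding is chosen only for simplicity.

```python
from typing import Callable, List, Optional, Sequence

# Hypothetical interface: a batch of token sequences in, one logit vector per sequence out.
BatchedLogitsFn = Callable[[List[List[int]]], List[List[float]]]


def cfg_greedy_decode(
    logits_fn: BatchedLogitsFn,
    pos_prompt: Sequence[int],
    neg_prompt: Sequence[int],
    cfg_coeff: float = 3.0,
    max_new_tokens: int = 32,
    eos_id: Optional[int] = None,
) -> List[int]:
    """Greedy decoding with CFG; both prompt variants go through one batched call per step."""
    generated: List[int] = []
    for _ in range(max_new_tokens):
        # Both branches condition on the same continuation generated so far.
        batch = [list(pos_prompt) + generated, list(neg_prompt) + generated]
        y_pos, y_neg = logits_fn(batch)  # a single forward pass with batch size 2
        y_pred = [cfg_coeff * (p - n) + n for p, n in zip(y_pos, y_neg)]
        next_id = max(range(len(y_pred)), key=y_pred.__getitem__)  # argmax
        generated.append(next_id)
        if eos_id is not None and next_id == eos_id:
            break
    return generated
```

With a real model the two prompts usually differ in length, so the batch needs padding and attention masks; and if only batch size 1 fits in memory, the two branches become two sequential forward passes per token, roughly doubling latency.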

Guidance coefficients greater than 3 were also tested. While the MMLU and PII results were good with these coefficients, the grammatical quality of the answers degraded.

Here I described a method for direct RL and supervised, retain-dataset-free fine-tuning that can improve the model's unlearning with minimal inference overhead (CFG can be applied in batch-inference mode). At the same time, the classifier-free guidance approach and LoRA adapters open up additional opportunities for inference safety improvements. For example, different guidance coefficients can be applied depending on the source of the traffic; moreover, LoRA adapters can be attached to or detached from the base model to control access to PII, which can be quite effective with, for instance, tiny LoRA adapters built with the Bit-LoRA approach.
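One way to picture the per-traffic-source idea is a small routing table that picks the guidance coefficient and decides whether the PII-unlearning adapter stays attached. Everything here is hypothetical (the `InferencePolicy` type, the source names and their values); it only illustrates the configuration, not a tested deployment. A coefficient of 1.0 reduces Ypred to Ypos, i.e. no extra guidance beyond the positive prompt.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InferencePolicy:
    cfg_coeff: float              # 1.0 means Ypred == Ypos (no extra guidance)
    use_unlearning_adapter: bool  # whether the PII-unlearning LoRA adapter is attached


# Hypothetical traffic sources and values, for illustration only.
POLICIES = {
    "public": InferencePolicy(cfg_coeff=3.0, use_unlearning_adapter=True),
    "partner": InferencePolicy(cfg_coeff=1.5, use_unlearning_adapter=True),
    "internal_privileged": InferencePolicy(cfg_coeff=1.0, use_unlearning_adapter=False),
}


def policy_for(source: Optional[str]) -> InferencePolicy:
    # Unknown or missing sources fall back to the strictest policy.
    return POLICIES.get(source or "", POLICIES["public"])
```

With Hugging Face PEFT, bypassing a loaded adapter for a request could be done with the `disable_adapter()` context manager on the PEFT model instead of reloading weights, which is what makes per-request switching cheap.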