A Gentle Introduction to Batch Normalization

Image by Editor | ChatGPT

Introduction

Deep neural networks have evolved drastically over the years, overcoming common challenges that arise when training these complex models. This evolution has enabled them to solve increasingly difficult problems effectively.

One of the mechanisms that has proven especially influential in the advancement of neural network-based models is batch normalization. This article provides a gentle introduction to this strategy, which has become a standard in many modern architectures, helping to improve model performance by stabilizing training, speeding up convergence, and more.

How and Why Was Batch Normalization Born?

Batch normalization is roughly 10 years old. It was originally proposed by Ioffe and Szegedy in their paper Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift.

The motivation for its creation stemmed from several challenges, including slow training processes and saturation issues like exploding and vanishing gradients. One particular challenge highlighted in the original paper is internal covariate shift: in simple terms, the distribution of inputs to each layer of neurons keeps changing across training iterations, largely because the learnable parameters (connection weights) in the previous layers are being updated throughout the entire training process. These distribution shifts can trigger a sort of "chicken and egg" problem, as they force the network to keep readjusting itself, often leading to unduly slow and unstable training.

How Does it Work?

In response to the aforementioned issue, batch normalization was proposed as a method that normalizes the inputs to layers in a neural network, helping stabilize the training process as it progresses.

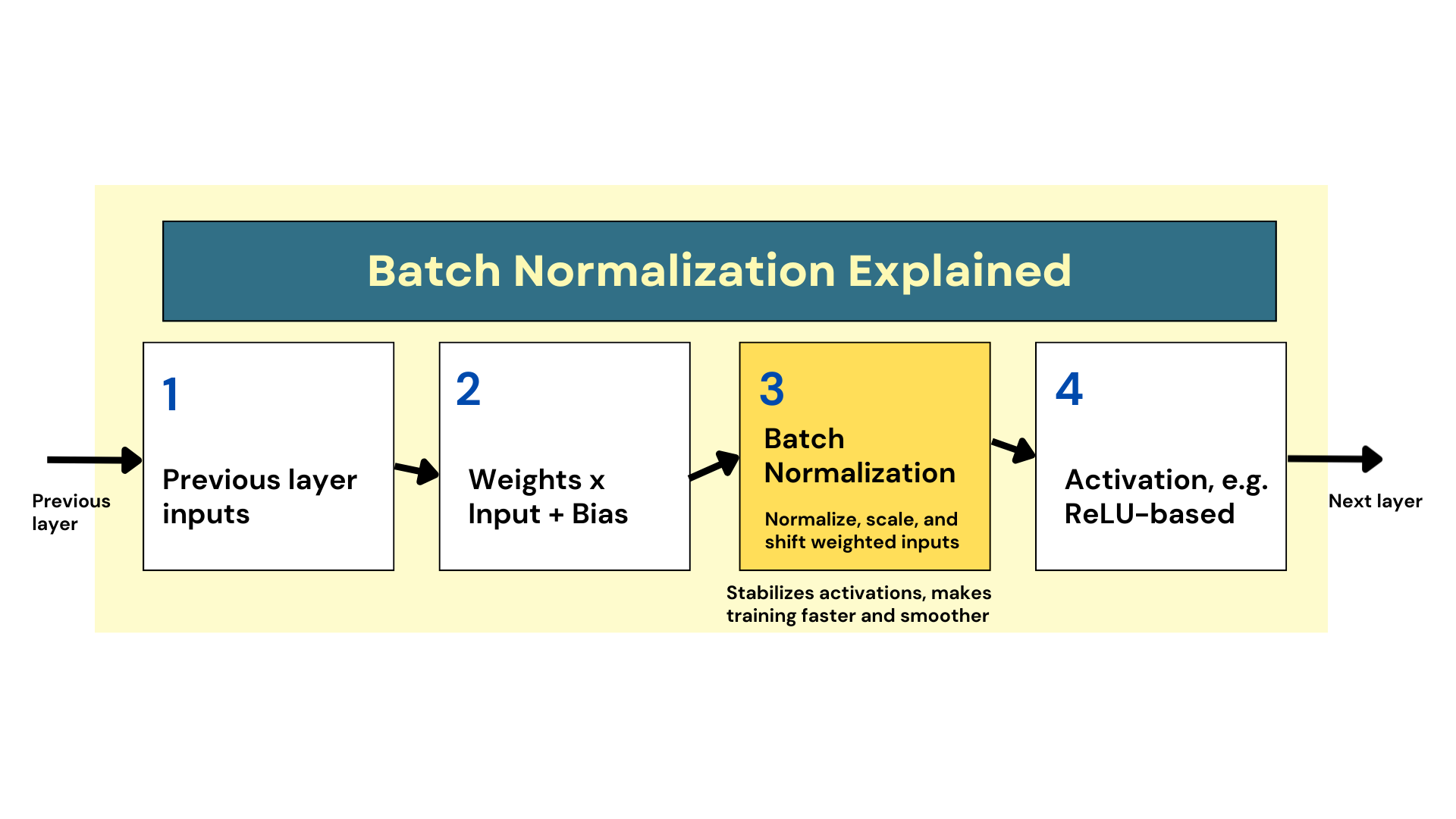

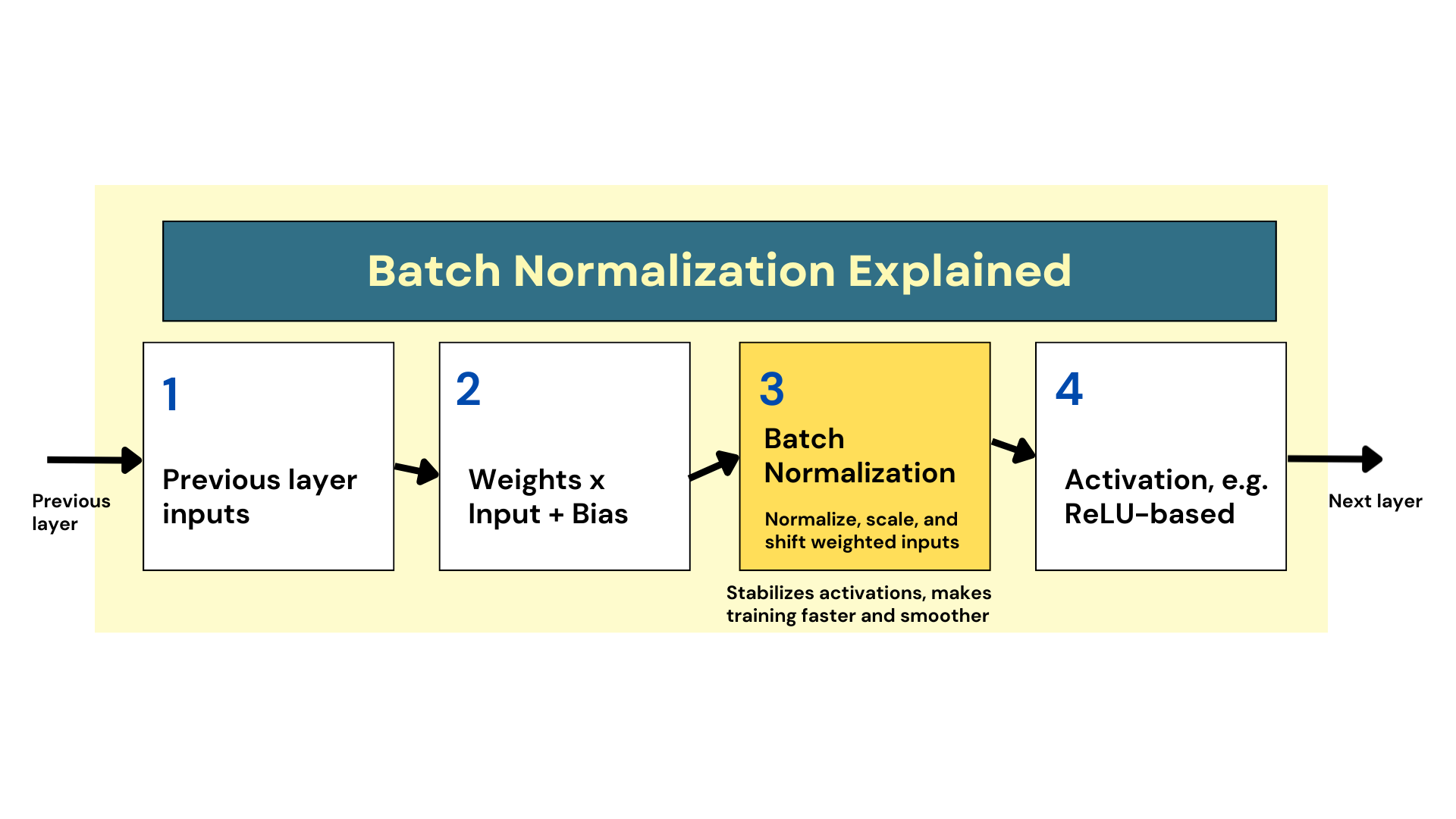

In practice, batch normalization involves introducing an additional normalization step before the assigned activation function is applied to the weighted inputs in such layers, as shown in the diagram below.

How Batch Normalization Works

Image by Author

In its simplest form, the mechanism consists of zero-centering, scaling, and shifting the inputs so that values stay within a more consistent range. This simple idea helps the model learn an optimal scale and mean for inputs at the layer level. Consequently, gradients that flow backward to update weights during backpropagation do so more smoothly, reducing side effects like sensitivity to the weight initialization method, e.g., He initialization. Most importantly, this mechanism has proven to facilitate faster and more reliable training.
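To make the zero-centering, scaling, and shifting steps concrete, here is a minimal sketch of the computation for a single mini-batch, written in plain Python so it runs without extra dependencies. The function name `batch_norm_forward`, the parameter names `gamma` (learned scale) and `beta` (learned shift), the small constant `eps`, and the toy data are all illustrative assumptions, not part of the Keras example later in this article. A real layer also tracks running statistics for use at inference time, which this sketch leaves out.

```python
import math

def batch_norm_forward(batch, gamma, beta, eps=1e-5):
    """Normalize a mini-batch feature by feature, then scale and shift.

    batch: list of samples, each a list of feature values
    gamma, beta: per-feature scale and shift (learned during training)
    eps: small constant that avoids division by zero
    """
    n = len(batch)
    num_features = len(batch[0])
    out = [[0.0] * num_features for _ in range(n)]
    for j in range(num_features):
        column = [sample[j] for sample in batch]
        mean = sum(column) / n                           # batch mean
        var = sum((x - mean) ** 2 for x in column) / n   # batch (biased) variance
        for i, x in enumerate(column):
            x_hat = (x - mean) / math.sqrt(var + eps)    # zero-center and scale
            out[i][j] = gamma[j] * x_hat + beta[j]       # learned scale and shift
    return out

# Toy mini-batch: 3 samples, 2 features on very different scales.
batch = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0]]
normalized = batch_norm_forward(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
print(normalized)
```

With `gamma` set to 1 and `beta` set to 0, both features end up with roughly zero mean and unit variance within the batch, despite their different original scales. During training, the network adjusts `gamma` and `beta`, which is how it can learn a suitable scale and mean per layer.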

At this point, two typical questions may arise:

- Why the "batch" in batch normalization?: If you are fairly familiar with the basics of training neural networks, you may know that the training set is partitioned into mini-batches, typically containing 32 or 64 instances each, to speed up and scale the optimization process underlying training. The technique is so named because the mean and variance used to normalize the weighted inputs are not calculated over the entire training set, but rather at the batch level.

- Can it be applied to all layers in a neural network?: Batch normalization is normally applied to the hidden layers, which is where activations can destabilize during training. Since raw inputs are usually normalized beforehand, it is rare to apply batch normalization in the input layer. Likewise, applying it to the output layer is counterproductive, as it may break the assumptions made about the expected range of the output values, for instance in regression neural networks that predict quantities like flight prices or rainfall amounts.
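The answer to the first question can be illustrated with a small sketch: the normalization statistics are computed separately for each mini-batch rather than once over the whole training set. The data values, the batch size of 4, and the helper name `mean_and_variance` are made up for illustration.

```python
def mean_and_variance(values):
    """Return the mean and (biased) variance of a list of numbers."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, var

# Hypothetical weighted inputs of one neuron for 8 training instances.
weighted_inputs = [0.5, 1.5, 2.5, 3.5, 10.0, 12.0, 14.0, 16.0]
batch_size = 4

# Split the data into consecutive mini-batches.
mini_batches = [weighted_inputs[i:i + batch_size]
                for i in range(0, len(weighted_inputs), batch_size)]

# Batch normalization uses these per-batch statistics during training...
batch_stats = [mean_and_variance(b) for b in mini_batches]

# ...not the statistics of the full training set.
full_stats = mean_and_variance(weighted_inputs)

for k, (mean, var) in enumerate(batch_stats):
    print(f"mini-batch {k}: mean={mean:.2f}, variance={var:.2f}")
print(f"full set:     mean={full_stats[0]:.2f}, variance={full_stats[1]:.2f}")
```

The two mini-batches here are deliberately very different so the contrast is visible. In real training, mini-batches are usually drawn after shuffling the data, so their statistics tend to differ less sharply from one another.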

A major positive impact of batch normalization is a strong reduction in the vanishing gradient problem. It also provides more robustness, reduces sensitivity to the chosen weight initialization method, and introduces a regularization effect. This regularization helps combat overfitting, sometimes eliminating the need for other specific techniques like dropout.

How to Implement it in Keras

Keras is a popular Python API on top of TensorFlow used to build neural network models, where designing the architecture is an essential step before training. This example shows how simple it is to add batch normalization to a small neural network to be trained with Keras:

    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, BatchNormalization, Activation
    from tensorflow.keras.optimizers import Adam

    model = Sequential([
        # First hidden layer: weighted inputs -> batch norm -> activation
        Dense(64, input_shape=(20,)),
        BatchNormalization(),
        Activation('relu'),

        # Second hidden layer, same pattern
        Dense(32),
        BatchNormalization(),
        Activation('relu'),

        # Output layer: no batch normalization
        Dense(1, activation='sigmoid')
    ])

    model.compile(optimizer=Adam(),
                  loss='binary_crossentropy',
                  metrics=['accuracy'])

    model.summary()

Introducing this strategy is as simple as adding BatchNormalization() between the layer definition and its associated activation function. The input layer in this example is not explicitly defined, with the first dense layer acting as the first hidden layer that receives pre-normalized raw inputs.

Importantly, note that placing batch normalization before the activation requires us to define each subcomponent of the layer separately, since the activation function can no longer be specified as an argument inside the layer definition, e.g., Dense(32, activation='relu'). Nevertheless, conceptually speaking, the three lines of code can still be interpreted as one neural network layer instead of three, even though Keras and TensorFlow internally manage them as separate sublayers.

Wrapping Up

This article provided a gentle and approachable introduction to batch normalization: a simple yet very effective mechanism that often helps alleviate some common problems found when training neural network models. Simple terms (or at least I tried to!), no heavy math, and for those a bit more tech-savvy, a final (also gentle) example of how to implement it in Python.
