In Part 3.1 we began discussing how STL decomposes time series data into trend, seasonal, and residual components. Because STL is a smoothing-based approach, it needs rough estimates of the trend and seasonality before it can perform any smoothing.

For that, we calculated a rough estimate of the trend using the Centered Moving Averages method. Then, using this initial trend, we calculated the initial seasonality. (The detailed math is discussed in Part 3.1.)

In this part, we implement the LOESS (Locally Estimated Scatterplot Smoothing) method to get the final trend and seasonal components of the time series.

At the end of Part 3.1, we had the following data:

Now that we have the centered seasonal component, the next step is to subtract it from the original time series to get the deseasonalized series.

We now have the series of deseasonalized values, and we know that it contains both the trend and residual components.

Now we apply LOESS (Locally Estimated Scatterplot Smoothing) to this deseasonalized series.

Here, we aim to understand the concept and mathematics behind the LOESS technique. To do this, we take a single data point from the deseasonalized series, implement LOESS step by step, and observe how the value changes.

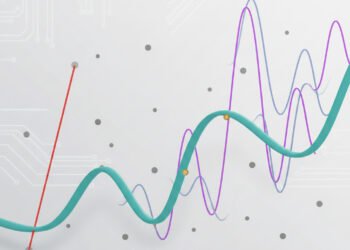

Before getting into the math behind LOESS, let’s try to understand what is actually done in the LOESS smoothing process.

LOESS is similar to Simple Linear Regression (SLR). The only difference is that we assign weights to the points: points nearer to the target point get more weight, and points farther from it get less weight.

We can call it a Weighted Simple Linear Regression.

Here, the target point is the point at which LOESS smoothing is done. In this process, we choose an alpha value (the span, as a fraction of the data) that ranges between 0 and 1.

Mostly we use 0.3 or 0.5 as the alpha value.

For example, let’s say alpha = 0.3. This means 30% of the data points are used in each regression. If we have 100 data points, the 30 points nearest the target are used in the smoothing: roughly 15 before the target point and 15 after it, counting the target point itself.
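To make the span concrete, here is a small sketch that turns alpha into a set of neighboring indices. The function name `loess_window`, the rounding of alpha × n, and the rule that ties go to the earlier index are our own assumptions. Real implementations may round and break ties differently.

```python
def loess_window(n_points: int, target: int, alpha: float) -> list:
    """Return the sorted indices of the q = round(alpha * n_points) points nearest to target.

    Ties in distance are broken toward the earlier index (an assumption of this sketch).
    """
    q = max(2, round(alpha * n_points))
    # Rank every index by its distance from the target; on a tie the earlier index wins
    by_distance = sorted(range(n_points), key=lambda i: (abs(i - target), i))
    return sorted(by_distance[:q])


window = loess_window(100, target=50, alpha=0.3)
print(len(window), window[0], window[-1])  # 30 35 64
```

With 100 points and alpha = 0.3, the window holds 30 indices: the target (50), 15 points before it (35 to 49), and 14 points after it (51 to 64).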

As with Simple Linear Regression, in this smoothing process we fit a line to the data points, but here each point carries a weight.

We weight the data points so that the line adapts to the local behavior of the data instead of being pulled around by distant fluctuations or outliers. This matters because what we are trying to estimate here is the trend component.

So in the LOESS smoothing process, we fit the line that best fits the weighted neighborhood, and from that line we calculate the smoothed value at the target point.

Next, let’s see what is actually done in LOESS smoothing by working through a single point as an example.

Consider 01-08-2010 (August 2010), where the deseasonalized value is 14751.02.

To keep the math behind LOESS easy to follow, let’s use a span of 5 points.

Here, a span of 5 points means we take the points nearest to the target point (01-08-2010), including the target point itself.

To demonstrate LOESS smoothing at August 2010, we therefore use the values from June 2010 to October 2010.

The index values (starting from zero) are taken from the original data.

The first step in LOESS smoothing is to calculate the distances between the target point and the neighboring points.

We calculate these distances from the index values.

After calculating the distances, we find that the maximum distance from the target point is 2.

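The next step in LOESS smoothing is to calculate the tricube weights. LOESS assigns each point a weight w = (1 - (d/d_max)³)³ based on its scaled distance d/d_max.

Here, the tricube weights for the 5 points are [0.00, 0.66, 1.00, 0.66, 0.00]. The target gets the full weight of 1. Its immediate neighbors get (1 - (1/2)³)³ = 0.875³ ≈ 0.6699, shown truncated as 0.66. The two end points sit at the maximum distance, so they get zero weight.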
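The distance and weighting steps can be sketched in a few lines of NumPy. The function name `tricube_weights` is hypothetical. The index positions 5 to 9 for June to October 2010 follow from August 2010 being t = 7 in the original data.

```python
import numpy as np


def tricube_weights(indices, target):
    """Tricube weights w = (1 - (d / d_max)**3)**3 for the points in a LOESS window."""
    d = np.abs(np.asarray(indices, dtype=float) - target)  # distance of each point from the target
    scaled = d / d.max()                                    # the farthest point gets scaled distance 1
    return (1 - scaled**3) ** 3


# June..October 2010 sit at indices 5..9; August 2010 (the target) is index 7
w = tricube_weights([5, 6, 7, 8, 9], target=7)
print(np.round(w, 4))  # weights ≈ 0, 0.6699, 1, 0.6699, 0
```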

Now that we have calculated the tricube weights, the next step is to perform a weighted simple linear regression.

The formulas are the same as in SLR, except that the ordinary averages are replaced by weighted averages.
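For reference, here is one way to write these weighted formulas, in our own notation: $x_i$ are the index values, $y_i$ the deseasonalized values, $w_i$ the tricube weights, and $x_0$ the target index.

$$
\bar{x}_w = \frac{\sum_i w_i x_i}{\sum_i w_i}, \qquad
\bar{y}_w = \frac{\sum_i w_i y_i}{\sum_i w_i}
$$

$$
\hat{\beta}_1 = \frac{\sum_i w_i (x_i - \bar{x}_w)(y_i - \bar{y}_w)}{\sum_i w_i (x_i - \bar{x}_w)^2}, \qquad
\hat{\beta}_0 = \bar{y}_w - \hat{\beta}_1 \bar{x}_w
$$

$$
\hat{y}(x_0) = \hat{\beta}_0 + \hat{\beta}_1 x_0
$$

In our 5-point example, the window and the weights are symmetric around $x_0$, so $\bar{x}_w = x_0$. The smoothed value therefore reduces to the weighted mean $\hat{y}(x_0) = \bar{y}_w$.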

Here’s the full step-by-step math to calculate the LOESS smoothed value at t = 7.

The LOESS trend estimate at August 2010 is 14212.96, which is less than the deseasonalized value of 14751.02.

In our 5-point window, the values of the neighboring months are decreasing, and the August value looks like a sudden jump.

LOESS fits the line that best represents the underlying local trend. In doing so, it smooths out sharp spikes or dips and gives a truer picture of the local behavior of the data.

This is how LOESS calculates the smoothed value for a single data point.
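The single-point calculation can be sketched as a tricube-weighted simple linear regression evaluated at the target. The function name `loess_at` is hypothetical. The neighbor values in the usage lines are made up for illustration: only the August value, 14751.02, comes from this article. The article’s own June, July, September, and October values are not reproduced here, so this sketch does not return 14212.96.

```python
import numpy as np


def loess_at(x, y, x0):
    """LOESS estimate at x0: a tricube-weighted simple linear regression over (x, y).

    x and y hold only the points of the neighborhood around x0.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    d = np.abs(x - x0)
    w = (1 - (d / d.max()) ** 3) ** 3                  # tricube weights
    x_bar = np.sum(w * x) / np.sum(w)                  # weighted mean of x
    y_bar = np.sum(w * y) / np.sum(w)                  # weighted mean of y
    beta1 = np.sum(w * (x - x_bar) * (y - y_bar)) / np.sum(w * (x - x_bar) ** 2)
    beta0 = y_bar - beta1 * x_bar
    return beta0 + beta1 * x0                          # height of the fitted line at x0


# HYPOTHETICAL neighbor values; only the August value (14751.02) comes from the article
months = [5, 6, 7, 8, 9]                                # June..October 2010
values = [15200.0, 14300.0, 14751.02, 13900.0, 13500.0]
print(round(loess_at(months, values, x0=7), 2))
```

With these made-up, decreasing neighbors, the estimate falls below the August value, just as in the article’s calculation. Because the weights are symmetric, it equals the weighted mean of the July, August, and September values.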

For our dataset, when we implement STL decomposition in Python, the alpha value may be between 0.3 and 0.5, depending on the number of points in the dataset. (In statsmodels’ STL, each smoother’s span is set as an odd window length in observations, such as seasonal=13, rather than as a fraction.)

We can also try different alpha values, see which one represents the data best, and choose the appropriate one.

This process is repeated for every point in the data.
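Repeating the local fit at every position gives the smoothed series. The sketch below makes four assumptions:

- the observations are evenly spaced (x = 0, 1, ..., n - 1);
- alpha × n is rounded to get the neighborhood size, with at least 4 points;
- the robustness iterations that fuller LOESS implementations can run are skipped;
- the function name `loess_smooth` is hypothetical.

```python
import numpy as np


def loess_smooth(y, alpha=0.3):
    """Apply LOESS at every point of an evenly spaced series (x = 0, 1, ..., n - 1).

    Each local fit uses the q = round(alpha * n) nearest points (at least 4) and
    tricube weights; no robustness iterations are performed. Needs n >= 4.
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    x = np.arange(n, dtype=float)
    q = min(n, max(4, round(alpha * n)))
    smoothed = np.empty(n)
    for t in range(n):
        # q nearest neighbors of t; the stable sort breaks ties toward the earlier index
        idx = np.argsort(np.abs(x - t), kind="stable")[:q]
        xs, ys = x[idx], y[idx]
        d = np.abs(xs - t)
        w = (1 - (d / d.max()) ** 3) ** 3              # tricube weights
        x_bar = np.sum(w * xs) / np.sum(w)
        y_bar = np.sum(w * ys) / np.sum(w)
        beta1 = np.sum(w * (xs - x_bar) * (ys - y_bar)) / np.sum(w * (xs - x_bar) ** 2)
        smoothed[t] = y_bar + beta1 * (t - x_bar)      # fitted line evaluated at t
    return smoothed


# Synthetic data (not the retail series): a straight-line trend plus noise
rng = np.random.default_rng(0)
noisy = 100 + 2.0 * np.arange(60) + rng.normal(0, 5, size=60)
trend_estimate = loess_smooth(noisy, alpha=0.3)
```

Because every local fit is a straight line, a series that is already a straight line passes through unchanged. That property is a handy sanity check for an implementation like this.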

Once we have the LOESS smoothed trend component, it is subtracted from the original series to isolate the seasonality and noise.

Next, we follow the same LOESS smoothing procedure across the seasonal subseries, such as all Januaries, all Februaries, and so on (as in Part 3.1), to get the LOESS smoothed seasonal component.
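Smoothing the seasonal subseries can be sketched by running LOESS separately on each month’s values. The sketch makes these assumptions:

- the data are monthly, so period = 12;
- each subseries uses a 5-point LOESS window;
- each subseries has at least 4 points;
- the function names are hypothetical.

The full STL procedure also applies a low-pass filter to the smoothed subseries, which this sketch omits.

```python
import numpy as np


def loess_smooth(y, q=5):
    """LOESS (tricube weights, local line) at every point, using the q nearest points."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    if n < 4:
        raise ValueError("each subseries needs at least 4 points in this sketch")
    x = np.arange(n, dtype=float)
    q = min(n, max(4, q))
    out = np.empty(n)
    for t in range(n):
        idx = np.argsort(np.abs(x - t), kind="stable")[:q]   # q nearest neighbors of t
        xs, ys = x[idx], y[idx]
        d = np.abs(xs - t)
        w = (1 - (d / d.max()) ** 3) ** 3                     # tricube weights
        x_bar, y_bar = np.average(xs, weights=w), np.average(ys, weights=w)
        beta1 = np.sum(w * (xs - x_bar) * (ys - y_bar)) / np.sum(w * (xs - x_bar) ** 2)
        out[t] = y_bar + beta1 * (t - x_bar)
    return out


def smooth_cycle_subseries(detrended, period=12, q=5):
    """Smooth each cycle-subseries (all Januaries, all Februaries, ...) on its own."""
    detrended = np.asarray(detrended, dtype=float)
    seasonal = np.empty_like(detrended)
    for m in range(period):
        positions = np.arange(m, len(detrended), period)     # month m of every year
        seasonal[positions] = loess_smooth(detrended[positions], q)
    return seasonal
```

If every year repeats the same pattern exactly, each subseries is constant, and the smoothed seasonal component equals the input.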

Once we have both the LOESS smoothed trend and seasonal components, we subtract them from the original series to get the residual.

After this, the whole process is repeated to further refine the components. The LOESS smoothed seasonality is subtracted from the original series to find a new LOESS smoothed trend. This new trend is then subtracted from the original series to find a new LOESS smoothed seasonality.

We can call this one iteration. After several iterations (10-15), the three components stabilize with no further change, and STL returns the final trend, seasonal, and residual components.
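The alternating loop described above can be sketched as follows. This is a deliberately simplified STL-style loop, not statsmodels’ implementation. It omits STL’s low-pass filter and robustness weights, and it runs a fixed number of passes instead of testing for convergence. The function and parameter names, and the defaults trend_q=21 and seasonal_q=5, are hypothetical choices for monthly data.

```python
import numpy as np


def loess_smooth(y, q):
    """LOESS (tricube weights, local line) at every point, using the q nearest points."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    x = np.arange(n, dtype=float)
    q = min(n, max(4, q))
    out = np.empty(n)
    for t in range(n):
        idx = np.argsort(np.abs(x - t), kind="stable")[:q]
        xs, ys = x[idx], y[idx]
        d = np.abs(xs - t)
        w = (1 - (d / d.max()) ** 3) ** 3
        x_bar, y_bar = np.average(xs, weights=w), np.average(ys, weights=w)
        beta1 = np.sum(w * (xs - x_bar) * (ys - y_bar)) / np.sum(w * (xs - x_bar) ** 2)
        out[t] = y_bar + beta1 * (t - x_bar)
    return out


def simple_stl(series, period=12, trend_q=21, seasonal_q=5, n_iter=10, initial_seasonal=None):
    """Alternate trend and seasonal LOESS fits for n_iter (>= 1) passes.

    initial_seasonal: optional rough seasonal estimate (e.g. from Part 3.1); zeros if omitted.
    """
    y = np.asarray(series, dtype=float)
    n = len(y)
    seasonal = np.zeros(n) if initial_seasonal is None else np.asarray(initial_seasonal, float)
    for _ in range(n_iter):
        trend = loess_smooth(y - seasonal, trend_q)        # smooth the deseasonalized series
        detrended = y - trend                              # seasonality + noise
        seasonal = np.empty(n)
        for m in range(period):
            pos = np.arange(m, n, period)                  # all Januaries, all Februaries, ...
            seasonal[pos] = loess_smooth(detrended[pos], seasonal_q)
    resid = y - trend - seasonal                           # whatever is left over
    return trend, seasonal, resid


# Synthetic monthly series (not the retail data): trend + yearly cycle + noise
rng = np.random.default_rng(1)
t = np.arange(120)
y = 50 + 0.3 * t + 10 * np.sin(2 * np.pi * t / 12) + rng.normal(0, 1, 120)
trend, seasonal, resid = simple_stl(y)
```

For real analysis, the statsmodels `STL` class is the better choice, because it implements the complete procedure.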

This is what happens when we use the code below to apply STL decomposition to the dataset and get the three components.

```python
import pandas as pd
import matplotlib.pyplot as plt
from statsmodels.tsa.seasonal import STL

# Load the dataset
df = pd.read_csv("C:/RSDSELDN.csv", parse_dates=['Observation_Date'], dayfirst=True)
df.set_index('Observation_Date', inplace=True)
df = df.asfreq('MS')  # Ensure monthly (month-start) frequency

# Extract the time series
series = df['Retail_Sales']

# Apply STL decomposition
stl = STL(series, seasonal=13)
result = stl.fit()

# Plot the STL components
fig, axs = plt.subplots(4, 1, figsize=(10, 8), sharex=True)
axs[0].plot(result.observed, color='sienna')
axs[0].set_title('Observed')
axs[1].plot(result.trend, color='goldenrod')
axs[1].set_title('Trend')
axs[2].plot(result.seasonal, color='darkslategrey')
axs[2].set_title('Seasonal')
axs[3].plot(result.resid, color='rebeccapurple')
axs[3].set_title('Residual')

plt.suptitle('STL Decomposition of Retail Sales', fontsize=16)
plt.tight_layout()
plt.show()
```

Dataset: This blog uses publicly available data from FRED (Federal Reserve Economic Data). The series Advance Retail Sales: Department Stores (RSDSELD) is published by the U.S. Census Bureau and can be used for analysis and publication with appropriate citation.

Official citation:

U.S. Census Bureau, Advance Retail Sales: Department Stores [RSDSELD], retrieved from FRED, Federal Reserve Bank of St. Louis; https://fred.stlouisfed.org/series/RSDSELD, July 7, 2025.

Note: All images, unless otherwise noted, are by the author.

I hope you now have a basic idea of how STL decomposition works, from calculating the initial trend and seasonality to finding the final components using LOESS smoothing.

Next in the series, we discuss ‘Stationarity of a Time Series’ in detail.

Thanks for reading!