Intro

In Computer Science, just like in human cognition, there are different levels of memory:

- Primary Memory (like RAM) is the active short-term memory used for current tasks, reasoning, and decision-making. It holds the information you’re currently working with. It’s fast but volatile, meaning that it loses data when the power is off.

- Secondary Memory (like physical storage) refers to long-term storage of learned knowledge that’s not immediately active in working memory. It’s not always accessed during real-time decision-making but can be retrieved when needed. Therefore, it’s slower but more persistent.

- Tertiary Memory (like a backup of historical data) refers to archival memory, where information is stored for backup purposes and disaster recovery. It’s characterized by high capacity and low cost, but with slower access time. Consequently, it’s rarely used.

AI Agents can leverage all of these types of memory. First, they can use Primary Memory to handle your current question. Then, they can access Secondary Memory to bring in knowledge from recent conversations. And, if needed, they might even retrieve older information from Tertiary Memory.
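To make the lookup order concrete, here is a minimal sketch of a three-tier memory in plain Python. The class name `TieredMemory`, its fields, and the sample data are all hypothetical and only illustrate the idea of falling back from the fastest tier to the slowest one; this is not the code used later in the tutorial.

```python
from dataclasses import dataclass, field


@dataclass
class TieredMemory:
    """Toy three-tier memory (illustrative names, not a real library)."""
    primary: list = field(default_factory=list)    # messages of the current session
    secondary: dict = field(default_factory=dict)  # recent conversations, keyed by topic
    tertiary: dict = field(default_factory=dict)   # older, archived conversations

    def recall(self, topic: str):
        """Return (tier_name, text) from the fastest tier that knows the topic."""
        # 1) look at what is already in the current conversation
        for msg in reversed(self.primary):
            if topic.lower() in msg.lower():
                return "primary", msg
        # 2) fall back to recent conversations
        if topic in self.secondary:
            return "secondary", self.secondary[topic]
        # 3) finally, check the archive
        if topic in self.tertiary:
            return "tertiary", self.tertiary[topic]
        return None, None


# made-up sample data, for illustration only
memory = TieredMemory(
    primary=["user: what is the weather today?"],
    secondary={"birthday": "The user's birthday is in June."},
    tertiary={"first car": "The user's first car was red."},
)
print(memory.recall("weather"))
print(memory.recall("birthday"))
print(memory.recall("first car"))
```

The slower tiers are consulted only when the faster ones have nothing to offer, which mirrors the order described above.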

In this tutorial, I’m going to show how to build an AI Agent with memory across multiple sessions. I will present some useful Python code that can be easily applied in other similar cases (just copy, paste, run) and walk through every line of code with comments so that you can replicate this example (link to the full code at the end of the article).

Setup

Let’s start by setting up Ollama (pip install ollama==0.5.1), a library that allows users to run open-source LLMs locally, without needing cloud-based services, giving more control over data privacy and performance. Since it runs locally, no conversation data leaves your machine.

First of all, you need to download Ollama from the website.

Then, in the terminal of your laptop, use the command to download the selected LLM (for example, ollama pull qwen2.5). I’m going with Alibaba’s Qwen, as it’s both smart and lightweight.

After the download is completed, you can move on to Python and start writing code.

    import ollama

    llm = "qwen2.5"

Let’s test the LLM:

    ## stream the answer chunk by chunk as it is generated
    stream = ollama.generate(model=llm, prompt='''what time is it?''', stream=True)
    for chunk in stream:
        print(chunk['response'], end='', flush=True)

Database

An Agent with multi-session memory is an Artificial Intelligence system that can remember information from one interaction to the next, even if those interactions happen at different times or over separate sessions. For example, a personal assistant AI that remembers your daily schedule and preferences, or a customer support Bot that knows your issue history without needing you to re-explain it each time.

Basically, the Agent needs to access the chat history. Based on how old the past conversations are, this could be classified as Secondary or Tertiary Memory.
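As a small illustration of that classification, the sketch below labels a saved chat by its age. The function name `classify_memory` and the 30-day cutoff are assumptions made for this example; the article does not prescribe a specific threshold.

```python
from datetime import date


def classify_memory(chat_date: date, today: date, recent_days: int = 30) -> str:
    """Label a saved chat by age. The 30-day default is an arbitrary assumption."""
    age_days = (today - chat_date).days
    # recent chats count as Secondary Memory, older ones as Tertiary Memory
    return "secondary" if age_days <= recent_days else "tertiary"


# fixed dates so the example is reproducible
today = date(2025, 6, 30)
print(classify_memory(date(2025, 6, 20), today))
print(classify_memory(date(2024, 1, 5), today))
```

In a real setup the cutoff would depend on how often the Agent is used and how much history is worth searching.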

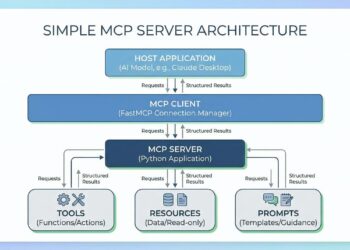

Let’s get to work. We can store conversation data in a vector database, which is well suited to efficiently storing, indexing, and searching unstructured data. One of the most widely used vector dbs is Microsoft’s Azure AI Search, while a popular open-source option is ChromaDB, which is useful, easy, and free.

After a quick pip install chromadb==0.5.23 you can interact with the db using Python in three different ways:

- chromadb.Client() to create a db that stays temporarily in memory without occupying physical space on disk.

- chromadb.PersistentClient(path) to save and load the db from your local machine.

- chromadb.HttpClient(host='localhost', port=8000) to connect to a Chroma server running in client-server mode.

When storing documents in ChromaDB, data are saved as vectors so that one can search with a query-vector to retrieve the closest matching records. Please note that, if not specified otherwise, the default embedding function is a sentence transformer model (all-MiniLM-L6-v2).
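To show what “closest matching records” means, here is a minimal sketch of a nearest-neighbor search over hand-made vectors using only the standard library. The three-dimensional vectors and record ids are made up for illustration (real embeddings have hundreds of dimensions), and cosine similarity is used as one common choice, not necessarily the metric your ChromaDB collection is configured with.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query_vec, records, k=1):
    """Return the ids of the k records most similar to the query vector."""
    ranked = sorted(records,
                    key=lambda r: cosine_similarity(query_vec, r["vector"]),
                    reverse=True)
    return [r["id"] for r in ranked[:k]]


# toy "embeddings", invented for this example
records = [
    {"id": "chat_1", "vector": [0.9, 0.1, 0.0]},
    {"id": "chat_2", "vector": [0.0, 0.8, 0.6]},
    {"id": "chat_3", "vector": [0.1, 0.1, 0.9]},
]
query = [1.0, 0.2, 0.0]
print(nearest(query, records, k=2))
```

A vector database does the same job at scale, using indexes so it does not have to compare the query against every stored record.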

    import chromadb
    from chromadb.utils import embedding_functions

    ## connect to db
    db = chromadb.PersistentClient()

    ## check existing collections
    db.list_collections()

    ## select a collection (it is created if it doesn't exist yet)
    collection_name = "chat_history"
    collection = db.get_or_create_collection(
        name=collection_name,
        embedding_function=embedding_functions.DefaultEmbeddingFunction())

To store your data, first you need to extract the chat and save it as one text document. In Ollama, there are 3 roles in the interaction with an LLM:

- system: used to pass core instructions to the model on how the conversation should proceed (i.e. the main prompt)

- user: used for the user’s questions, and also for memory reinforcement (e.g. “remember that the answer must have a specific format”)

- assistant: the reply from the model (i.e. the final answer)

Ensure that each document has a unique id, which you can generate manually or allow Chroma to auto-generate. One important thing to mention is that you can add additional information as metadata (e.g. title, tags, links). It’s optional but very useful, as metadata enrichment can significantly enhance document retrieval. For instance, here, I’m going to use the LLM to summarize each document into a few keywords.
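As a sketch of the “generate manually” option, the helper below builds a record with a random UUID as id, plus date and time metadata. The function `build_record` is hypothetical; its dictionary keys simply mirror the `ids`, `documents`, and `metadatas` arguments of `collection.add`, and the tags are passed in by hand here rather than produced by the LLM.

```python
import uuid
from datetime import datetime


def build_record(chat_text: str, tags: str) -> dict:
    """Bundle one chat into id + document + metadata (hypothetical helper)."""
    now = datetime.now()
    return {
        "ids": [str(uuid.uuid4())],   # random id, unique without asking the db
        "documents": [chat_text],
        "metadatas": [{"tags": tags,
                       "date": now.strftime("%Y-%m-%d"),
                       "time": now.strftime("%H:%M")}],
    }


record = build_record("user: <<hello>>", "greeting, test, demo")
print(record["metadatas"][0])
```

A UUID stays unique even if records are deleted later, whereas an id derived from the number of stored records can collide in that case. A counter, on the other hand, is easier to read when inspecting the db by hand.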

    from datetime import datetime

    def save_chat(lst_msg, collection):
        print("--- Saving Chat ---")
        ## extract chat: merge all the messages into one text document
        chat = ""
        for m in lst_msg:
            chat += f'{m["role"]}: <<{m["content"]}>>' + '\n\n'
        ## get idx: progressive number based on the records already stored
        idx = str(collection.count() + 1)
        ## generate info: ask the LLM for 3 keywords to use as tags
        p = "Describe the following conversation using only 3 keywords separated by a comma (for example: 'finance, volatility, stocks')."
        tags = ollama.generate(model=llm, prompt=p+"\n"+chat)["response"]
        dic_info = {"tags": tags,
                    "date": datetime.today().strftime("%Y-%m-%d"),
                    "time": datetime.today().strftime("%H:%M")}
        ## write db
        collection.add(documents=[chat], ids=[idx], metadatas=[dic_info])
        print(f"--- Chat num {idx} saved ---", "\n")
        print(dic_info, "\n")
        print(chat)
        print("------------------------")

We need to start and save a chat to see it in action.

Run basic Agent

To start, I shall run a very basic LLM chat (no tools needed) to save the first conversation in the database. During the interaction, I’m going to mention some important information, not included in the LLM knowledge base, that I want the Agent to remember in the next session.

    prompt = "You are an intelligent assistant, provide the best possible answer to user's request."
    messages = [{"role":"system", "content":prompt}]

    while True:
        ## User
        q = input('🙂 >')
        if q == "quit":
            ### save chat before quitting
            save_chat(lst_msg=messages, collection=collection)
            break
        messages.append( {"role":"user", "content":q} )

        ## Model
        agent_res = ollama.chat(model=llm, messages=messages, tools=[])
        res = agent_res["message"]["content"]

        ## Response
        print("👽 >", f"\x1b[1;30m{res}\x1b[0m")
        messages.append( {"role":"assistant", "content":res} )

At the end, the conversation was saved with enriched metadata.

Tools

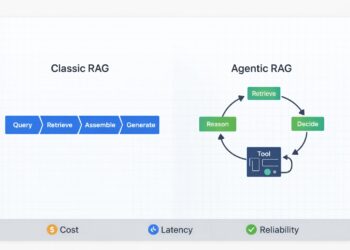

I want the Agent to be able to retrieve information from previous conversations. Therefore, I need to provide it with a Tool to do so. In other words, the Agent must perform Retrieval-Augmented Generation (RAG) over the chat history. RAG is a technique that combines retrieval and generative models by enriching the LLM’s knowledge with facts fetched from external sources (in this case, ChromaDB).
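The core idea of RAG can be sketched without any database: the retrieved text is simply placed in front of the question before the messages are sent to the model. The function `build_augmented_messages` and the wording of the context block are assumptions made for this illustration, not the mechanism used by the Tool in this article.

```python
def build_augmented_messages(question: str, retrieved: list, system_prompt: str) -> list:
    """Prepend retrieved documents to the user question (hypothetical helper)."""
    if retrieved:
        context = "\n".join(f"- {doc}" for doc in retrieved)
    else:
        context = "(nothing retrieved)"
    user_content = f"Context from previous chats:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_content},
    ]


# made-up retrieved document, for illustration only
msgs = build_augmented_messages(
    question="When is my birthday?",
    retrieved=["user: <<my birthday is in June>>"],
    system_prompt="You are an intelligent assistant.",
)
print(msgs[1]["content"])
```

With a Tool-based Agent, the model decides when to fetch the context instead of receiving it on every request.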

    def retrieve_chat(query:str) -> str:
        ## take (at most) the 10 closest documents for the single query text
        res_db = collection.query(query_texts=[query])["documents"][0][0:10]
        ## merge them into one string without line breaks
        history = ' '.join(res_db).replace("\n", " ")
        return history

    tool_retrieve_chat = {'type':'function', 'function':{
      'name': 'retrieve_chat',
      'description': 'If your knowledge is NOT enough to answer the user, you can use this tool to retrieve chats history.',
      'parameters': {'type': 'object',
                     'required': ['query'],
                     'properties': {
                        ## 'string' is the JSON Schema name for text
                        'query': {'type':'string', 'description':'Input the user question or the topic of the current chat'},
    }}}}

After fetching data, the AI must process all the information and give the final answer to the user. Sometimes, it can be more effective to treat the “final answer” as a Tool. For example, if the Agent does multiple actions to generate intermediate results, the final answer can be thought of as the Tool that integrates all of this information into a cohesive response. By designing it this way, you have more customization and control over the results.

    def final_answer(text:str) -> str:
        return text

    tool_final_answer = {'type':'function', 'function':{
      'name': 'final_answer',
      'description': 'Returns a natural language response to the user',
      'parameters': {'type': 'object',
                     'required': ['text'],
                     'properties': {'text': {'type':'string', 'description':'natural language response'}}
    }}}

We’re finally ready to test the Agent and its memory.

    ## map each tool name to the Python function that implements it
    dic_tools = {'retrieve_chat':retrieve_chat,
                 'final_answer':final_answer}

Run Agent with memory

I shall add a couple of utility functions for Tool usage and for running the Agent.

    def use_tool(agent_res:dict, dic_tools:dict) -> dict:
        ## use tool
        if agent_res["message"].tool_calls is not None:
            for tool in agent_res["message"].tool_calls:
                t_name, t_inputs = tool["function"]["name"], tool["function"]["arguments"]
                if f := dic_tools.get(t_name):
                    ### calling tool
                    print('🔧 >', f"\x1b[1;31m{t_name} -> Inputs: {t_inputs}\x1b[0m")
                    ### tool output
                    t_output = f(**tool["function"]["arguments"])
                    print(t_output)
                    ### final res
                    res = t_output
                else:
                    print('🤬 >', f"\x1b[1;31m{t_name} -> NotFound\x1b[0m")
        ## don't use tool
        else:
            res = agent_res["message"].content
            t_name, t_inputs = '', ''
        return {'res':res, 'tool_used':t_name, 'inputs_used':t_inputs}

When the Agent is trying to solve a task, I want to keep track of the Tools that have been used and the results it gets. The model should try each Tool only once, and the iteration shall stop only when the Agent is ready to give the final answer.
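The control flow described above can be sketched with a scripted stand-in for the LLM, so it runs without Ollama. Everything here (`run_loop`, the scripted list of calls, the lambda tools) is hypothetical and only illustrates the two rules: a Tool is removed once it has been used, and the loop ends at `final_answer`.

```python
def run_loop(scripted_calls, tools):
    """scripted_calls: (tool_name, kwargs) pairs that a fake model requests, in order."""
    available = dict(tools)   # copy, so the caller's registry is not mutated
    used, result = [], None
    for name, kwargs in scripted_calls:
        if name not in available:
            # tool already consumed (or unknown): ignore the request
            continue
        result = available[name](**kwargs)
        used.append(name)
        if name == "final_answer":
            break             # stop as soon as the final answer is produced
        available.pop(name)   # each tool may be used only once
    return result, used


# stand-in tools and a made-up sequence of model requests
tools = {
    "retrieve_chat": lambda query: f"(history about {query})",
    "final_answer": lambda text: text,
}
script = [
    ("retrieve_chat", {"query": "birthday"}),
    ("retrieve_chat", {"query": "birthday"}),      # repeated request, ignored
    ("final_answer", {"text": "Your birthday is in June."}),
    ("retrieve_chat", {"query": "never reached"}),
]
answer, used = run_loop(script, tools)
print(used)
print(answer)
```

In the real Agent the next call is chosen by the model rather than read from a list, so the loop also needs error handling and a way to nudge the model toward the final answer.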

    def run_agent(llm, messages, available_tools):
        ## use tools until final answer
        tool_used, local_memory = '', ''
        while tool_used != 'final_answer':
            ### use tool
            try:
                agent_res = ollama.chat(model=llm, messages=messages, tools=[v for v in available_tools.values()])
                dic_res = use_tool(agent_res, dic_tools)
                res, tool_used, inputs_used = dic_res["res"], dic_res["tool_used"], dic_res["inputs_used"]
            ### error
            except Exception as e:
                print("⚠️ >", e)
                res = f"I tried to use {tool_used} but didn't work. I will try something else."
                print("👽 >", f"\x1b[1;30m{res}\x1b[0m")
                messages.append( {"role":"assistant", "content":res} )
            ### update memory
            if tool_used not in ['','final_answer']:
                local_memory += f"\n{res}"
                messages.append( {"role":"user", "content":local_memory} )
                ### remove the used tool (no error if it was already removed)
                available_tools.pop(tool_used, None)
                if len(available_tools) == 1:
                    messages.append( {"role":"user", "content":"now activate the tool final_answer."} )
            ### tools not used
            if tool_used == '':
                break
        return res

Let’s start a new interaction, and this time I want the Agent to activate all the Tools, for retrieving and processing old information.

    prompt = '''
    You are an intelligent assistant, provide the best possible answer to user's request.
    You must return a natural language response.
    When interacting with a user, first you must use the tool 'retrieve_chat' to remember previous chats history.
    '''
    messages = [{"role":"system", "content":prompt}]

    while True:
        ## User
        q = input('🙂 >')
        if q == "quit":
            ### save chat before quitting
            save_chat(lst_msg=messages, collection=collection)
            break
        messages.append( {"role":"user", "content":q} )

        ## Model (tools are re-created at every turn, since run_agent removes the used ones)
        available_tools = {"retrieve_chat":tool_retrieve_chat, "final_answer":tool_final_answer}
        res = run_agent(llm, messages, available_tools)

        ## Response
        print("👽 >", f"\x1b[1;30m{res}\x1b[0m")
        messages.append( {"role":"assistant", "content":res} )

I gave the Agent a task not directly correlated to the topic of the last session. As expected, the Agent activated the Tool and looked into previous chats. Now, it will use the “final answer” to process the information and respond to me.

Conclusion

This article has been a tutorial to demonstrate how to build AI Agents with Multi-Session Memory from scratch using only Ollama. With these building blocks in place, you are already equipped to start developing your own Agents for different use cases.

Full code for this article: GitHub

I hope you enjoyed it! Feel free to contact me for questions and feedback or just to share your interesting projects.


(All images, unless otherwise noted, are by the author)