Whether you’re preparing for interviews or building Machine Learning systems at your job, model compression has become an essential skill. In the era of LLMs, where models are getting larger and larger, the challenges around compressing these models to make them more efficient, smaller, and usable on lightweight machines have never been more relevant.

In this article, I’ll go through four fundamental compression techniques that every ML practitioner should understand and master. I explore pruning, quantization, low-rank factorization, and Knowledge Distillation, each offering unique advantages. I will also add some minimal PyTorch code samples for each of these methods.

I hope you enjoy the article!

Model pruning

Pruning is probably the most intuitive compression technique. The idea is very simple: remove some of the weights of the network, either randomly or by removing the “less important” ones. Of course, when we talk about “removing” weights in the context of neural networks, it means setting the weights to zero.

Structured vs unstructured pruning

Let’s start with a simple heuristic: removing weights smaller than a threshold.

$$ w'_{ij} = \begin{cases} w_{ij} & \text{if } |w_{ij}| \ge \theta_0 \\ 0 & \text{if } |w_{ij}| < \theta_0 \end{cases} $$

Of course, this isn’t ideal because we would need to find the right threshold for our problem! A more practical approach is to remove a specified proportion of weights with the smallest magnitudes (norm) within one layer. There are 2 common ways of implementing pruning in a single layer:

- Structured pruning: remove entire components of the network (e.g. a random row from the weight tensor, or a random channel in a convolutional layer)

- Unstructured pruning: remove individual weights regardless of their positions and of the structure of the tensor

We can also use global pruning with either of the two above methods. This removes the chosen proportion of weights across multiple layers at once, so individual layers can end up with different removal rates depending on their weights.
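To make the difference between per-layer and global pruning concrete, here is a small sketch in plain Python. The two “layers” are just lists of made-up weights, and `prune_smallest` and `sparsity` are helper names I am introducing for illustration; they are not part of any library.

```python
# Toy "layers": plain lists of weights (hypothetical values, for illustration only).
layers = {
    "layer1": [0.9, -0.8, 0.7, -0.6],
    "layer2": [0.05, -0.04, 0.03, 0.95],
}

def prune_smallest(weights, proportion):
    """Zero out the given proportion of weights with the smallest magnitudes."""
    n_prune = int(len(weights) * proportion)
    # Indices sorted by increasing absolute value; the first n_prune get pruned.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:n_prune])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]

def sparsity(weights):
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)

# Per-layer pruning: every layer loses exactly 50% of its weights.
per_layer = {name: prune_smallest(ws, 0.5) for name, ws in layers.items()}

# Global pruning: rank all weights together, then remove 50% overall.
flat = [(name, i, w) for name, ws in layers.items() for i, w in enumerate(ws)]
flat.sort(key=lambda t: abs(t[2]))
n_prune_global = int(len(flat) * 0.5)
global_pruned = {name: list(ws) for name, ws in layers.items()}
for name, i, _ in flat[:n_prune_global]:
    global_pruned[name][i] = 0.0

for name in layers:
    print(name,
          "| per-layer sparsity:", sparsity(per_layer[name]),
          "| global sparsity:", sparsity(global_pruned[name]))
```

With these particular values, global pruning removes most of `layer2` (whose weights are mostly tiny) and very little of `layer1`, even though the overall proportion is the same 50%.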

PyTorch makes this pretty straightforward (by the way, you can find all code snippets in my GitHub repo).

import torch.nn.utils.prune as prune

# 1. Random unstructured pruning (20% of weights at random)
prune.random_unstructured(model.layer, name="weight", amount=0.2)

# 2. L1-norm unstructured pruning (20% of smallest weights)
prune.l1_unstructured(model.layer, name="weight", amount=0.2)

# 3. Global unstructured pruning (40% of all weights by L1 norm across layers)
prune.global_unstructured(
    [(model.layer1, "weight"), (model.layer2, "weight")],
    pruning_method=prune.L1Unstructured,
    amount=0.4,
)

# 4. Structured pruning (remove 30% of rows with lowest L2 norm)
prune.ln_structured(model.layer, name="weight", amount=0.3, n=2, dim=0)

Note: if you have taken statistics classes, you probably learned about regularization-based methods that also implicitly prune some weights during training, by using L0 or L1 norm regularization. Pruning differs from that because it is applied after training, as a model compression technique.
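To see what these calls actually do to a module, the sketch below prunes a small stand-alone layer and inspects it. The 8-by-4 `nn.Linear` layer is a toy example of my own; `weight_orig`, `weight_mask` and `prune.remove` come from `torch.nn.utils.prune`.

```python
import torch
import torch.nn as nn
import torch.nn.utils.prune as prune

torch.manual_seed(0)
layer = nn.Linear(8, 4)  # hypothetical toy layer: its weight has 32 entries

prune.l1_unstructured(layer, name="weight", amount=0.25)

# Pruning re-parameterizes the module: the original values are kept in the
# parameter `weight_orig`, and a binary mask is stored as the buffer `weight_mask`.
param_names = [n for n, _ in layer.named_parameters()]
buffer_names = [n for n, _ in layer.named_buffers()]
print("parameters:", param_names)
print("buffers:", buffer_names)

# `layer.weight` is now computed as weight_orig * weight_mask.
sparsity = (layer.weight == 0).float().mean().item()
print(f"sparsity: {sparsity:.2f}")

# Make the pruning permanent: drop the mask and keep only the zeroed weight.
prune.remove(layer, "weight")
sparsity_after = (layer.weight == 0).float().mean().item()
names_after = [n for n, _ in layer.named_parameters()]
print("parameters after remove:", names_after)
```

Keep in mind that the pruned tensor is still stored densely: the zeros only translate into memory or speed gains once the model is exported to a sparse format or run on a runtime that exploits sparsity.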

Why does pruning work? The Lottery Ticket Hypothesis

I would like to conclude this section with a quick mention of the Lottery Ticket Hypothesis, which is both an application of pruning and an interesting explanation of how removing weights can often improve a model. I recommend reading the associated paper ([7]) for more details.

The authors use the following procedure:

- Train the full model to convergence

- Prune the smallest-magnitude weights (say 10%)

- Reset the remaining weights to their original initialization values

- Retrain this pruned network

- Repeat the process multiple times

After doing this 30 times, you end up with only 0.9^30 ≈ 4% of the original parameters. And surprisingly, this network can do as well as the original one.
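The arithmetic behind that 4% figure is easy to check. In this small sketch, the 10% pruning rate and the 30 rounds are the values used above, while the 1% target is an extra example of mine.

```python
import math

prune_rate = 0.10   # fraction of the surviving weights removed at each round
rounds = 30

# Each round keeps 90% of what is left, so the survivors shrink geometrically.
remaining = (1 - prune_rate) ** rounds
print(f"remaining after {rounds} rounds: {remaining:.1%}")   # 4.2%

# How many rounds would it take to go below 1% of the original weights?
target = 0.01
rounds_needed = math.ceil(math.log(target) / math.log(1 - prune_rate))
print(f"rounds needed to go below {target:.0%}: {rounds_needed}")   # 44
```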

This suggests that there is significant parameter redundancy. In other words, there exists a sub-network (“a lottery ticket”) that actually does most of the work!

Pruning is one way to unveil this sub-network.
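The train, prune, reset loop described above can be sketched in a few lines of PyTorch. This is only a toy illustration under my own assumptions (a tiny MLP, random regression data, 5 rounds, pruning 10% of the surviving weights in each layer separately), not the exact setup of the paper.

```python
import copy
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy setup: a tiny MLP on random regression data.
x, y = torch.randn(64, 8), torch.randn(64, 1)
model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 1))
init_state = copy.deepcopy(model.state_dict())           # saved initialization
weights = {n: p for n, p in model.named_parameters() if n.endswith("weight")}
masks = {n: torch.ones_like(p) for n, p in weights.items()}

def apply_masks():
    """Force every pruned weight back to zero."""
    with torch.no_grad():
        for n, p in weights.items():
            p.mul_(masks[n])

def train(steps=100):
    opt = torch.optim.SGD(model.parameters(), lr=0.05)
    for _ in range(steps):
        opt.zero_grad()
        loss = nn.functional.mse_loss(model(x), y)
        loss.backward()
        opt.step()
        apply_masks()                                     # pruned weights stay at zero
    return loss.item()

for round_idx in range(5):
    train()                                               # train the current network
    for n, p in weights.items():                          # prune 10% of the survivors
        alive = p.detach().abs()[masks[n].bool()]
        n_prune = int(0.10 * alive.numel())
        if n_prune > 0:
            threshold = alive.kthvalue(n_prune).values
            masks[n] = masks[n] * (p.detach().abs() > threshold).float()
    model.load_state_dict(init_state)                     # reset survivors to their init
    apply_masks()

final_loss = train()                                      # retrain the final sparse network
kept = sum(int(m.sum().item()) for m in masks.values())
total = sum(m.numel() for m in masks.values())
print(f"kept {kept}/{total} weights, final loss {final_loss:.4f}")
```

The important detail is the reset step: the surviving weights go back to their original initialization rather than keeping their trained values, and the mask is reapplied after every optimizer step.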

I recommend this excellent video that covers the topic!

Quantization

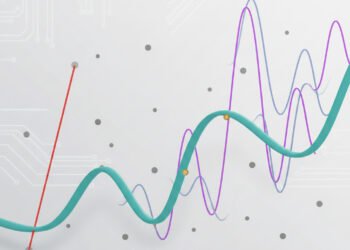

While pruning focuses on removing parameters entirely, quantization takes a different approach: reducing the precision of each parameter.

Remember that every number in a computer is stored as a sequence of bits. A float32 value uses 32 bits (see the example picture), while an 8-bit integer (int8) uses just 8 bits.

Most deep learning models are trained using 32-bit floating-point numbers (FP32). Quantization converts these high-precision values to lower-precision formats like 16-bit floating-point (FP16), 8-bit integers (INT8), or even 4-bit representations.

The savings here are obvious: INT8 requires 75% less memory than FP32. But how do we actually perform this conversion without destroying our model’s performance?
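As a quick back-of-the-envelope check of these savings, the sketch below computes the storage needed for the weights alone at different precisions. The 7-billion-parameter model is a hypothetical example, and the figures ignore activations, optimizer state and any quantization metadata such as scales.

```python
BITS_PER_PARAM = {"FP32": 32, "FP16": 16, "INT8": 8, "INT4": 4}

def model_size_gb(n_params, bits):
    """Approximate storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits / 8 / 1e9

n_params = 7_000_000_000  # hypothetical 7B-parameter model
sizes = {fmt: model_size_gb(n_params, bits) for fmt, bits in BITS_PER_PARAM.items()}

for fmt, size in sizes.items():
    saving = 1 - size / sizes["FP32"]
    print(f"{fmt}: {size:5.1f} GB ({saving:.0%} smaller than FP32)")
```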

The math behind quantization

To convert from floating-point to integer representation, we need to map the continuous range of values to a discrete set of integers. For INT8 quantization, we’re mapping to 256 possible values (from -128 to 127).

Suppose our weights are normalized between -1.0 and 1.0 (common in deep learning):

$$ \text{scale} = \frac{\text{float\_max} - \text{float\_min}}{\text{int8\_max} - \text{int8\_min}} = \frac{1.0 - (-1.0)}{127 - (-128)} = \frac{2.0}{255} $$

Then, the quantized value is given by

$$ \text{quantized\_value} = \text{round}\left(\frac{\text{original\_value}}{\text{scale}}\right) + \text{zero\_point} $$

Here, zero_point=0 because we want 0 to be mapped to 0. Rounding to the nearest integer, and clipping anything that falls outside the int8 range, gives integers between -128 and 127.

And, you guessed it: to get integers back to float, we can use the inverse operation:

$$ \text{float\_value} = (\text{integer\_value} - \text{zero\_point}) \times \text{scale} $$

Note: in practice, the scaling factor is determined based on the range of the values we quantize.
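Here is the same arithmetic as a tiny worked example in plain Python, assuming the [-1.0, 1.0] range and zero_point=0 used above. The function names `compute_scale`, `quantize` and `dequantize` are mine, chosen for illustration.

```python
INT8_MIN, INT8_MAX = -128, 127

def compute_scale(float_min, float_max):
    """Width of one integer step, in floating-point units."""
    return (float_max - float_min) / (INT8_MAX - INT8_MIN)

def quantize(x, scale, zero_point=0):
    q = round(x / scale) + zero_point
    return max(INT8_MIN, min(INT8_MAX, q))   # clip to the int8 range

def dequantize(q, scale, zero_point=0):
    return (q - zero_point) * scale

scale = compute_scale(-1.0, 1.0)             # 2 / 255
for x in [-1.0, -0.3, 0.0, 0.5, 1.0]:
    q = quantize(x, scale)
    x_hat = dequantize(q, scale)
    print(f"{x:+.3f} -> {q:4d} -> {x_hat:+.5f} (error {abs(x - x_hat):.5f})")
```

For values that are not clipped, the round trip loses at most half a step, that is scale / 2 ≈ 0.004 here. That bound is the “quantization noise” the model has to tolerate.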

How to apply quantization?

Quantization can be applied at different stages and with different strategies. Here are a few techniques worth knowing about: (below, the word “activation” refers to the output values of each layer)

- Post-training quantization (PTQ):

  - Static quantization: quantize both weights and activations offline (after training and before inference)

  - Dynamic quantization: quantize weights offline, but activations on-the-fly during inference. This differs from static quantization because the scaling factor for the activations is determined from the values observed during inference.

- Quantization-aware training (QAT): simulate quantization during training by rounding values, while calculations are still done with floating-point numbers. This makes the model learn weights that are more robust to the quantization that is applied after training. Under the hood, the idea is to add “fake” operations:

  x -> dequantize(quantize(x)): this new value is close to x, but it exposes the model to the 8-bit rounding and clipping noise during training, which helps it learn to tolerate that noise.
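To illustrate the “fake” operation, here is a hand-written sketch of x -> dequantize(quantize(x)) in PyTorch. It is a simplified stand-in of my own, not the modules PyTorch inserts during QAT: the scale is fixed by hand, and the gradient is passed through unchanged (a straight-through estimator), even for values that would be clipped.

```python
import torch

def fake_quantize(x, scale, zero_point=0, qmin=-128, qmax=127):
    """Simulate int8 quantization in floating point: dequantize(quantize(x))."""
    q = torch.clamp(torch.round(x / scale) + zero_point, qmin, qmax)
    x_q = (q - zero_point) * scale
    # Straight-through estimator: the forward pass returns x_q, while the
    # backward pass treats the operation as the identity (round() alone would
    # give a zero gradient almost everywhere).
    return x + (x_q - x).detach()

scale = 2.0 / 255  # assumes values roughly in [-1, 1], as in the example above
x = torch.tensor([-0.73, 0.0, 0.2501, 0.9], requires_grad=True)
y = fake_quantize(x, scale)
y.sum().backward()

print(y)        # values snapped to the int8 grid, but still float32
print(x.grad)   # gradients flow through as if no rounding had happened
```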

import torch
import torch.quantization as tq

# The three options below are alternatives: each one starts from a float model.

# 1. Post-training static quantization (weights + activations offline)
model.eval()
model.qconfig = tq.get_default_qconfig('fbgemm')  # assign a static quantization config
tq.prepare(model, inplace=True)  # insert observers that record activation ranges

# we need to use a calibration dataset to determine the ranges of values
with torch.no_grad():
    for data, _ in calibration_data:
        model(data)

tq.convert(model, inplace=True)  # convert to a fully int8 model

# 2. Post-training dynamic quantization (weights offline, activations on-the-fly)
dynamic_model = tq.quantize_dynamic(
    model,
    {torch.nn.Linear, torch.nn.LSTM},  # layers to quantize
    dtype=torch.qint8,
)

# 3. Quantization-Aware Training (QAT)
model.train()
model.qconfig = tq.get_default_qat_qconfig('fbgemm')  # set up QAT config
tq.prepare_qat(model, inplace=True)  # insert fake-quant modules

# [here, train or fine-tune the model as usual]

qat_model = tq.convert(model.eval(), inplace=False)  # convert to real int8 after QAT

Quantization is very flexible! You can apply different precision levels to different parts of the model. For instance, you can quantize most linear layers to 8-bit for maximum speed and memory savings, while leaving critical components (e.g. attention heads, or batch-norm layers) at 16-bit or full-precision.

Low-Rank Factorization

Now let’s talk about low-rank factorization, a method that has been popularized with the rise of LLMs.

The key observation: many weight matrices in neural networks have effective ranks much lower than their dimensions suggest. In plain English, that means there is a lot of redundancy in the parameters.

Note: if you have ever used PCA for dimensionality reduction, you have already encountered a form of low-rank approximation. PCA decomposes large matrices into products of smaller, lower-rank factors that retain as much information as possible.

The linear algebra behind low-rank factorization

Take a weight matrix W. Every real matrix can be represented using a Singular Value Decomposition (SVD):

$$ W = U \Sigma V^T $$

where Σ is a diagonal matrix with singular values in non-increasing order. The number of strictly positive singular values is the rank r of the matrix W.

To approximate W with a matrix of rank k < r, we can select the k greatest elements of Σ, along with the corresponding first k columns of U and first k columns of V (that is, the first k rows of V^T):

$$ \begin{aligned} W_k &= U_k \, \Sigma_k \, V_k^T \\[6pt] &= \underbrace{U_k \, \Sigma_k^{1/2}}_{A \in \mathbb{R}^{m \times k}} \; \underbrace{\Sigma_k^{1/2} \, V_k^T}_{B \in \mathbb{R}^{k \times n}}. \end{aligned} $$

See how the new matrix can be decomposed as the product of A and B, with the total number of parameters now being m * k + k * n = k*(m+n) instead of m*n! This is a huge improvement, especially when k is much smaller than m and n.
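To get a feel for the numbers, here is a small parameter count in plain Python. The 4096 x 4096 layer size and the values of k are hypothetical choices of mine.

```python
def dense_params(m, n):
    """Parameters in the full m x n weight matrix."""
    return m * n

def low_rank_params(m, n, k):
    """Parameters in A (m x k) plus B (k x n)."""
    return k * (m + n)

m, n = 4096, 4096  # hypothetical layer size
for k in (16, 64, 256, 2048):
    ratio = dense_params(m, n) / low_rank_params(m, n, k)
    print(f"k={k:5d}: {low_rank_params(m, n, k):>10,d} params, {ratio:6.1f}x fewer than dense")

# The factorization only saves parameters when k < m*n / (m + n).
break_even = m * n / (m + n)
print("break-even rank:", break_even)
```

Past the break-even rank, the two factors together hold at least as many parameters as the original matrix, so the factorization no longer compresses anything.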

In practice, it’s equivalent to replacing a linear layer x → Wx with 2 consecutive ones: x → A(Bx).
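This equivalence can be checked numerically. The sketch below uses the exact SVD (`torch.linalg.svd`) on a small made-up matrix that is built to have rank k, so truncating to k components loses nothing; the sizes are arbitrary choices of mine.

```python
import torch

torch.manual_seed(0)
m, n, k = 32, 24, 4

# Hypothetical weight matrix that is exactly rank k, so truncation loses nothing.
W = torch.randn(m, k) @ torch.randn(k, n)

U, S, Vh = torch.linalg.svd(W, full_matrices=False)   # W = U @ diag(S) @ Vh
A = U[:, :k] * S[:k].sqrt()                            # (m, k): scale the columns of U_k
B = S[:k].sqrt().unsqueeze(1) * Vh[:k, :]              # (k, n): scale the rows of V_k^T

x = torch.randn(n)
y_dense = W @ x                                        # one (m x n) mat-vec product
y_factored = A @ (B @ x)                               # two small mat-vec products

max_diff = (y_dense - y_factored).abs().max().item()
print(f"max difference between Wx and A(Bx): {max_diff:.2e}")
```

On a real trained layer the singular values beyond k are small but not zero, so the two outputs differ by an approximation error that shrinks as k grows.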

In PyTorch

We can either apply low-rank factorization before training (parameterizing each linear layer as two smaller matrices, which is not really a compression method but a design choice) or after training (applying a truncated SVD on weight matrices). The second approach is by far the most common one and is implemented below.

import torch

# 1. Extract weight and choose rank
W = model.layer.weight.data  # (m, n) = (out_features, in_features)
k = 64  # desired rank

# 2. Approximate low-rank SVD
U, S, V = torch.svd_lowrank(W, q=k)  # U: (m, k), S: (k,), V: (n, k)

# 3. Form factors A and B
A = U * S.sqrt()  # [m, k]
B = V.t() * S.sqrt().unsqueeze(1)  # [k, n]

# 4. Replace with two linear layers and insert the matrices A and B
# (note: with bias=False, the bias of the original layer, if any, is dropped)
orig = model.layer
model.layer = torch.nn.Sequential(
    torch.nn.Linear(orig.in_features, k, bias=False),
    torch.nn.Linear(k, orig.out_features, bias=False),
)
model.layer[0].weight.data.copy_(B)
model.layer[1].weight.data.copy_(A)

LoRA: an application of low-rank approximation

I think it’s important to mention LoRA: you have probably heard of LoRA (Low-Rank Adaptation) if you have been following LLM fine-tuning developments. Though not strictly a compression technique, LoRA has become extremely popular for adapting large language models efficiently.

The idea is simple: during fine-tuning, rather than modifying the original model weights W, LoRA freezes them and learns trainable low-rank updates:

$$ W' = W + \Delta W = W + AB $$

where A and B are low-rank matrices. This allows for task-specific adaptation with just a fraction of the parameters.

Even better: QLoRA takes this further by combining quantization with low-rank adaptation!

Again, this is a very flexible technique and can be applied at various stages. Usually, LoRA is applied only on specific layers (for example, Attention layers’ weights).
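For intuition, here is a minimal sketch of what a LoRA-style layer could look like in PyTorch, following the W' = W + AB notation above. The class name `LoRALinear`, the rank, the initialization and the alpha / rank scaling are illustrative choices of mine (the scaling mirrors the one described in the LoRA paper); this is not the implementation of any particular library.

```python
import torch
import torch.nn as nn

class LoRALinear(nn.Module):
    """Wraps a frozen nn.Linear and adds a trainable low-rank update A @ B."""

    def __init__(self, base: nn.Linear, rank: int = 4, alpha: float = 1.0):
        super().__init__()
        self.base = base
        for p in self.base.parameters():
            p.requires_grad = False                       # freeze W (and the bias)
        # Delta W = A @ B with A: (out, rank) and B: (rank, in).
        # A starts at zero so that W' = W before the first fine-tuning step.
        self.A = nn.Parameter(torch.zeros(base.out_features, rank))
        self.B = nn.Parameter(torch.randn(rank, base.in_features) * 0.01)
        self.scaling = alpha / rank

    def forward(self, x):
        # Compute x (A B)^T as two small products, without ever forming A @ B.
        delta = (x @ self.B.t()) @ self.A.t()
        return self.base(x) + self.scaling * delta

torch.manual_seed(0)
base = nn.Linear(64, 32)
layer = LoRALinear(base, rank=4)

trainable = sum(p.numel() for p in layer.parameters() if p.requires_grad)
total = sum(p.numel() for p in layer.parameters())
print(f"trainable parameters: {trainable} / {total}")

x = torch.randn(2, 64)
same_at_init = torch.allclose(layer(x), base(x))
print("same output as the frozen layer at initialization:", same_at_init)
```

Only A and B receive gradients, so the optimizer state and the saved fine-tuning checkpoint scale with rank * (in + out) rather than with in * out.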

Knowledge Distillation

Knowledge distillation takes a fundamentally different approach from what we have seen so far. Instead of modifying an existing model’s parameters, it transfers the “knowledge” from a large, complex model (the “teacher”) to a smaller, more efficient model (the “student”). The goal is to train the student model to mimic the behavior and replicate the performance of the teacher, which is often an easier task than solving the original problem from scratch.

The distillation loss

Let’s explain some concepts in the case of a classification problem:

- The teacher model is usually a large, complex model that achieves high performance on the task at hand

- The student model is a second, smaller model with a different architecture, but tailored to the same task

- Soft targets: these are the teacher model’s predictions (probabilities, and not labels!). They will be used by the student model to mimic the teacher’s behavior. Note that we use raw predictions and not labels because they also contain information about the confidence of the predictions

- Temperature: in addition to the teacher’s prediction, we also use a coefficient T (called temperature) in the softmax function to extract more information from the soft targets. Increasing T softens the distribution and helps the student model give more importance to the probabilities the teacher assigns to incorrect classes.
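The effect of the temperature is easy to see on a small example. The sketch below applies a softmax with temperature to some made-up teacher logits, in plain Python; `softmax_with_temperature` is a helper name of my own.

```python
import math

def softmax_with_temperature(logits, T=1.0):
    """Softmax of logits / T, computed in a numerically stable way."""
    scaled = [z / T for z in logits]
    m = max(scaled)                        # subtract the max before exponentiating
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

teacher_logits = [6.0, 2.0, 1.0]           # hypothetical logits for 3 classes
results = {}
for T in (1.0, 2.0, 5.0):
    results[T] = softmax_with_temperature(teacher_logits, T)
    print(f"T={T}: " + ", ".join(f"{p:.3f}" for p in results[T]))
```

As T grows, the probability of the top class shrinks and the two “wrong” classes become visible, along with the fact that the teacher finds the second class more plausible than the third. This is the extra information the student can learn from.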

In practice, it’s pretty straightforward to train the student model. We combine the usual loss (standard cross-entropy loss based on hard labels) with the “distillation” loss (based on the teacher’s soft targets):

$$ L_{\text{total}} = \alpha L_{\text{hard}} + (1 - \alpha) L_{\text{distill}} $$

The distillation loss is nothing but the KL divergence between the teacher and student distributions (you can see it as a measure of the distance between the 2 distributions).

$$ L_{\text{distill}} = D_{KL}(q_{\text{teacher}} \,\|\, q_{\text{student}}) = \sum_i q_{\text{teacher}, i} \log \left( \frac{q_{\text{teacher}, i}}{q_{\text{student}, i}} \right) $$
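As a small worked example of this formula, here is the KL divergence computed by hand in plain Python. The three distributions are made-up values, and `kl_divergence` is a helper of my own.

```python
import math

def kl_divergence(p, q):
    """D_KL(p || q) for two discrete distributions given as lists of probabilities."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

teacher = [0.70, 0.20, 0.10]         # hypothetical soft targets
good_student = [0.65, 0.25, 0.10]    # close to the teacher
bad_student = [0.10, 0.20, 0.70]     # confident about the wrong class

kl_same = kl_divergence(teacher, teacher)
kl_good = kl_divergence(teacher, good_student)
kl_bad = kl_divergence(teacher, bad_student)

print(f"teacher vs itself:       {kl_same:.4f}")
print(f"teacher vs good student: {kl_good:.4f}")
print(f"teacher vs bad student:  {kl_bad:.4f}")
```

The divergence is zero when the student reproduces the teacher’s distribution exactly and grows as the two distributions move apart, which is what makes it usable as a training loss.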

As for the other methods, it is possible and encouraged to adapt this framework depending on the use case: for example, one can compare logits and activations from intermediate layers in the network between the student and teacher model, instead of only comparing the final outputs.

Knowledge distillation in practice

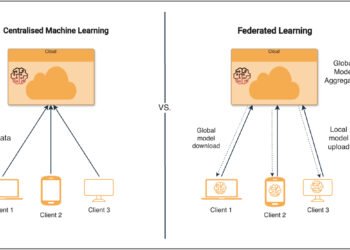

Similar to the previous techniques, there are two options:

- Offline distillation: the pre-trained teacher model is fixed, and a separate student model is trained to mimic it. Both models are completely separate, and the teacher’s weights remain frozen during the distillation process.

- Online distillation: both models are trained simultaneously, with knowledge transfer happening during the joint training process.

And below, an easy way to apply offline distillation (the last code block of this article 🙂):

import torch
import torch.nn.functional as F

def distillation_loss_fn(student_logits, teacher_logits, labels, temperature=2.0, alpha=0.5):
    # Standard Cross-Entropy loss with hard labels
    student_loss = F.cross_entropy(student_logits, labels)

    # Distillation loss with soft targets (KL Divergence)
    soft_teacher_probs = F.softmax(teacher_logits / temperature, dim=-1)
    soft_student_log_probs = F.log_softmax(student_logits / temperature, dim=-1)

    # kl_div expects log probabilities as input for the first argument!
    distill_loss = F.kl_div(
        soft_student_log_probs,
        soft_teacher_probs.detach(),  # don't calculate gradients for teacher
        reduction='batchmean'
    ) * (temperature ** 2)  # optional, a scaling factor

    # Combine losses according to formula
    total_loss = alpha * student_loss + (1 - alpha) * distill_loss
    return total_loss

teacher_model.eval()
student_model.train()

# The teacher only provides targets, so no gradients are needed for it
with torch.no_grad():
    teacher_logits = teacher_model(inputs)

student_logits = student_model(inputs)
loss = distillation_loss_fn(student_logits, teacher_logits, labels, temperature=T, alpha=alpha)

optimizer.zero_grad()  # clear gradients left over from the previous step
loss.backward()
optimizer.step()

Conclusion

Thanks for reading this article! In the era of LLMs, with billions or even trillions of parameters, model compression has become a fundamental concept, essential in almost every scenario to make models more efficient and easily deployable.

But as we have seen, model compression isn’t just about reducing the model size: it’s about making thoughtful design decisions. Whether choosing between online and offline methods, compressing the entire network, or targeting specific layers or channels, each choice significantly impacts performance and usability. Most models now combine several of these techniques (check out this model, for instance).

Beyond introducing you to the main methods, I hope this article also inspires you to experiment and develop your own creative solutions!

Don’t forget to check out the GitHub repository, where you’ll find all the code snippets and a side-by-side comparison of the four compression methods discussed in this article.

References

- [1] Hu, E., et al. (2021). Low-rank Adaptation of Large Language Models. arXiv preprint arXiv:2106.09685.

- [2] Lightning AI. Accelerating Large Language Models with Mixed Precision Techniques. Lightning AI Blog.

- [3] TensorFlow Blog. Pruning API in TensorFlow Model Optimization Toolkit. TensorFlow Blog, May 2019.

- [4] Towards AI. A Gentle Introduction to Knowledge Distillation. Towards AI, Aug 2022.

- [5] Ju, A. ML Algorithm: Singular Value Decomposition (SVD). LinkedIn Pulse.

- [6] Algorithmic Simplicity. THIS is why large language models can understand the world. YouTube, Apr 2023.

- [7] Frankle, J., & Carbin, M. (2019). The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks. arXiv preprint arXiv:1803.03635.