ENSEMBLE LEARNING

Everyone makes mistakes, even the best decision trees in machine learning. Instead of ignoring them, the AdaBoost (Adaptive Boosting) algorithm does something different: it learns (or adapts) from these mistakes to get better.

Unlike Random Forest, which builds many trees at once, AdaBoost starts with a single, simple tree and identifies the instances it misclassifies. It then builds new trees to fix those errors, learning from its mistakes and getting better with each step.

Here, we’ll illustrate exactly how AdaBoost makes its predictions, building strength by combining targeted weak learners, just like a workout routine that turns focused exercises into full-body power.

AdaBoost is an ensemble machine learning model that creates a sequence of weighted decision trees, typically using shallow trees (often just single-level “stumps”). Each tree is trained on the entire dataset, but with adaptive sample weights that give more importance to previously misclassified examples.

For classification tasks, AdaBoost combines the trees through a weighted voting system, where better-performing trees get more influence in the final decision.

The model’s strength comes from its adaptive learning process: while each simple tree might be a “weak learner” that performs only slightly better than random guessing, the weighted combination of trees creates a “strong learner” that progressively focuses on and corrects mistakes.

Throughout this article, we’ll use the classic golf dataset as an example for classification.

import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split

# Create and prepare dataset
dataset_dict = {
    'Outlook': ['sunny', 'sunny', 'overcast', 'rainy', 'rainy', 'rainy', 'overcast',
                'sunny', 'sunny', 'rainy', 'sunny', 'overcast', 'overcast', 'rainy',
                'sunny', 'overcast', 'rainy', 'sunny', 'sunny', 'rainy', 'overcast',
                'rainy', 'sunny', 'overcast', 'sunny', 'overcast', 'rainy', 'overcast'],
    'Temperature': [85.0, 80.0, 83.0, 70.0, 68.0, 65.0, 64.0, 72.0, 69.0, 75.0, 75.0,
                    72.0, 81.0, 71.0, 81.0, 74.0, 76.0, 78.0, 82.0, 67.0, 85.0, 73.0,
                    88.0, 77.0, 79.0, 80.0, 66.0, 84.0],
    'Humidity': [85.0, 90.0, 78.0, 96.0, 80.0, 70.0, 65.0, 95.0, 70.0, 80.0, 70.0,
                 90.0, 75.0, 80.0, 88.0, 92.0, 85.0, 75.0, 92.0, 90.0, 85.0, 88.0,
                 65.0, 70.0, 60.0, 95.0, 70.0, 78.0],
    'Wind': [False, True, False, False, False, True, True, False, False, False, True,
             True, False, True, True, False, False, True, False, True, True, False,
             True, False, False, True, False, False],
    'Play': ['No', 'No', 'Yes', 'Yes', 'Yes', 'No', 'Yes', 'No', 'Yes', 'Yes', 'Yes',
             'Yes', 'Yes', 'No', 'No', 'Yes', 'Yes', 'No', 'No', 'No', 'Yes', 'Yes',
             'Yes', 'Yes', 'Yes', 'Yes', 'No', 'Yes']
}

# Prepare data: one-hot encode Outlook, convert Wind and Play to 0/1
df = pd.DataFrame(dataset_dict)
df = pd.get_dummies(df, columns=['Outlook'], prefix='', prefix_sep='', dtype=int)
df['Wind'] = df['Wind'].astype(int)
df['Play'] = (df['Play'] == 'Yes').astype(int)

# Rearrange columns
column_order = ['sunny', 'overcast', 'rainy', 'Temperature', 'Humidity', 'Wind', 'Play']
df = df[column_order]

# Prepare features and target
X, y = df.drop('Play', axis=1), df['Play']
X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.5, shuffle=False)

Main Mechanism

Here’s how AdaBoost works:

- Initialize Weights: Assign equal weight to each training example.

- Iterative Learning: In each step, a simple decision tree is trained and its performance is checked. Misclassified examples get more weight, making them a priority for the next tree. Correctly classified examples keep their weight, and then all weights are rescaled to add up to 1.

- Build Weak Learners: Each new, simple tree targets the mistakes of the previous ones, creating a sequence of specialized weak learners.

- Final Prediction: Combine all trees through weighted voting, where each tree’s vote is based on its importance value, giving more influence to more accurate trees.

Here, we’ll follow the SAMME (Stagewise Additive Modeling using a Multi-class Exponential loss function) algorithm, the standard approach in scikit-learn that handles both binary and multi-class classification.

1.1. Decide the weak learner to be used. A one-level decision tree (or “stump”) is the default choice.

1.2. Decide how many weak learners (in this case, the number of trees) you want to build (the default is 50 trees).

1.3. Start by giving each training example equal weight:

· Each sample gets weight = 1/N (N is the total number of samples)

· All weights together sum to 1
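As a small illustration of step 1.3, the sketch below builds the starting weights in plain Python. The helper name `initial_weights` is made up for this example and is not part of scikit-learn; the value 14 assumes the training half of the 28-row golf dataset used in this article.

```python
def initial_weights(n_samples):
    """Return equal sample weights (1/N each) for N training examples."""
    if n_samples <= 0:
        raise ValueError("n_samples must be positive")
    return [1.0 / n_samples] * n_samples


# Assumption: 14 training rows (half of the 28-row golf dataset).
weights = initial_weights(14)
print(f"weight per sample: {weights[0]:.4f}")
print(f"sum of weights:    {sum(weights):.4f}")
```

Every sample starts with the same influence; only the later rounds make the weights unequal.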

For the First Tree

2.1. Build a decision stump while considering sample weights

a. Calculate the initial weighted Gini impurity for the root node

b. For each feature:

· Sort data by feature values (exactly like in the Decision Tree classifier)

· For each possible split point:

·· Split samples into left and right groups

·· Calculate weighted Gini impurity for both groups

·· Calculate weighted Gini impurity reduction for this split

c. Pick the split that gives the largest Gini impurity reduction

d. Create a simple one-split tree using this decision
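The split search in step 2.1 can be sketched for a single numeric feature as follows. This is a simplified, assumption-laden illustration rather than scikit-learn's implementation: the function names `weighted_gini` and `best_split` are hypothetical, thresholds are taken as midpoints between neighbouring values, and the six humidity readings are toy values, not the article's training split.

```python
def weighted_gini(labels, weights):
    """Gini impurity where each sample counts in proportion to its weight."""
    total = sum(weights)
    if total == 0:
        return 0.0
    impurity = 1.0
    for cls in set(labels):
        share = sum(w for y, w in zip(labels, weights) if y == cls) / total
        impurity -= share * share
    return impurity


def best_split(values, labels, weights):
    """Return (threshold, impurity_reduction) of the best split on one feature."""
    total = sum(weights)
    root_impurity = weighted_gini(labels, weights)
    order = sorted(range(len(values)), key=lambda i: values[i])

    def side(indices):
        # Total weight and weighted Gini impurity of one child node.
        w = [weights[i] for i in indices]
        return sum(w), weighted_gini([labels[i] for i in indices], w)

    best = (None, 0.0)
    for a, b in zip(order, order[1:]):
        if values[a] == values[b]:
            continue  # no threshold fits between identical values
        threshold = (values[a] + values[b]) / 2
        w_left, g_left = side([i for i in order if values[i] <= threshold])
        w_right, g_right = side([i for i in order if values[i] > threshold])
        children = (w_left * g_left + w_right * g_right) / total
        reduction = root_impurity - children
        if reduction > best[1]:
            best = (threshold, reduction)
    return best


# Toy data (hypothetical): low humidity -> play (1), high humidity -> don't (0).
humidity = [60.0, 65.0, 70.0, 90.0, 95.0, 96.0]
play = [1, 1, 1, 0, 0, 0]
weights = [1 / 6] * 6
threshold, reduction = best_split(humidity, play, weights)
print(threshold, round(reduction, 3))
```

A full stump would repeat this search over every feature and keep the feature and threshold with the largest reduction. Because the impurity is computed from weights rather than counts, changing the weights can change which split wins.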

2.2. Evaluate how good this tree is

a. Use the tree to predict the labels of the training set.

b. Add up the weights of all misclassified samples to get the error rate

c. Calculate tree importance (α) using:

α = learning_rate × log((1-error)/error)
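To make step 2.2 concrete, here is a minimal sketch of the error rate and the importance formula above. The function names are hypothetical, and the clamping of the error away from 0 and 1 is an assumption added here only to avoid dividing by zero; it is not described in the article.

```python
import math


def weighted_error(y_true, y_pred, weights):
    """Share of the total sample weight that sits on misclassified samples."""
    wrong = sum(w for t, p, w in zip(y_true, y_pred, weights) if t != p)
    return wrong / sum(weights)


def tree_importance(error, learning_rate=1.0):
    """alpha = learning_rate * log((1 - error) / error), as in step 2.2c."""
    eps = 1e-10  # assumption: clamp so a perfect or useless tree does not crash
    error = min(max(error, eps), 1 - eps)
    return learning_rate * math.log((1 - error) / error)


# Toy example: five equally weighted samples, the last one misclassified.
y_true = [1, 1, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0]
weights = [0.2] * 5

error = weighted_error(y_true, y_pred, weights)
alpha = tree_importance(error)
print(f"error = {error:.2f}, alpha = {alpha:.3f}")
```

An error of 0.5 (random guessing on a binary problem) gives α = 0, so such a tree gets no say in the vote, while smaller errors give larger α.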

2.3. Update sample weights

a. Keep the original weights for correctly classified samples

b. Multiply the weights of misclassified samples by e^(α).

c. Divide each weight by the sum of all weights. This normalization ensures all weights still sum to 1 while maintaining their relative proportions.
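The three sub-steps of 2.3 can be sketched in a few lines. The function name `update_weights` is illustrative only, and the toy labels are the same hypothetical five-sample case with one mistake (error 0.2, so α = log 4 with a learning rate of 1).

```python
import math


def update_weights(weights, y_true, y_pred, alpha):
    """Boost the weights of misclassified samples by e^alpha, then normalize."""
    boosted = [
        w * math.exp(alpha) if truth != guess else w
        for w, truth, guess in zip(weights, y_true, y_pred)
    ]
    total = sum(boosted)
    return [w / total for w in boosted]


weights = [0.2] * 5
y_true = [1, 1, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0]           # only the last sample is wrong
alpha = math.log((1 - 0.2) / 0.2)  # importance of a tree with error 0.2

new_weights = update_weights(weights, y_true, y_pred, alpha)
print([round(w, 3) for w in new_weights])
```

In this toy case the single misclassified sample ends up with about half of the total weight (roughly 0.5 versus 0.125 for each of the others), which is why the next stump is pushed to get it right.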

For the Second Tree

2.1. Build a new stump, but now using the updated weights

a. Calculate the new weighted Gini impurity for the root node:

· It will be different because misclassified samples now have bigger weights

· Correctly classified samples now have smaller weights

b. For each feature:

· Same process as before, but the weights have changed

c. Pick the split with the best weighted Gini impurity reduction

· Often completely different from the first tree’s split

· Focuses on samples the first tree got wrong

d. Create the second stump

2.2. Evaluate this new tree

a. Calculate the error rate with the current weights

b. Calculate its importance (α) using the same formula as before

2.3. Update weights again using the same process: increase the weights of misclassified samples, then normalize.

For the Third Tree onwards

Repeat Steps 2.1–2.3 for all remaining trees.

Step 3: Final Ensemble

3.1. Keep all trees and their importance scores

from sklearn.ensemble import AdaBoostClassifier
from sklearn.tree import plot_tree
import matplotlib.pyplot as plt

# Train AdaBoost
np.random.seed(42)  # For reproducibility
clf = AdaBoostClassifier(algorithm='SAMME', n_estimators=50, random_state=42)
clf.fit(X_train, y_train)

# Create visualizations for trees 1, 2, and 50
trees_to_show = [0, 1, 49]
feature_names = X_train.columns.tolist()
class_names = ['No', 'Yes']

# Set up the plot
fig, axes = plt.subplots(1, 3, figsize=(14, 4), dpi=300)
fig.suptitle('Decision Stumps from AdaBoost', fontsize=16)

# Plot each tree
for idx, tree_idx in enumerate(trees_to_show):
    plot_tree(clf.estimators_[tree_idx],
              feature_names=feature_names,
              class_names=class_names,
              filled=True,
              rounded=True,
              ax=axes[idx],
              fontsize=12)  # Increased font size
    axes[idx].set_title(f'Tree {tree_idx + 1}', fontsize=12)

plt.tight_layout(rect=[0, 0.03, 1, 0.95])

Testing Step

For predicting:

a. Get each tree’s prediction

b. Multiply each by its importance score (α)

c. Add them all up

d. The class with the higher total weight will be the final prediction
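The voting steps above can be sketched as follows. The function name `weighted_vote` and the three α values are invented for illustration; they are not taken from the trained model in this article.

```python
def weighted_vote(tree_predictions, alphas):
    """Sum each tree's importance under the class it predicts; largest total wins."""
    totals = {}
    for label, alpha in zip(tree_predictions, alphas):
        totals[label] = totals.get(label, 0.0) + alpha
    winner = max(totals, key=totals.get)
    return winner, totals


# Hypothetical case: two weaker trees say 'No', one stronger tree says 'Yes'.
predictions = ['Yes', 'No', 'No']
alphas = [1.2, 0.5, 0.4]

winner, totals = weighted_vote(predictions, alphas)
print(winner, totals)
```

Here 'Yes' wins with a total of 1.2 against roughly 0.9, even though two of the three trees voted 'No'. That is the difference between weighted voting and a simple majority vote.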

Evaluation Step

After building all the trees, we can evaluate the model on the test set.

# Get predictions
y_pred = clf.predict(X_test)

# Create DataFrame with actual and predicted values
results_df = pd.DataFrame({
    'Actual': y_test,
    'Predicted': y_pred
})
print(results_df)  # Display results DataFrame

# Calculate and display accuracy
from sklearn.metrics import accuracy_score
accuracy = accuracy_score(y_test, y_pred)
print(f"\nModel Accuracy: {accuracy:.4f}")

Here are the key parameters for AdaBoost, particularly in scikit-learn:

estimator: This is the base model that AdaBoost uses to build its final solution. The three most common weak learners are:

a. Decision Tree with depth 1 (Decision Stump): This is the default and most popular choice. Because it has only one split, it is considered a very weak learner that is just a bit better than random guessing, which is exactly what the boosting process needs.

b. Logistic Regression: Logistic regression (especially with a high penalty) can also be used here even though it is not really a weak learner. It can be useful for data that has a linear relationship.

c. Decision Trees with small depth (e.g., depth 2 or 3): These are slightly more complex than decision stumps. They are still fairly simple, but can handle slightly more complex patterns than a decision stump.

n_estimators: The number of weak learners to combine, typically around 50–100. Using more than 100 rarely helps.

learning_rate: Controls how much each classifier affects the final result. Common starting values are 0.1, 0.5, or 1.0. A lower value (like 0.1) combined with a somewhat higher n_estimators usually works better.
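To see why learning_rate matters, the sketch below plugs three common values into the importance formula from step 2.2 for a hypothetical tree with a weighted error of 0.2. The helper name `alpha_and_multiplier` is made up for this example.

```python
import math


def alpha_and_multiplier(error, learning_rate):
    """Return a tree's importance and the factor applied to misclassified weights."""
    alpha = learning_rate * math.log((1 - error) / error)
    return alpha, math.exp(alpha)


for lr in (0.1, 0.5, 1.0):
    alpha, multiplier = alpha_and_multiplier(0.2, lr)
    print(f"learning_rate={lr}: alpha={alpha:.3f}, "
          f"misclassified weights x{multiplier:.2f}")
```

With a learning rate of 1.0 the misclassified weights are multiplied by about 4, with 0.5 by about 2, and with 0.1 by only about 1.15. Smaller values therefore shift attention toward the hard cases more gradually, which is why they tend to be paired with more trees.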

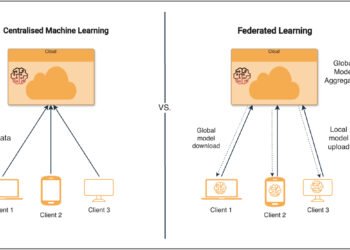

Key differences from Random Forest

As both Random Forest and AdaBoost work with multiple trees, it’s easy to confuse the parameters involved. The key difference is that Random Forest combines many trees independently (bagging) while AdaBoost builds trees one after another to fix mistakes (boosting). Here are some other details about their differences:

- No bootstrap parameter because AdaBoost uses all data but with changing weights

- No oob_score because AdaBoost doesn’t use bootstrap sampling

- learning_rate becomes crucial (not present in Random Forest)

- Tree depth is typically kept very shallow (usually just stumps), unlike Random Forest’s deeper trees

- The focus shifts from parallel independent trees to sequential dependent trees, making parameters like n_jobs less relevant

Pros:

- Adaptive Learning: AdaBoost gets better by giving more weight to the mistakes it made. Each new tree pays more attention to the hard cases the previous trees got wrong.

- Resists Overfitting: Even though it keeps adding more trees one by one, AdaBoost usually doesn’t get too focused on the training data. This is because it uses weighted voting, so no single tree can control the final answer too much.

- Built-in Feature Selection: AdaBoost naturally finds which features matter most. Each simple tree picks the most useful feature for that round, which means it automatically selects important features as it trains.

Cons:

- Sensitive to Noise: Because it gives more weight to mistakes, AdaBoost can have trouble with messy or wrong data. If some training examples have wrong labels, it might focus too much on these bad examples, making the whole model worse.

- Must Be Sequential: Unlike Random Forest, which can train many trees at once, AdaBoost must train one tree at a time because each new tree needs to know how the previous trees did. This makes it slower to train.

- Learning Rate Sensitivity: While it has fewer settings to tune than Random Forest, the learning rate strongly affects how well it works. If it’s too high, the model might fit the training data too closely. If it’s too low, it needs many more trees to work well.

AdaBoost is a key boosting algorithm that many newer methods learned from. Its main idea, getting better by focusing on mistakes, has helped shape many modern machine learning tools. While other methods try to be perfect from the start, AdaBoost shows that sometimes the best way to solve a problem is to learn from your mistakes and keep improving.

AdaBoost also works best in binary classification problems and when your data is clean. While Random Forest might be better for more general tasks (like predicting numbers) or messy data, AdaBoost can give really good results when used in the right way. The fact that people still use it after so many years shows just how well the core idea works!

import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.ensemble import AdaBoostClassifier
from sklearn.tree import DecisionTreeClassifier

# Create dataset
dataset_dict = {
    'Outlook': ['sunny', 'sunny', 'overcast', 'rainy', 'rainy', 'rainy', 'overcast',
                'sunny', 'sunny', 'rainy', 'sunny', 'overcast', 'overcast', 'rainy',
                'sunny', 'overcast', 'rainy', 'sunny', 'sunny', 'rainy', 'overcast',
                'rainy', 'sunny', 'overcast', 'sunny', 'overcast', 'rainy', 'overcast'],
    'Temperature': [85.0, 80.0, 83.0, 70.0, 68.0, 65.0, 64.0, 72.0, 69.0, 75.0, 75.0,
                    72.0, 81.0, 71.0, 81.0, 74.0, 76.0, 78.0, 82.0, 67.0, 85.0, 73.0,
                    88.0, 77.0, 79.0, 80.0, 66.0, 84.0],
    'Humidity': [85.0, 90.0, 78.0, 96.0, 80.0, 70.0, 65.0, 95.0, 70.0, 80.0, 70.0,
                 90.0, 75.0, 80.0, 88.0, 92.0, 85.0, 75.0, 92.0, 90.0, 85.0, 88.0,
                 65.0, 70.0, 60.0, 95.0, 70.0, 78.0],
    'Wind': [False, True, False, False, False, True, True, False, False, False, True,
             True, False, True, True, False, False, True, False, True, True, False,
             True, False, False, True, False, False],
    'Play': ['No', 'No', 'Yes', 'Yes', 'Yes', 'No', 'Yes', 'No', 'Yes', 'Yes', 'Yes',
             'Yes', 'Yes', 'No', 'No', 'Yes', 'Yes', 'No', 'No', 'No', 'Yes', 'Yes',
             'Yes', 'Yes', 'Yes', 'Yes', 'No', 'Yes']
}
df = pd.DataFrame(dataset_dict)

# Prepare data: one-hot encode Outlook, convert Wind and Play to 0/1
df = pd.get_dummies(df, columns=['Outlook'], prefix='', prefix_sep='', dtype=int)
df['Wind'] = df['Wind'].astype(int)
df['Play'] = (df['Play'] == 'Yes').astype(int)

# Split features and target
X, y = df.drop('Play', axis=1), df['Play']
X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.5, shuffle=False)

# Train AdaBoost
ada = AdaBoostClassifier(
    estimator=DecisionTreeClassifier(max_depth=1),  # Create base estimator (decision stump)
    n_estimators=50,     # Typically fewer trees than Random Forest
    learning_rate=1.0,   # Default learning rate
    algorithm='SAMME',   # The only currently available algorithm (will be removed in future scikit-learn updates)
    random_state=42
)
ada.fit(X_train, y_train)

# Predict and evaluate
y_pred = ada.predict(X_test)
print(f"Accuracy: {accuracy_score(y_test, y_pred)}")