are so easy to use that it's also easy to use them the wrong way, like holding a hammer by the head. The same is true for Pydantic, a high-performance data validation library for Python.

In Pydantic v2, the core validation engine is implemented in Rust, making it one of the fastest data validation solutions in the Python ecosystem. However, that performance advantage is only realized if you use Pydantic in a way that actually leverages this highly optimized core.

This article focuses on using Pydantic efficiently, especially when validating large volumes of data. We highlight four common gotchas that can lead to order-of-magnitude performance differences if left unchecked.

1) Prefer Annotated constraints over field validators

A core feature of Pydantic is that data validation is defined declaratively in a model class. When a model is instantiated, Pydantic parses and validates the input data according to the field types and validators defined on that class.

The naïve approach: field validators

We use a @field_validator to validate data, such as checking whether an id column is actually an integer and greater than zero. This style is readable and flexible but comes with a performance cost.

    from pydantic import BaseModel, EmailStr, field_validator

    # _email_re is a precompiled email regex, assumed to be defined elsewhere.
    class UserFieldValidators(BaseModel):
        id: int
        email: EmailStr
        tags: list[str]

        @field_validator("id")
        @classmethod
        def _validate_id(cls, v: int) -> int:
            if not isinstance(v, int):
                raise TypeError("id must be an integer")
            if v < 1:
                raise ValueError("id must be >= 1")
            return v

        @field_validator("email")
        @classmethod
        def _validate_email(cls, v: str) -> str:
            if not isinstance(v, str):
                v = str(v)
            if not _email_re.match(v):
                raise ValueError("invalid email format")
            return v

        @field_validator("tags")
        @classmethod
        def _validate_tags(cls, v: list[str]) -> list[str]:
            if not isinstance(v, list):
                raise TypeError("tags must be a list")
            if not (1 <= len(v) <= 10):
                raise ValueError("tags length must be between 1 and 10")
            for i, tag in enumerate(v):
                if not isinstance(tag, str):
                    raise TypeError(f"tag[{i}] must be a string")
                if tag == "":
                    raise ValueError(f"tag[{i}] must not be empty")
            # A validator must return the value, otherwise the field becomes None.
            return v

The reason is that field validators execute in Python, after core type coercion and constraint validation. This prevents them from being optimized or fused into the core validation pipeline.
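To make the ordering concrete, here is a minimal sketch (the `Item` model and the `seen_types` list are hypothetical names used only for illustration). It records the type the validator actually receives when the input is a numeric string, assuming Pydantic v2 in its default lax mode:

```python
from pydantic import BaseModel, field_validator

seen_types: list[str] = []  # records what the validator actually receives


class Item(BaseModel):
    id: int

    @field_validator("id")  # the default mode is "after"
    @classmethod
    def _check_id(cls, v: int) -> int:
        # By the time this runs, the core has already coerced the input.
        seen_types.append(type(v).__name__)
        if v < 1:
            raise ValueError("id must be >= 1")
        return v


item = Item(id="42")  # a numeric string is coerced to int in lax mode
print(item.id, seen_types)
```

Because the value arrives already coerced, an isinstance check inside an "after" validator repeats work the core has done.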

The optimized approach: Annotated

We can use Annotated from Python's typing library.

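    from typing import Annotated

    from pydantic import BaseModel, Field

    # RE_EMAIL_PATTERN is a regex string, assumed to be defined elsewhere.
    class UserAnnotated(BaseModel):
        id: Annotated[int, Field(ge=1)]
        email: Annotated[str, Field(pattern=RE_EMAIL_PATTERN)]
        tags: Annotated[list[str], Field(min_length=1, max_length=10)]

This version is shorter, clearer, and executes faster at scale.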
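As a rough illustration of how such a model behaves, the sketch below validates one good and one bad record. The value of `RE_EMAIL_PATTERN` is not shown in the article, so the deliberately simple pattern here is an assumption:

```python
from typing import Annotated

from pydantic import BaseModel, Field, ValidationError

# Hypothetical, deliberately simple pattern; the article's own value is not shown.
RE_EMAIL_PATTERN = r"^[^@\s]+@[^@\s]+\.[^@\s]+$"


class UserAnnotated(BaseModel):
    id: Annotated[int, Field(ge=1)]
    email: Annotated[str, Field(pattern=RE_EMAIL_PATTERN)]
    tags: Annotated[list[str], Field(min_length=1, max_length=10)]


ok = UserAnnotated(id=1, email="a@example.com", tags=["admin"])

try:
    UserAnnotated(id=0, email="not-an-email", tags=[])
except ValidationError as exc:
    # Each failing field is reported, without any user-written validator code.
    failed_fields = sorted(err["loc"][0] for err in exc.errors())
    print(failed_fields)
```

All three constraint violations are collected into a single ValidationError, so callers still get per-field error details.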

Why Annotated is faster

Annotated (PEP 593) is a standard Python feature from the typing library. The constraints placed inside Annotated are compiled into Pydantic's internal schema and executed inside pydantic-core (Rust).

This means that no user-defined Python validation calls are required during validation. No intermediate Python objects or custom control flow are introduced either.

By contrast, @field_validator functions always run in Python, introduce function call overhead, and often duplicate checks that could have been handled in core validation.

Important nuance

Annotated itself is not "Rust". The speedup comes from using constraints that pydantic-core understands and can execute, not from Annotated existing on its own.
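The nuance can be seen in a small sketch: both models below use Annotated, but only one of them stays inside the core. The model names and the `python_calls` counter are hypothetical, added purely for illustration:

```python
from typing import Annotated

from pydantic import AfterValidator, BaseModel, Field

python_calls = 0


def _must_be_positive(v: int) -> int:
    # Plain Python callable: the core has to call back into the interpreter.
    global python_calls
    python_calls += 1
    if v < 1:
        raise ValueError("must be >= 1")
    return v


class CoreConstraint(BaseModel):
    id: Annotated[int, Field(ge=1)]  # checked inside pydantic-core


class PythonCallback(BaseModel):
    id: Annotated[int, AfterValidator(_must_be_positive)]  # checked in Python


CoreConstraint(id=5)
PythonCallback(id=5)
print(python_calls)
```

Only the `AfterValidator` variant increments the counter, which suggests that wrapping a Python function in Annotated does not by itself move the check into Rust.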

Benchmark

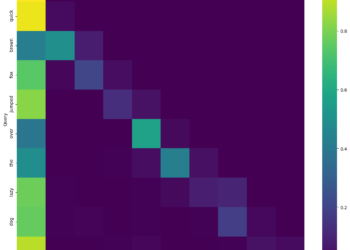

The difference between no validation and Annotated validation is negligible in these benchmarks, while Python validators are slower by an order of magnitude.

Benchmark (time in seconds)

    ┏━━━━━━━━━━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━┓
    ┃ Method         ┃ n=100     ┃ n=1k     ┃ n=10k     ┃ n=50k     ┃
    ┡━━━━━━━━━━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━┩
    │ FieldValidators│ 0.004     │ 0.020    │ 0.194     │ 0.971     │
    │ No Validation  │ 0.000     │ 0.001    │ 0.007     │ 0.032     │
    │ Annotated      │ 0.000     │ 0.001    │ 0.007     │ 0.036     │
    └────────────────┴───────────┴──────────┴───────────┴───────────┘

In absolute terms, at n=50k we go from nearly a second of validation time to 36 milliseconds: a speedup of roughly 27x.
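For readers who want to take a comparable measurement themselves, the following is a minimal timing sketch and not the benchmark harness used for the table above. The models, the single `id` field, and the row count are assumptions, so the absolute numbers and the ratio will differ from the article's:

```python
import timeit
from typing import Annotated

from pydantic import BaseModel, Field, field_validator


class WithValidator(BaseModel):
    id: int

    @field_validator("id")
    @classmethod
    def _check(cls, v: int) -> int:
        if v < 1:
            raise ValueError("id must be >= 1")
        return v


class WithConstraint(BaseModel):
    id: Annotated[int, Field(ge=1)]


rows = [{"id": i} for i in range(1, 10_001)]  # hypothetical test data


def bench(model: type[BaseModel]) -> float:
    # Best of 3 runs, each validating every row once.
    run = lambda: [model.model_validate(r) for r in rows]
    return min(timeit.repeat(run, number=1, repeat=3))


timings = {m.__name__: bench(m) for m in (WithValidator, WithConstraint)}
print(timings)
```

Taking the minimum over a few repeats reduces noise from other processes; for a fair comparison the same input rows are reused for both models.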

Verdict

Use Annotated whenever possible. You get better performance and clearer models. Custom validators are powerful, but you pay for that flexibility in runtime cost, so reserve @field_validator for logic that cannot be expressed as constraints.

2) Validate JSON with model_validate_json()

We have data in the form of a JSON string. What's the best way to validate this data?

The naïve approach

Just parse the JSON and validate the dictionary:

    py_dict = json.loads(j)
    UserAnnotated.model_validate(py_dict)

The optimized approach

Use Pydantic's built-in method:

    UserAnnotated.model_validate_json(j)

Why this is faster

- model_validate_json() parses JSON and validates it in a single pipeline

- It uses Pydantic's internal, faster JSON parser

- It avoids building large intermediate Python dictionaries and traversing those dictionaries a second time during validation

With json.loads() you pay twice: first when parsing JSON into Python objects, then for validating and coercing those objects.

model_validate_json() reduces memory allocations and redundant traversal.
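A short sketch of the two routes side by side, using a hypothetical `User` model and payload, shows that they produce the same model instance for ordinary input:

```python
import json
from typing import Annotated

from pydantic import BaseModel, Field


class User(BaseModel):
    id: Annotated[int, Field(ge=1)]
    tags: list[str]


j = '{"id": 7, "tags": ["a", "b"]}'  # hypothetical payload

two_step = User.model_validate(json.loads(j))  # parse in Python, then validate
one_step = User.model_validate_json(j)  # parse and validate in one call

print(one_step == two_step)
```

Since the results match here, switching to `model_validate_json()` is usually a one-line change at the call site.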

Benchmarked

The Pydantic version is almost twice as fast.

Benchmark (time in seconds)

    ┏━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━┓
    ┃ Method              ┃ n=100 ┃ n=1K  ┃ n=10K ┃ n=50K ┃ n=250K ┃
    ┡━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━┩
    │ Load json           │ 0.000 │ 0.002 │ 0.016 │ 0.074 │ 0.368  │
    │ model validate json │ 0.001 │ 0.001 │ 0.009 │ 0.042 │ 0.209  │
    └─────────────────────┴───────┴───────┴───────┴───────┴────────┘

In absolute terms the change saves us about 0.16 seconds when validating a quarter million objects.

Verdict

If your input is JSON, let Pydantic handle parsing and validation in a single step. Performance-wise it isn't absolutely necessary to use model_validate_json(), but do so anyway to avoid building intermediate Python objects and to condense your code.

3) Use TypeAdapter for bulk validation

We have a User model and now we want to validate a list of Users.

The naïve approach

We can loop through the list and validate each entry, or create a wrapper model. Assume batch is a list[dict]:

    # 1. Per-item validation
    models = [User.model_validate(item) for item in batch]

    # 2. Wrapper model
    # 2.1 Define a wrapper model:
    class UserList(BaseModel):
        users: list[User]

    # 2.2 Validate with the wrapper model
    models = UserList.model_validate({"users": batch}).users

The optimized approach

Type adapters are faster for validating lists of objects.

    ta_annotated = TypeAdapter(list[UserAnnotated])
    models = ta_annotated.validate_python(batch)

Why this is faster

Leave the heavy lifting to Rust. Using a TypeAdapter doesn't require an extra wrapper to be constructed, and validation runs using a single compiled schema. There are fewer Python-to-Rust-and-back boundary crossings and there is less object allocation overhead.

Wrapper models are slower because they do more than validate the list:

- Construct an extra model instance

- Track field sets and internal state

- Handle configuration, defaults, extras

That extra layer is small per call, but becomes measurable at scale.
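The three approaches can be compared in a compact sketch. The `User` model, the `USER_LIST_ADAPTER` name, and the sample batch are assumptions made for illustration; the point is that all routes yield the same validated objects:

```python
from typing import Annotated

from pydantic import BaseModel, Field, TypeAdapter


class User(BaseModel):
    id: Annotated[int, Field(ge=1)]


class UserList(BaseModel):
    users: list[User]


# Build the adapter once (for example at module level) and reuse it,
# since constructing it is where the schema gets compiled.
USER_LIST_ADAPTER = TypeAdapter(list[User])

batch = [{"id": i} for i in range(1, 4)]  # hypothetical input

per_item = [User.model_validate(item) for item in batch]
wrapped = UserList.model_validate({"users": batch}).users
adapted = USER_LIST_ADAPTER.validate_python(batch)

# The same adapter can also validate a JSON array directly.
from_json = USER_LIST_ADAPTER.validate_json('[{"id": 1}, {"id": 2}, {"id": 3}]')

print(per_item == wrapped == adapted == from_json)
```

Creating the adapter inside a hot loop would repeat the schema construction on every iteration, so keeping a single shared instance is the safer default.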

Benchmarked

With large batches we see that the type adapter is significantly faster, especially compared to the wrapper model.

Benchmark (time in seconds)

    ┏━━━━━━━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━┳━━━━━━━━┓
    ┃ Method       ┃ n=100 ┃ n=1K  ┃ n=10K ┃ n=50K ┃ n=100K ┃ n=250K ┃
    ┡━━━━━━━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━╇━━━━━━━━┩
    │ Per-item     │ 0.000 │ 0.001 │ 0.021 │ 0.091 │ 0.236  │ 0.502  │
    │ Wrapper model│ 0.000 │ 0.001 │ 0.008 │ 0.108 │ 0.208  │ 0.602  │
    │ TypeAdapter  │ 0.000 │ 0.001 │ 0.021 │ 0.083 │ 0.152  │ 0.381  │
    └──────────────┴───────┴───────┴───────┴───────┴────────┴────────┘

In absolute terms, however, the speedup saves us around 120 to 220 milliseconds for 250k objects.

Verdict

When you just want to validate a type, not define a domain object, TypeAdapter is the fastest and cleanest option. Although the time saved is not essential, it skips unnecessary model instantiation and avoids Python-side validation loops, making your code cleaner and more readable.

4) Avoid from_attributes unless you need it

from_attributes is a setting on your model class. When you set it to True, you tell Pydantic to read values from object attributes instead of dictionary keys. This matters when your input is anything but a dictionary, like a SQLAlchemy ORM instance, a dataclass, or any plain Python object with attributes.

By default from_attributes is False. Sometimes developers set it to True to keep the model flexible:

    class Product(BaseModel):
        id: int
        name: str

        model_config = ConfigDict(from_attributes=True)

If you only pass dictionaries to your model, however, it's best to avoid from_attributes because it requires Python to do much more work. The resulting overhead provides no benefit when the input is already a plain mapping.

Why from_attributes=True is slower

Reading from attributes uses getattr() instead of a dictionary lookup, which is slower. It can also trigger behavior on the object being read, such as descriptors, properties, or ORM lazy loading.
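The side effect of attribute access can be sketched with a property that counts its own reads. `ProductRow` is a hypothetical stand-in for an ORM row and is not taken from any real ORM:

```python
from pydantic import BaseModel, ConfigDict


class Product(BaseModel):
    model_config = ConfigDict(from_attributes=True)

    id: int
    name: str


class ProductRow:
    """Hypothetical stand-in for an ORM row with a computed attribute."""

    def __init__(self, id: int) -> None:
        self.id = id
        self.name_reads = 0

    @property
    def name(self) -> str:
        # A real ORM might issue a lazy-load query at this point.
        self.name_reads += 1
        return f"product-{self.id}"


row = ProductRow(1)
product = Product.model_validate(row)  # values are read via attribute access
print(product.name, row.name_reads)
```

Validation runs the property body, so any cost hidden behind an attribute is paid during validation as well.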

Benchmark

As batch sizes get larger, using attributes gets more and more expensive.

Benchmark (time in seconds)

    ┏━━━━━━━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━┳━━━━━━━━┓
    ┃ Method       ┃ n=100 ┃ n=1K  ┃ n=10K ┃ n=50K ┃ n=100K ┃ n=250K ┃
    ┡━━━━━━━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━╇━━━━━━━━┩
    │ with attribs │ 0.000 │ 0.001 │ 0.011 │ 0.110 │ 0.243  │ 0.593  │
    │ no attribs   │ 0.000 │ 0.001 │ 0.012 │ 0.103 │ 0.196  │ 0.459  │
    └──────────────┴───────┴───────┴───────┴───────┴────────┴────────┘

In absolute terms roughly 0.13 seconds is saved when validating 250k objects.

Verdict

Only use from_attributes when your input is not a dict. It exists to support attribute-based objects (ORMs, dataclasses, domain objects). In those cases, it can be faster than first dumping the object to a dict and then validating it. For plain mappings, it adds overhead with no benefit.
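One way to follow this advice is to leave the model configuration at its default and opt in per call, using the `from_attributes` argument of `model_validate()`. The sketch below assumes a hypothetical `ProductRecord` dataclass as the attribute-based source:

```python
from dataclasses import dataclass

from pydantic import BaseModel, ValidationError


class Product(BaseModel):
    # No model_config here: from_attributes stays at its default of False.
    id: int
    name: str


@dataclass
class ProductRecord:  # hypothetical attribute-based source object
    id: int
    name: str


record = ProductRecord(id=1, name="widget")

from_dict = Product.model_validate({"id": 1, "name": "widget"})
from_obj = Product.model_validate(record, from_attributes=True)  # opt in per call

try:
    Product.model_validate(record)  # attribute reading is not enabled here
    rejected = False
except ValidationError:
    rejected = True

print(from_dict == from_obj, rejected)
```

This keeps the dictionary path on its default settings while still allowing attribute-based input at the few call sites that need it.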

Conclusion

The point of these optimizations is not to shave off a few milliseconds for their own sake. In absolute terms, even a 100ms difference is rarely the bottleneck in a real system.

The real value lies in writing clearer code and using your tools right.

Using the tips in this article leads to clearer models, more explicit intent, and better alignment with how Pydantic is designed to work. These patterns move validation logic out of ad-hoc Python code and into declarative schemas that are easier to read, reason about, and maintain.

The performance improvements are a side effect of doing things the right way. When validation rules are expressed declaratively, Pydantic can apply them consistently, optimize them internally, and scale them naturally as your data grows.

In short:

Don't adopt these patterns just because they're faster. Adopt them because they make your code simpler, more explicit, and better suited to the tools you're using.

The speedup is just a nice bonus.

I hope this article was as clear as I meant it to be, but if that is not the case, please let me know what I can do to clarify further. In the meantime, check out my other articles on all kinds of programming-related topics.

Happy coding!

— Mike
