street in Lao PDR. The school is 200 meters away. Traffic roars, smoke from burning rubbish drifts across the path, and children walk straight through it. What are they breathing today? Without local data, no one really knows.

Across East Asia and the Pacific, 325 million children [1] breathe toxic air every day, often at levels 10 times above safe limits. The damage is often silent (affected lungs, asthma), but in acute cases it can lead to missed school days. Futures are at stake. In the long run, health systems are strained, and economies have to bear the costs.

In many cases, air quality data isn’t even available.

No monitors. No evidence. No protection.

In this second part of the blog series [2], we examine the data repositories where useful air-quality data is available, how to import the data, and how to get it up and running in your notebook. We also demystify data formats such as GeoJSON, Parquet/GeoParquet, NetCDF/HDF5, COG, GRIB, and Zarr so you can pick the right tool for the job. We are building up to the next part, where we go step by step through how we developed an open-source air quality model.

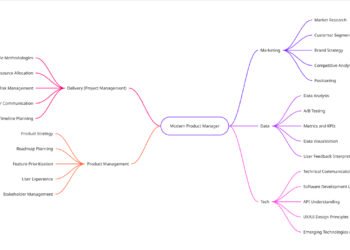

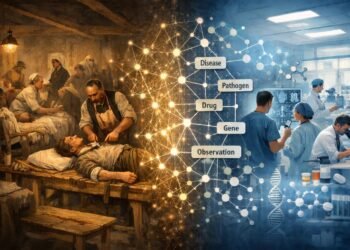

In the past few years, there has been a significant push to generate and use air-quality data. These data come from different sources, and their quality varies accordingly. A few kinds of repository help quantify air quality: regulatory stations for ground truth, community sensors to understand hyperlocal variations, satellites for regional context, and model reanalyses for estimates (Figure 2). The good news: most of this is open. The better news: the code to get started is relatively short.

Repository quick-starts (with minimal Python)

In this section, we move from concepts to practice. Below, we walk through a set of commonly used open-source repositories and show the smallest possible code you need to start pulling data from each of them. All examples assume Python ≥3.10, with packages installed via pip as needed.

For each numbered repository, you will find:

- a short description of what the data source is and how it is maintained,

- typical use-cases (when this source is a good fit),

- how to access it (API keys, sign-up notes, or direct URLs), and

- a minimal Python code snippet to extract data.

Think of this as a practical guide where you can skim the descriptions, pick the source that matches your problem, and then adapt the code to plug straight into your own analysis or model pipeline.

Tip: Keep secrets out of code. Use environment variables for tokens (e.g., export AIRNOW_API_KEY=…).
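A minimal sketch of that tip, assuming a small helper of our own (`require_env` is a hypothetical name, not part of any library): it reads a token from the environment and fails with a clear message when the variable is missing, so a key never has to be pasted into a notebook cell.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable or raise a clear error."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"Environment variable {name} is not set; "
            f"run `export {name}=...` before starting the notebook."
        )
    return value


# Usage (the variable name matches the tip above):
# api_key = require_env("AIRNOW_API_KEY")
```

Failing early like this tends to be easier to debug than an authentication error returned by a remote API several cells later.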

1) OpenAQ (global ground measurements; open API)

OpenAQ [3] is an open-source data platform that hosts global air quality data for pollutants such as PM2.5, PM10, and O3. It sources these data by partnering with various governmental partners, community partners, and air quality sensor companies such as AirGradient and IQAir, among others.

Great for: quick cross-country pulls, harmonised units/metadata, reproducible pipelines.

Sign up for an OpenAQ API key at https://explore.openaq.org. After signing up, find your API key in your settings. Use this key to authenticate requests.

!pip install openaq pandas

import datetime
from datetime import timedelta

import pandas as pd
from pandas import json_normalize
from openaq import OpenAQ

# follow the quickstart to get the API key: https://docs.openaq.org/using-the-api/quick-start
api_key = ''  # enter your API key before executing
client = OpenAQ(api_key=api_key)  # use the API key generated earlier

# get the locations of all sensors in the selected countries; country IDs: https://docs.openaq.org/resources/countries
locations = client.locations.list(
    countries_id=[68, 111],
    limit=1000
)
data_locations = locations.dict()
df_sensors_country = json_normalize(data_locations['results'])

# one row per sensor, keeping only the PM2.5 sensors
df_sensors_exploded = df_sensors_country.explode('sensors')
df_sensors_exploded['sensor_id'] = df_sensors_exploded['sensors'].apply(lambda x: x['id'])
df_sensors_exploded['sensor_type'] = df_sensors_exploded['sensors'].apply(lambda x: x['name'])
df_sensors_pm25 = df_sensors_exploded[df_sensors_exploded['sensor_type'] == "pm25 µg/m³"]
df_sensors_pm25

# go through each sensor and extract the hourly measurements
df_concat_aq_data = pd.DataFrame()
to_date = datetime.datetime.now()
from_date = to_date - timedelta(days=2)  # get the past 2 days of data
sensor_list = df_sensors_pm25.sensor_id

for sensor_id in sensor_list[0:5]:
    print("-----")
    response = client.measurements.list(
        sensors_id=sensor_id,
        datetime_from=from_date,
        datetime_to=to_date,
        limit=500)
    print(response)
    data_measurements = response.dict()
    df_hourly_data = json_normalize(data_measurements['results'])
    df_hourly_data["sensor_id"] = sensor_id
    if len(df_hourly_data) > 0:
        df_concat_aq_data = pd.concat([df_concat_aq_data, df_hourly_data])

# keep only the columns needed downstream
df_concat_aq_data = df_concat_aq_data[["sensor_id","period.datetime_from.utc","period.datetime_to.utc","parameter.name","value"]]
df_concat_aq_data

2) EPA AQS Data Mart (U.S. regulatory archive; token needed)

The EPA AQS Data Mart [4] is a U.S. regulatory data archive that hosts quality-controlled air-quality measurements from thousands of monitoring stations across the country. It provides long-term records for criteria pollutants such as PM₂․₅, PM₁₀, O₃, NO₂, SO₂, and CO, along with detailed site metadata and QA flags, and is freely accessible via an API once you register and obtain an access token. It provides meteorological data as well.

Great for: authoritative QA/QC-d U.S. data.

Sign up for an AQS Data Mart account on the US EPA website at: https://aqs.epa.gov/aqsweb/documents/data_api.html

Create a .env file in your environment and add your credentials, including AQS email and AQS key.
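`os.getenv` only sees variables that are already in the process environment, so the .env file has to be loaded first. Packages such as python-dotenv do this; the sketch below is a dependency-free alternative under simple assumptions (one KEY=VALUE pair per line, `#` comments, optional quotes). The function name `load_env_file` and the default file name are illustrative choices.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict:
    """Parse simple KEY=VALUE lines and copy them into os.environ."""
    loaded = {}
    env_path = Path(path)
    if not env_path.exists():
        return loaded
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        # Skip blanks, comments, and lines without an assignment
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        key = key.strip()
        value = value.strip().strip('"').strip("'")
        loaded[key] = value
        # Do not overwrite variables that are already set in the shell
        os.environ.setdefault(key, value)
    return loaded
```

Calling `load_env_file()` once at the top of the notebook makes entries such as `AQS_EMAIL` and `AQS_KEY` visible to `os.getenv`.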

# pip install requests pandas
import os, requests, pandas as pd

AQS_EMAIL = os.getenv("AQS_EMAIL")
AQS_KEY = os.getenv("AQS_KEY")

url = "https://aqs.epa.gov/data/api/sampleData/byState"
# param 88101 = PM2.5; bdate/edate are the begin and end dates; state 06 = California
params = {"email": AQS_EMAIL, "key": AQS_KEY, "param": "88101", "bdate": "20250101", "edate": "20250107", "state": "06"}
r = requests.get(url, params=params, timeout=60)
r.raise_for_status()  # stop early on HTTP errors
df = pd.json_normalize(r.json()["Data"])
print(df[["state_name","county_name","date_local","sample_measurement","units_of_measure"]].head())

3) AirNow (U.S. real-time indices; API key)

AirNow [5] is a U.S. government platform that provides near real-time air-quality index (AQI) information based on regulatory monitoring data. It publishes current and forecast AQI values for pollutants such as PM₂․₅ and O₃, along with category breakpoints (“Good”, “Moderate”, etc.) that are easy to communicate to the public. Data can be accessed programmatically via the AirNow API once you register and obtain an API key.

Great for: wildfire and public-facing AQI visuals.

Register for an AirNow API account via the AirNow API portal: https://docs.airnowapi.org/

From the Log In page, select “Request an AirNow API Account” and complete the registration form with your email and basic details. After you activate your account, you will find your API key in your AirNow API dashboard; use this key to authenticate all calls to the AirNow web services.
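To make the category breakpoints concrete, here is a small sketch that maps an AQI value to its category using the standard U.S. AQI bands (0–50 Good, 51–100 Moderate, and so on). The function name `aqi_category` is our own; the AirNow API already returns a category with each observation, so this is mainly useful when you hold bare AQI numbers.

```python
from bisect import bisect_left

# Upper bound of each U.S. AQI band and the matching category name
AQI_UPPER_BOUNDS = [50, 100, 150, 200, 300]
AQI_CATEGORIES = [
    "Good",
    "Moderate",
    "Unhealthy for Sensitive Groups",
    "Unhealthy",
    "Very Unhealthy",
    "Hazardous",
]


def aqi_category(aqi: int) -> str:
    """Return the U.S. AQI category name for a non-negative AQI value."""
    if aqi < 0:
        raise ValueError("AQI cannot be negative")
    # bisect_left finds the first band whose upper bound is >= aqi;
    # values above 300 fall through to the last category
    return AQI_CATEGORIES[bisect_left(AQI_UPPER_BOUNDS, aqi)]


for value in (42, 100, 101, 175, 250, 320):
    print(value, aqi_category(value))
```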

import os, requests, pandas as pd

API_KEY = os.getenv("AIRNOW_API_KEY")
url = "https://www.airnowapi.org/aq/observation/latLong/current/"
params = {"format": "application/json", "latitude": 37.7749, "longitude": -122.4194, "distance": 25, "API_KEY": API_KEY}

# json_normalize flattens the nested Category object into Category.Number / Category.Name
df = pd.json_normalize(requests.get(url, params=params, timeout=30).json())
print(df[["ParameterName", "AQI", "Category.Name", "DateObserved", "HourObserved"]])

4) Copernicus Atmosphere Monitoring Service (CAMS; Atmosphere Data Store)

The Copernicus Atmosphere Monitoring Service [6], implemented by ECMWF for the EU’s Copernicus programme, provides global reanalyses and near-real-time forecasts of atmospheric composition. Through the Atmosphere Data Store (ADS), you can access gridded fields for aerosols, reactive gases (O₃, NO₂, etc.), greenhouse gases and related meteorological variables, with multi-year records suitable for both research and operational applications. All CAMS products in the ADS are open and free of charge, subject to accepting the Copernicus licence.

Great for: global background fields (aerosols & trace gases), forecasts and reanalyses.

How to register and get API access

- Go to the Atmosphere Data Store: https://ads.atmosphere.copernicus.eu.

- Click Login / Register in the top-right corner and create a (free) Copernicus/ECMWF account.

- After confirming your email, log in and go to your profile page to find your ADS API key (UID + key).

- Follow the ADS “How to use the API” instructions to create a configuration file (typically ~/.cdsapirc) with:

- url: https://ads.atmosphere.copernicus.eu/api

- key:

:

- On the web page of each CAMS dataset you want to use, go to the Download data tab and accept the licence at the bottom once; only then will API requests for that dataset succeed.

Once this is set up, you can use the standard cdsapi Python client to programmatically download CAMS datasets from the ADS.

# pip install cdsapi xarray cfgrib
import cdsapi

c = cdsapi.Client()  # reads the url and key from ~/.cdsapirc

# Example: CAMS global reanalysis (EAC4) total column ozone (toy example)
c.retrieve(
    "cams-global-reanalysis-eac4",
    {"variable": "total_column_ozone", "date": "2025-08-01/2025-08-02", "time": ["00:00", "12:00"],
     "format": "grib"},
    "cams_ozone.grib")

5) NASA Earthdata (LAADS DAAC / GES DISC; token/login)

NASA Earthdata [7] provides unified sign-on access to a wide range of Earth science data, including satellite aerosol and trace gas products that are essential for air-quality applications. Two key centres for atmospheric composition are:

- LAADS DAAC (Level-1 and Atmosphere Archive and Distribution System DAAC), which hosts MODIS, VIIRS and other instrument products (e.g., AOD, cloud, fire, radiance).

- GES DISC (Goddard Earth Sciences Data and Information Services Center), which serves model and satellite products such as MERRA-2 reanalysis, OMI, TROPOMI, and related atmospheric datasets.

Most of these datasets are free to use but require a NASA Earthdata Login; downloads are authenticated either via HTTP basic auth (username/password stored in .netrc) or via a personal access token (PAT) in request headers.

Great for: MODIS/VIIRS AOD, MAIAC, TROPOMI trace-gas products.

How to register and get API/download access:

- Create a NASA Earthdata Login account at:

https://urs.earthdata.nasa.gov

- Confirm your email and log in to your Earthdata profile.

- Under your profile, generate a personal access token (PAT). Save this token securely; you can use it in scripts via an Authorization: Bearer header or in tools that support Earthdata tokens.

- For classic wget/curl-based downloads, you can alternatively create a ~/.netrc file to store your Earthdata username and password, for example:

machine urs.earthdata.nasa.gov

login

password

Then set file permissions to user-only (chmod 600 ~/.netrc) so command-line tools can authenticate automatically.

- For LAADS DAAC products, go to https://ladsweb.modaps.eosdis.nasa.gov, log in with your Earthdata credentials, and use the Search & Download interface to build download URLs; you can copy the auto-generated wget/curl commands into your scripts.

- For GES DISC datasets, start from https://disc.gsfc.nasa.gov, choose a dataset (e.g., MERRA-2), and use the “Data Access” or “Subset/Get Data” tools. The site can generate script templates (Python, wget, etc.) that already include the correct endpoints for authenticated access.

Once your Earthdata Login and token are set up, LAADS DAAC and GES DISC behave like standard HTTPS APIs: you can call them from Python (e.g., with requests, xarray + pydap/OPeNDAP, or s3fs for cloud buckets) using your credentials or token for authenticated, scriptable downloads.
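The token-based pattern described above can be sketched with the standard library alone. This is an illustration, not NASA's reference client: `EARTHDATA_TOKEN` is an assumed environment variable name, `build_earthdata_request` is a hypothetical helper, and the URL in the usage comment is a placeholder. The request is only constructed here; nothing is downloaded until it is passed to `urllib.request.urlopen`.

```python
import os
import urllib.request
from typing import Optional


def build_earthdata_request(url: str, token: Optional[str] = None) -> urllib.request.Request:
    """Build an HTTPS request carrying an Earthdata token as a Bearer header."""
    token = token or os.environ.get("EARTHDATA_TOKEN", "")
    if not token:
        raise RuntimeError("No Earthdata token provided or found in EARTHDATA_TOKEN")
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


# Usage (placeholder URL; replace with a link generated by LAADS DAAC or GES DISC):
# req = build_earthdata_request("https://example.invalid/path/to/granule.hdf")
# with urllib.request.urlopen(req, timeout=60) as resp, open("granule.hdf", "wb") as out:
#     out.write(resp.read())
```

Keeping the token in an environment variable rather than in the script follows the same secrets tip given earlier in this post.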

# Downloads via HTTPS with Earthdata login.
# pip install requests
import requests

url = "https://ladsweb.modaps.eosdis.nasa.gov/archive/allData/6/MCD19A2/2025/214/MCD19A2.A2025214.h21v09.006.2025xxxxxx.hdf"

# Requires a valid token cookie; recommend using .netrc or requests.Session() with auth
# See NASA docs for token-based download; here we only illustrate the pattern:
# s = requests.Session(); s.auth = (USERNAME, PASSWORD); r = s.get(url)

6) STAC catalogues (search satellites programmatically)

SpatioTemporal Asset Catalog (STAC) [8] is an open specification for describing geospatial assets, such as satellite scenes, tiles, and derived products, in a consistent, machine-readable way. Instead of manually browsing download portals, you query a STAC API with filters like time, bounding box, cloud cover, platform (e.g., Sentinel-2, Landsat-8, Sentinel-5P), or processing level, and get back JSON items with direct links to COGs, NetCDF, Zarr, or other assets.

Great for: discovering and streaming assets (COGs/NetCDF) without bespoke APIs; works well with Sentinel-5P, Landsat, Sentinel-2, and more.

How to register and get API access:

STAC itself is just a standard; access depends on the specific STAC API you use:

- Many public STAC catalogues (e.g., demo or research endpoints) are fully open and require no registration: you can hit their /search endpoint directly with HTTP POST/GET.

- Some cloud platforms that expose STAC (for example, commercial or large cloud providers) require you to create a free account and obtain credentials before you can read the underlying assets (e.g., blobs in S3/Blob storage), even though the STAC metadata is open.
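For the open endpoints, a search is just a JSON document sent to /search. The sketch below builds such a body with the core STAC API search fields (collections, bbox, datetime, limit); the helper name and the example values are our own, and the network call is left commented out because the endpoint depends on the provider you choose.

```python
import json


def build_stac_search(collections, bbox, start, end, limit=10):
    """Return a STAC API search body as a plain dict."""
    min_x, min_y, max_x, max_y = bbox
    if not (min_x < max_x and min_y < max_y):
        raise ValueError("bbox must be [min_lon, min_lat, max_lon, max_lat]")
    return {
        "collections": list(collections),
        "bbox": [min_x, min_y, max_x, max_y],
        # Closed interval written as start/end in RFC 3339 format
        "datetime": f"{start}T00:00:00Z/{end}T23:59:59Z",
        "limit": limit,
    }


body = build_stac_search(["sentinel-2-l2a"], [102.4, 17.8, 103.0, 18.2], "2024-01-01", "2024-01-31")
print(json.dumps(body, indent=2))

# To send it (the endpoint is provider-specific and shown only as a placeholder):
# import urllib.request
# req = urllib.request.Request("https://example.com/stac/search", data=json.dumps(body).encode(),
#                              headers={"Content-Type": "application/json"}, method="POST")
```

A client library hides this step, but seeing the raw body makes it easier to debug a search that returns nothing.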

A generic pattern is:

- Pick a STAC API endpoint for the satellite data you care about (often documented as something along the lines of https://

/stac or …/stac/search).

- If the provider requires sign-up, create an account in their portal and obtain the API key or storage credentials they recommend (this might be a token, SAS URL, or cloud access role).

- Use a STAC client library in Python (for example, pystac-client) to search the catalogue:

# pip install pystac-client
from pystac_client import Client

api = Client.open("https://example.com/stac")  # placeholder endpoint
search = api.search(
    collections=["sentinel-2-l2a"],
    bbox=[102.4, 17.8, 103.0, 18.2],  # minx, miny, maxx, maxy
    datetime="2024-01-01/2024-01-31",
    query={"eo:cloud_cover": {"lt": 20}},
)
items = list(search.items())
first_item = items[0]
assets = first_item.assets  # e.g., COGs, QA bands, metadata

- For each returned STAC item, follow the asset href links (often HTTPS URLs or cloud URIs like s3://…) and read them with the appropriate library (rasterio/xarray/zarr etc.). If credentials are needed, configure them via environment variables or your cloud SDK as per the provider’s instructions.

Once set up, STAC catalogues give you a uniform, programmatic way to search and retrieve satellite data across different providers, without rewriting your search logic every time you switch from one archive to another.

# pip install pystac-client shapely
from pystac_client import Client
from shapely.geometry import box, mapping

catalog = Client.open("https://earth-search.aws.element84.com/v1")
aoi = mapping(box(-0.3, 5.5, 0.3, 5.9))  # bbox around Accra
# max_items caps the total number of items returned (limit only sets the page size)
search = catalog.search(collections=["sentinel-2-l2a"], intersects=aoi, max_items=5)
items = list(search.items())
for it in items:
    print(it.id, list(it.assets.keys())[:5])  # e.g., "B04", "B08", "SCL", "visual"

It is preferable to use STAC where possible, as STAC catalogues provide clear metadata, cloud-optimised assets, and easy filtering by time/space.

7) Google Earth Engine (GEE; fast prototyping at scale)

Google Earth Engine [9] is a cloud-based geospatial analysis platform that hosts a large catalogue of satellite, climate, and land-surface datasets (e.g., MODIS, Landsat, Sentinel, reanalyses) and lets you process them at scale without managing your own infrastructure. You write short scripts in JavaScript or Python, and GEE handles the heavy lifting, such as data access, tiling, reprojection, and parallel computation, making it ideal for fast prototyping, exploratory analyses, and teaching.

However, GEE itself is not open source: it is a proprietary, closed platform whose underlying codebase is not publicly available. This has implications for the open, reproducible workflows discussed in the first Air for Tomorrow blog [add link].

Great for: testing fusion/downscaling over a city/region using petabyte-scale datasets.

How to register and get access

- Go to the Earth Engine sign-up page: https://earthengine.google.com.

- Sign in with a Google account and complete the non-commercial sign-up form, describing your intended use (research, education, or personal, non-commercial projects).

- Once your account is approved, you can:

- use the browser-based Code Editor to write JavaScript Earth Engine scripts; and

- enable the Earth Engine API in Google Cloud and install the earthengine-api Python package (pip install earthengine-api) to run workflows from Python notebooks.

- When sharing your work, consider exporting key intermediate results (e.g., GeoTIFF/COG, NetCDF/Zarr) and documenting your processing steps in open-source code so that others can re-create the analysis without relying entirely on GEE.

When used this way, Earth Engine becomes a powerful “rapid laboratory” for testing ideas, which you can then harden into fully open, portable pipelines for production and long-term stewardship.

# pip install earthengine-api
import ee

ee.Initialize()  # first run: ee.Authenticate() in a console

# mean tropospheric NO2 column over one week (parentheses allow the chained calls to span lines)
s5p = (ee.ImageCollection('COPERNICUS/S5P/OFFL/L3_NO2')
       .select('NO2_column_number_density')
       .filterDate('2025-08-01', '2025-08-07')
       .mean())
print(s5p.getInfo()['bands'][0]['id'])
# Exporting and visualization happen inside GEE; you can sample to a grid then .getDownloadURL()

8) HIMAWARI

Himawari-8 and Himawari-9 are geostationary meteorological satellites operated by the Japan Meteorological Agency (JMA). Their Advanced Himawari Imager (AHI) provides multi-band visible, near-infrared and infrared imagery over East Asia and the western–central Pacific, with full-disk scans every 10 minutes and even faster refresh over target areas. This high-cadence view is extremely useful for tracking smoke plumes, dust, volcanic eruptions, convective storms and the diurnal evolution of clouds, which are exactly the kinds of processes that modulate near-surface air quality.

Great for: tracking diurnal haze/smoke plumes and fire events, generating high-frequency AOD to fill polar-orbit gaps, and rapid situational awareness for cities across SE/E Asia (via JAXA P-Tree L3 products).

How to access and register

Option A – Open archive via NOAA on AWS (no sign-up required)

- Browse the dataset description on the AWS Registry of Open Data: https://registry.opendata.aws/noaa-himawari/

- Himawari-8 and Himawari-9 imagery are hosted in public S3 buckets (s3://noaa-himawari8/ and s3://noaa-himawari9/). Because the buckets are world-readable, you can list or download files anonymously, for example:

aws s3 ls --no-sign-request s3://noaa-himawari9/

or access individual objects via HTTPS (e.g., https://noaa-himawari9.s3.amazonaws.com/…).

- For Python workflows, you can use libraries like s3fs, fsspec, xarray, or rasterio to stream data directly from these buckets without prior registration, keeping in mind the attribution guidance from JMA/NOAA when you publish results.

Option B – JAXA Himawari Monitor / P-Tree (research & education account)

- Go to the JAXA Himawari Monitor / P-Tree portal:

https://www.eorc.jaxa.jp/ptree/

- Click User Registration / Account request and read the “Precautions” and “Terms of Use”. Data access is restricted to non-profit purposes such as research and education; commercial users are directed to the Japan Meteorological Business Support Center.

- Submit your email address in the account request form. You will receive a brief acceptance email, then a link to complete your user information. After manual review, JAXA enables your access and notifies you once you can download Himawari Standard Data and geophysical parameter products.

- Once approved, you can log in to download near-real-time and archived Himawari data via the P-Tree FTP/HTTP services, following JAXA’s guidance on non-redistribution and citation.

In practice, a common pattern is to use the NOAA/AWS buckets for open, scriptable access to raw imagery, and the JAXA P-Tree products when you need value-added parameters (e.g., cloud or aerosol properties) and are working within non-profit research or educational projects.

# install the dependencies used to download and open the files
!pip install xarray netCDF4
!pip install rasterio polars_h3
!pip install geopandas pykrige
!pip install polars==1.25.2
!pip install dask[complete] rioxarray h3==3.7.7
!pip install h3ronpy==0.21.1
!pip install geowrangler

# Himawari using the JAXA Himawari Monitor / P-Tree
# create your account here and use the username and password sent by email - https://www.eorc.jaxa.jp/ptree/registration_top.html
user = ''  # enter the username
password = ''  # enter the password

from ftplib import FTP
import os

import matplotlib.pyplot as plt
import numpy as np
import rasterio
from rasterio.transform import from_origin
import xarray as xr


def get_himawari_ftp_past_2_days(user, password):
    # FTP connection details
    ftp = FTP('ftp.ptree.jaxa.jp')
    ftp.login(user=user, passwd=password)

    # AOD directory: /pub/himawari/L3/ARP/031/
    # details of the AOD directory here: https://www.eorc.jaxa.jp/ptree/documents/README_HimawariGeo_en.txt
    overall_path = "/pub/himawari/L3/ARP/031/"
    directories = overall_path.strip("/").split("/")
    for directory in directories:
        ftp.cwd(directory)

    # List the month folders in the target directory, in ascending order
    date_month_files = ftp.nlst()
    date_month_files.sort(reverse=False)
    print("Files in target directory:", date_month_files)

    # keep the last 2 months and list all the days within each of them
    limited_months_list = date_month_files[-2:]
    list_combined_days_month = []
    for month in limited_months_list:
        ftp.cwd(month)
        date_day_files = ftp.nlst()
        date_day_files.sort(reverse=False)
        # combine each day with its month: month + "/" + day
        list_combined_days_month += [month + "/" + date_day_file for date_day_file in date_day_files]
        ftp.cwd("..")

    # remove all elements containing daily or monthly from list_combined_days_month
    list_combined_days_month = [item for item in list_combined_days_month if 'daily' not in item and 'monthly' not in item]

    # get the list of days we want to download: in our case the last 2 days, for NRT
    limited_list_combined_days_month = list_combined_days_month[-2:]

    for month_day_date in limited_list_combined_days_month:
        # navigate to the relevant directory
        ftp.cwd(month_day_date)
        print(f"directory: {month_day_date}")
        # get the list of the hourly files within each directory
        date_hour_files = ftp.nlst()
        os.makedirs(f"./raw_data/{month_day_date}", exist_ok=True)
        # for each hourly file in the list
        for date_hour_file in date_hour_files:
            target_file_path = f"./raw_data/{month_day_date}/{date_hour_file}"
            # Download the target file only if it does not already exist
            if not os.path.exists(target_file_path):
                with open(target_file_path, "wb") as local_file:
                    ftp.retrbinary(f"RETR {date_hour_file}", local_file.write)
                print(f"Downloaded {date_hour_file} successfully!")
            else:
                print(f"File already exists: {date_hour_file}")
        print("--------------")
        # go back 2 steps in the ftp tree (day, then month)
        ftp.cwd("..")
        ftp.cwd("..")


def transform_to_tif():
    # get the list of month folders in the raw_data folder, in ascending order
    month_file_list = os.listdir("./raw_data")
    month_file_list.sort(reverse=False)
    nb_errors = 0

    # consider the day folders of the past 2 months only
    for month_file in month_file_list[-2:]:
        print("-----------------------------------------")
        print(f"Month considered: {month_file}")
        date_file_list = os.listdir(f"./raw_data/{month_file}")
        date_file_list.sort(reverse=False)

        # get the list of files for each of the last 2 day folders
        for date_file in date_file_list[-2:]:
            print("---------------------------")
            print(f"Day considered: {date_file}")
            hour_file_list = os.listdir(f"./raw_data/{month_file}/{date_file}")
            hour_file_list.sort(reverse=False)

            # convert each hourly NetCDF file into a GeoTIFF
            for hour_file in hour_file_list:
                file_path = f"./raw_data/{month_file}/{date_file}/{hour_file}"
                hour_file_tif = hour_file.replace(".nc", ".tif")
                output_tif = f"./tif/{month_file}/{date_file}/{hour_file_tif}"
                if os.path.exists(output_tif):
                    print(f"File already exists: {output_tif}")
                else:
                    try:
                        dataset = xr.open_dataset(file_path, engine='netcdf4')
                    except Exception:
                        # go to the next hour_file
                        print(f"error opening {hour_file} file - skipping ")
                        nb_errors = nb_errors + 1
                        continue

                    # Access a specific variable
                    variable_name = list(dataset.data_vars.keys())[1]  # Merged AOT product
                    data = dataset[variable_name]

                    # Plot data (if it is 2D and compatible)
                    plt.figure()
                    data.plot()
                    plt.title(f'{date_file}')
                    plt.show()

                    # Extract coordinates from the dataset if available
                    lon = dataset['longitude'] if 'longitude' in dataset.coords else None
                    lat = dataset['latitude'] if 'latitude' in dataset.coords else None

                    # Handle missing lat/lon (example assumes an evenly spaced grid)
                    if lon is None or lat is None:
                        lon_start, lon_step = -180, 0.05  # Example values
                        lat_start, lat_step = 90, -0.05  # Example values
                        lon = xr.DataArray(lon_start + lon_step * np.arange(data.shape[-1]), dims=['x'])
                        lat = xr.DataArray(lat_start + lat_step * np.arange(data.shape[-2]), dims=['y'])

                    # Define the affine transform for georeferencing
                    transform = from_origin(lon.min().item(), lat.max().item(), abs(lon[1] - lon[0]).item(), abs(lat[0] - lat[1]).item())

                    # Save to GeoTIFF
                    os.makedirs(f"./tif/{month_file}/{date_file}", exist_ok=True)
                    with rasterio.open(
                        output_tif,
                        'w',
                        driver='GTiff',
                        height=data.shape[-2],
                        width=data.shape[-1],
                        count=1,  # Number of bands
                        dtype=data.dtype.name,
                        crs='EPSG:4326',  # Coordinate Reference System (e.g., WGS84)
                        transform=transform
                    ) as dst:
                        dst.write(data.values, 1)  # Write the data to band 1
                    print(f"Saved {output_tif} successfully!")

    print(f"{nb_errors} error(s) ")


get_himawari_ftp_past_2_days(user, password)
transform_to_tif()

9) NASA FIRMS [Special Highlight]

NASA’s Fire Information for Resource Management System (FIRMS) [10] provides near-real-time information on active fires and thermal anomalies detected by instruments such as MODIS and VIIRS. It offers global coverage with low latency (on the order of minutes to hours), supplying attributes such as fire radiative power, confidence, and acquisition time. FIRMS is widely used for wildfire monitoring, agricultural burning, forest management, and as a proxy input for air-quality and smoke dispersion modelling.

Great for: pinpointing fire hotspots that drive AQ spikes, tracking plume sources and fire-line progression, monitoring crop-residue/forest burns, and triggering rapid response. Easy access via CSV/GeoJSON/Shapefile, map tiles/API, with 24–72 h rolling feeds and full archives for seasonal analysis.

How to register and get API access

- Create a free NASA Earthdata Login account at:

https://urs.earthdata.nasa.gov

- Confirm your email and sign in with your new credentials.

- Go to the FIRMS website you plan to use, for example:

- Click Login (top right) and authenticate with your Earthdata username and password. Once logged in, you can:

- customise map views and download options from the web interface, and

- generate or use FIRMS Web Services/API URLs that honour your authenticated session.

- For scripted access, you can call the FIRMS download or web service endpoints (e.g., GeoJSON, CSV) using standard HTTP tools (e.g., curl, requests in Python). If an endpoint requires authentication, supply your Earthdata credentials via a .netrc file or session cookies, as you would for other Earthdata services.

In practice, FIRMS is a convenient way to pull recent fire locations into an air-quality workflow: you can fetch daily or hourly fire detections for a region, convert them to a GeoDataFrame, and then intersect with wind fields, population grids, or sensor networks to understand potential smoke impacts.
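Before reaching for a full spatial join, a quick distance check is often enough to ask whether a fire is close to a monitor. The sketch below uses the haversine formula on a spherical Earth (mean radius 6371 km); the helper names are our own and the coordinates are made-up illustrative values, not FIRMS output.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, spherical approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two lat/lon points."""
    phi1, phi2 = radians(lat1), radians(lat2)
    d_phi = radians(lat2 - lat1)
    d_lambda = radians(lon2 - lon1)
    a = sin(d_phi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def fires_near(sensor, fires, radius_km=50.0):
    """Return the fires within radius_km of a (lat, lon) sensor location."""
    return [f for f in fires if haversine_km(sensor[0], sensor[1], f[0], f[1]) <= radius_km]


# Hypothetical sensor and fire detections as (latitude, longitude) pairs
sensor = (18.79, 98.98)
fires = [(18.90, 99.10), (19.90, 99.80), (18.60, 98.70)]
print(fires_near(sensor, fires))
```

The 50 km radius is an arbitrary starting point; in a real analysis it would depend on wind, terrain, and fire size.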

#FIRMS

!pip set up geopandas rtree shapely import pandas as pd

import geopandas as gpd

from shapely.geometry import Level

import numpy as np

import matplotlib.pyplot as plt

import rtree

# get boundaries of Thailand

boundaries_country = gpd.read_file(f'https://github.com/wmgeolab/geoBoundaries/uncooked/fcccfab7523d4d5e55dfc7f63c166df918119fd1/releaseData/gbOpen/THA/ADM0/geoBoundaries-THA-ADM0.geojson')

boundaries_country.plot()

# Actual time knowledge supply: https://companies.modaps.eosdis.nasa.gov/active_fire/

# Previous 7 days hyperlinks:

modis_7d_url= "https://companies.modaps.eosdis.nasa.gov/knowledge/active_fire/modis-c6.1/csv/MODIS_C6_1_SouthEast_Asia_7d.csv"

suomi_7d_url= "https://companies.modaps.eosdis.nasa.gov/knowledge/active_fire/suomi-npp-viirs-c2/csv/SUOMI_VIIRS_C2_SouthEast_Asia_7d.csv"

j1_7d_url= "https://companies.modaps.eosdis.nasa.gov/knowledge/active_fire/noaa-20-viirs-c2/csv/J1_VIIRS_C2_SouthEast_Asia_7d.csv"

j2_7d_url="https://companies.modaps.eosdis.nasa.gov/knowledge/active_fire/noaa-21-viirs-c2/csv/J2_VIIRS_C2_SouthEast_Asia_7d.csv"

urls = [modis_7d_url, suomi_7d_url, j1_7d_url, j2_7d_url]

# Collect one GeoDataFrame per satellite feed, then combine them

frames = []

for url in urls:

    df = pd.read_csv(url)

    # Create point geometries from longitude and latitude

    geometry = gpd.points_from_xy(df["longitude"], df["latitude"])

    frames.append(gpd.GeoDataFrame(df, geometry=geometry, crs="EPSG:4326"))

# Concatenate all feeds into a single GeoDataFrame

gdf = pd.concat(frames, ignore_index=True)

# Filter to keep only fires within the country boundaries

gdf = gpd.sjoin(gdf, boundaries_country, how="inner", predicate="within")

# Display fires on a map, sizing each marker by its Fire Radiative Power (FRP)

frp = gdf["frp"].astype(float)

fig, ax = plt.subplots(figsize=(9, 9))

boundaries_country.plot(ax=ax, facecolor="none", edgecolor="0.3", linewidth=0.8)

gdf.plot(ax=ax, markersize=frp, color="red", alpha=0.55)

ax.set_title("Fires within country boundaries (bubble size = Fire Radiative Power)")

ax.set_axis_off()

plt.show()

Data types you'll meet (and how to read them right)

Air-quality work rarely lives in one tidy CSV, so it helps to know which file types you'll meet. You'll move between multidimensional model outputs (NetCDF/GRIB/Zarr), satellite rasters (COG/GeoTIFF), point measurements (CSV/Parquet/GeoParquet), and web-friendly formats (JSON/GeoJSON), often in the same notebook.

This section is a quick field guide to those formats and how to open them without getting stuck.

There is no need to memorise any of this, so feel free to skim the list once, then come back when you hit an unfamiliar file extension in the wild.

- NetCDF4 / HDF5 (self-describing scientific arrays): Widely used for reanalyses, satellite products, and models. Rich metadata, multi-dimensional (time, level, lat, lon). Usual extensions: .nc, .nc4, .h5, .hdf5

Read:

# pip install xarray netCDF4

import xarray as xr

ds = xr.open_dataset("modis_aod_2025.nc")

ds = ds.sel(time=slice("2025-08-01","2025-08-07"))

print(ds)

- Cloud-Optimised GeoTIFF (COG): Raster format tuned for HTTP range requests (stream just what you need). Common for satellite imagery and gridded products. Usual extensions: .tif, .tiff

Read:

# pip install rasterio

import rasterio

from rasterio.windows import from_bounds

with rasterio.open("https://example-bucket/no2_mean_2025.tif") as src:

    # Bounds are (left, bottom, right, top) in the raster's CRS (lon/lat assumed here)

    window = from_bounds(-0.3, 5.5, 0.3, 5.9, transform=src.transform)

    arr = src.read(1, window=window)  # read band 1 for that window only

- JSON (nested) & GeoJSON (features + geometry): Great for APIs and lightweight geospatial work. GeoJSON uses WGS84 (EPSG:4326) by default. Usual extensions: .json, .geojson, .jsonl, .ndjson, .geojsonl, .ndgeojson

Read:

# pip install geopandas

import geopandas as gpd

gdf = gpd.read_file("points.geojson") # columns + geometry

gdf = gdf.set_crs(4326) # label as WGS84 if no CRS is set

- GRIB2 (meteorology, model outputs): Compact, tiled; often used by CAMS/ECMWF/NWP. Usual extensions: .grib2, .grb2, .grib, .grb. In practice, data providers often add compression suffixes too, e.g. .grib2.gz or .grb2.bz2.

Read:

# pip install xarray cfgrib

import xarray as xr

ds = xr.open_dataset("cams_ozone.grib", engine="cfgrib")

- Parquet & GeoParquet (columnar, compressed): Best for big tables: fast column selection, predicate pushdown, partitioning (e.g., by date/city). GeoParquet adds a standard for geometries. Usual extensions: .parquet, .parquet.gz

Read/Write:

# pip install pandas pyarrow geopandas geoparquet

import pandas as pd, geopandas as gpd

df = pd.read_parquet("openaq_accra_2025.parquet") # columns only

# Convert a GeoDataFrame -> GeoParquet

gdf = gpd.read_file("points.geojson")

gdf.to_parquet("points.geoparquet") # preserves geometry & CRS

- CSV/TSV (text tables): Simple, universal. Weak at large scale (slow I/O, no schema), no geometry. Usual extensions: .csv, .tsv (also sometimes .tab, less common)

Read:

# pip install pandas

import pandas as pd

df = pd.read_csv("measurements.csv", parse_dates=["datetime"], dtype={"site_id": "string"})

- Zarr (chunked, cloud-native): Ideal for analysis in the cloud with parallel reads (works great with Dask). Usual extension: .zarr (often a directory / store ending in .zarr; sometimes packaged as .zarr.zip)

Read:

# pip install xarray zarr s3fs

import xarray as xr

ds = xr.open_zarr("s3://bucket/cams_eac4_2025.zarr", consolidated=True)

Note: Shapefile (legacy vector): Works, but brittle (many files, 10-char field limit). It is a legacy format, and it is better to use alternatives like GeoPackage or GeoParquet.
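To show what sits inside the JSON and GeoJSON entries above, here is a small sketch that parses newline-delimited JSON records and wraps them in a GeoJSON FeatureCollection using only the standard library. The field names (`site_id`, `pm25`) and the sample values are hypothetical; a real API response may nest things differently. Note that GeoJSON stores coordinates as [longitude, latitude], in that order.

```python
import json


def parse_ndjson(text):
    """Parse newline-delimited JSON (.ndjson/.jsonl): one object per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def rows_to_feature_collection(rows, lon_key="longitude", lat_key="latitude"):
    """Wrap flat records in a GeoJSON FeatureCollection of Point features."""
    features = []
    for row in rows:
        # Everything except the coordinates becomes a feature property
        properties = {k: v for k, v in row.items() if k not in (lon_key, lat_key)}
        features.append({
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [row[lon_key], row[lat_key]]},
            "properties": properties,
        })
    return {"type": "FeatureCollection", "features": features}


# Hypothetical sensor readings, one JSON object per line
ndjson_text = """
{"site_id": "A1", "pm25": 41.0, "longitude": 102.6, "latitude": 17.9}
{"site_id": "B2", "pm25": 18.5, "longitude": 101.0, "latitude": 15.1}
"""

rows = parse_ndjson(ndjson_text)
collection = rows_to_feature_collection(rows)
geojson_text = json.dumps(collection, indent=2)
print(geojson_text)
```

Writing `geojson_text` to a file ending in .geojson gives you something `gpd.read_file` can open directly, which is a handy bridge between raw API dumps and the geopandas snippets in this section.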

It is important to choose the right geospatial (or scientific) file format. It is not just a storage decision: it directly affects how quickly you can read data, which tools you can use, how easily you can share it, and how well it scales from a desktop workflow to cloud-native processing. The following table (Table 1) provides a practical "format-to-task" cheat sheet: for each common need (from quick API dumps to cloud-scale arrays and web mapping), it lists the most suitable format, the extensions you'll typically encounter, and the core reason that format is a good fit. It can be used as a default starting point when designing pipelines, publishing datasets, or choosing what to download from an external repository.

| Need | Best Bet | Usual Extension | Why |
| --- | --- | --- | --- |
| Human-readable logs or quick API dumps | CSV/JSON | .csv, .json (also .jsonl, .ndjson) | Ubiquitous, easy to inspect |
| Big tables (millions of rows) | Parquet/GeoParquet | .parquet | Fast scans, column pruning, and partitioning |
| Large rasters over HTTP | COG | .tif, .tiff | Range requests; no full download |
| Multi-dimensional scientific data | NetCDF4/HDF5 | .nc, .nc4, .h5, .hdf5 | Self-describing, units/attrs |
| Meteorological model outputs | GRIB2 | .grib2, .grb2, .grib, .grb | Compact, widely supported by wx tools |
| Cloud-scale arrays | Zarr | .zarr | Chunked + parallel; cloud-native |
| Exchangeable vector file | GeoPackage | .gpkg | Single file; robust |
| Web mapping geometries | GeoJSON | .geojson (also .geojsonl, .ndgeojson) | Simple; native to web stacks |
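The cheat sheet above can also be kept as a small lookup in a notebook. The sketch below guesses a format family from a file name, stripping compression suffixes such as .gz or .zip first. The function name `guess_format` and the mapping are our own illustrative choices rather than part of any library, and the approach assumes plain paths or URLs without query strings.

```python
from pathlib import PurePosixPath

# Suffixes that wrap another format (e.g. forecast.grib2.gz, store.zarr.zip)
COMPRESSION_SUFFIXES = {".gz", ".bz2", ".zip"}

# Extension -> format family, following the table above
FORMAT_BY_EXTENSION = {
    ".csv": "CSV", ".tsv": "CSV",
    ".json": "JSON", ".jsonl": "JSON", ".ndjson": "JSON",
    ".geojson": "GeoJSON", ".geojsonl": "GeoJSON", ".ndgeojson": "GeoJSON",
    ".parquet": "Parquet/GeoParquet", ".geoparquet": "Parquet/GeoParquet",
    ".tif": "COG/GeoTIFF", ".tiff": "COG/GeoTIFF",
    ".nc": "NetCDF4/HDF5", ".nc4": "NetCDF4/HDF5",
    ".h5": "NetCDF4/HDF5", ".hdf5": "NetCDF4/HDF5",
    ".grib2": "GRIB2", ".grb2": "GRIB2", ".grib": "GRIB2", ".grb": "GRIB2",
    ".zarr": "Zarr",
    ".gpkg": "GeoPackage",
}


def guess_format(path):
    """Return the format family for a path or URL, or 'unknown'."""
    suffixes = [s.lower() for s in PurePosixPath(path).suffixes]
    # Drop trailing compression suffixes so .grib2.gz resolves to GRIB2
    while suffixes and suffixes[-1] in COMPRESSION_SUFFIXES:
        suffixes.pop()
    if not suffixes:
        return "unknown"
    return FORMAT_BY_EXTENSION.get(suffixes[-1], "unknown")


for name in ["modis_aod_2025.nc", "forecast.grib2.gz", "s3://bucket/cams_eac4_2025.zarr"]:
    print(name, "->", guess_format(name))
```

An extension is only a hint: a .tif file is not necessarily cloud-optimised, and a .h5 file may not follow NetCDF conventions, so it is still worth opening the file and checking its metadata.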

Tip: An interesting talk on STAC and data types (especially GeoParquet): https://github.com/GSA/gtcop-wiki/wiki/June-2025:-GeoParquet,-Iceberg-and-Cloud%E2%80%90Native-Spatial-Data-Infrastructures

Several open STAC catalogues are now available, including public endpoints for optical, radar, and atmospheric products (for example, Landsat and Sentinel imagery via providers such as Element 84's Earth Search or Microsoft's Planetary Computer). STAC makes it much easier to script "find and download all scenes for this polygon and time range" and to integrate different datasets into the same workflow.
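As a rough illustration of that "polygon and time range" query, the sketch below builds a STAC API item-search request body and lists asset links from a response, using only the standard library. The helper names are ours. The endpoint URL and the `sentinel-2-l2a` collection id in the commented-out call are assumptions about Element 84's Earth Search that you should verify against the catalogue before relying on them, and the polygon is a hypothetical area of interest.

```python
import json
import urllib.request


def build_stac_search(collections, polygon_lonlat, start, end, limit=10):
    """Build the JSON body for a STAC API POST /search request."""
    ring = [list(pt) for pt in polygon_lonlat]
    if ring[0] != ring[-1]:
        ring.append(ring[0])  # GeoJSON polygon rings must be closed
    return {
        "collections": list(collections),
        "intersects": {"type": "Polygon", "coordinates": [ring]},
        "datetime": f"{start}/{end}",  # RFC 3339 interval
        "limit": limit,
    }


def search_stac(api_root, payload, timeout=30):
    """POST the search body to {api_root}/search and return the parsed response."""
    request = urllib.request.Request(
        f"{api_root.rstrip('/')}/search",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def asset_hrefs(item_collection):
    """Map each returned item id to its {asset name: download URL}."""
    return {
        item["id"]: {name: asset["href"] for name, asset in item.get("assets", {}).items()}
        for item in item_collection.get("features", [])
    }


# Hypothetical area of interest as (lon, lat) pairs
payload = build_stac_search(
    collections=["sentinel-2-l2a"],  # assumed collection id
    polygon_lonlat=[(102.5, 17.9), (102.7, 17.9), (102.7, 18.1), (102.5, 18.1)],
    start="2025-08-01T00:00:00Z",
    end="2025-08-07T23:59:59Z",
)
print(json.dumps(payload, indent=2))

# Network call left commented out; the endpoint below is an assumption to verify:
# results = search_stac("https://earth-search.aws.element84.com/v1", payload)
# print(asset_hrefs(results))
```

For anything beyond a quick test, a dedicated client such as pystac-client handles paging and authentication for you; this sketch only shows the shape of the request and response.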

Conclusion: from "where" the data lives to "how" you use it

Air for Tomorrow: We started with the question "What are these kids breathing today?" This post provides a practical path and tools to help you answer it. You now know where open air-quality data resides, including regulatory networks, community sensors, satellite measurements, and reanalysis. You also understand what these files are (GeoJSON, Parquet/GeoParquet, NetCDF/HDF5, COG, GRIB, Zarr) and how to retrieve them with compact, reproducible snippets. The goal goes beyond just downloading them; it is to make defensible, fast, and shareable analyses that hold up tomorrow.

You can assemble a credible local picture in hours, not weeks. From fire hotspots (Figure 2) to school-route exposure (Figure 1), you can create exposure maps (Figure 3).

Up next: We will showcase an actual air quality model that we developed at the UNICEF Country Office of Lao PDR with UNICEF EAPRO's Frontier Data Team. We will walk through an open, end-to-end model pipeline. Where ground-level air quality data streams are available, we will cover how feature engineering, bias correction, normalisation, and modelling come together to produce an actionable surface that a regional office can use tomorrow morning.

Contributors: Prithviraj Pramanik, AQAI; Hugo Ruiz Verastegui, Anthony Mockler, Judith Hanan, Frontier Data Lab; Risdianto Irawan, UNICEF EAPRO; Soheib Abdalla, Andrew Dunbrack, UNICEF Lao PDR Country Office; Halim Jun, Daniel Alvarez, Shane O'Connor, UNICEF Office of Innovation.