This post is part of a series on optimizing data transfer using the NVIDIA Nsight™ Systems (nsys) profiler. Part one focused on CPU-to-GPU data copies, and part two on GPU-to-CPU copies. In this post, we turn our attention to data transfer between GPUs.

Nowadays, it is quite common for AI/ML training, particularly of large models, to be distributed across multiple GPUs. While there are many different schemes for performing such distribution, what they all have in common is their reliance on the constant transfer of data (such as gradients, weights, statistics, and/or metrics) between the GPUs throughout training. As with the other types of data transfer we analyzed in our previous posts, here too, a poor implementation could easily lead to under-utilization of compute resources and the unjustified inflation of training costs. Optimizing GPU-to-GPU communication is an active area of research and innovation involving both hardware and software development.

In this post, we will focus on the most common form of distributed training: data-distributed training. In data-distributed training, identical copies of the ML model are maintained on each GPU. Each input batch is evenly distributed among the GPUs, each of which executes a training step to calculate the local gradients. The local gradients are then shared and averaged across the GPUs, resulting in an identical gradient update to each of the model copies. Using the NVIDIA Nsight™ Systems (nsys) profiler, we will analyze the effect of the GPU-to-GPU transfer of the gradients on the runtime performance of training a toy model and assess a few techniques for reducing its overhead.
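To make the data-distributed scheme concrete, here is a minimal sketch in plain Python (no GPUs, no PyTorch). It is an illustration only: the one-parameter linear model and the helper names (local_gradient, all_reduce_mean, split_evenly) are hypothetical and are not part of the experiment in this post. It shows that, with equally sized shards, averaging the per-rank gradients reproduces the full-batch gradient, so every replica applies the same update.

```python
# Toy simulation of data-distributed training (illustrative names only).
# Model: y_hat = w * x, loss = mean((w * x - y) ** 2).

def local_gradient(w, shard):
    """Gradient of the mean squared error with respect to w on one shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for an averaging all-reduce: every rank receives the mean."""
    mean = sum(values) / len(values)
    return [mean] * len(values)

def split_evenly(batch, world_size):
    """Give each rank an equally sized, contiguous shard of the batch."""
    per_rank = len(batch) // world_size
    return [batch[r * per_rank:(r + 1) * per_rank] for r in range(world_size)]

world_size = 4
batch = [(float(i), 3.0 * i + 1.0) for i in range(8)]  # 8 (x, y) samples
weights = [0.5] * world_size                           # identical model copies
lr = 0.01

shards = split_evenly(batch, world_size)
local_grads = [local_gradient(w, s) for w, s in zip(weights, shards)]
synced_grads = all_reduce_mean(local_grads)            # the GPU-to-GPU exchange
weights = [w - lr * g for w, g in zip(weights, synced_grads)]

full_batch_grad = local_gradient(0.5, batch)
print("local gradients:", local_grads)
print("averaged vs. full-batch:", synced_grads[0], full_batch_grad)
print("weights after update:", weights)
```

The all_reduce_mean step is the part that turns into GPU-to-GPU traffic in a real run, and it is the operation whose cost the rest of this post measures.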

Disclaimers

The code we will share in this post is intended for demonstrative purposes; please do not rely on its accuracy or optimality. Please do not interpret our mention of any tool, framework, library, service, or platform as an endorsement of its use.

Thanks to Yitzhak Levi for his contributions to this post.

Instance Selection for Distributed Training

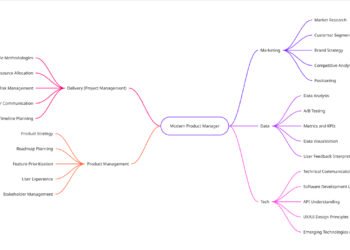

In a previous post, Instance Selection for Deep Learning, we discussed the importance of choosing an instance type that is most suitable for your AI/ML workload and the potential impact of that choice on the success of your project. When choosing an instance type for a workload that includes a lot of GPU-to-GPU traffic, you will want to pay attention to how such communication is performed, including the instance topology, GPU interconnects, maximal throughput, and latency.

In this post, we will limit our discussion to distribution between GPUs on a single instance. We will experiment with two instance types: an Amazon EC2 g6e.48xlarge with 8 NVIDIA L40S GPUs and an Amazon EC2 p4d.24xlarge with 8 NVIDIA A100 GPUs. Each will run an AWS Deep Learning (Ubuntu 24.04) AMI with PyTorch (2.8), the nsys-cli profiler (version 2025.6.1), and the NVIDIA Tools Extension (NVTX) library.

One of the primary differences between the two instance types is how the GPUs are connected: on the g6e.48xlarge, communication between the GPUs is over PCI Express (PCIe), while the p4d.24xlarge includes NVIDIA NVLink™, dedicated hardware for enabling high-throughput GPU-to-GPU communication. Communication over the PCIe bus is significantly slower than over NVLink. While this may be sufficient for workloads with a low communication-to-compute ratio, it can be a performance killer for workloads with high communication rates.

To discover the topology of the instance types, we run the following command:

    nvidia-smi topo -m

On the g6e.48xlarge, we get the following results:

GPUs on the same NUMA node are connected by a “NODE” link and GPUs on different NUMA nodes by a “SYS” link. Both links traverse PCIe as well as another interconnect; neither is a direct connection (a.k.a. a “PIX” link). We will see later on how this can impact throughput performance.

On the p4d.24xlarge, every pair of GPUs is connected by a dedicated NVLink connection:

A Toy Model

To facilitate our discussion, we build a toy data-distributed training experiment.

We choose a model with a relatively high communication-to-compute ratio, a Vision Transformer (ViT)-L/32 image-classification model with roughly 306 million parameters, and a synthetic dataset that we will use to train it:

    import os, time, torch, nvtx
    import torch.nn as nn
    import torch.optim as optim
    import torch.distributed as dist
    from torch.nn.parallel import DistributedDataParallel as DDP
    from torch.utils.data.distributed import DistributedSampler
    from torch.utils.data import Dataset, DataLoader
    from torchvision.models import vit_l_32

    WORLD_SIZE = int(os.environ.get("WORLD_SIZE", 1))
    BATCH_SIZE = 32
    IMG_SIZE = 224
    WARMUP_STEPS = 10
    PROFILE_STEPS = 3
    COOLDOWN_STEPS = 1
    TOTAL_STEPS = WARMUP_STEPS + PROFILE_STEPS + COOLDOWN_STEPS
    N_WORKERS = 8

    def get_model():
        return vit_l_32(weights=None)

    # A synthetic dataset with random images and labels
    class FakeDataset(Dataset):
        def __len__(self):
            return TOTAL_STEPS * BATCH_SIZE * WORLD_SIZE

        def __getitem__(self, index):
            img = torch.randn((3, IMG_SIZE, IMG_SIZE))
            label = torch.randint(0, 1000, (1,)).item()
            return img, label

    def get_data_iter(rank, world_size):
        dataset = FakeDataset()
        # Each rank draws a disjoint subset of the dataset
        sampler = DistributedSampler(dataset, num_replicas=world_size,
                                     rank=rank, shuffle=True)
        train_loader = DataLoader(dataset, batch_size=BATCH_SIZE,
                                  sampler=sampler, num_workers=N_WORKERS,
                                  pin_memory=True)
        return iter(train_loader)

We define a utility function that we will use to set up PyTorch DistributedDataParallel (DDP) training. We configure the PyTorch distributed communication package to use the NVIDIA Collective Communications Library (NCCL) as its communication backend and wrap the model in a DistributedDataParallel container.

    def configure_ddp(model, rank):
        dist.init_process_group("nccl")
        model = DDP(model,
                    device_ids=[rank],
                    bucket_cap_mb=2000)
        return model

Note that we naively configure the DDP bucket capacity to 2 GB. DDP groups the model's gradients into buckets and performs gradient reduction of each bucket in a separate command. The implication of setting the bucket capacity to 2 GB is that all of the ~306 million (FP32) gradients will fit in a single bucket (306 million x 4 bytes per gradient = ~1.22 GB) and reduction will only occur once all gradients have been calculated.
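The bucket arithmetic above can be checked with a few lines of Python. This is a back-of-the-envelope sketch: the parameter count is the approximate figure quoted in the text, the helper names are hypothetical, and we assume that bucket_cap_mb is counted in units of 1024 x 1024 bytes.

```python
NUM_PARAMS = 306_000_000   # approximate parameter count quoted in the text
BYTES_PER_GRAD = 4         # FP32 gradients

def gradient_payload_bytes(num_params, bytes_per_grad=BYTES_PER_GRAD):
    """Total size of one full set of gradients."""
    return num_params * bytes_per_grad

def fits_in_one_bucket(payload_bytes, bucket_cap_mb):
    # Assumption: the cap is expressed in units of 1024 * 1024 bytes.
    return payload_bytes <= bucket_cap_mb * 1024 * 1024

payload = gradient_payload_bytes(NUM_PARAMS)
print(f"gradient payload: {payload / 1e9:.2f} GB")
print("fits in a 2000 MB bucket:", fits_in_one_bucket(payload, 2000))
print("fits in a 100 MB bucket:", fits_in_one_bucket(payload, 100))
```

With a 2000 MB cap the whole payload fits in one bucket, so a single reduction runs after the backward pass completes; with a smaller cap the gradients must be split across several buckets.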

As in our previous posts, we schedule the nsys profiler programmatically and wrap the different portions of the training step with color-coded NVTX annotations:

    def train(use_ddp=False):
        # detect the env vars set by torchrun
        rank = int(os.environ.get("RANK", 0))
        local_rank = int(os.environ.get("LOCAL_RANK", 0))
        torch.cuda.set_device(local_rank)

        model = get_model().to(local_rank)
        criterion = nn.CrossEntropyLoss().to(local_rank)
        if use_ddp:
            model = configure_ddp(model, rank)
        optimizer = optim.SGD(model.parameters())
        data_iter = get_data_iter(rank, WORLD_SIZE)
        model.train()

        for i in range(TOTAL_STEPS):
            # Schedule profiling: capture only the steps after warmup
            if i == WARMUP_STEPS:
                torch.cuda.synchronize()
                start_time = time.perf_counter()
                torch.cuda.profiler.start()
            elif i == WARMUP_STEPS + PROFILE_STEPS:
                torch.cuda.synchronize()
                torch.cuda.profiler.stop()
                end_time = time.perf_counter()

            with nvtx.annotate(f"Batch {i}", color="blue"):
                with nvtx.annotate("get batch", color="red"):
                    data, target = next(data_iter)
                    data = data.to(local_rank, non_blocking=True)
                    target = target.to(local_rank, non_blocking=True)
                with nvtx.annotate("forward", color="green"):
                    output = model(data)
                    loss = criterion(output, target)
                with nvtx.annotate("backward", color="purple"):
                    optimizer.zero_grad()
                    loss.backward()
                with nvtx.annotate("optimizer step", color="yellow"):
                    optimizer.step()

        if use_ddp:
            dist.destroy_process_group()

        if rank == 0:
            total_time = end_time - start_time
            print(f"Throughput: {PROFILE_STEPS/total_time:.2f} steps/sec")

    if __name__ == "__main__":
        # enable ddp if run with torchrun
        train(use_ddp="RANK" in os.environ)

We set the NCCL_DEBUG environment variable for visibility into how NCCL sets up the transport links between the GPUs.

    export NCCL_DEBUG=INFO

Single-GPU Performance

We begin our experimentation by running our script on a single GPU without DDP:

    nsys profile \
      --capture-range=cudaProfilerApi \
      --capture-range-end=stop \
      --trace=cuda,nvtx,osrt,nccl \
      --output=baseline \
      python train.py

Note the inclusion of nccl in the trace section; this will be necessary for analyzing the GPU-to-GPU communication in the multi-GPU experiments.

The throughput of the baseline experiment is 8.91 steps per second on the L40S GPU and 5.17 steps per second on the A100: the newer NVIDIA Ada Lovelace architecture performs better than the NVIDIA Ampere architecture on our toy model. In the image below we show the timeline view for the L40S experiment.

In this post we will focus on the CUDA portion of the timeline. Looking at the NVTX row, we see the recurring red (CPU-to-GPU copy), green (forward), purple (backward), and yellow (optimizer update) pattern that makes up our train step. Note, however, that the purple appears as just a tiny blip while, in practice, the backward pass fills the entire gap between the forward and optimizer blocks: the NVTX library appears to have captured only the initial launch of the autograd graph.

We will use this trace as a comparative baseline for our subsequent experiments.

DDP with 1 GPU

We assess the impact of the DDP wrapper by running torchrun on a single GPU:

    torchrun --nproc_per_node=1 \
      --no-python \
      nsys profile \
      --capture-range=cudaProfilerApi \
      --capture-range-end=stop \
      --trace=cuda,nvtx,nccl,osrt \
      --output=ddp-1gpu \
      python train.py

The resulting throughput drops to 8.40 steps per second on the L40S GPU and 5.04 steps per second on the A100. Even in the absence of any cross-GPU communication, DDP introduces overhead that lowers throughput by roughly 3% to 6% in our measurements. The important lesson from this is to always make sure that single-GPU training is run without DDP.
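The size of the single-GPU DDP overhead follows directly from the throughput figures reported above. The short calculation below is only a convenience sketch; the relative_drop helper is our own illustrative name.

```python
def relative_drop(baseline, measured):
    """Fractional throughput loss of `measured` relative to `baseline`."""
    return (baseline - measured) / baseline

# (steps/sec without DDP, steps/sec with DDP on one GPU), as reported above
results = {"L40S": (8.91, 8.40), "A100": (5.17, 5.04)}

for gpu, (base, ddp) in results.items():
    print(f"{gpu}: {100 * relative_drop(base, ddp):.1f}% lower throughput with DDP")
```

This works out to about 5.7% on the L40S and about 2.5% on the A100.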

The nsys trace of the L40S experiment confirms the presence of the DDP overhead:

The main change from the training step in the previous trace is a large chunk of device-to-device (DtoD) memory copies at the end of the backward block and just before the optimizer block (highlighted in the trace above). This is DDP in action: even in the absence of a cross-GPU gradient reduction, DDP prepares the local gradients in a dedicated memory block for reduction and then copies the results back into the grad property of each parameter in preparation for the gradient update. (Note that the DtoD copies are between two memory locations on the same GPU, not between two different GPUs.)

DDP With Multiple GPUs

Next, we assess the impact of gradient sharing between 2, 4, and 8 GPUs:

    torchrun --nproc_per_node=8 \
      --no-python \
      nsys profile \
      --capture-range=cudaProfilerApi \
      --capture-range-end=stop \
      --trace=cuda,nvtx,nccl,osrt \
      --output=ddp-8gpu_%q{RANK} \
      python train.py

The table below captures the impact on the training throughput on the g6e.48xlarge and the p4d.24xlarge:

DDP training performance plummets on the g6e.48xlarge instance: the 8-GPU throughput is more than 6 times lower than the single-GPU result. The NCCL logs include multiple lines describing the communication paths between the GPUs, e.g.:

    NCCL INFO Channel 00 : 0[0] -> 1[1] via SHM/direct/direct

This indicates that the data transfer between each pair of GPUs passes through CPU shared memory, which drastically limits the communication bandwidth.

On the p4d.24xlarge, in contrast, where the NVLink connections allow for direct peer-to-peer (P2P) communication, the 8-GPU throughput is only 8% lower than the baseline result:

    NCCL INFO Channel 00/0 : 0[0] -> 1[1] via P2P/CUMEM/read

Although each individual L40S outperforms the A100 on our toy model by 72%, the inclusion of NVLink makes the p4d.24xlarge a better choice than the g6e.48xlarge for running DDP over 8 GPUs.

The data transfer bottleneck on the g6e.48xlarge can be easily seen in the nsys trace. Here we use the “multiple view” option to display the activity of all of the DDP processes in a single timeline:

The gradient reduction occurs in the ncclAllReduce call on the NCCL row. This comes just after the local gradient calculation has completed and just before the DtoD memory operation discussed above that copies the reduced gradients back to the grad field of each parameter. On the g6e.48xlarge the NCCL operation dominates the backward pass, accounting for roughly 84% of the overall step time (587 out of 701 milliseconds).
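The trace figures can be turned into a rough estimate of the bandwidth that the all-reduce achieves. The sketch below is an approximation that rests on several assumptions: that the full 587 ms is spent on a single all-reduce of the entire FP32 gradient payload, that the payload is the ~1.22 GB estimated earlier, and that the "bus bandwidth" correction factor 2(n-1)/n commonly used when benchmarking all-reduce is appropriate here.

```python
STEP_MS = 701.0                  # full training step, from the trace
NCCL_MS = 587.0                  # ncclAllReduce portion, from the trace
PAYLOAD_BYTES = 306_000_000 * 4  # approximate FP32 gradient payload
WORLD_SIZE = 8

nccl_share = NCCL_MS / STEP_MS
steps_per_sec = 1000.0 / STEP_MS

# "Algorithm bandwidth": payload size divided by the time of the collective.
alg_bw = PAYLOAD_BYTES / (NCCL_MS / 1000.0)
# "Bus bandwidth": scaled by the all-reduce factor 2 * (n - 1) / n (assumption).
bus_bw = alg_bw * 2 * (WORLD_SIZE - 1) / WORLD_SIZE

print(f"NCCL share of step: {100 * nccl_share:.0f}%")
print(f"implied throughput: {steps_per_sec:.2f} steps/sec")
print(f"algorithm bandwidth: {alg_bw / 1e9:.2f} GB/s")
print(f"bus bandwidth: {bus_bw / 1e9:.2f} GB/s")
```

Under these assumptions the effective rate is only a few GB/s, which is consistent with the shared-memory transport path reported in the NCCL logs.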

On the p4d.24xlarge, the NCCL call takes up a much smaller portion of the training step:

In the next sections we will discuss several DDP optimization techniques and assess their impact on runtime performance. We will limit our scope to PyTorch-level optimizations, leaving other methods (e.g., NCCL/OS/network tuning) for another post.

A general approach for dealing with communication bottlenecks is to reduce the frequency of communication. In DDP workloads this can be achieved by increasing the data batch size (i.e., increasing the compute per step) or, if the memory capacity forbids this, by applying gradient accumulation: instead of applying the gradient reduction and update every step, accumulate the local gradients for N steps and apply the reduction every Nth step. Both techniques increase the effective batch size (the number of overall samples between gradient updates), which can impact model convergence and may require tuning of the optimization parameters. In our discussion we assume the effective batch size is fixed.
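The relationship between batch size, GPU count, and accumulation steps can be sketched as follows. The helper names are hypothetical and the sketch only models the bookkeeping; in PyTorch DDP, skipping the reduction on the intermediate steps is typically done with the container's no_sync() context manager.

```python
def effective_batch_size(per_gpu_batch, world_size, accum_steps=1):
    """Number of samples that contribute to each gradient update."""
    return per_gpu_batch * world_size * accum_steps

def reduction_steps(total_steps, accum_steps):
    """Indices of the steps on which gradients are reduced and applied."""
    return [i for i in range(total_steps) if (i + 1) % accum_steps == 0]

# Our setup: 32 samples per GPU on 8 GPUs, reduction on every step.
print(effective_batch_size(32, 8))                 # 256
# Accumulating over 4 steps quadruples the effective batch size...
print(effective_batch_size(32, 8, accum_steps=4))  # 1024
# ...unless the per-GPU batch is shrunk to keep it fixed.
print(effective_batch_size(8, 8, accum_steps=4))   # 256
# With N=4, only every fourth step triggers cross-GPU communication.
print(reduction_steps(12, 4))                      # [3, 7, 11]
```

The third line illustrates why we treat the effective batch size as fixed: shrinking the per-GPU batch to compensate for accumulation leaves the total communication per sample unchanged.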

We will cover four techniques. The first two focus on reducing DDP overhead. The final two directly address the data transfer bottleneck.

Optimization 1: Static Graph Declaration

Our first change is to pass static_graph=True to the DDP container. Generally speaking, models may include parameters for which gradients are not calculated at every step, referred to as “unused parameters” in the DDP documentation. This is common in models with conditional logic. DDP includes dedicated mechanisms for identifying and handling unused parameters. In the case of our toy model, all of the gradients are calculated in every step: our graph is “static”. Explicitly declaring our graph as static reduces the overhead associated with handling a dynamic gradient set.

    def configure_ddp(model, rank):
        dist.init_process_group("nccl")
        model = DDP(model,
                    device_ids=[rank],
                    static_graph=True,
                    bucket_cap_mb=2000)
        return model

In the case of our toy model, the impact of this change is negligible on both the g6e.48xlarge and the p4d.24xlarge. Without any further delay, we proceed to the next optimization.

Optimization 2: Improve Memory Efficiency

Our second technique addresses the large chunk of DtoD memory copying we identified above. Instead of copying the gradients to and from the NCCL communication memory blocks, we can explicitly set the parameter gradients to point directly to the NCCL communication buffers. Consequently, the same memory is used to store the local gradients, to perform the gradient reduction, and to apply the gradient updates. This is configured by passing gradient_as_bucket_view=True to the DDP container:

    def configure_ddp(model, rank):
        dist.init_process_group("nccl")
        model = DDP(model,
                    device_ids=[rank],
                    static_graph=True,
                    gradient_as_bucket_view=True,
                    bucket_cap_mb=2000)
        return model

In the trace below, captured on the p4d.24xlarge, we no longer see the block of DtoD memory copy between the all-reduce and (yellow) optimizer steps:

In the case of our toy example, this optimization boosts performance by a modest 1%.

Optimization 3: Gradient Compression

A common approach for addressing communication bottlenecks is to apply compression algorithms to reduce the size of the payload. PyTorch DDP provides a number of dedicated communication hooks that automate compression of gradients before NCCL reduction and decompression afterward. In the code block below, we apply bfloat16 gradient compression:

    import torch.distributed.algorithms.ddp_comm_hooks.default_hooks as ddp_hks

    def configure_ddp(model, rank):
        dist.init_process_group("nccl")
        model = DDP(model,
                    device_ids=[rank],
                    static_graph=True,
                    gradient_as_bucket_view=True,
                    bucket_cap_mb=2000)
        # Cast gradients to bfloat16 before the all-reduce and back afterward
        model.register_comm_hook(state=None, hook=ddp_hks.bf16_compress_hook)
        return model

On the heavily bottlenecked g6e.48xlarge instance, bfloat16 compression results in a considerable 65% speed-up! The nsys trace shows the reduced-size NCCL call as well as the newly introduced compression operations:

On the p4d.24xlarge the overhead of the compression operations outweighs the gains in communication speed, leading to an overall reduction in throughput:
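To illustrate what bfloat16 compression trades away, the sketch below emulates the FP32-to-bfloat16 cast in plain Python using the struct module. It is not the code path that the DDP hook uses (the hook operates on GPU tensors and, as we understand it, also divides by the world size before the reduction); the function names are our own, and NaN inputs are not handled.

```python
import struct

def to_bf16_bits(x):
    """Round a finite float to bfloat16 and return its 16-bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest, ties to even, on the 16 low bits that get dropped.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF

def from_bf16_bits(b):
    """Expand a bfloat16 bit pattern back to a Python float."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]

grads = [0.1, -0.003, 1.0, 123.456]           # made-up gradient values
compressed = [to_bf16_bits(g) for g in grads]
restored = [from_bf16_bits(b) for b in compressed]

fp32_bytes = 4 * len(grads)
bf16_bytes = 2 * len(grads)
print(f"payload: {fp32_bytes} bytes -> {bf16_bytes} bytes")
for g, r in zip(grads, restored):
    print(f"{g!r} -> {r!r} (relative error {abs(r - g) / abs(g):.2e})")
```

The payload is halved, at the cost of keeping only 8 bits of significand precision per value. Whether the saving outweighs the extra cast operations depends on how communication-bound the step is, which is the difference we observe between the two instance types.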

PyTorch offers a more aggressive compression algorithm than bfloat16, called PowerSGD. Below we present a naive usage; in practice this may require a lot of tuning. Please see the documentation for details:

    from torch.distributed.algorithms.ddp_comm_hooks import powerSGD_hook

    def configure_ddp(model, rank):
        dist.init_process_group("nccl")
        model = DDP(model,
                    device_ids=[rank],
                    static_graph=True,
                    gradient_as_bucket_view=True,
                    bucket_cap_mb=2000)
        # Naive usage: default PowerSGD settings on the default process group
        state = powerSGD_hook.PowerSGDState(
            process_group=None
        )
        model.register_comm_hook(
            state,
            hook=powerSGD_hook.powerSGD_hook
        )
        return model

PowerSGD has a dramatic impact on the g6e.48xlarge instance, increasing throughput all the way back up to 7.5 steps per second, more than 5 times faster than the unoptimized 8-GPU DDP result! Note the reduction in NCCL kernel size in the resultant trace:

It is important to note that these compression algorithms are lossy in precision and should be used with caution, as they may impact your model convergence.
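The reason a low-rank scheme such as PowerSGD can shrink the payload so much more than a 16-bit cast can be seen from a simple element count. The sketch below assumes a gradient matrix is replaced by two rank-r factors; the 1024 x 4096 layer shape is a hypothetical example, and the real algorithm involves details (such as error feedback and tensors that are left uncompressed) that this count ignores.

```python
def dense_elements(rows, cols):
    """Number of values sent when the full gradient matrix is reduced."""
    return rows * cols

def low_rank_elements(rows, cols, rank):
    """Number of values in two factors: (rows x rank) and (cols x rank)."""
    return rank * (rows + cols)

rows, cols = 1024, 4096  # hypothetical weight-matrix shape
for rank in (1, 4, 16):
    ratio = dense_elements(rows, cols) / low_rank_elements(rows, cols, rank)
    print(f"rank {rank}: {low_rank_elements(rows, cols, rank)} values, "
          f"{ratio:.1f}x fewer than dense")
```

For comparison, a 16-bit cast reduces the payload by a fixed factor of 2. The price is a much coarser approximation of the gradient, which is why convergence needs to be checked carefully.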

Optimization 4: Parallelize Gradient Reduction

DDP groups parameters into multiple buckets and triggers gradient reduction of each bucket independently, as soon as the bucket is filled. This allows for running gradient reduction of filled buckets while gradient calculation (of other buckets) is still ongoing. The degree of overlap depends on the number of DDP buckets, which we control via the bucket_cap_mb setting of the DDP container. Recall that in our initial implementation, we explicitly set this to 2000 (2 GB), which (given the size of our model) translated to a single DDP bucket. The table below shows the throughput for different values of bucket_cap_mb. The optimal setting will vary based on the details of the model and runtime environment.

Note the significant 12% improvement when using multiple buckets on the g6e.48xlarge with BF16 compression. PowerSGD, on the other hand, works best when applied to a single bucket.

In the image below, captured on a p4d.24xlarge with bucket_cap_mb set to 100, we can see the impact of this optimization on the profiler trace:

In place of the single NCCL all-reduce operation, we now have 11 smaller blocks running in parallel with the local gradient computation.
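A simple timeline model helps explain why splitting the reduction into buckets can shorten the step. The sketch below is a deliberately idealized model with made-up timings: it assumes gradients become ready at a uniform rate during the backward pass, that bucket reductions run one after another on a single communication stream, and that communication does not slow down the computation. The step_time_ms function and its parameters are hypothetical.

```python
def step_time_ms(compute_ms, comm_ms, num_buckets, per_call_overhead_ms=0.0):
    """Time until the last bucket has been reduced, under the toy model."""
    comm_free_at = 0.0
    for i in range(num_buckets):
        # Bucket i is full once its share of the backward pass has run.
        ready_at = compute_ms * (i + 1) / num_buckets
        # Its reduction starts when it is ready and the stream is idle.
        start = max(ready_at, comm_free_at)
        comm_free_at = start + comm_ms / num_buckets + per_call_overhead_ms
    return comm_free_at

# Compute-bound case: 100 ms backward pass, 60 ms of total communication.
print(step_time_ms(100, 60, num_buckets=1))   # 160.0 (no overlap)
print(step_time_ms(100, 60, num_buckets=4))   # 115.0 (only the last bucket is exposed)
# Communication-bound case: overlap helps, but communication still dominates.
print(step_time_ms(100, 200, num_buckets=4))  # 225.0
# A fixed cost per call penalizes very small buckets.
print(step_time_ms(100, 60, num_buckets=50, per_call_overhead_ms=2.0))
```

The last call hints at why the optimal bucket_cap_mb has to be found empirically: more buckets expose less communication, but each additional collective call carries its own cost.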

Results

We summarize the results of our experiments in the following table:

On the p4d.24xlarge, where the data is transferred over NVLink, the overall impact was a modest 4% speed-up. But on the g6e.48xlarge the gains were significant and mostly due to gradient compression: an 86% boost for the BF16 scheme and an over 5X improvement for (the naive implementation of) PowerSGD.

Importantly, these results can vary considerably based on the model architecture and runtime environment.

Summary

This concludes our three-part series on identifying and solving data transfer bottlenecks with the NVIDIA Nsight™ Systems (nsys) profiler. In each of the posts we demonstrated the tendency of data transfers to introduce performance bottlenecks and resource under-utilization. In each case, we used the nsys profiler to identify the bottlenecks and measure the impact of different optimization techniques.

The accelerations we achieved in each of the use cases that we studied reinforce the importance of integrating the regular use of tools such as the nsys profiler into the AI/ML development workflow and highlight the opportunity, even for non-CUDA experts, to achieve meaningful performance gains and AI/ML cost reductions.