the k-NN Regressor and the thought of prediction based mostly on distance, we now take a look at the k-NN Classifier.

The precept is similar, however classification permits us to introduce a number of helpful variants, similar to Radius Nearest Neighbors, Nearest Centroid, multi-class prediction, and probabilistic distance fashions.

So we’ll first implement the k-NN classifier, then talk about how it may be improved.

You should use this Excel/Google sheet whereas studying this text to higher comply with all the reasons.

Titanic survival dataset

We’ll use the Titanic survival dataset, a traditional instance the place every row describes a passenger with options similar to class, intercourse, age, and fare, and the aim is to foretell whether or not the passenger survived.

Precept of k-NN for Classification

k-NN classifier is so just like k-NN regressor that I might nearly write one single article to clarify them each.

In truth, once we search for the okay nearest neighbors, we don’t use the worth y in any respect, not to mention its nature.

BUT, there are nonetheless some fascinating info about how classifiers (binary or multi-class) are constructed, and the way the options will be dealt with in another way.

We start with the binary classification process, after which the multi-class classification.

One Steady Characteristic for Binary Classification

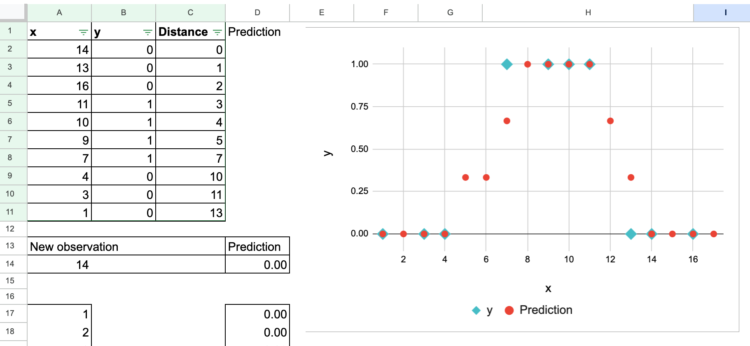

So, very fast, we will do the identical train for one steady function, with this dataset.

For the worth of y, we normally use 0 and 1 to tell apart the 2 lessons. However you possibly can discover, or you’ll discover that it may be a supply of confusion.

Now, give it some thought: 0 and 1 are additionally numbers, proper? So, we will precisely do the identical course of as if we’re doing a regression.

That’s proper. Nothing modifications within the computation, as you see within the following screenshot. And you’ll in fact attempt to modify the worth of the brand new commentary your self.

The one distinction is how we interpret the end result. After we take the “common” of the neighbors’ y values, this quantity is known because the likelihood that the brand new commentary belongs to class 1.

So in actuality, the “common” worth is just not the great interpretation, however it’s moderately the proportion of sophistication 1.

We will additionally manually create this plot, to indicate how the expected likelihood modifications over a variety of x values.

Historically, to keep away from ending up with a 50 % likelihood, we select an odd worth for okay, in order that we will at all times determine with majority voting.

Two-feature for Binary classification

If we have now two options, the operation can also be nearly the identical as in k-NN regressor.

One function for multi-class classification

Now, let’s take an instance of three lessons for the goal variable y.

Then we will see that we can’t use the notion of “common” anymore, because the quantity that represents the class is just not really a quantity. And we should always higher name them “class 0”, “class 1”, and “class 2”.

From k-NN to Nearest Centroids

When okay Turns into too Massive

Now, let’s make okay giant. How giant? As giant as doable.

Bear in mind, we additionally did this train with k-NN regressor, and the conclusion was that if okay equals the entire variety of observations within the coaching dataset, then k-NN regressor is the easy average-value estimator.

For the k-NN classifier, it’s nearly the identical. If okay equals the entire variety of observations, then for every class, we’ll get its total proportion inside your complete coaching dataset.

Some individuals, from a Bayesian perspective, name these proportions the priors!

However this doesn’t assist us a lot to categorise a brand new commentary, as a result of these priors are the identical for each level.

The Creation of Centroids

So allow us to take yet one more step.

For every class, we will additionally group collectively all of the function values x that belong to that class, and compute their common.

These averaged function vectors are what we name centroids.

What can we do with these centroids?

We will use them to categorise a brand new commentary.

As a substitute of recalculating distances to your complete dataset for each new level, we merely measure the space to every class centroid and assign the category of the closest one.

With the Titanic survival dataset, we will begin with a single function, age, and compute the centroids for the 2 lessons: passengers who survived and passengers who didn’t.

Now, it’s also doable to make use of a number of steady options.

For instance, we will use the 2 options age and fare.

And we will talk about some essential traits of this mannequin:

- The dimensions is essential, as we mentioned earlier than for k-NN regressor.

- The lacking values are usually not an issue right here: once we compute the centroids per class, every one is calculated with the obtainable (non-empty) values

- We went from essentially the most “complicated” and “giant” mannequin (within the sense that the precise mannequin is your complete coaching dataset, so we have now to retailer all of the dataset) to the best mannequin (we solely use one worth per function, and we solely retailer these values as our mannequin)

From extremely nonlinear to naively linear

However now, are you able to consider one main downside?

Whereas the essential k-NN classifier is extremely nonlinear, the Nearest Centroid technique is extraordinarily linear.

On this 1D instance, the 2 centroids are merely the typical x values of sophistication 0 and sophistication 1. As a result of these two averages are shut, the choice boundary turns into simply the midpoint between them.

So as a substitute of a piecewise, jagged boundary that is determined by the precise location of many coaching factors (as in k-NN), we receive a straight cutoff that solely is determined by two numbers.

This illustrates how Nearest Centroids compresses your complete dataset right into a easy and really linear rule.

A be aware on regression: why centroids don’t apply

Now, this sort of enchancment is just not doable for the k-NN regressor. Why?

In classification, every class kinds a bunch of observations, so computing the typical function vector for every class is smart, and this provides us the category centroids.

However in regression, the goal y is steady. There are not any discrete teams, no class boundaries, and subsequently no significant method to compute “the centroid of a category”.

A steady goal has infinitely many doable values, so we can’t group observations by their y worth to kind centroids.

The one doable “centroid” in regression can be the world imply, which corresponds to the case okay = N in k-NN regressor.

And this estimator is much too easy to be helpful.

Briefly, Nearest Centroids Classifier is a pure enchancment for classification, but it surely has no direct equal in regression.

Additional statistical enhancements

What else can we do with the essential k-NN classifier?

Common and variance

With Nearest Centroids Classifier, we used the best statistic that’s the common. A pure reflex in statistics is so as to add the variance as nicely.

So, now, distance is now not Euclidean, however Mahalanobis distance. Utilizing this distance, we get the likelihood based mostly on the distribution characterised by the imply and variance of every class.

Categorical Options dealing with

For categorical options, we can’t compute averages or variances. And for k-NN regressor, we noticed that it was doable to do one-hot encoding or ordinal/label encoding. However the scale is essential and never simple to find out.

Right here, we will do one thing equally significant, when it comes to chances: we will rely the proportions of every class inside a category.

These proportions act precisely like chances, describing how doubtless every class is inside every class.

This concept is immediately linked to fashions similar to Categorical Naive Bayes, the place lessons are characterised by frequency distributions over the classes.

Weighted Distance

One other route is to introduce weights, in order that nearer neighbors rely greater than distant ones. In scikit-learn, there may be the “weights” argument that permits us to take action.

We will additionally swap from “okay neighbors” to a set radius across the new commentary, which results in radius-based classifiers.

Radius Nearest Neighbors

Typically, we will discover this following graphic to clarify k-NN classifier. However really, with a radius like this, it displays extra the thought of Radius Nearest Neighbors.

One benefit is the management of the neighborhood. It’s particularly fascinating once we know the concrete that means of the space, such because the geographical distance.

However the downside is that it’s a must to know the radius prematurely.

By the best way, this notion of radius nearest neighbors can also be appropriate for regression.

Recap of various variants

All these small modifications give totally different fashions, every one making an attempt to enhance the essential concept of evaluating neighbors in accordance with a extra complicated definition of distance, with a management parameter what permits us to get native neighbors, or extra world characterization of neighborhood.

We won’t discover all these fashions right here. I merely can’t assist myself from going a bit too far when a small variation naturally results in one other concept.

For now, contemplate this as an announcement of the fashions we’ll implement later this month.

Conclusion

On this article, we explored the k-NN classifier from its most elementary kind to a number of extensions.

The central concept is just not actually modified: a brand new commentary is classed by how comparable it’s to the coaching information.

However this straightforward concept can take many various shapes.

With steady options, similarity relies on geometric distance.

With categorical options, we glance as a substitute at how usually every class seems among the many neighbors.

When okay turns into very giant, your complete dataset collapses into only a few abstract statistics, which leads naturally to the Nearest Centroids Classifier.

Understanding this household of distance-based and probability-based concepts helps us see that many machine-learning fashions are merely other ways of answering the identical query:

Which class does this new commentary most bear a resemblance to?

Within the subsequent articles, we’ll proceed exploring density-based fashions, which will be understood as world measures of similarity between observations and lessons.