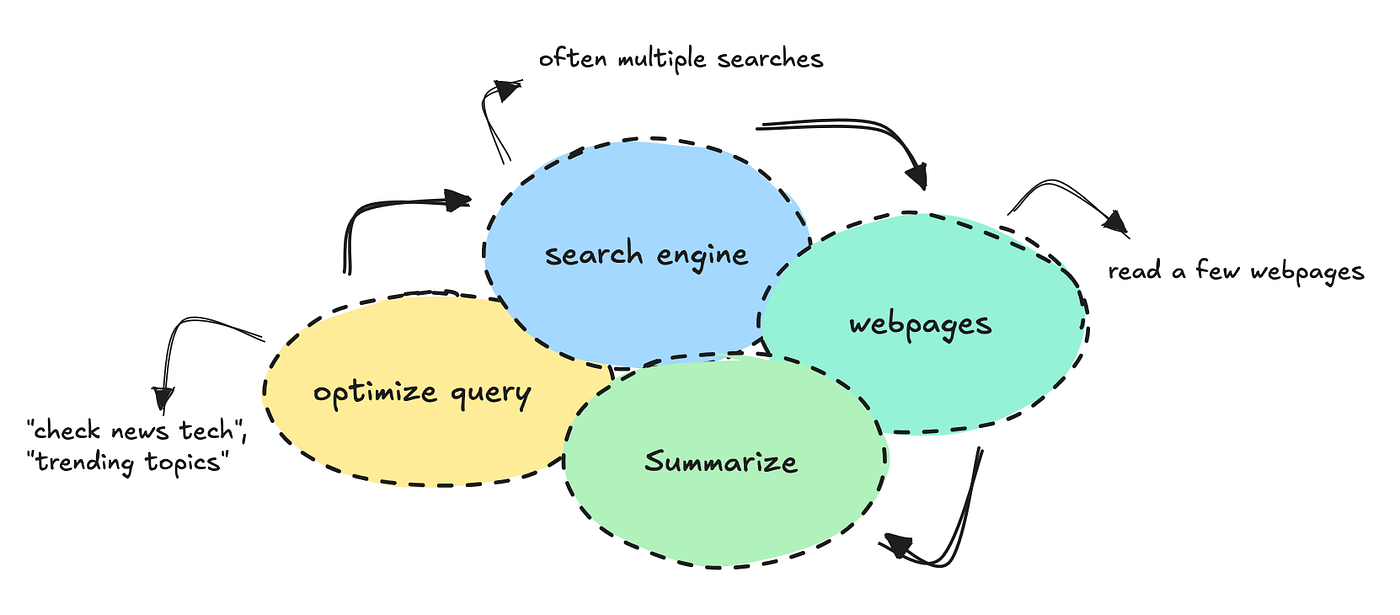

If you ask ChatGPT something like: “Please scout all of tech for me and summarize trends and patterns based on what you think I would be interested in,” you know that you’d get something generic, where it searches a few websites and news sources and hands you those.

This is because ChatGPT is built for general use cases. It applies normal search methods to fetch information, often limiting itself to a few web pages.

This article will show you how to build a niche agent that can scout all of tech, aggregate millions of texts, filter data based on a persona, and find patterns and themes you can act on.

The point of this workflow is to avoid sitting and scrolling through forums and social media on your own. The agent should do it for you, grabbing whatever is useful.

We’ll be able to pull this off using a unique data source, a controlled workflow, and some prompt chaining techniques.

By caching data, we can keep the cost down to a few cents per report.

If you want to try the bot without booting it up yourself, you can join this Discord channel. You’ll find the repository here if you want to build it on your own.

This article focuses on the general architecture and how to build it, not the smaller coding details, as you can find those on GitHub.

Notes on building

If you’re new to building with agents, you might feel like this one isn’t groundbreaking enough.

However, if you want to build something that works, you will need to apply a lot of software engineering to your AI applications. Even if LLMs can now act on their own, they still need guidance and guardrails.

For workflows like this, where there is a clear path the system should take, you should build more structured “workflow-like” systems. If you have a human in the loop, you can work with something more dynamic.

This workflow works so well because I have a very good data source behind it. Without this data moat, the workflow wouldn’t be able to do better than ChatGPT.

Preparing and caching data

Before we can build an agent, we need to prepare a data source it can tap into.

Something I think a lot of people get wrong when they work with LLM systems is the belief that AI can process and aggregate data entirely on its own.

At some point, we might be able to give them enough tools to build on their own, but we’re not there yet in terms of reliability.

So when we build systems like this, we need data pipelines to be just as clean as for any other system.

The system I’ve built here uses a data source I already had available, which means I understand how to teach the LLM to tap into it.

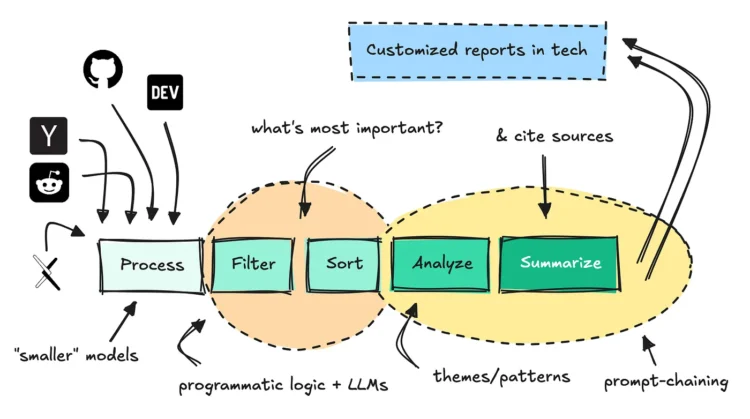

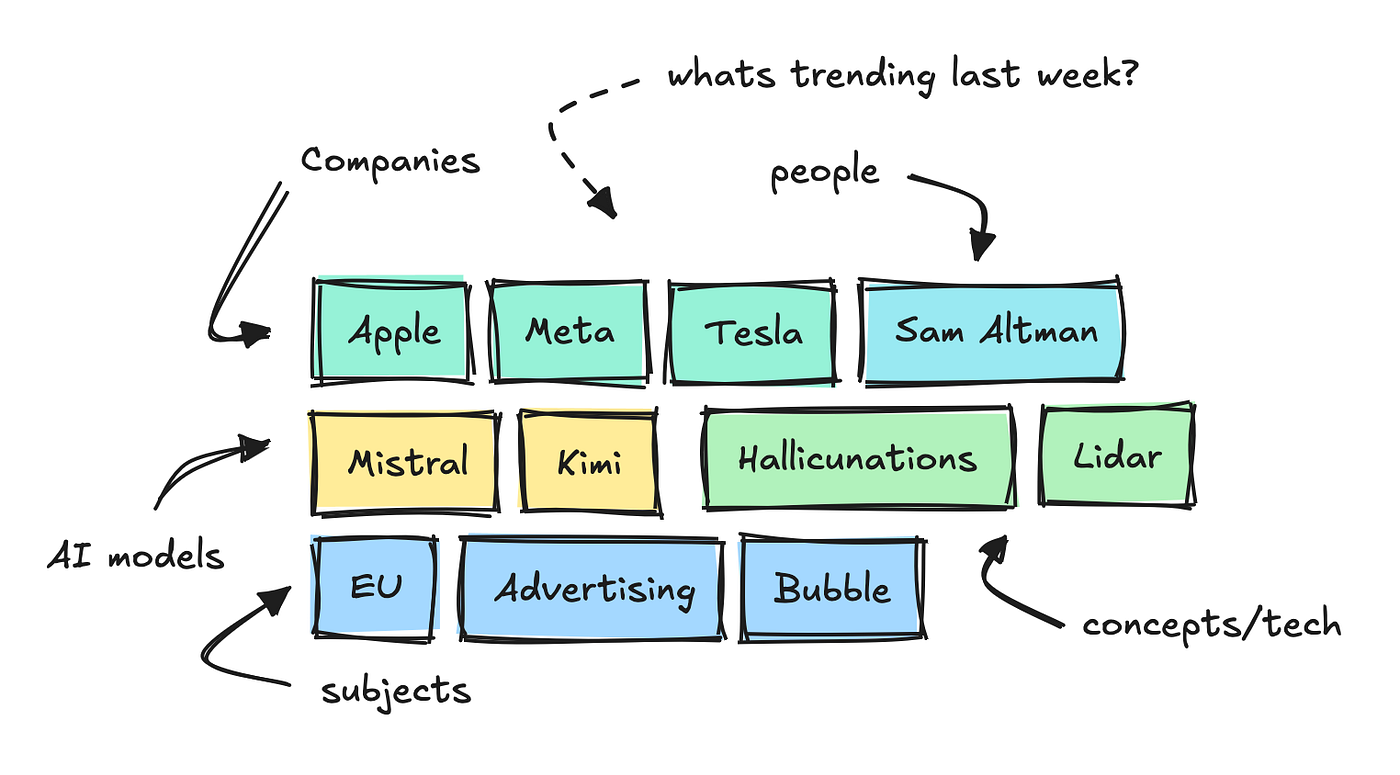

It ingests thousands of texts from tech forums and websites per day and uses small NLP models to extract the main keywords, categorize them, and analyze sentiment.

This lets us see which keywords are trending within different categories over a specific time period.
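Here is a minimal sketch that makes “trending” a bit more concrete. It rests on my own assumptions and is not a description of the actual data source. Each processed text is reduced to (category, keyword, timestamp) mentions. A keyword counts as trending when its mention count in the current window grows relative to the window before it. The `trending_keywords` function, the add-one smoothing, and the `min_count` threshold are all hypothetical choices.

```python
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical record shape: one (category, keyword, timestamp) tuple per mention.
Mention = tuple[str, str, datetime]


def trending_keywords(mentions: list[Mention], category: str, now: datetime,
                      window: timedelta, min_count: int = 3) -> list[tuple[str, float]]:
    """Rank keywords in one category by growth versus the previous window."""
    current: Counter = Counter()
    previous: Counter = Counter()
    for cat, keyword, ts in mentions:
        if cat != category:
            continue
        if now - window <= ts < now:
            current[keyword] += 1
        elif now - 2 * window <= ts < now - window:
            previous[keyword] += 1
    scored = []
    for keyword, count in current.items():
        if count < min_count:
            continue  # too few mentions to say anything meaningful
        # Add-one smoothing so brand-new keywords don't divide by zero.
        growth = count / (previous[keyword] + 1)
        scored.append((keyword, growth))
    return sorted(scored, key=lambda kv: kv[1], reverse=True)
```

Comparing against the previous window, rather than ranking by raw counts, keeps perennial keywords from crowding out the ones that are actually moving.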

To build this agent, I added another endpoint that collects “facts” for each of these keywords.

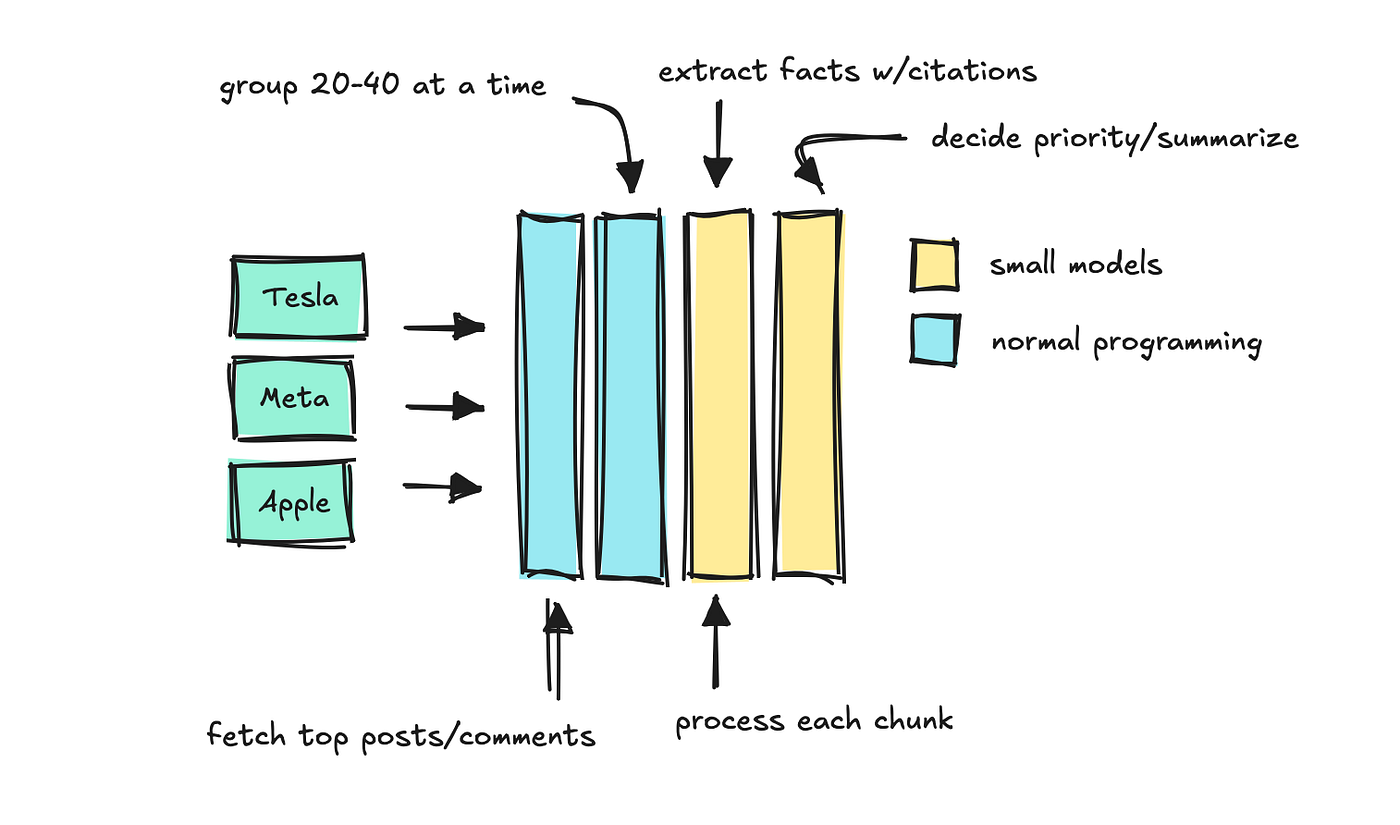

This endpoint receives a keyword and a time period, and the system sorts comments and posts by engagement. Then it processes the texts in chunks with smaller models that decide which “facts” to keep.

We apply a final LLM to summarize which facts are most important, keeping the source citations intact.

This is a kind of prompt chaining process, and I built it to mimic LlamaIndex’s citation engine.
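The facts endpoint can be sketched as a small chain. This is a minimal illustration under assumptions, not the repository’s code. `select_facts` and `summarize_facts` are hypothetical stand-ins for the small-model and final-LLM calls. They are passed in as plain functions so the flow runs without any API. The chain has four steps:

1. Sort posts by engagement.
2. Split them into chunks.
3. Let each chunk yield candidate facts that carry the ID of the post they came from.
4. Pass only facts with valid citations to the final step.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Post:
    id: str
    text: str
    engagement: int  # e.g. likes + comments; the scoring is an assumption


@dataclass
class Fact:
    text: str
    source_id: str  # citation back to the post the fact came from


# Hypothetical model interfaces: a small model picks facts from one chunk,
# and a final LLM condenses the surviving facts with citations preserved.
SelectFacts = Callable[[list[Post]], list[Fact]]
SummarizeFacts = Callable[[list[Fact]], list[Fact]]


def collect_facts(posts: list[Post], select_facts: SelectFacts,
                  summarize_facts: SummarizeFacts, chunk_size: int = 20) -> list[Fact]:
    # Highest-engagement posts first, so the early chunks carry the most signal.
    ranked = sorted(posts, key=lambda p: p.engagement, reverse=True)
    candidates: list[Fact] = []
    for start in range(0, len(ranked), chunk_size):
        candidates.extend(select_facts(ranked[start:start + chunk_size]))
    # Drop any fact whose citation doesn't point at a real input post.
    known_ids = {p.id for p in posts}
    candidates = [f for f in candidates if f.source_id in known_ids]
    return summarize_facts(candidates)
```

Checking citations against the input IDs before the final call is a cheap guard against a small model inventing sources.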

The first time the endpoint is called for a keyword, it can take up to half a minute to complete. But since the system caches the result, any repeat request takes just a few milliseconds.
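The caching behaviour can be sketched with a small in-memory cache keyed by (keyword, time period). This is an assumption-heavy sketch: the article doesn’t say where its cache lives, and a database or Redis would be more typical in production. The `FactsCache` class and its one-day TTL are hypothetical choices.

```python
import time
from typing import Callable


class FactsCache:
    """In-memory cache for the slow facts computation, keyed by (keyword, period)."""

    def __init__(self, compute: Callable[[str, str], list[str]],
                 ttl_seconds: float = 24 * 3600):
        self._compute = compute  # the slow path (chunked small-model calls)
        self._ttl = ttl_seconds
        self._entries: dict[tuple[str, str], tuple[float, list[str]]] = {}

    def get(self, keyword: str, period: str) -> list[str]:
        key = (keyword.lower(), period)  # normalise so "Agents" and "agents" share an entry
        now = time.monotonic()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # fast path: repeat requests skip the models entirely
        facts = self._compute(keyword, period)
        self._entries[key] = (now, facts)
        return facts
```

Only the first request for a keyword and period pays the model cost. Everyone after that gets the stored result until it expires.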

As long as the models are small enough, the cost of running this on a few hundred keywords per day is minimal. Later, we can have the system run several keywords in parallel.
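Running several keywords in parallel fits `asyncio` well, since each facts request is I/O-bound (a call to the endpoint). Here is a minimal sketch, assuming a hypothetical async `fetch_facts(keyword, period)` coroutine. The semaphore caps how many keywords hit the models at once; the limit of 5 is arbitrary.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical coroutine that returns the facts for one keyword and period.
FetchFacts = Callable[[str, str], Awaitable[list[str]]]


async def fetch_all_facts(keywords: list[str], period: str, fetch_facts: FetchFacts,
                          max_concurrency: int = 5) -> dict[str, list[str]]:
    semaphore = asyncio.Semaphore(max_concurrency)

    async def one(keyword: str) -> tuple[str, list[str]]:
        async with semaphore:  # limit concurrent calls to the facts endpoint
            return keyword, await fetch_facts(keyword, period)

    # gather preserves input order, so results line up with the keywords.
    results = await asyncio.gather(*(one(k) for k in keywords))
    return dict(results)
```

Usage: `asyncio.run(fetch_all_facts(["agents", "rag"], "weekly", fetch_facts))`.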

You can probably imagine now how we could build a system that fetches these keywords and facts to build different reports with LLMs.

When to work with small vs larger models

Before moving on, let’s briefly note that choosing the right model size matters.

I think this is on everyone’s mind right now.

There are quite advanced models you can use for any workflow, but as we apply more and more LLMs to these applications, the number of calls per run adds up quickly, and this can get expensive.

So, when you can, use smaller models.

You saw that I used smaller models to cite and group sources in chunks. Other tasks that are great for small models include routing and parsing natural language into structured data.

If you find that the model is faltering, you can break the task down into smaller problems and use prompt chaining: first do one thing, then use that result to do the next, and so on.

You still want to use larger LLMs when you need to find patterns in very large texts, or when you’re communicating with humans.

In this workflow, the cost is minimal because the data is cached, we use smaller models for most tasks, and the only unique large LLM calls are the final ones.

How this agent works

Let’s go through how the agent works under the hood. I built the agent to run inside Discord, but that’s not the focus here. We’ll focus on the agent architecture.

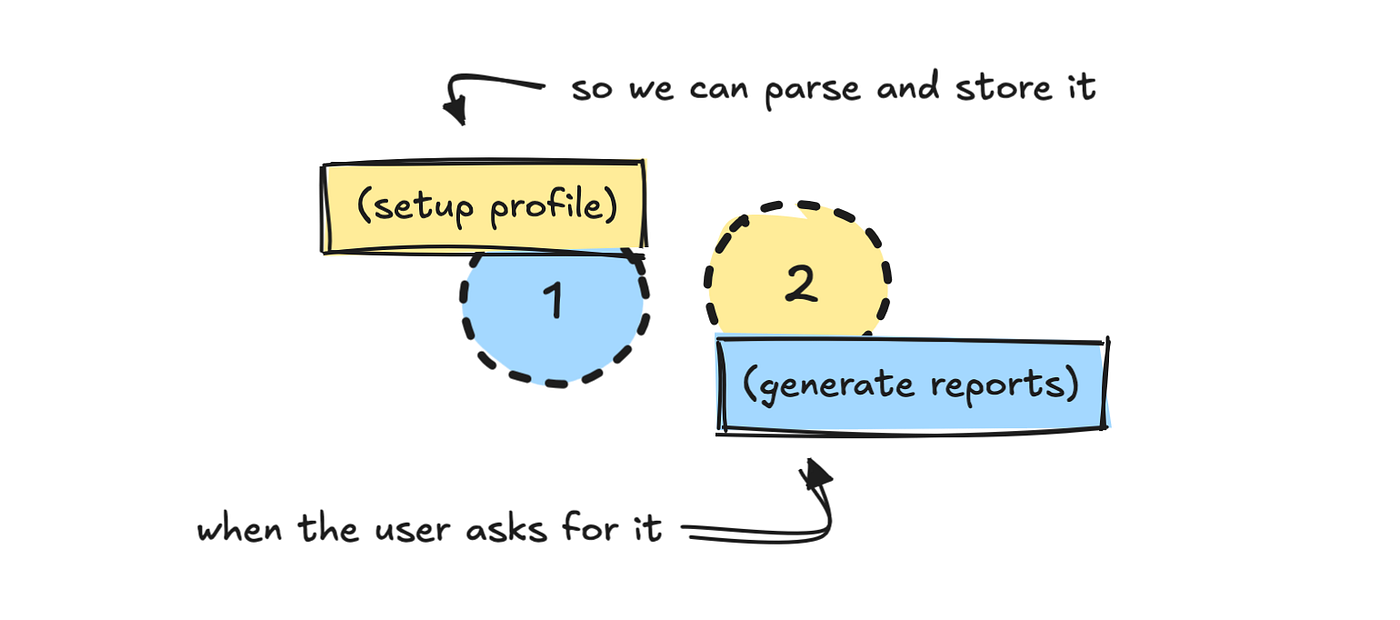

I split the process into two parts: one for setup, and one for the news. The first process asks the user to set up their profile.

Since I already know how to work with the data source, I’ve built a fairly extensive system prompt that helps the LLM translate these inputs into something we can fetch data with later.

```python
PROMPT_PROFILE_NOTES = """
You are tasked with defining a user persona based on the user's profile summary.

Your job is to:
1. Determine a short persona description for the user.
2. Select the most relevant categories (major and minor).
3. Choose keywords the user should track, strictly following the rules below (max 6).
4. Decide on a time period (based only on what the user asks for).
5. Decide whether the user prefers concise or detailed summaries.

Step 1. Persona
- Write a short description of how we should think about the user.
- Examples:
  - CMO for non-technical product → "non-technical, skip jargon, focus on product keywords."
  - CEO → "only include highly relevant keywords, no technical overload, straight to the point."
  - Developer → "technical, interested in detailed developer conversation and technical terms."

[...]
"""
```

I’ve also defined a schema for the outputs I need:

```python
from typing import List

from pydantic import BaseModel


class ProfileNotesResponse(BaseModel):
    # Structured output the LLM must return when translating a user profile
    persona: str
    major_categories: List[str]
    minor_categories: List[str]
    keywords: List[str]
    time_period: str
    concise_summaries: bool
```

Without domain knowledge of the API and how it works, an LLM is unlikely to figure out how to do this by itself.

You could try building a more extensive system where the LLM first tries to learn the API or the systems it’s supposed to use, but that would make the workflow more unpredictable and expensive.

For tasks like this, I try to always use structured outputs in JSON format. That way we can validate the result, and if validation fails, we re-run it.

This is the easiest way to work with LLMs in a system, especially when there’s no human in the loop to check what the model returns.
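The validate-and-re-run loop can be sketched without any LLM SDK. This is a stdlib-only illustration, and `call_llm` is a hypothetical function that returns the model’s raw JSON string. The manual field checks stand in for what pydantic does when you parse the response into the profile schema. With pydantic v2 you would call `ProfileNotesResponse.model_validate_json(raw)` and catch `ValidationError`. The limit of 3 attempts is arbitrary.

```python
import json
from typing import Any, Callable, Optional

# Expected top-level fields and types, mirroring the profile schema (shallow check).
SCHEMA: dict[str, type] = {
    "persona": str,
    "major_categories": list,
    "minor_categories": list,
    "keywords": list,
    "time_period": str,
    "concise_summaries": bool,
}


def validate_profile(raw: str) -> dict[str, Any]:
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected in SCHEMA.items():
        if not isinstance(data.get(field), expected):
            raise ValueError(f"field {field!r} missing or not {expected.__name__}")
    if len(data["keywords"]) > 6:  # the prompt caps keywords at 6
        raise ValueError("too many keywords")
    return data


def profile_with_retries(call_llm: Callable[[], str], max_attempts: int = 3) -> dict[str, Any]:
    last_error: Optional[Exception] = None
    for _ in range(max_attempts):
        try:
            return validate_profile(call_llm())
        except ValueError as err:  # any validation failure triggers a re-run
            last_error = err
    raise RuntimeError(f"no valid profile after {max_attempts} attempts") from last_error
```

Bounding the retries matters. If the model keeps failing, you want a clear error rather than an endless loop of paid calls.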

Once the LLM has translated the user profile into the properties we defined in the schema, we store the profile somewhere. I used MongoDB, but that’s optional.

Storing the persona isn’t strictly required, but you do need to translate what the user says into a form that lets you fetch the right data.

Generating the reports

Let’s look at what happens in the second step, when the user triggers the report.

When the user hits the /news command, with or without a time period set, we first fetch the user profile data we’ve stored.

This gives the system the context it needs to fetch relevant data, using both the categories and keywords tied to the profile. The default time period is weekly.
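Turning the stored profile into a data query might look like this. The dictionary keys mirror the profile schema, but the `build_news_query` helper and the shape of the query are hypothetical. The precedence is my assumption: an explicit period on the command wins, then the profile’s own period, then the weekly default.

```python
from typing import Any, Optional

DEFAULT_PERIOD = "weekly"


def build_news_query(profile: dict[str, Any],
                     requested_period: Optional[str] = None) -> dict[str, Any]:
    """Combine the stored profile with the /news arguments into one data query."""
    # Assumed precedence: command argument, then profile setting, then weekly.
    period = requested_period or profile.get("time_period") or DEFAULT_PERIOD
    return {
        "categories": profile.get("major_categories", []) + profile.get("minor_categories", []),
        "keywords": profile.get("keywords", []),
        "period": period,
    }
```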

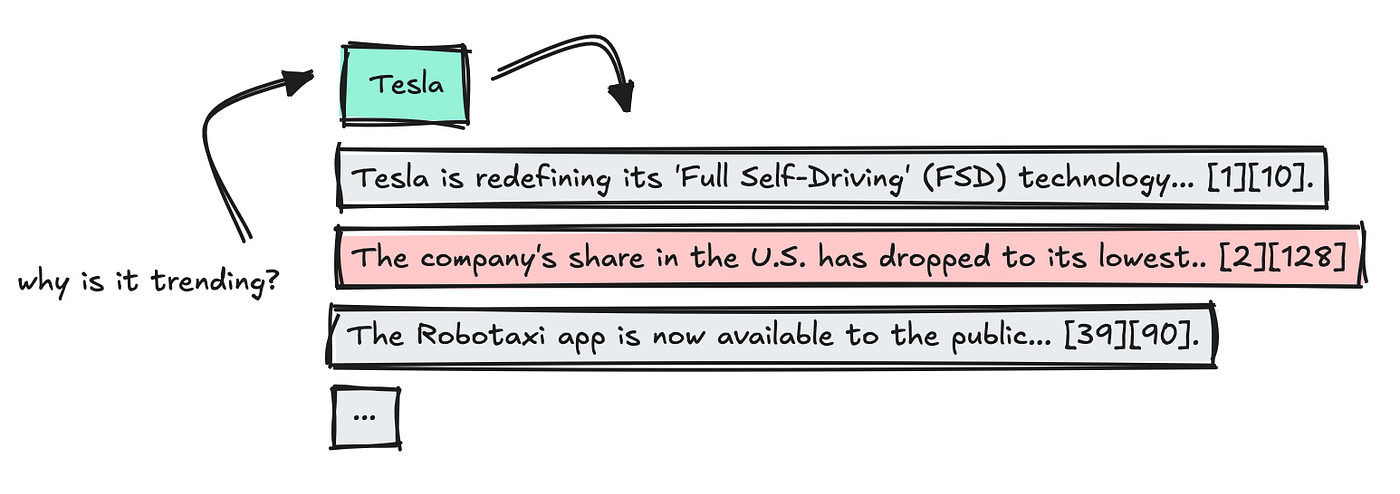

From this, we get a list of top and trending keywords for the selected time period that may interest the user.

Without this data source, building something like this would have been difficult. The data needs to be prepared in advance for the LLM to work with it properly.

After fetching keywords, it could make sense to add an LLM step that filters out keywords irrelevant to the user. I didn’t do that here.

The more unnecessary information an LLM is given, the harder it becomes for it to focus on what really matters. Your job is to make sure that whatever you feed it is relevant to the user’s actual question.

Next, we use the endpoint prepared earlier, which contains cached “facts” for each keyword. This gives us already vetted and sorted information for each one.

We run keyword calls in parallel to speed things up, but the first person to request a new keyword still has to wait a bit longer.

Once the results are in, we combine the data, remove duplicates, and parse the citations so each fact links back to a specific source via a keyword number.
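The merge step can be sketched as follows, under assumptions about the data shape:

- Each keyword’s result is a list of facts, each carrying the URL of its source.
- Duplicates are detected by normalised fact text.
- Each unique source gets a number the LLM can cite as `[n]`.

The article links facts to sources via a keyword number. Numbering unique sources, as done here, is just one simple way to get that fact-to-source link. The `SourcedFact` and `merge_facts` names are illustrative, not the repository’s.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourcedFact:
    text: str
    source_url: str


def merge_facts(results: dict[str, list[SourcedFact]]) -> tuple[list[str], dict[int, str]]:
    """Merge per-keyword facts, drop duplicates, and number the sources for citation."""
    seen_texts: set[str] = set()
    source_numbers: dict[str, int] = {}
    lines: list[str] = []
    for facts in results.values():
        for fact in facts:
            norm = " ".join(fact.text.lower().split())  # normalise case and whitespace
            if norm in seen_texts:
                continue  # the same fact surfaced under two keywords
            seen_texts.add(norm)
            # First time we see a URL it gets the next number; later facts reuse it.
            number = source_numbers.setdefault(fact.source_url, len(source_numbers) + 1)
            lines.append(f"{fact.text} [{number}]")
    sources = {n: url for url, n in source_numbers.items()}
    return lines, sources
```

The numbered `sources` map is what lets the final report turn `[1]` back into a link.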

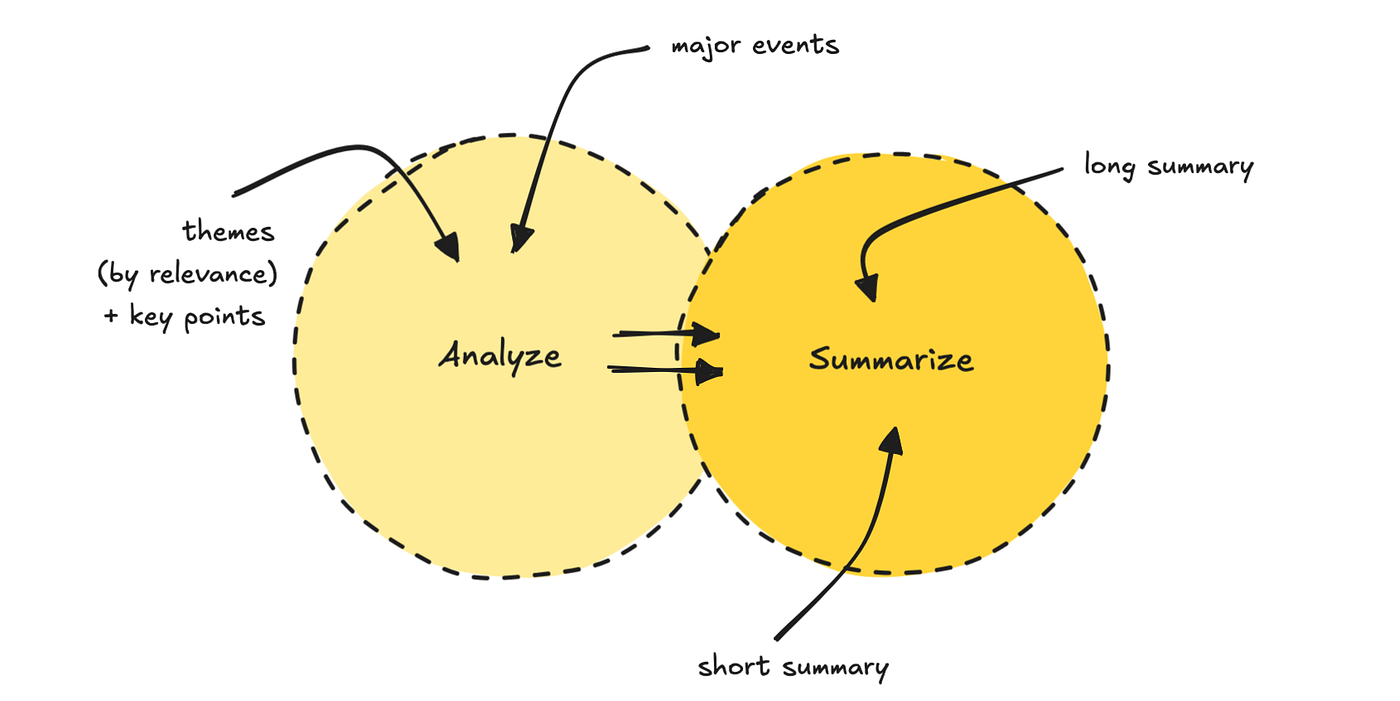

We then run the data through a prompt-chaining process. The first LLM finds 5 to 7 themes and ranks them by relevance, based on the user profile. It also pulls out the key points.

The second LLM pass uses both the themes and the original data to generate two different summary lengths, along with a title.

Splitting the work this way reduces the cognitive load on the model.
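The two-pass chain can be sketched like this. `llm` is a hypothetical callable that takes a prompt string and returns the model’s text; in the article this is a reasoning model, but the sketch is model-agnostic. The prompt wording is illustrative, not the repository’s. The point is the shape of the chain:

- The themes pass sees the persona and the facts.
- The summary pass gets the ranked themes plus the original facts.

That way, neither call has to do everything at once.

```python
import json
from typing import Any, Callable

LLM = Callable[[str], str]  # hypothetical: takes a prompt, returns the model's text


def build_report(llm: LLM, persona: str, facts: list[str]) -> dict[str, Any]:
    fact_block = "\n".join(facts)
    # Pass 1: find 5-7 themes ranked for this user and pull out key points.
    themes = json.loads(llm(
        "Find 5 to 7 themes in the facts below and rank them by relevance to this user: "
        f"{persona}. Return a JSON list of objects with keys \"theme\" and \"key_points\".\n\n"
        f"{fact_block}"
    ))
    # Pass 2: the summary call gets the ranked themes plus the original facts.
    report = json.loads(llm(
        "Using the themes and the original facts below, write a title, a short summary "
        "and a long summary. Return a JSON object with keys \"title\", \"short\", \"long\".\n\n"
        f"Themes: {json.dumps(themes)}\n\nFacts:\n{fact_block}"
    ))
    report["themes"] = themes
    return report
```

In a real system, each `json.loads` would go through the same validate-and-retry step used for the profile.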

This last step to build the report takes the most time, since I chose to use a reasoning model like GPT-5.

You could swap it for something faster, but I find advanced models are better at this final step.

The full process takes a few minutes, depending on how much has already been cached that day.

Check out the finished result below.

If you want to look at the code and build this bot yourself, you can find it here. If you just want to generate a report, you can join this channel.

I have some plans to improve it, but I’m happy to hear feedback if you find it useful.

And if you want a challenge, you can rebuild it into something else, like a content generator.

Notes on building agents

Every agent you build will be different, so this is by no means a blueprint for building with LLMs. But you can see the level of software engineering this demands.

LLMs, at least for now, don’t remove the need for good software and data engineers.

For this workflow, I’m mostly using LLMs to translate natural language into JSON and then move that through the system programmatically. It’s the easiest way to control the agent process, but also not what people usually imagine when they think of AI applications.

There are situations where using a more free-moving agent is ideal, especially when there’s a human in the loop.

Nevertheless, hopefully you learned something, or got inspiration to build something on your own.

If you want to follow my writing, follow me here, on my website, Substack, or LinkedIn.

❤