In the Author Spotlight series, TDS Editors chat with members of our community about their career path in data science and AI, their writing, and their sources of inspiration. Today, we’re thrilled to share our conversation with Ida Silfverskiöld.

Ida is a generalist, educated as an economist and self-taught in software engineering. She has a professional background in product and marketing management, which means she has a rare blend of product, marketing, and development skills. Over the past few years, she’s been teaching and building in the LLM, NLP, and computer vision space, digging into areas such as agentic AI, chain-of-thought strategies, and the economics of hosting models.

You studied economics, then learned to code and moved through product, growth, and now hands-on AI building. What perspective does that generalist path give you that specialists sometimes miss?

I’m not sure.

People see generalists as having shallow knowledge, but generalists can also dig deep.

I see generalists as people with many interests and a drive to understand the whole, not just one part. As a generalist you look at the tech, the customer, the data, the market, the cost of the architecture, and so on. It gives you an edge to move across topics and still do good work.

I’m not saying specialists can’t do this, but generalists tend to adapt faster because they’re used to picking things up quickly.

You’ve been writing a lot about agentic systems lately. When do “agents” actually outperform simpler LLM + RAG patterns, and when are we overcomplicating things?

It depends on the use case, but often we throw AI into a lot of things that probably don’t need it. If you can control the system programmatically, you should. LLMs are great for translating human language into something a computer can understand, but they also introduce unpredictability.

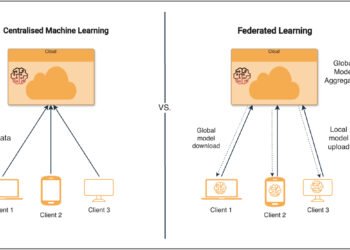

As for RAG, adding an agent means adding costs, so doing it just for the sake of having an agent isn’t a great idea. You can work around it by using smaller models as routers (but this adds work). I’ve added an agent to a RAG system once because I knew there would be questions about building it out to also “act.” So again, it depends on the use case.
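To illustrate the routing idea, here is a minimal sketch under stated assumptions: deterministic rules decide in code whenever they can, and only action-like requests pay for a call to a small routing model. The `ACTION_WORDS` set, the `route` function, and the two route names are hypothetical, and `small_model_classify` is a stand-in for whatever small model you would actually call.

```python
from typing import Callable, Literal

Route = Literal["rag", "agent"]

# Hypothetical trigger words for requests that ask the system to "act".
ACTION_WORDS = {"create", "update", "delete", "send", "schedule"}


def route(query: str, small_model_classify: Callable[[str], Route]) -> Route:
    """Send a query to the plain RAG path or the (more expensive) agent path."""
    words = set(query.lower().split())
    # Decide in code whenever we can: no action word means a plain lookup.
    if not words & ACTION_WORDS:
        return "rag"
    # Only action-like queries pay for a call to the small routing model.
    return small_model_classify(query)


if __name__ == "__main__":
    calls = []

    def stub_classifier(q: str) -> Route:
        # Stand-in for a small LLM; a real router would make a model call here.
        calls.append(q)
        return "agent"

    print(route("What is our refund policy?", stub_classifier))  # rag
    print(route("Send the refund policy to the new hire", stub_classifier))  # agent
    print(len(calls))  # 1
```

The design choice is to keep the model as a fallback rather than the first step, so the extra cost and unpredictability only apply to the queries that might need an agent.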

When you say agentic AI needs “evaluations,” what’s your list of go-to metrics? And how do you decide which one to use?

I wouldn’t say you always need evals, but companies will ask for them, so it’s good to know what teams measure for product quality. If a product will be used by a lot of people, make sure you have some in place. I did quite a lot of research here to understand the frameworks and metrics that have been defined.

Generic metrics are probably not enough, though. You need a few custom ones for your use case. So the evals differ by application.

For a coding copilot, you might track what percentage of completions a developer accepts (acceptance rate) and whether the full chat reached its goal (completeness).
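To make the two copilot metrics concrete, here is a minimal sketch that computes them over logged sessions. The `ChatSession` record is hypothetical, and it assumes each session already carries a `goal_reached` label (from a human reviewer or a judge model, for example); producing that label is the hard part and is not shown.

```python
from dataclasses import dataclass, field


@dataclass
class ChatSession:
    # One flag per completion shown: True if the developer accepted it.
    completions_accepted: list[bool] = field(default_factory=list)
    # Hypothetical label: did the whole chat reach the user's goal?
    goal_reached: bool = False


def acceptance_rate(sessions: list[ChatSession]) -> float:
    """Share of all shown completions that developers accepted."""
    shown = [flag for s in sessions for flag in s.completions_accepted]
    return sum(shown) / len(shown) if shown else 0.0


def completeness(sessions: list[ChatSession]) -> float:
    """Share of chat sessions that reached their goal."""
    return sum(s.goal_reached for s in sessions) / len(sessions) if sessions else 0.0


if __name__ == "__main__":
    logs = [
        ChatSession([True, False], goal_reached=True),
        ChatSession([True, True, False], goal_reached=False),
    ]
    print(acceptance_rate(logs))  # 0.6
    print(completeness(logs))  # 0.5
```

This sketch pools completions across sessions, so long sessions weigh more; averaging the rate per session is an equally valid choice if every session should count the same.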

For commerce agents, you might measure whether the agent picked the right products and whether answers are grounded in the store’s data.

Security and safety metrics are important too, such as bias, toxicity, and how easy it is to break the system (jailbreaks, data leaks).

For RAG, see my article where I break down the usual metrics. Personally, I’ve only set up metrics for RAG so far.

It would be interesting to write an article mapping how different AI apps set up evals, for example Shopify Sidekick for commerce agents, and other tools such as legal research assistants.

In your Agentic RAG Applications article, you built a Slack agent that takes company knowledge into account (with LlamaIndex and Modal). What design choice ended up mattering more than expected?

The retrieval part is where you’ll get stuck, especially chunking. When you work with RAG applications, you split the process into two parts: retrieval and generation. The first part is about fetching the right information, and getting it right is important because you can’t overload an agent with too much irrelevant information. To make it precise, the chunks need to be quite small and relevant to the search query.

However, if you make the chunks too small, you risk giving the LLM too little context. With chunks that are too large, the search system may become imprecise.
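A minimal sketch of the size trade-off, assuming simple fixed-size chunking by whitespace-separated words. This is not the document-type-aware chunking described next; `chunk_words`, `chunk_size`, and `overlap` are hypothetical names for illustration only.

```python
def chunk_words(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into windows of `chunk_size` words, repeating `overlap` words between neighbours."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    doc = "a b c d e f g h i j"
    print(chunk_words(doc, chunk_size=4, overlap=1))
    # ['a b c d', 'd e f g', 'g h i j']
    print(len(chunk_words(doc, chunk_size=2)))  # 5
```

Lowering `chunk_size` produces more, narrower chunks that match a query more precisely but carry less surrounding context; raising it does the opposite. A small `overlap` is one common way to soften the loss of context at chunk boundaries.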

I set up a system that chunked based on the type of document, but right now I have an idea for using context expansion after retrieval.
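One common way to do context expansion, sketched here as an assumption rather than as the approach Ida has in mind: retrieve small, precise chunks, then hand the LLM each hit together with its neighbouring chunks from the same document. `expand_context` and its `window` parameter are hypothetical, and the sketch assumes chunks are stored in document order.

```python
def expand_context(chunks: list[str], hit_ids: list[int], window: int = 1) -> list[str]:
    """Grow each retrieved chunk by `window` neighbours on each side and merge overlaps.

    Assumes `chunks` holds one document's chunks in their original order and
    `hit_ids` are valid indices into it (e.g. returned by the retriever).
    """
    if not chunks:
        return []
    last = len(chunks) - 1
    spans = sorted((max(0, i - window), min(last, i + window)) for i in hit_ids)
    merged: list[tuple[int, int]] = []
    for lo, hi in spans:
        if merged and lo <= merged[-1][1] + 1:
            # Overlapping or adjacent span: extend the previous passage instead.
            merged[-1] = (merged[-1][0], max(merged[-1][1], hi))
        else:
            merged.append((lo, hi))
    return [" ".join(chunks[lo:hi + 1]) for lo, hi in merged]


if __name__ == "__main__":
    doc_chunks = [f"c{i}" for i in range(7)]
    print(expand_context(doc_chunks, hit_ids=[1, 5]))
    # ['c0 c1 c2', 'c4 c5 c6']
    print(expand_context(doc_chunks, hit_ids=[1, 3]))
    # ['c0 c1 c2 c3 c4']
```

This keeps search precise (small chunks are what gets matched) while the generation step still sees enough surrounding text; merging adjacent spans avoids sending the same neighbour twice.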

Another design choice you need to keep in mind is that although retrieval often benefits from hybrid search, it may not be enough. Semantic search can connect things that answer the question without using the exact wording, while sparse methods can identify exact keywords. But sparse methods like BM25 are token-based by default, so plain BM25 won’t match substrings.

So, if you also want to search for substrings (part of a product ID, that kind of thing), you need to add a search layer that supports partial matches as well.
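A minimal sketch of one possible partial-match layer, assuming a character trigram index with a final substring check. `token_match` is a simplified stand-in for token-level matching (it does no BM25 scoring) and only shows why a fragment like `4821` misses the whole token `48213`. All names and the sample catalog are hypothetical, and merging these results with the semantic and BM25 results is not shown.

```python
import re
from collections import defaultdict


def tokens(text: str) -> set[str]:
    # Simple word-level tokenization, a stand-in for a sparse retriever's analyzer.
    return set(re.findall(r"\w+", text.lower()))


def token_match(docs: dict[str, str], query: str) -> list[str]:
    """Docs sharing at least one whole token with the query (no BM25 scoring)."""
    q = tokens(query)
    return sorted(d for d, text in docs.items() if q & tokens(text))


def ngrams(text: str, n: int = 3) -> set[str]:
    text = text.lower()
    return {text[i:i + n] for i in range(len(text) - n + 1)}


class SubstringIndex:
    """Character n-gram index that supports partial matches such as ID fragments."""

    def __init__(self, docs: dict[str, str], n: int = 3):
        self.docs = docs
        self.n = n
        self.postings = defaultdict(set)
        for doc_id, text in docs.items():
            for gram in ngrams(text, n):
                self.postings[gram].add(doc_id)

    def search(self, query: str) -> list[str]:
        q = query.lower()
        grams = ngrams(q, self.n)
        if not grams:
            # Query shorter than n characters: fall back to a linear scan.
            return sorted(d for d, text in self.docs.items() if q in text.lower())
        # Candidates contain every query n-gram; confirm the actual substring.
        candidates = set.intersection(*(self.postings.get(g, set()) for g in grams))
        return sorted(d for d in candidates if q in self.docs[d].lower())


if __name__ == "__main__":
    catalog = {"p1": "Trail shoe SKU-48213-XL", "p2": "Rain jacket SKU-77190-M"}
    print(token_match(catalog, "4821"))  # []
    print(SubstringIndex(catalog).search("4821"))  # ['p1']
```

The n-gram lookup only narrows the candidates, so the final `in` check is what guarantees a true substring match; without it, a document containing the same trigrams in a different order could slip through.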

There is more, but I risk this becoming an entire article if I keep going.

Across your consulting projects over the past two years, what problems have come up most often for your clients, and how do you address them?

The issue I see is that most companies are looking for something custom, which is great for consultants, but building in-house is riddled with complexities, especially for those who haven’t done it before. I saw that 95% figure from the MIT study about projects failing, and I’m not surprised. I think consultants should get good at certain use cases where they can quickly implement and tweak the product for clients, having already learnt how to do it. But we’ll see what happens.

You’ve written on TDS about so many different topics. Where do your article ideas come from? Client work, tools you want to try, or your own experiments? And what topic or problem is top of mind for you right now?

A bit of everything, honestly. The articles also help me ground my own knowledge, filling in missing pieces I may not have researched myself yet. Right now I’m researching how smaller models (mid-sized, around 3B–7B) can be used in agent systems, security, and especially how to improve RAG.

Zooming out: what’s one non-obvious capability teams should cultivate in the next 12–18 months (technical or cultural) to become genuinely AI-productive rather than just AI-busy?

Probably learning to build in the space (especially for business people): just getting an LLM to do something consistently is a way to understand how unpredictable LLMs are. It makes you a bit more humble.

To learn more about Ida’s work and stay up-to-date with her latest articles, you can follow her on TDS or LinkedIn.