Image by Author

# Introduction

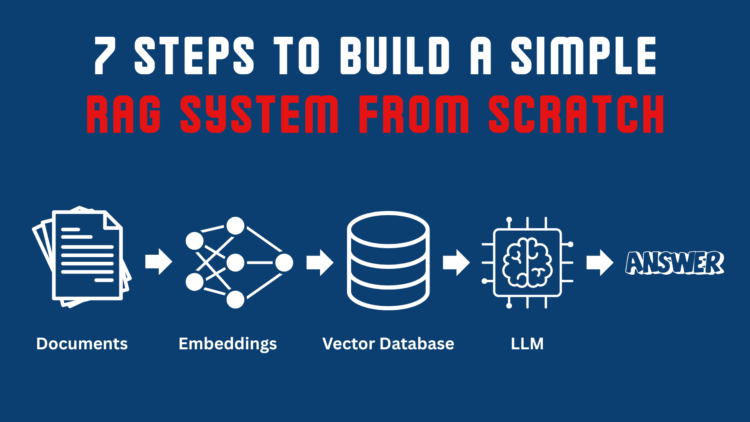

These days, almost everyone uses ChatGPT, Gemini, or another large language model (LLM). They make life easier but can still get things wrong. For example, I remember asking a generative model who won the most recent U.S. presidential election and getting the previous president’s name back. It sounded confident, but the model was simply relying on training data collected before the election took place. This is where retrieval-augmented generation (RAG) helps LLMs give more accurate and up-to-date responses. Instead of relying solely on the model’s internal knowledge, it pulls information from external sources, such as PDFs, documents, or APIs, and uses that to build a more contextual and reliable answer. In this guide, I’ll walk you through seven practical steps to build a simple RAG system from scratch.

# Understanding the Retrieval-Augmented Generation Workflow

Before we get to the code, here’s the idea in plain terms. A RAG system has two core pieces: the retriever and the generator. The retriever searches your knowledge base and pulls out the most relevant chunks of text. The generator is the language model that takes those snippets and turns them into a natural, useful answer. The process works as follows:

- A user asks a question.

- The retriever searches your indexed documents or database and returns the best-matching passages.

- Those passages are passed to the LLM as context.

- The LLM then generates a response grounded in that retrieved context.
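The four steps above can be sketched in a few lines of plain Python. This is only a toy illustration: `retrieve` ranks passages by word overlap instead of embeddings, and `generate` is a hypothetical stand-in that just assembles the prompt an LLM would receive. Neither function comes from a library.

```python
import re

def tokenize(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, passages, top_k=1):
    """Toy retriever: rank passages by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(passages, key=lambda p: len(q_words & tokenize(p)), reverse=True)
    return ranked[:top_k]

def generate(question, context_passages):
    """Stand-in for the LLM call: returns the prompt a real model would receive."""
    context = "\n".join(context_passages)
    return f"Context:\n{context}\n\nQuestion:\n{question}\n\nAnswer:"

passages = [
    "Supervised learning trains a model on labeled data.",
    "K-Means is a popular unsupervised clustering algorithm.",
]
question = "Which algorithm is used for clustering?"
top = retrieve(question, passages)
prompt = generate(question, top)
print(prompt)
```

The rest of the guide replaces the word-overlap ranking with embeddings and the stub with a real model, but the shape of the flow stays the same.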

Now we will break that flow down into seven simple steps and build it end to end.

# Step 1: Preprocessing the Data

Even though large language models already know a lot from textbooks and web data, they don’t have access to your private or newly generated information, such as research notes, company documents, or project files. RAG lets you feed the model your own data, reducing hallucinations and making responses more accurate and up to date. For this article, we’ll keep things simple and use a few short text files about machine learning concepts.

    data/
    ├── supervised_learning.txt
    └── unsupervised_learning.txt

supervised_learning.txt:

    In this type of machine learning (supervised), the model is trained on labeled data.
    In simple terms, every training example has an input and an associated output label.
    The objective is to build a model that generalizes well on unseen data.
    Common algorithms include:
    - Linear Regression
    - Decision Trees
    - Random Forests
    - Support Vector Machines
    Classification and regression tasks are performed in supervised machine learning.
    For example: spam detection (classification) and house price prediction (regression).
    They can be evaluated using accuracy, F1-score, precision, recall, or mean squared error.

unsupervised_learning.txt:

    In this type of machine learning (unsupervised), the model is trained on unlabeled data.
    Popular algorithms include:
    - K-Means
    - Principal Component Analysis (PCA)
    - Autoencoders
    There are no predefined output labels; the algorithm automatically detects
    underlying patterns or structures within the data.
    Typical use cases include anomaly detection, customer clustering,
    and dimensionality reduction.
    Performance can be measured qualitatively or with metrics such as silhouette score
    and reconstruction error.

The next task is to load this data. For that, we will create a Python file, load_data.py:

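    import os

    def load_documents(folder_path):
        """Read every .txt file in folder_path and return the contents as a list of strings."""
        docs = []
        # Sort the listing so documents load in a stable order across runs
        for file in sorted(os.listdir(folder_path)):
            if file.endswith(".txt"):
                with open(os.path.join(folder_path, file), 'r', encoding='utf-8') as f:
                    docs.append(f.read())
        return docs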

Before we use the data, we will clean it. If the text is messy, the retriever may return irrelevant or incorrect passages, which increases hallucinations. Now, let’s create another Python file, clean_data.py:

    import re

    def clean_text(text: str) -> str:
        # Collapse runs of whitespace (spaces, tabs, newlines) into a single space
        text = re.sub(r'\s+', ' ', text)
        # Replace runs of non-ASCII characters with a space
        text = re.sub(r'[^\x00-\x7F]+', ' ', text)
        return text.strip()
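One detail of these two substitutions is easy to miss: because non-ASCII characters are replaced with spaces after whitespace has already been collapsed, the result can still contain double spaces. The sketch below contrasts the order used above with a variant that handles non-ASCII characters first. `clean_text_reordered` is a hypothetical name used only for this illustration; it is not one of the tutorial’s files.

```python
import re

def clean_text(text: str) -> str:
    """Same order as clean_data.py: collapse whitespace, then replace non-ASCII."""
    text = re.sub(r'\s+', ' ', text)
    text = re.sub(r'[^\x00-\x7F]+', ' ', text)
    return text.strip()

def clean_text_reordered(text: str) -> str:
    """Variant: replace non-ASCII first so the whitespace pass also tidies the gaps."""
    text = re.sub(r'[^\x00-\x7F]+', ' ', text)
    text = re.sub(r'\s+', ' ', text)
    return text.strip()

# Messy input with extra whitespace and curly quotes (non-ASCII)
messy = "Supervised   learning\n\tuses \u201clabeled\u201d data. "
original_order = clean_text(messy)
reordered = clean_text_reordered(messy)
print(repr(original_order))  # 'Supervised learning uses  labeled  data.'
print(repr(reordered))       # 'Supervised learning uses labeled data.'
```

For the short sample files in this guide the difference is cosmetic, but on scraped text it can leave many stray double spaces in the chunks.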

Finally, combine everything in a new file called prepare_data.py to load and clean your documents together:

    from load_data import load_documents
    from clean_data import clean_text

    def prepare_docs(folder_path="data/"):
        """
        Loads and cleans all text documents from the given folder.
        """
        # Load documents
        raw_docs = load_documents(folder_path)

        # Clean documents
        cleaned_docs = [clean_text(doc) for doc in raw_docs]

        print(f"Prepared {len(cleaned_docs)} documents.")
        return cleaned_docs

# Step 2: Converting Text into Chunks

LLMs have a limited context window, i.e. they can process only a limited amount of text at once. We solve this by dividing long documents into short, overlapping pieces (a chunk is typically 300 to 500 words). We’ll use LangChain’s RecursiveCharacterTextSplitter, which splits text at natural boundaries such as paragraphs or sentences. Note that its chunk_size and chunk_overlap are measured in characters by default, so the values used below (500 and 100) are character counts. Each piece stays coherent on its own, and the system can quickly find the relevant one when answering.

split_text.py:

    # Newer LangChain releases expose this class from the langchain_text_splitters package
    from langchain.text_splitter import RecursiveCharacterTextSplitter

    def split_docs(documents, chunk_size=500, chunk_overlap=100):
        # Define the splitter (sizes are in characters by default)
        splitter = RecursiveCharacterTextSplitter(
            chunk_size=chunk_size,
            chunk_overlap=chunk_overlap
        )

        # create_documents takes a list of strings and returns LangChain Document objects
        chunks = splitter.create_documents(documents)
        print(f"Total chunks created: {len(chunks)}")
        return chunks

Chunking lets the system work with manageable pieces of text without losing their meaning. If we don’t add a little overlap between pieces, a sentence or idea that straddles a boundary gets cut in two, and the answer might not make sense.
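To make the effect of overlap concrete, here is a minimal sketch of fixed-size chunking with a sliding window. It is not how RecursiveCharacterTextSplitter works internally (that class also tries to split on separators such as paragraph breaks); `chunk_with_overlap` is a hypothetical helper written only for this illustration.

```python
def chunk_with_overlap(text, chunk_size, chunk_overlap):
    """Split text into character windows of chunk_size that share chunk_overlap characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window reaches the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks

chunks = chunk_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=2)
print(chunks)  # ['abcd', 'cdef', 'efgh', 'ghij']
```

Each window repeats the last two characters of the previous one, which is the same idea as chunk_overlap=100 above, just at a smaller scale.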

# Step 3: Creating and Storing Vector Embeddings

A computer doesn’t understand text directly; it works with numbers. So we need to convert our text chunks into numbers. These numbers are called vector embeddings, and they capture the meaning behind the text in a form the computer can compare. We can use tools such as OpenAI, SentenceTransformers, or Hugging Face for this. Let’s create a new file called create_embeddings.py and use SentenceTransformers to generate embeddings.

    from sentence_transformers import SentenceTransformer
    import numpy as np

    def get_embeddings(text_chunks):
        # Load embedding model
        model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')

        print(f"Creating embeddings for {len(text_chunks)} chunks:")
        embeddings = model.encode(text_chunks, show_progress_bar=True)

        print(f"Embeddings shape: {embeddings.shape}")
        return np.array(embeddings)

Each vector embedding captures the semantic meaning of its chunk. Similar text chunks will have embeddings that are close to each other in vector space. Now we will store the embeddings in a vector store such as FAISS (Facebook AI Similarity Search), Chroma, or Pinecone, which enables fast similarity search. For this example, let’s use FAISS (a lightweight, local option). You can install the CPU build from PyPI with pip install faiss-cpu.

Next, let’s create a file called store_faiss.py. First, we make the necessary imports:

    import faiss
    import numpy as np
    import pickle

Now we’ll create a FAISS index from our embeddings using the function build_faiss_index().

    def build_faiss_index(embeddings, save_path="faiss_index"):
        """
        Builds FAISS index and saves it.
        """
        dim = embeddings.shape[1]
        print(f"Building FAISS index with dimension: {dim}")

        # Use a simple flat L2 index (exact, brute-force search)
        index = faiss.IndexFlatL2(dim)
        index.add(embeddings.astype('float32'))

        # Save FAISS index
        faiss.write_index(index, f"{save_path}.index")
        print(f"Saved FAISS index to {save_path}.index")

        return index
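A flat L2 index performs an exact search: it compares the query with every stored vector and keeps the nearest ones. The sketch below reproduces that idea in plain Python on made-up two-dimensional vectors so the ranking is easy to check by hand. `l2_search` is a hypothetical helper, not a FAISS function; it reports squared Euclidean distances, which is also what IndexFlatL2 returns.

```python
def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def l2_search(vectors, query, top_k):
    """Return (distances, indices) of the top_k stored vectors closest to query."""
    scored = sorted((squared_l2(v, query), i) for i, v in enumerate(vectors))
    nearest = scored[:top_k]
    return [d for d, _ in nearest], [i for _, i in nearest]

# Toy "embeddings": position i corresponds to text chunk i
vectors = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
query = [0.9, 1.2]

distances, indices = l2_search(vectors, query, top_k=2)
print(indices)  # [1, 0]
```

FAISS does the same comparison in optimized native code, which is why a flat index stays practical for small and medium collections.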

Each embedding represents a text chunk, and FAISS retrieves the closest ones later when a user asks a question. Finally, we need to save all the text chunks (the index’s metadata) to a pickle file so they can be reloaded easily at retrieval time.

    def save_metadata(text_chunks, path="faiss_metadata.pkl"):
        """
        Saves the mapping of vector positions to text chunks.
        """
        with open(path, "wb") as f:
            pickle.dump(text_chunks, f)
        print(f"Saved text metadata to {path}")
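A plain list is enough as metadata because a flat FAISS index identifies each vector by its insertion position, so position i in the list is the text for vector i. Here is a minimal round-trip sketch, using a temporary file plus made-up chunk texts and search results purely for illustration:

```python
import os
import pickle
import tempfile

text_chunks = ["chunk about supervised learning", "chunk about K-Means", "chunk about PCA"]

# Save the list the same way save_metadata() does, but to a temporary path
path = os.path.join(tempfile.mkdtemp(), "faiss_metadata.pkl")
with open(path, "wb") as f:
    pickle.dump(text_chunks, f)

# Reload it later, as the retrieval step would
with open(path, "rb") as f:
    loaded_chunks = pickle.load(f)

# Pretend a search returned these vector positions (hypothetical values)
hit_positions = [2, 0]
hits = [loaded_chunks[i] for i in hit_positions]
print(hits)
```

Pickle files can execute code when loaded, so only load metadata files you created yourself.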

# Step 4: Retrieving Relevant Information

In this step, the user’s question is first converted into numerical form, just as we did with all the text chunks. The system then compares the question’s vector with the chunk vectors to find the closest ones. This process is called similarity search.
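As a side note on what “closest” means here: the index built in Step 3 ranks by squared Euclidean distance, while many embedding tutorials talk about cosine similarity. Assuming the embeddings are normalized to unit length (an assumption worth checking for your model), the two give the same ranking, because for unit vectors q and x:

$$
\|q - x\|^2 = \|q\|^2 + \|x\|^2 - 2\,q \cdot x = 2 - 2\cos(q, x)
$$

So under that assumption, a smaller L2 distance corresponds exactly to a larger cosine similarity.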

Let’s create a new file called retrieve_faiss.py and add the imports we need:

    import faiss
    import pickle
    import numpy as np
    from sentence_transformers import SentenceTransformer

Now, create a function that loads the previously saved FAISS index from disk so it can be searched.

    def load_faiss_index(index_path="faiss_index.index"):
        """
        Loads the saved FAISS index from disk.
        """
        print("Loading FAISS index.")
        return faiss.read_index(index_path)

We’ll also need a function that loads the metadata, which contains the text chunks we saved earlier.

    def load_metadata(metadata_path="faiss_metadata.pkl"):
        """
        Loads text chunk metadata (the actual text pieces).
        """
        print("Loading text metadata.")
        with open(metadata_path, "rb") as f:
            return pickle.load(f)

The original text chunks are stored in a metadata file (faiss_metadata.pkl) and are used to map FAISS results back to readable text. Next, we create a function that takes a user’s query, embeds it, and finds the top matching chunks in the FAISS index. This is where the semantic search takes place.

    def retrieve_similar_chunks(query, index, text_chunks, top_k=3):
        """
        Retrieves top_k most relevant chunks for a given query.

        Parameters:
            query (str): The user's input question.
            index (faiss.Index): FAISS index object.
            text_chunks (list): Original text chunks.
            top_k (int): Number of top results to return.

        Returns:
            list: Top matching text chunks.
        """
        # Embed the query with the same model used for the chunks
        model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')

        # Ensure query vector is float32 as required by FAISS
        query_vector = model.encode([query]).astype('float32')

        # Search FAISS for nearest vectors
        distances, indices = index.search(query_vector, top_k)

        print(f"Retrieved top {top_k} relevant chunks.")
        # FAISS pads with -1 when the index holds fewer than top_k vectors; skip those
        return [text_chunks[i] for i in indices[0] if i != -1]

This gives you the top three most relevant text chunks to use as context.

# Step 5: Combining the Retrieved Context

Once we have the most relevant chunks, the next step is to combine them into a single context block. This context is then placed alongside the user’s query in the prompt before it is passed to the LLM. This step gives the model the information it needs to generate accurate, grounded responses. You can combine the chunks like this:

    context_chunks = retrieve_similar_chunks(query, index, text_chunks, top_k=3)
    context = "\n\n".join(context_chunks)

This merged context will be used later when building the final prompt for the LLM.
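As a rough sketch of how the merged context and the query end up in one prompt, the snippet below uses a hypothetical `build_prompt` helper and made-up chunks. The layout (context first, then the question, then an answer cue) mirrors the template used in the next step, but the function itself is only an illustration.

```python
def build_prompt(context_chunks, query):
    """Join retrieved chunks with blank lines and place them ahead of the question."""
    context = "\n\n".join(context_chunks)
    return f"Context:\n{context}\n\nQuestion:\n{query}\n\nAnswer:\n"

# Made-up chunks standing in for the output of retrieve_similar_chunks()
example_chunks = [
    "Unsupervised learning works on unlabeled data.",
    "Regression is a supervised learning task.",
]
prompt = build_prompt(example_chunks, "Does unsupervised ML cover regression tasks?")
print(prompt)
```

Separating chunks with a blank line keeps their boundaries visible to the model, which matters when neighbouring chunks come from different documents.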

# Step 6: Using a Large Language Model to Generate the Answer

Now we combine the retrieved context with the user query and feed it into an LLM to generate the final answer. Here, we’ll use a freely available open-source model from Hugging Face, but you can use any model you prefer.

Let’s create a new file called generate_answer.py and add the imports:

    from transformers import AutoTokenizer, AutoModelForCausalLM
    import torch
    from retrieve_faiss import load_faiss_index, load_metadata, retrieve_similar_chunks

Now define a function generate_answer() that performs the complete process:

    def generate_answer(query, top_k=3):
        """
        Retrieves relevant chunks and generates a final answer.
        """
        # Load FAISS index and metadata
        index = load_faiss_index()
        text_chunks = load_metadata()

        # Retrieve top relevant chunks
        context_chunks = retrieve_similar_chunks(query, index, text_chunks, top_k=top_k)
        context = "\n\n".join(context_chunks)

        # Load open-source LLM
        print("Loading LLM...")
        model_name = "TinyLlama/TinyLlama-1.1B-Chat-v1.0"

        # Load tokenizer and model; device_map="auto" needs the accelerate package installed
        tokenizer = AutoTokenizer.from_pretrained(model_name)
        model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=torch.float16, device_map="auto")

        # Build the prompt
        prompt = f"""
        Context:
        {context}
        Question:
        {query}
        Answer:
        """

        # Tokenize the prompt and move the tensors to the model's device
        inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

        # Generate without tracking gradients
        with torch.no_grad():
            outputs = model.generate(**inputs, max_new_tokens=200, pad_token_id=tokenizer.eos_token_id)

        # Decode the full sequence (prompt plus generated text)
        full_text = tokenizer.decode(outputs[0], skip_special_tokens=True)

        # Simple way to remove the prompt part from the output
        answer = full_text.split("Answer:")[1].strip() if "Answer:" in full_text else full_text.strip()

        print("\nFinal Answer:")
        print(answer)
        return answer
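Splitting on the first occurrence of the answer marker works for this template, but it would cut the text in the wrong place if a retrieved chunk happened to contain that same marker. One possible alternative, sketched below under the assumption that the prompt’s own marker is the last one in the decoded text, is to split on the final occurrence instead. `extract_answer` is a hypothetical helper, and it has the opposite weakness: if the model itself writes the marker again, only the text after that repetition is kept.

```python
def extract_answer(full_text, marker="Answer:"):
    """Return the text after the last occurrence of marker, or the whole text if absent."""
    _, found, tail = full_text.rpartition(marker)
    return tail.strip() if found else full_text.strip()

# Decoded output where a retrieved chunk also contains the marker (made-up text)
decoded = (
    "Context:\nQuiz sheet. Answer: see page 2.\n"
    "Question:\nDoes unsupervised ML cover regression tasks?\n"
    "Answer:\nNo, regression is a supervised task."
)
first_split = decoded.split("Answer:")[1].strip()
last_split = extract_answer(decoded)
print(first_split)
print(last_split)
```

Here the first-occurrence split returns part of the context, while the last-occurrence split returns the generated sentence.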

# Step 7: Running the Full Retrieval-Augmented Generation Pipeline

This final step brings everything together. We’ll create a main.py file that automates the entire workflow, from data loading to generating the final answer.

    # Data preparation
    from prepare_data import prepare_docs
    from split_text import split_docs

    # Embedding and storage
    from create_embeddings import get_embeddings
    from store_faiss import build_faiss_index, save_metadata

    # Retrieval and answer generation
    from generate_answer import generate_answer

Now define the main function:

    def run_pipeline():
        """
        Runs the full end-to-end RAG workflow.
        """
        print("\nLoad and Clean Data:")
        documents = prepare_docs("data/")
        print(f"Loaded {len(documents)} clean documents.\n")

        print("Split Text into Chunks:")
        # documents is a list of strings; split_docs passes them to create_documents,
        # which returns a list of LangChain Document objects
        chunks_as_text = split_docs(documents, chunk_size=500, chunk_overlap=100)
        # Extract the text content from the LangChain Document objects
        texts = [c.page_content for c in chunks_as_text]
        print(f"Created {len(texts)} text chunks.\n")

        print("Generate Embeddings:")
        embeddings = get_embeddings(texts)

        print("Store Embeddings in FAISS:")
        index = build_faiss_index(embeddings)
        save_metadata(texts)
        print("Stored embeddings and metadata successfully.\n")

        print("Retrieve & Generate Answer:")
        query = "Does unsupervised ML cover regression tasks?"
        generate_answer(query)

Finally, run the pipeline:

    if __name__ == "__main__":
        run_pipeline()

Output:

Screenshot of the Output | Image by Author

# Wrapping Up

RAG closes the gap between what an LLM “already knows” and the constantly changing information out in the world. I’ve implemented a very basic pipeline so you can understand how RAG works. At the enterprise level, many advanced techniques, such as guardrails, hybrid search, streaming, and context optimization, come into play. If you’re interested in exploring more advanced concepts, here are a few of my personal favorites:

Kanwal Mehreen is a machine learning engineer and a technical writer with a profound passion for data science and the intersection of AI with medicine. She co-authored the ebook “Maximizing Productivity with ChatGPT”. As a Google Generation Scholar 2022 for APAC, she champions diversity and academic excellence. She’s also recognized as a Teradata Diversity in Tech Scholar, Mitacs Globalink Research Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having founded FEMCodes to empower women in STEM fields.
