“Better, Faster, Stronger”: that’s the bold title the authors chose for their paper introducing both YOLOv2 and YOLO9000. The full title of the paper is “YOLO9000: Better, Faster, Stronger” [1], which was published back in December 2016. The main focus of the paper is indeed to create YOLO9000, but let’s make things clear: despite the title, the model proposed in the study is called YOLOv2. The name YOLO9000 refers to their proposed algorithm specialized to detect over 9000 object categories, which is built on top of the YOLOv2 architecture.

In this article I am going to focus on how YOLOv2 works and how to implement the architecture from scratch with PyTorch. I will also talk a little bit about how the authors eventually ended up with YOLO9000.

From YOLOv1 to YOLOv2

As the name suggests, YOLOv2 is an improvement over YOLOv1. Thus, in order to understand YOLOv2, I recommend you read my previous articles about YOLOv1 [2] and its loss function [3] before reading this one.

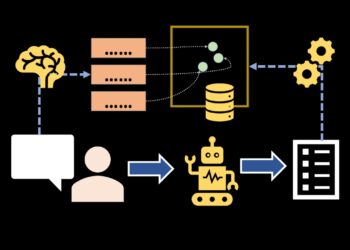

The authors raised two main problems with YOLOv1. First, the high localization error; in other words, the bounding box predictions made by the model are not very accurate. Second, the low recall, a condition where the model is unable to detect all objects within the image. The authors made a number of modifications to YOLOv1 to address these issues, which are summarized in Figure 1. We are going to discuss each of these modifications one by one in the following sub-sections.

Batch Normalization

The first modification the authors made was applying batch normalization (BN) layers. Remember that YOLOv1 is quite old. It was introduced back when the BN layer was not yet popular, which is why YOLOv1 does not use this normalization mechanism in the first place. BN layers are known to stabilize training, speed up convergence, and regularize the model. For this reason, the dropout layer we previously had in YOLOv1 is omitted once BN layers are applied. The paper mentions that attaching this kind of layer after each convolution gave a 2.4 percentage point improvement in mAP, from 63.4% to 65.8%.
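To make the normalization step a bit more concrete, here is a minimal sketch of what a BN layer computes for one channel in training mode. The function name `batch_norm` and the default values of `gamma`, `beta` and `eps` are my own choices for illustration, and the running statistics used at inference time are left out.

```python
import math

def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one channel's activations across a batch, then scale and shift."""
    mean = sum(values) / len(values)
    # Biased variance (divide by N), which is what is used for normalization during training
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

activations = [2.0, 4.0, 6.0, 8.0]   # dummy activations of a single channel
normalized = batch_norm(activations)
print(normalized)                    # values centered around 0 with roughly unit variance
```

In PyTorch this whole computation, along with the learnable scale and shift, is handled by nn.BatchNorm2d.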

Better Fine-Tuning

Next, the authors proposed a better way to perform fine-tuning. Previously in YOLOv1 the backbone model was pretrained on the ImageNet classification dataset with images of size 224×224. Then, they replaced the classification head with a detection head and directly fine-tuned it on the PASCAL VOC detection dataset at a resolution of 448×448. Here we can clearly see that there was something like a “jump” caused by the different image resolutions in pretraining and fine-tuning. The pipeline used for training YOLOv2 is slightly modified: the authors added an intermediate step, namely fine-tuning the model on 448×448 ImageNet before fine-tuning it again on PASCAL VOC at the same image resolution. This additional step allows the model to adapt to the higher-resolution images before being fine-tuned for detection, unlike YOLOv1, where the model is forced to work on 448×448 images directly after being pretrained on 224×224 images. This new fine-tuning pipeline increased the mAP by 3.7 percentage points, from 65.8% to 69.5%.

Anchor Box and Fully Convolutional Network

The next modification was related to the use of anchor boxes. In case you’re not yet familiar with it, an anchor box is essentially a template bounding box (a.k.a. prior box) corresponding to a single grid cell, which is rescaled to match the actual object size. The model is then trained to predict the offset from the anchor box rather than the bounding box coordinates like YOLOv1. We can think of an anchor box as the starting point from which the model makes its bounding box prediction. According to the paper, predicting offsets like this is easier than predicting coordinates, hence allowing the model to perform better. Figure 3 below illustrates 5 anchor boxes that correspond to the top-left grid cell. Later on, the same anchor boxes will be applied to all grid cells within the image.

The use of anchor boxes also changed the way we do object classification. Previously in YOLOv1 each grid cell predicted two bounding boxes, yet it could only predict a single object class. YOLOv2 addresses this issue by attaching the object classification mechanism to the anchor box rather than the grid cell, allowing each anchor box from the same grid cell to predict a different object class. Mathematically speaking, the length of the prediction vector of YOLOv1 can be formulated as (B×5)+C for each grid cell, whereas in YOLOv2 this prediction vector length changes to B×(5+C), where B is the number of bounding boxes to be generated, C is the number of classes in the dataset, and 5 accounts for x, y, w, h and the bounding box confidence value. With the mechanism introduced in YOLOv2, the prediction vector indeed becomes longer, but it allows each anchor box to predict its own class. The figure below illustrates the prediction vectors of YOLOv1 and YOLOv2, where we set B to 2 and C to 20. In this particular case, the lengths of the prediction vectors of the two models are (2×5)+20=30 and 2×(5+20)=50, respectively.
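The two formulas above are easy to check with a few lines of Python. The function names below are just my own for illustration; they only encode (B×5)+C and B×(5+C).

```python
def yolov1_vector_length(num_boxes, num_classes):
    # One shared class distribution per grid cell: (B x 5) + C
    return num_boxes * 5 + num_classes

def yolov2_vector_length(num_anchors, num_classes):
    # Each anchor box carries its own class distribution: B x (5 + C)
    return num_anchors * (5 + num_classes)

print(yolov1_vector_length(2, 20))   # 30
print(yolov2_vector_length(2, 20))   # 50
print(yolov2_vector_length(5, 20))   # 125
```

The last call uses 5 anchor boxes and 20 classes, which gives the 125 channels we will see again in the implementation section.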

At this point the authors also replaced the fully-connected layers in YOLOv1 with a stack of convolution layers, making the entire model a fully convolutional network with a downsampling factor of 32. This downsampling factor reduces an input tensor of size 448×448 to 14×14. The authors argued that large objects are usually located in the middle of an image, so they made the output feature map have odd dimensions, guaranteeing that there is a single center cell to predict such objects. To achieve this, the authors changed the default input shape to 416×416 so that the output has a spatial resolution of 13×13.

Interestingly, the use of anchor boxes and a fully convolutional network caused the mAP to decrease by 0.3 percentage points, from 69.5% to 69.2%, yet at the same time the recall increased by 7 percentage points, from 81% to 88%. This improvement in recall was mainly caused by the increase in the number of predictions made by the model. In the case of YOLOv1, the model could only predict 7×7=49 objects in total, whereas YOLOv2 can predict up to 13×13×5=845 objects, where the number 5 comes from the default number of anchor boxes used. Meanwhile, the decrease in mAP indicated that there was room for improvement in the anchor boxes.
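The grid sizes and the number of predictions mentioned above follow directly from the downsampling factor. Here is a small sketch of that arithmetic; the helper names `grid_size` and `num_predictions` are hypothetical and not part of the paper or of the implementation later in this article.

```python
DOWNSAMPLING_FACTOR = 32  # total stride of the fully convolutional network

def grid_size(input_size):
    """Side length of the output grid for a square input of the given size."""
    if input_size % DOWNSAMPLING_FACTOR != 0:
        raise ValueError("input size should be a multiple of the downsampling factor")
    return input_size // DOWNSAMPLING_FACTOR

def num_predictions(input_size, num_anchors):
    """Total number of boxes predicted: one per anchor box per grid cell."""
    side = grid_size(input_size)
    return side * side * num_anchors

print(grid_size(448))            # 14 (even, so there is no single center cell)
print(grid_size(416))            # 13 (odd, so there is exactly one center cell)
print(num_predictions(416, 5))   # 845
```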

Prior Box Clustering and Constrained Predictions

The authors indeed saw a problem in the anchor boxes, so in the next step they tried to modify the way they work. Previously in Faster R-CNN the anchor boxes were handpicked, which meant they did not optimally represent all object shapes in the dataset. To address this problem, the authors used K-means to cluster the distribution of the bounding box sizes. They did this by taking the w and h values of the bounding boxes in the object detection dataset, placing them in a two-dimensional space, and clustering the datapoints with K-means as usual. The authors decided to use K=5, which essentially means that we will end up with five clusters.

The illustration in Figure 5 below shows what the bounding box size distribution looks like, where each black datapoint represents a single bounding box in the dataset and the green circles are the centroids, which will then act as the sizes of our anchor boxes. Note that this illustration is created from dummy data, but the idea is that the datapoint located at the top-right represents a large square bounding box, the one at the top-left is a vertical rectangular box, and so on.

If you’re familiar with K-means, you know that we typically use Euclidean distance to measure the distance between datapoints and centroids. Here, however, the authors created a new distance metric specifically for this case, in which they used the complement of the IOU between the bounding boxes and the cluster centroids. See the equation below for the details.

d(box, centroid) = 1 − IOU(box, centroid)
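To illustrate the idea, below is a minimal sketch of K-means with the IOU-based distance on a handful of dummy (w, h) pairs. A few things here are my own assumptions rather than details taken from the paper: the boxes are compared as if they shared the same center, the centroids are updated with a plain mean of w and h, the initial centroids are picked by hand, and all function names are hypothetical.

```python
def iou_wh(box, centroid):
    """IOU of two boxes given as (w, h), assuming they share the same center."""
    inter = min(box[0], centroid[0]) * min(box[1], centroid[1])
    union = box[0] * box[1] + centroid[0] * centroid[1] - inter
    return inter / union

def iou_distance(box, centroid):
    # Complement of the IOU: identical boxes have distance 0
    return 1.0 - iou_wh(box, centroid)

def kmeans_iou(boxes, centroids, num_iters=10):
    centroids = list(centroids)
    for _ in range(num_iters):
        # Assignment step: each box goes to the centroid with the smallest IOU distance
        clusters = [[] for _ in centroids]
        for box in boxes:
            nearest = min(range(len(centroids)),
                          key=lambda i: iou_distance(box, centroids[i]))
            clusters[nearest].append(box)
        # Update step: mean width and height (an empty cluster keeps its old centroid)
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = (sum(w for w, _ in members) / len(members),
                                sum(h for _, h in members) / len(members))
    return centroids

# Dummy (w, h) pairs: three small square-ish boxes and three tall boxes
boxes = [(10, 12), (12, 10), (11, 11), (50, 100), (55, 95), (45, 105)]
centroids = kmeans_iou(boxes, centroids=[boxes[0], boxes[3]])
print(centroids)   # [(11.0, 11.0), (50.0, 100.0)]
```

With real data we would run this on every ground-truth box in the dataset with K=5, and the resulting centroids would become the prior box sizes.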

Using the distance metric above, we can see in the following table that the prior boxes generated with K-means clustering (highlighted in blue) have a higher average IOU than the prior boxes used in Faster R-CNN (highlighted in green) despite the smaller number of prior boxes (5 vs 9). This essentially indicates that the proposed clustering mechanism lets the resulting prior boxes represent the bounding box size distribution in the dataset better than the handpicked anchor boxes.

Still related to prior boxes, the authors found that predicting anchor box offsets like Faster R-CNN was still not optimal because the equation is unbounded. If we take a look at Figure 8 below, there is a possibility that the box position is shifted wildly across the entire image, making training difficult, especially in the early stages.

Instead of predicting offsets relative to the anchor box like Faster R-CNN, the authors solved this issue by adopting the idea of predicting location coordinates relative to the grid cell from YOLOv1. However, the authors further modified this by introducing a sigmoid function to constrain the xy coordinate prediction of the network, which bounds the value to the range of 0 to 1 so that the predicted location never falls outside the corresponding grid cell, as shown in the first and second rows of Figure 9. Next, the w and h of the bounding box are processed with an exponential function (third and fourth rows), which prevents negative values, since a negative width or height makes no sense. Meanwhile, the confidence score in the fifth row is computed the same way as in YOLOv1, namely as the product of the objectness confidence and the IOU between the predicted and the target box.
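The constraints described above can be sketched as a small decoding function. This follows the formulation in the paper, where the raw confidence output also passes through a sigmoid. The function and argument names are hypothetical, and I am assuming that all values are expressed in units of grid cells.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def decode_box(t_x, t_y, t_w, t_h, t_o, cell_x, cell_y, prior_w, prior_h):
    """Turn raw network outputs for one anchor box into a bounding box (grid-cell units)."""
    b_x = sigmoid(t_x) + cell_x        # center x stays inside the grid cell
    b_y = sigmoid(t_y) + cell_y        # center y stays inside the grid cell
    b_w = prior_w * math.exp(t_w)      # width is a positive rescaling of the prior width
    b_h = prior_h * math.exp(t_h)      # height is a positive rescaling of the prior height
    confidence = sigmoid(t_o)          # confidence bounded to the range 0 to 1
    return b_x, b_y, b_w, b_h, confidence

# All-zero raw outputs: the center of cell (6, 6) with exactly the prior's size
box = decode_box(0.0, 0.0, 0.0, 0.0, 0.0, cell_x=6, cell_y=6, prior_w=3.0, prior_h=4.0)
print(box)   # (6.5, 6.5, 3.0, 4.0, 0.5)
```

No matter how large or small t_x and t_y get, the decoded center cannot leave its grid cell, which is exactly what makes this formulation more stable than the unbounded one.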

So in simple terms, we do adopt the concept of prior boxes introduced by Faster R-CNN, but instead of handpicking the boxes, we use clustering to automatically find the most suitable prior box sizes. The bounding box is then created with an additional sigmoid function for the xy and an exponential function for the wh. It is worth noting that the x and y are now relative to the grid cell while the w and h are relative to the prior box. The authors found that this method improved mAP from 69.6% to 74.4%.

Passthrough Layer

The final output feature map of YOLOv2 has a spatial dimension of 13×13, in which each element corresponds to a single grid cell. The information contained within each grid cell is considered coarse, which makes sense because the maxpooling layers within the network work by taking only the highest values from the earlier feature map. This might not be a problem if the objects to be detected are fairly large. But if the objects are small, our model may have a hard time performing the detection due to the loss of information contained in the non-prominent pixels.

To address this problem, the authors proposed the so-called passthrough layer. The objective of this layer is to preserve fine-grained information from an earlier feature map before it is downsampled by a maxpooling layer. In Figure 12, the part of the network referred to as the passthrough layer is the connection that branches out from the network before eventually merging back into the main flow at the end. The idea of this layer is quite similar to the identity mapping introduced in ResNet. However, the process in that model is simpler because the tensor dimensions of the original flow and the skip-connection match exactly, allowing them to be summed element-wise. The case is different in the passthrough layer, where the later feature map has a smaller spatial dimension, hence we need a way to combine information from the two tensors. The authors came up with the idea of dividing the feature map in the passthrough layer and then stacking the divided tensors channel-wise, as shown in Figure 10 below. By doing so, the spatial dimension of the resulting tensor matches that of the subsequent feature map, allowing them to be concatenated along the channel axis. The fine-grained information from the earlier layer is then combined with the higher-level features from the later layer using a convolution layer.
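To see what “divide and stack channel-wise” means on actual numbers, here is a toy sketch on a plain nested list. The function name `space_to_depth` and the particular channel ordering are my own choices for illustration; the point is only that every 2×2 block of pixels is spread over 4 channels, so no value is thrown away.

```python
def space_to_depth(feature_map, scale=2):
    """feature_map is a nested list [C][H][W]; returns [scale*scale*C][H//scale][W//scale]."""
    num_channels = len(feature_map)
    h = len(feature_map[0]) // scale
    w = len(feature_map[0][0]) // scale
    out = []
    for di in range(scale):              # row offset inside each scale x scale block
        for dj in range(scale):          # column offset inside each block
            for c in range(num_channels):
                out.append([[feature_map[c][i * scale + di][j * scale + dj]
                             for j in range(w)]
                            for i in range(h)])
    return out

# A single-channel 4x4 feature map holding the values 0..15
fmap = [[[0, 1, 2, 3],
         [4, 5, 6, 7],
         [8, 9, 10, 11],
         [12, 13, 14, 15]]]

for channel in space_to_depth(fmap):
    print(channel)
# [[0, 2], [8, 10]]
# [[1, 3], [9, 11]]
# [[4, 6], [12, 14]]
# [[5, 7], [13, 15]]
```

The spatial size halves from 4×4 to 2×2 while the number of channels grows from 1 to 4, which is the same trade that turns a 26×26 feature map into a 13×13 one in YOLOv2.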

Multi-Scale Training

Previously I mentioned that in YOLOv2 all FC layers were replaced with a stack of convolution layers. This essentially allows us to feed images of different scales within the same training process, considering that the weights of a CNN-based model correspond to the trainable parameters in the kernels, which are independent of the input image size. In fact, this is the reason why the authors decided to remove the FC layers in the first place. During the training phase, the authors randomly changed the input resolution every 10 batches, choosing from 320×320, 352×352, 384×384, and so on up to 608×608, all multiples of 32. This process can be thought of as their approach to augmenting the data so that the model can detect objects across varying input dimensions, which I believe also allows the model to predict objects of different scales with better performance. This process boosted mAP to 76.8% on the default input resolution of 416×416, and it got even higher, to 78.6%, when they increased the image resolution further to 544×544.
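The resolution schedule described above can be sketched in a few lines. This is only an illustration of the sampling logic, not the authors’ training code; the names `SIZES` and `pick_input_size` are hypothetical, and the actual image resizing and training step are left out.

```python
import random

SIZES = list(range(320, 608 + 1, 32))   # 320, 352, ..., 608 (all multiples of 32)

def pick_input_size(batch_index, current_size, rng, interval=10):
    """Draw a new random input size every `interval` batches, otherwise keep the current one."""
    if batch_index % interval == 0:
        return rng.choice(SIZES)
    return current_size

rng = random.Random(0)   # seeded only to make the sketch reproducible
size = 416
schedule = []
for batch_index in range(30):
    size = pick_input_size(batch_index, size, rng)
    schedule.append(size)   # in real training, the batch would be resized to size x size here

print(SIZES)
print(schedule[0], schedule[10], schedule[20])   # the sizes used in each block of 10 batches
```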

Darknet-19

All the modifications to YOLOv1 we discussed in the previous sub-sections were related to how the authors improved detection quality in terms of mAP and recall. The focus of this sub-section is improving model performance in terms of speed. The paper mentions that the authors use a model called Darknet-19 as the backbone, which requires fewer operations than the backbone of YOLOv1 (5.58 billion vs 8.52 billion), allowing YOLOv2 to run faster than its predecessor. The original version of this model consists of 19 convolution layers and 5 maxpooling layers, the details of which can be seen in Figure 11 below.

It is important to note that the above architecture is the vanilla Darknet-19 model, which is only suitable for classification tasks. To adapt it to the requirements of YOLOv2, we need to slightly modify it by adding the passthrough layer and replacing the classification head with a detection head. You can see the modified architecture in Figure 12 below.

Here you can see that the passthrough layer is placed after the last 26×26 feature map. This passthrough layer reduces the spatial dimension to 13×13, allowing it to be concatenated channel-wise with the 13×13 feature map from the main flow. In the next section I am going to demonstrate how to implement this Darknet-19 architecture from scratch, including the detection head as well as the passthrough layer.

9000-Class Object Detection

The YOLOv2 model was initially trained on the PASCAL VOC and COCO datasets, which have 20 and 80 object classes, respectively. The authors saw this as a problem because they considered this number very limited for general use, and hence lacking in versatility. For this reason, it is necessary to improve the model such that it can detect a wider variety of object classes. However, creating an object detection dataset is very expensive and laborious, because we are required to annotate not only the object classes but also the bounding box information.

The authors came up with a very clever idea: they combined ImageNet, which has over 22,000 classes, with COCO using a class hierarchy mechanism which they refer to as WordTree, as shown in Figure 13. In the figure, the blue nodes are the classes from the COCO dataset, while the red ones are from the ImageNet dataset. The object categories available in COCO are relatively general, while those in ImageNet are far more fine-grained. For instance, where COCO has airplane, ImageNet has biplane, jet, airbus, and stealth fighter. So using the idea of WordTree, the authors put these four airplane types as subclasses of airplane. You can think of the inference like this: the model predicts the bounding box and the parent class, then checks whether that class has subclasses. If so, the model continues predicting from the smaller subset of classes.
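The hierarchical inference described above can be sketched as a walk down a small tree. Everything in this snippet is hypothetical: the tree is a tiny hand-written fragment (the “dog” branch is only there to give the root a second child), the conditional probabilities are made up, and the stopping threshold is an arbitrary value. It only illustrates the idea of following the most confident child at every split until the confidence becomes too low.

```python
# Hypothetical fragment of a class hierarchy (not the real WordTree)
CHILDREN = {
    "physical object": ["airplane", "dog"],
    "airplane": ["biplane", "jet", "airbus", "stealth fighter"],
}

# Made-up conditional probabilities P(child | parent) for a single predicted box
COND_PROB = {
    "airplane": 0.9, "dog": 0.1,
    "biplane": 0.05, "jet": 0.7, "airbus": 0.2, "stealth fighter": 0.05,
}

def predict_label(root="physical object", threshold=0.5):
    """Walk down the tree, taking the most likely child while the joint probability stays high."""
    node, prob = root, 1.0
    while node in CHILDREN:
        best = max(CHILDREN[node], key=lambda child: COND_PROB[child])
        if prob * COND_PROB[best] < threshold:
            break                      # not confident enough to go more fine-grained
        node = best
        prob = prob * COND_PROB[best]
    return node, prob

print(predict_label(threshold=0.5))   # reaches the fine-grained class "jet"
print(predict_label(threshold=0.7))   # stops at the more general class "airplane"
```

With a stricter threshold the prediction falls back to the parent class, which is how a model can still say “airplane” even when it is unsure about the exact type.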

By combining the two datasets like this, we eventually end up with a model that is capable of predicting over 9000 object classes (9418 to be exact), hence the name YOLO9000.

YOLOv2 Architecture Implementation

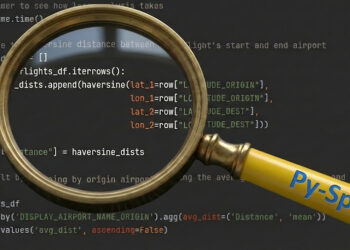

As promised earlier, in this section I am going to demonstrate how to implement the YOLOv2 architecture from scratch so that you can get a better understanding of how an input image eventually turns into a tensor containing bounding box and class predictions.

The first thing we need to do is import the required modules, as shown in Codeblock 1 below.

# Codeblock 1
import torch
import torch.nn as nn

Next, we create the ConvBlock class, which encapsulates the convolution layer itself, a batch normalization layer and a leaky ReLU activation function. The negative_slope parameter is set to 0.1 as shown at line #(1), which is exactly the same as the one used in YOLOv1.

# Codeblock 2
class ConvBlock(nn.Module):
    def __init__(self,
                 in_channels,
                 out_channels,
                 kernel_size,
                 padding):
        super().__init__()

        self.conv = nn.Conv2d(in_channels=in_channels,
                              out_channels=out_channels,
                              kernel_size=kernel_size,
                              padding=padding)
        self.bn = nn.BatchNorm2d(num_features=out_channels)
        self.leaky_relu = nn.LeakyReLU(negative_slope=0.1)    #(1)

    def forward(self, x):
        # The prints are only for the shape check in Codeblock 3; comment them
        # out before building larger models to keep the later outputs readable.
        print(f'original\t: {x.size()}')

        x = self.conv(x)
        print(f'after conv\t: {x.size()}')

        x = self.bn(x)    # apply the BN layer defined in __init__() (shape is unchanged)
        x = self.leaky_relu(x)
        print(f'after leaky relu: {x.size()}')

        return x

Just to check whether the above class works properly, here I test it with a very simple test case, where I initialize a ConvBlock instance that accepts an RGB image of size 416×416. You can see in the resulting output that the image now has 64 channels, showing that our ConvBlock works properly.

# Codeblock 3
convblock = ConvBlock(in_channels=3,
                      out_channels=64,
                      kernel_size=3,
                      padding=1)
x = torch.randn(1, 3, 416, 416)
out = convblock(x)

# Codeblock 3 Output
original         : torch.Size([1, 3, 416, 416])
after conv       : torch.Size([1, 64, 416, 416])
after leaky relu : torch.Size([1, 64, 416, 416])

Darknet-19 Implementation

Now let’s use this ConvBlock class to construct the Darknet-19 architecture. The way to do so is pretty straightforward, as all we need to do is stack multiple ConvBlock instances followed by a maxpooling layer according to the architecture in Figure 12. See the details in Codeblock 4a below. Note that the maxpooling layer for stage4 is placed at the beginning of stage5, as shown at the line marked with #(1). This is done because the output of stage4 will be fed directly into the passthrough layer without being downsampled. In addition, it is important to note that the term “stage” is not officially mentioned in the paper. Rather, it is just a term I personally use for the sake of this implementation.

# Codeblock 4a
class Darknet(nn.Module):
    def __init__(self):
        super(Darknet, self).__init__()

        self.stage0 = nn.ModuleList([
            ConvBlock(3, 32, 3, 1),
            nn.MaxPool2d(kernel_size=2, stride=2)
        ])

        self.stage1 = nn.ModuleList([
            ConvBlock(32, 64, 3, 1),
            nn.MaxPool2d(kernel_size=2, stride=2)
        ])

        self.stage2 = nn.ModuleList([
            ConvBlock(64, 128, 3, 1),
            ConvBlock(128, 64, 1, 0),
            ConvBlock(64, 128, 3, 1),
            nn.MaxPool2d(kernel_size=2, stride=2)
        ])

        self.stage3 = nn.ModuleList([
            ConvBlock(128, 256, 3, 1),
            ConvBlock(256, 128, 1, 0),
            ConvBlock(128, 256, 3, 1),
            nn.MaxPool2d(kernel_size=2, stride=2)
        ])

        self.stage4 = nn.ModuleList([
            ConvBlock(256, 512, 3, 1),
            ConvBlock(512, 256, 1, 0),
            ConvBlock(256, 512, 3, 1),
            ConvBlock(512, 256, 1, 0),
            ConvBlock(256, 512, 3, 1),
        ])

        self.stage5 = nn.ModuleList([
            nn.MaxPool2d(kernel_size=2, stride=2),    #(1)
            ConvBlock(512, 1024, 3, 1),
            ConvBlock(1024, 512, 1, 0),
            ConvBlock(512, 1024, 3, 1),
            ConvBlock(1024, 512, 1, 0),
            ConvBlock(512, 1024, 3, 1),
        ])

Now that all layers have been initialized, the next thing we do is connect them in the forward() method in Codeblock 4b below. Previously I said that we will take the output of stage4 as the input for the passthrough layer. To do so, I store the feature map produced by the last layer of stage4 in a separate variable which I refer to as x_stage4 (#(1)). We then do the same thing for the output of stage5 (#(2)) and return both x_stage4 and x_stage5 as the output of our Darknet (#(3)).

# Codeblock 4b
    def forward(self, x):
        print(f'original\t: {x.size()}')

        print()
        for i in range(len(self.stage0)):
            x = self.stage0[i](x)
            print(f'after stage0 #{i}\t: {x.size()}')

        print()
        for i in range(len(self.stage1)):
            x = self.stage1[i](x)
            print(f'after stage1 #{i}\t: {x.size()}')

        print()
        for i in range(len(self.stage2)):
            x = self.stage2[i](x)
            print(f'after stage2 #{i}\t: {x.size()}')

        print()
        for i in range(len(self.stage3)):
            x = self.stage3[i](x)
            print(f'after stage3 #{i}\t: {x.size()}')

        print()
        for i in range(len(self.stage4)):
            x = self.stage4[i](x)
            print(f'after stage4 #{i}\t: {x.size()}')

        x_stage4 = x.clone()    #(1)

        print()
        for i in range(len(self.stage5)):
            x = self.stage5[i](x)
            print(f'after stage5 #{i}\t: {x.size()}')

        x_stage5 = x.clone()    #(2)

        return x_stage4, x_stage5    #(3)

Next, I test the Darknet-19 model above by passing the same dummy image as the one in our previous test case.

# Codeblock 5
darknet = Darknet()

x = torch.randn(1, 3, 416, 416)
out = darknet(x)

# Codeblock 5 Output
original        : torch.Size([1, 3, 416, 416])

after stage0 #0 : torch.Size([1, 32, 416, 416])
after stage0 #1 : torch.Size([1, 32, 208, 208])

after stage1 #0 : torch.Size([1, 64, 208, 208])
after stage1 #1 : torch.Size([1, 64, 104, 104])

after stage2 #0 : torch.Size([1, 128, 104, 104])
after stage2 #1 : torch.Size([1, 64, 104, 104])
after stage2 #2 : torch.Size([1, 128, 104, 104])
after stage2 #3 : torch.Size([1, 128, 52, 52])

after stage3 #0 : torch.Size([1, 256, 52, 52])
after stage3 #1 : torch.Size([1, 128, 52, 52])
after stage3 #2 : torch.Size([1, 256, 52, 52])
after stage3 #3 : torch.Size([1, 256, 26, 26])

after stage4 #0 : torch.Size([1, 512, 26, 26])
after stage4 #1 : torch.Size([1, 256, 26, 26])
after stage4 #2 : torch.Size([1, 512, 26, 26])
after stage4 #3 : torch.Size([1, 256, 26, 26])
after stage4 #4 : torch.Size([1, 512, 26, 26])

after stage5 #0 : torch.Size([1, 512, 13, 13])
after stage5 #1 : torch.Size([1, 1024, 13, 13])
after stage5 #2 : torch.Size([1, 512, 13, 13])
after stage5 #3 : torch.Size([1, 1024, 13, 13])
after stage5 #4 : torch.Size([1, 512, 13, 13])
after stage5 #5 : torch.Size([1, 1024, 13, 13])

Here we can see that our output matches exactly with the architectural details in Figure 12, indicating that our implementation of the Darknet-19 model is correct.

The Entire YOLOv2 Architecture

Before actually constructing the entire YOLOv2 architecture, we need to initialize the parameters for the model first. Here we want every single cell to generate 5 anchor boxes, hence we set the NUM_ANCHORS variable to that number. Next, I set NUM_CLASSES to 20 because we assume that we want to train the model on the PASCAL VOC dataset.

# Codeblock 6
NUM_ANCHORS = 5
NUM_CLASSES = 20

Now it’s time to define the YOLOv2 class. In Codeblock 7a below, we first define the __init__() method, where we initialize the Darknet model (#(1)), a single ConvBlock for the passthrough layer (#(2)), a stack of two convolution layers which I refer to as stage6 (#(3)), and another stack of two convolution layers, the last of which maps the tensor into a prediction vector with B×(5+C) channels (#(4)).

# Codeblock 7a
class YOLOv2(nn.Module):
    def __init__(self):
        super().__init__()

        self.darknet = Darknet()                       #(1)

        self.passthrough = ConvBlock(512, 64, 1, 0)    #(2)

        self.stage6 = nn.ModuleList([                  #(3)
            ConvBlock(1024, 1024, 3, 1),
            ConvBlock(1024, 1024, 3, 1),
        ])

        self.stage7 = nn.ModuleList([
            ConvBlock(1280, 1024, 3, 1),
            ConvBlock(1024, NUM_ANCHORS*(5+NUM_CLASSES), 1, 0)    #(4)
        ])

Afterwards, we define the reorder() method, which we will use to process the feature map in the passthrough layer. The logic of the code below is fairly complicated, but the main idea is that it follows the principle given in Figure 10. Here I show the output shape of each line so that you can get a better understanding of how the process goes inside the function given an input tensor of shape 1×64×26×26, which represents a single feature map of size 26×26 with 64 channels. In the last step we can see that the final output tensor has the shape of 1×256×13×13. This shape matches our requirement exactly, where the channel dimension becomes 4 times larger than that of the input while the spatial dimension halves.

# Codeblock 7b
    def reorder(self, x, scale=2):                      # ([1, 64, 26, 26])
        B, C, H, W = x.shape
        h, w = H // scale, W // scale

        x = x.reshape(B, C, h, scale, w, scale)         # ([1, 64, 13, 2, 13, 2])
        x = x.transpose(3, 4)                           # ([1, 64, 13, 13, 2, 2])

        x = x.reshape(B, C, h * w, scale * scale)       # ([1, 64, 169, 4])
        x = x.transpose(2, 3)                           # ([1, 64, 4, 169])

        x = x.reshape(B, C, scale * scale, h, w)        # ([1, 64, 4, 13, 13])
        x = x.transpose(1, 2)                           # ([1, 4, 64, 13, 13])

        x = x.reshape(B, scale * scale * C, h, w)       # ([1, 256, 13, 13])

        return x

Next, Codeblock 7c below shows how we create the flow of the network. We start from the darknet backbone, which returns x_stage4 and x_stage5 (#(1)). The x_stage5 tensor is processed directly by the subsequent convolution layers which I refer to as stage6 (#(2)), while the x_stage4 tensor is passed to the passthrough layer (#(3)) and processed by the reorder() method (#(4)) we defined in Codeblock 7b above. Afterwards, we concatenate both tensors channel-wise at line #(5). This concatenated tensor is then processed further by another stack of convolution layers called stage7 (#(6)), which returns the prediction vector.

# Codeblock 7c
    def forward(self, x):
        print(f'original\t\t\t: {x.size()}')

        x_stage4, x_stage5 = self.darknet(x)        #(1)
        print(f'\nx_stage4\t\t\t: {x_stage4.size()}')
        print(f'x_stage5\t\t\t: {x_stage5.size()}')

        print()
        x = x_stage5
        for i in range(len(self.stage6)):
            x = self.stage6[i](x)                   #(2)
            print(f'x_stage5 after stage6 #{i}\t: {x.size()}')

        x_stage4 = self.passthrough(x_stage4)       #(3)
        print(f'\nx_stage4 after passthrough\t: {x_stage4.size()}')

        x_stage4 = self.reorder(x_stage4)           #(4)
        print(f'x_stage4 after reorder\t\t: {x_stage4.size()}')

        x = torch.cat([x_stage4, x], dim=1)         #(5)
        print(f'\nx after concatenate\t\t: {x.size()}')

        for i in range(len(self.stage7)):           #(6)
            x = self.stage7[i](x)
            print(f'x after stage7 #{i}\t: {x.size()}')

        return x

Again, to test the above code we will pass through a tensor of size 1×3×416×416.

# Codeblock 8
yolov2 = YOLOv2()
x = torch.randn(1, 3, 416, 416)

out = yolov2(x)

And below is what the output looks like after the code is run. The outputs labeled stage0 to stage5 are the processes within the Darknet backbone, which are exactly the same as the ones I showed you earlier in Codeblock 5 Output. Afterwards we can see in stage6 that the shape of the x_stage5 tensor does not change at all (#(1–3)). Meanwhile, the channel dimension of x_stage4 increased from 64 to 256 after being processed by the reorder() operation (#(4–5)). The tensor from the main flow is then concatenated with the one from the passthrough layer, which causes the number of channels in the resulting tensor to become 1024+256=1280 (#(6)). Finally, we pass the tensor to stage7, which returns a prediction tensor of size 125×13×13 (#(7)), denoting that we have 13×13 grid cells where every single one of those cells contains a prediction vector of length 125, storing the bounding box and object class predictions.

# Codeblock 8 Output
original                   : torch.Size([1, 3, 416, 416])

after stage0 #0            : torch.Size([1, 32, 416, 416])
after stage0 #1            : torch.Size([1, 32, 208, 208])

after stage1 #0            : torch.Size([1, 64, 208, 208])
after stage1 #1            : torch.Size([1, 64, 104, 104])

after stage2 #0            : torch.Size([1, 128, 104, 104])
after stage2 #1            : torch.Size([1, 64, 104, 104])
after stage2 #2            : torch.Size([1, 128, 104, 104])
after stage2 #3            : torch.Size([1, 128, 52, 52])

after stage3 #0            : torch.Size([1, 256, 52, 52])
after stage3 #1            : torch.Size([1, 128, 52, 52])
after stage3 #2            : torch.Size([1, 256, 52, 52])
after stage3 #3            : torch.Size([1, 256, 26, 26])

after stage4 #0            : torch.Size([1, 512, 26, 26])
after stage4 #1            : torch.Size([1, 256, 26, 26])
after stage4 #2            : torch.Size([1, 512, 26, 26])
after stage4 #3            : torch.Size([1, 256, 26, 26])
after stage4 #4            : torch.Size([1, 512, 26, 26])

after stage5 #0            : torch.Size([1, 512, 13, 13])
after stage5 #1            : torch.Size([1, 1024, 13, 13])
after stage5 #2            : torch.Size([1, 512, 13, 13])
after stage5 #3            : torch.Size([1, 1024, 13, 13])
after stage5 #4            : torch.Size([1, 512, 13, 13])
after stage5 #5            : torch.Size([1, 1024, 13, 13])

x_stage4                   : torch.Size([1, 512, 26, 26])
x_stage5                   : torch.Size([1, 1024, 13, 13])    #(1)

x_stage5 after stage6 #0   : torch.Size([1, 1024, 13, 13])    #(2)
x_stage5 after stage6 #1   : torch.Size([1, 1024, 13, 13])    #(3)

x_stage4 after passthrough : torch.Size([1, 64, 26, 26])      #(4)
x_stage4 after reorder     : torch.Size([1, 256, 13, 13])     #(5)

x after concatenate        : torch.Size([1, 1280, 13, 13])    #(6)
x after stage7 #0          : torch.Size([1, 1024, 13, 13])
x after stage7 #1          : torch.Size([1, 125, 13, 13])     #(7)

Ending

I think that’s pretty much everything about YOLOv2 and its model architecture implementation from scratch. The code used in this article is also available in my GitHub repository [6]. Please let me know if you spot any mistakes in my explanation or in the code. Thanks for reading, I hope you learned something new from this article. See ya in my next writing!

References

[1] Joseph Redmon and Ali Farhadi. YOLO9000: Better, Faster, Stronger. Arxiv. https://arxiv.org/abs/1612.08242 [Accessed August 9, 2025].

[2] Muhammad Ardi. YOLOv1 Paper Walkthrough: The Day YOLO First Saw the World. Medium. https://medium.com/ai-advances/yolov1-paper-walkthrough-the-day-yolo-first-saw-the-world-ccff8b60d84b [Accessed January 24, 2026].

[3] Muhammad Ardi. YOLOv1 Loss Function Walkthrough: Regression for All. Towards Data Science. https://towardsdatascience.com/yolov1-loss-function-walkthrough-regression-for-all/ [Accessed January 24, 2026].

[4] Image originally created by author, partially generated with Gemini

[5] Image originally created by author

[6] MuhammadArdiPutra. Better, Faster, Stronger — YOLOv2 and YOLO9000. GitHub. https://github.com/MuhammadArdiPutra/medium_articles/blob/main/Better%2C%20Faster%2C%20Stronger%20-%20YOLOv2%20and%20YOLO9000.ipynb [Accessed August 9, 2025].