AI for fun and profit!

Part 1: Creating the Knowledge Base

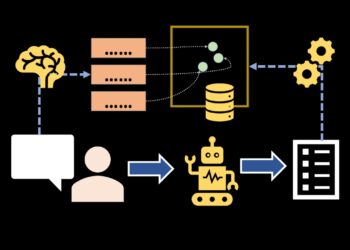

In this article, we’ll explore how to leverage large language models (LLMs) to search scientific papers from the PubMed Open Access Subset, a free resource for accessing biomedical and life sciences literature. We’ll use Retrieval-Augmented Generation (RAG) to search our digital library.

AWS Bedrock will act as our AI backend, PostgreSQL as the vector database for storing embeddings, and the LangChain library in Python will ingest papers and query the knowledge base.

If you only care about the results generated by querying the knowledge base, skip down to the end.

The specific use case we’ll be focusing on is querying papers related to Rheumatoid Arthritis, a chronic inflammatory disorder affecting the joints. We’ll use the query ((rheumatoid arthritis) AND gene) AND cell to retrieve around 10,000 relevant papers from PubMed and then sample that down to approximately 5,000 papers for our knowledge base.

Disclaimer

Not all research articles or sources have licensing that allows them to be ingested by AI!

I’m not including all of the source code, because the AI libraries change so frequently and because there are oodles of ways to configure a knowledge base backend, but I’ve included some helper functions so you can follow along.

PGVector: Storing Embeddings in a Vector Database

To make the textual data from the research papers searchable, we’ll convert the text into numerical embeddings, which are dense vector representations of the text. These embeddings will be stored in a PostgreSQL database using the pgvector extension, through LangChain's PGVector class. At query time, the question is embedded the same way, the most similar passages are retrieved, and only those passages are handed to the LLM as context.

I’m running a local PostgreSQL database, which is fine for my datasets. Hosting AWS Bedrock Knowledge Bases can get expensive, and I’m not trying to run up my AWS bill this month. It’s summer, and I’ve got kids’ camp to pay for!
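To make the retrieval step concrete, here is a minimal brute-force sketch of what a vector search does: score every stored embedding against the query embedding with cosine similarity and keep the best k. The three-dimensional vectors and chunk names are made up for illustration. pgvector performs the same kind of comparison inside PostgreSQL (its `<=>` operator returns cosine distance, which is 1 minus cosine similarity) and can use an index instead of scanning every row.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec: List[float], store: Dict[str, List[float]], k: int) -> List[Tuple[str, float]]:
    """Brute-force nearest neighbours: score every stored chunk, keep the best k."""
    scored = [(chunk_id, cosine_similarity(query_vec, vec)) for chunk_id, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings"; real Titan text embeddings have far more dimensions.
store = {
    "il17_chunk": [0.9, 0.1, 0.0],
    "gwas_chunk": [0.1, 0.9, 0.1],
    "treg_chunk": [0.7, 0.2, 0.3],
}
print(top_k([1.0, 0.0, 0.0], store, k=2))
```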

AWS Bedrock: The AI Backend

AWS Bedrock is a managed service offered by Amazon Web Services (AWS) that lets you easily deploy and operate large language models. In our setup, Bedrock will host the LLM that we’ll use to query and retrieve relevant information from our knowledge base of research papers.

LangChain: Loading and Querying the Knowledge Base

LangChain is a Python library that simplifies building applications with large language models. We’ll use LangChain to load our research papers and their associated embeddings into a knowledge base and then query this knowledge base using the LLM hosted on AWS Bedrock.

Using PubGet for Data Acquisition

While this setup can work with research papers from any source, we’re using PubMed because it’s a convenient source for acquiring a large number of papers based on specific search queries. We’ll use the PubGet tool to retrieve the initial set of 10,000 papers matching our query on Rheumatoid Arthritis, genes, and cells. Behind the scenes, pubget fetches articles from the PubMed FTP service.

```shell
pubget run -q "((rheumatoid arthritis) AND gene) AND cell" pubget_data
```

This will get us articles in XML format.

Structuring and Organizing the Dataset

Beyond the technical aspects, this article will focus on how to structure and organize your dataset of research papers effectively.

- Dataset: Managing your datasets at a global level using collections.

- Metadata Management: Handling and incorporating metadata associated with the papers, such as author information, publication dates, and keywords.

You’ll want to think about this upfront. When using LangChain, you query datasets based on their collections. Each collection has a name and a unique identifier.

When you load your data, whether it’s PDF papers, XML downloads, Markdown files, codebases, PowerPoint slides, text documents, etc., you can attach additional metadata. You can later use this metadata to filter your results. The metadata is an open dictionary, and you can add tags, source, phenotype, or anything else you think may be relevant.
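As an illustration of planning metadata up front, the sketch below derives a metadata dictionary from a pubget file path (the PMCID and the hashed query directory are both encoded in pubget's directory layout) and checks a record against a simple equality filter. `metadata_from_path` and `matches` are hypothetical helpers, not LangChain APIs, and the path prefix is a placeholder. With `langchain_postgres.PGVector` and `use_jsonb=True`, the equivalent filtering happens in the database through the `filter` argument of `similarity_search`.

```python
import re
from typing import Any, Dict, List, Optional

PMCID_RE = re.compile(r"pmcid_(\d+)")
QUERY_DIR_RE = re.compile(r"(query_[0-9a-f]+)")


def metadata_from_path(path: str, tags: Optional[List[str]] = None) -> Dict[str, Any]:
    """Build a metadata dict for one pubget article (hypothetical helper)."""
    metadata: Dict[str, Any] = {"source": path, "format": path.rsplit(".", 1)[-1].lower()}
    pmcid = PMCID_RE.search(path)
    if pmcid:
        metadata["pmcid"] = pmcid.group(1)
    query_dir = QUERY_DIR_RE.search(path)
    if query_dir:
        metadata["pubget_query"] = query_dir.group(1)
    metadata["tags"] = list(tags or [])
    return metadata


def matches(metadata: Dict[str, Any], **required: Any) -> bool:
    """True if every required key is present with an equal value."""
    return all(metadata.get(key) == value for key, value in required.items())


# Placeholder prefix; the rest mirrors pubget's articles/<xx>/pmcid_<id>/article.xml layout
path = ("/path/to/pubget_data/query_55c6003c0195b20fd4bdc411f67a8dcf"
        "/articles/d52/pmcid_11167034/article.xml")
meta = metadata_from_path(path, tags=["rheumatoid arthritis"])
print(meta["pmcid"], meta["format"], matches(meta, format="xml"))
```

A dictionary like this can be merged into each chunk's `metadata` before calling `add_texts`.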

Loading and Querying the Knowledge Base

The article will also cover best practices for loading your preprocessed and structured dataset into the knowledge base and provide examples of how to query the knowledge base effectively using the LLM hosted on AWS Bedrock.

By the end of this article, you should have a solid understanding of how to leverage LLMs to search and retrieve relevant information from a large corpus of research papers, as well as strategies for structuring and organizing your dataset to optimize the performance and accuracy of your knowledge base.

```python
import glob
import hashlib
import json
import logging
import os
import pprint
import uuid
from typing import Any, Dict, List, TypedDict

import boto3
import funcy
import psycopg
from langchain.llms.bedrock import Bedrock
from langchain.retrievers.bedrock import AmazonKnowledgeBasesRetriever
from langchain_aws import ChatBedrock
from langchain_community.document_loaders import (
    CSVLoader,
    DataFrameLoader,
    Docx2txtLoader,
    PyPDFLoader,
    TextLoader,
    UnstructuredEPubLoader,
    UnstructuredExcelLoader,
    UnstructuredMarkdownLoader,
    UnstructuredRSTLoader,
    UnstructuredXMLLoader,
    WebBaseLoader,
)
from langchain_community.embeddings import BedrockEmbeddings  # to create embeddings for the documents
from langchain_core.documents import Document
from langchain_experimental.text_splitter import SemanticChunker  # to split documents into smaller chunks
from langchain_postgres import PGVector
from langchain_text_splitters import CharacterTextSplitter
from pydantic import BaseModel, Field

# insert_embeddings() reports progress with logging.info, which is hidden by default
logging.basicConfig(level=logging.INFO)
```

Set up your database connection

I’m running a local Supabase PostgreSQL database using their docker-compose setup. In a production setup, I would recommend using a real database, like Amazon Aurora or Supabase running somewhere besides your laptop. Also, change your password to something besides password.

I didn’t notice any difference in performance for smaller datasets between an AWS-hosted knowledge base and my laptop, but your mileage may vary.

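```python
# Placeholder credentials for a local database; replace them with your own.
# PGUSER, PGPASSWORD, etc. are the standard libpq environment variable names.
user = os.environ.get("PGUSER", "postgres")
password = os.environ.get("PGPASSWORD", "password")
host = os.environ.get("PGHOST", "localhost")
port = os.environ.get("PGPORT", "5432")
database = os.environ.get("PGDATABASE", "postgres")

# SQLAlchemy-style URL, used by langchain_postgres.PGVector
connection = f"postgresql+psycopg://{user}:{password}@{host}:{port}/{database}"

# Establish a direct psycopg connection to the database (plain libpq URL)
conn = psycopg.connect(
    conninfo=f"postgresql://{user}:{password}@{host}:{port}/{database}"
)

# Create a cursor to run queries
cur = conn.cursor()
```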
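Once you change the password to something stronger, it may contain characters such as `@`, `:` or `/` that have special meaning in a connection URL and will break the f-strings in the connection setup. A minimal sketch that percent-encodes the credentials before building both URLs follows; `build_connection_urls` is a hypothetical helper and the credentials are made up. Alternatively, `psycopg.connect` also accepts libpq keyword arguments such as `user=` and `password=`, which avoids URL encoding for that connection.

```python
from typing import Tuple
from urllib.parse import quote


def build_connection_urls(user: str, password: str, host: str, port: int, database: str) -> Tuple[str, str]:
    """Return (SQLAlchemy URL for PGVector, libpq URL for psycopg).

    quote(..., safe="") percent-encodes every reserved character, so a password
    containing '@', ':' or '/' no longer breaks URL parsing.
    """
    auth = f"{quote(user, safe='')}:{quote(password, safe='')}"
    sqlalchemy_url = f"postgresql+psycopg://{auth}@{host}:{port}/{database}"
    libpq_url = f"postgresql://{auth}@{host}:{port}/{database}"
    return sqlalchemy_url, libpq_url


# Hypothetical credentials, for illustration only
sqlalchemy_url, libpq_url = build_connection_urls("postgres", "p@ss:w/rd", "localhost", 5432, "postgres")
print(sqlalchemy_url)
```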

Insert AWS Bedrock embeddings into the table using LangChain

We’re using AWS Bedrock as our AI backend. Most of the companies I work with have some kind of proprietary data, and Bedrock guarantees that your data will remain private. You could use any of the AI backends here.

```python
os.environ["AWS_DEFAULT_REGION"] = "us-east-1"

bedrock_client = boto3.client("bedrock-runtime")
bedrock_embeddings = BedrockEmbeddings(model_id="amazon.titan-embed-text-v1", client=bedrock_client)
bedrock_embeddings_image = BedrockEmbeddings(model_id="amazon.titan-embed-image-v1", client=bedrock_client)
llm = ChatBedrock(model_id="anthropic.claude-3-sonnet-20240229-v1:0", client=bedrock_client)


# Function to create the vector store.
# Make sure to update this if you change collections!
def create_vectorstore(embeddings, collection_name, conn):
    vectorstore = PGVector(
        embeddings=embeddings,
        collection_name=collection_name,
        connection=conn,
        use_jsonb=True,
    )
    return vectorstore


def load_and_split_pdf_semantic(file_path, embeddings):
    # Despite the name, this uses LangChain's default text splitter;
    # `embeddings` is unused unless you swap in SemanticChunker.
    loader = PyPDFLoader(file_path)
    pages = loader.load_and_split()
    return pages


def load_xml(file_path, embeddings):
    loader = UnstructuredXMLLoader(file_path)
    docs = loader.load_and_split()
    return docs


def insert_embeddings(files, bedrock_embeddings, vectorstore):
    logging.info(f"Inserting {len(files)}")
    y = len(files)
    for x, file_path in enumerate(files, start=1):
        logging.info(f"Splitting {file_path} {x}/{y}")
        docs = []
        if file_path.endswith(".pdf"):
            try:
                with funcy.print_durations("process pdf"):
                    docs = load_and_split_pdf_semantic(file_path, bedrock_embeddings)
            except Exception as e:
                logging.warning(f"Error loading docs from {file_path}: {e}")
        if file_path.endswith(".xml"):
            try:
                with funcy.print_durations("process xml"):
                    docs = load_xml(file_path, bedrock_embeddings)
            except Exception as e:
                logging.warning(f"Error loading docs from {file_path}: {e}")

        # Skip empty chunks
        filtered_docs = [d for d in docs if len(d.page_content)]

        # Use a SHA-256 hash of each chunk's text as its ID
        ids = [hashlib.sha256(d.page_content.encode()).hexdigest() for d in filtered_docs]

        # Add documents to the vector store
        if len(filtered_docs):
            texts = [d.page_content for d in filtered_docs]
            # metadata is a dictionary. You can add to it!
            metadatas = [d.metadata for d in filtered_docs]
            try:
                with funcy.print_durations("load psql"):
                    vectorstore.add_texts(texts=texts, metadatas=metadatas, ids=ids)
            except Exception as e:
                logging.warning(e)
                logging.warning(f"Error {x}/{y}")


collection_name_text = "MY_COLLECTION"  # pubmed, smiles, etc.
vectorstore = create_vectorstore(bedrock_embeddings, collection_name_text, connection)
```
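The `insert_embeddings` helper uses a SHA-256 hash of each chunk's text as its ID, which makes re-running ingestion idempotent: the same chunk maps to the same row. One catch is that a single paper can contain identical chunks (repeated boilerplate, for example), giving duplicate IDs within one batch. If the store writes that batch as a single `INSERT ... ON CONFLICT DO UPDATE` (an assumption about the backend's internals), PostgreSQL rejects it with "ON CONFLICT DO UPDATE command cannot affect row a second time". A minimal sketch of deduplicating before calling `add_texts`, with `dedupe_chunks` as a hypothetical helper:

```python
import hashlib
from typing import List, Set, Tuple


def dedupe_chunks(texts: List[str], metadatas: List[dict]) -> Tuple[List[str], List[dict], List[str]]:
    """Drop empty and repeated chunks, returning (texts, metadatas, ids).

    IDs are SHA-256 digests of the chunk text, so re-ingesting the same file
    produces the same IDs instead of new rows.
    """
    seen: Set[str] = set()
    out_texts: List[str] = []
    out_metas: List[dict] = []
    out_ids: List[str] = []
    for text, meta in zip(texts, metadatas):
        if not text.strip():
            continue  # skip empty or whitespace-only chunks
        chunk_id = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if chunk_id in seen:
            continue  # identical text already queued in this batch; keep the first
        seen.add(chunk_id)
        out_texts.append(text)
        out_metas.append(meta)
        out_ids.append(chunk_id)
    return out_texts, out_metas, out_ids


texts, metas, ids = dedupe_chunks(["Intro", "Intro", "Methods"], [{"page": 0}, {"page": 1}, {"page": 2}])
print(texts, metas)
```

Because the hash ignores metadata, identical text from two different papers still collapses into one row; hashing the source path together with the text keeps them separate.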

Load and Process PubMed XML Papers

Most of our data was fetched using the pubget tool, and the articles are in XML format. We'll use the LangChain XML loader to process, split, and load the embeddings.

```python
files = glob.glob("/home/jovyan/data/pubget_ra/pubget_data/*/articles/*/*/article.xml")

# I ran this previously
insert_embeddings(files[0:2], bedrock_embeddings, vectorstore)
```
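The introduction mentions sampling the roughly 10,000 pubget articles down to about 5,000, but the sampling code isn't shown. One reproducible way, assuming a simple uniform random sample is acceptable, is to sort the globbed paths (glob's ordering depends on the file system) and sample with a fixed seed. `sample_files` is a hypothetical helper and the file names below are synthetic:

```python
import random
from typing import List


def sample_files(files: List[str], n: int, seed: int = 42) -> List[str]:
    """Reproducibly pick up to n files (hypothetical helper)."""
    ordered = sorted(files)  # glob order is not guaranteed, so sort first
    if n >= len(ordered):
        return ordered
    return sorted(random.Random(seed).sample(ordered, n))


files = [f"article_{i:05d}.xml" for i in range(10_000)]
subset = sample_files(files, 5_000)
print(len(subset), subset[:3])
```

In the notebook this would look like `insert_embeddings(sample_files(files, 5000), bedrock_embeddings, vectorstore)`; the fixed seed means a rerun ingests the same papers.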

Load and Process PubMed PDF Papers

PDFs are easier to read, and I grabbed some for doing QA against the knowledge base.

```python
files = glob.glob("/home/jovyan/data/pubget_ra/papers/*pdf")
insert_embeddings(files[0:2], bedrock_embeddings, vectorstore)
```

Part 2: Query the Knowledge Base

Now that we have our knowledge base set up, we can use Retrieval-Augmented Generation (RAG) techniques to run queries with the LLMs.
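Conceptually, the generation half of RAG is simple: the retrieved chunks are pasted into the prompt alongside the question. This is the "stuff" strategy that LangChain's `create_stuff_documents_chain` implements. The plain-Python sketch shows the idea, numbering each source so the model can cite it; the prompt wording and the `build_rag_prompt` name are my own assumptions, not the prompt used by the wrapper in this article.

```python
from typing import Dict, List


def build_rag_prompt(question: str, chunks: List[Dict[str, str]]) -> str:
    """Stuff retrieved chunks into one prompt, numbering each so it can be cited."""
    context_blocks = [
        f"[{i}] (source: {chunk['source']})\n{chunk['text']}"
        for i, chunk in enumerate(chunks, start=1)
    ]
    context = "\n\n".join(context_blocks)
    return (
        "Answer the question using only the context below. "
        "Cite sources by their [number]. If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


example_chunks = [
    {"source": "article.xml", "text": "IL-17 is elevated in RA synovial fluid."},
    {"source": "paper.pdf", "text": "Treg function is impaired in RA."},
]
print(build_rag_prompt("Tell me about IL-17 in rheumatoid arthritis.", example_chunks))
```

The numbered `[source]` labels are what make answers with citations, like the first query below, possible.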

Our queries are:

- Tell me about T cell–derived cytokines in relation to rheumatoid arthritis and provide citations and article titles.

- Tell me about single-cell research in rheumatoid arthritis.

- Tell me about protein-protein associations in rheumatoid arthritis.

- Tell me about the findings of GWAS studies in rheumatoid arthritis.

```python
import glob
import hashlib
import json
import logging
import os
import time
from pprint import pprint
from typing import Any, Dict, List, Optional

import boto3
import funcy
import numpy as np
import pandas as pd
import psycopg
from IPython.display import Markdown, display
from langchain.chains import create_retrieval_chain
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain.prompts import PromptTemplate
from langchain.retrievers.bedrock import (
    AmazonKnowledgeBasesRetriever,
    RetrievalConfig,
    VectorSearchConfig,
)
from rich.logging import RichHandler
from sklearn.model_selection import train_test_split
from toolz.itertoolz import partition_all

# Helper wrappers used in this article; their source isn't included here
from aws_bedrock_utilities.models.base import BedrockBase, RAGResults
from aws_bedrock_utilities.models.pgvector_knowledgebase import BedrockPGWrapper
```

I don’t list it here, but I always do some QA against my knowledge base. Choose an article, parse out the summary or findings, and ask the LLM about it. You should get your article back.

Structuring Your Queries

First, you’ll need the name of the collection you’re querying, along with your queries.

I always recommend running multiple QA queries. Ask the obvious questions in several different ways.

You’ll also want to adjust MAX_DOCS_RETURNED based on your time constraints and how many articles are in your knowledge base. It is passed to the retriever as `k`, the maximum number of chunks handed to the LLM as context; retrieval stops once it has that many. Increase it for a more exhaustive search, at the cost of longer and slower prompts.
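A minimal sketch of that QA loop, assuming the pubget `article.xml` files are PubMed Central JATS XML (where the title lives under `article-meta/title-group/article-title` and the abstract under `article-meta/abstract`): extract the title and abstract, turn the abstract into a question, and check whether the original file shows up among the returned sources. `title_and_abstract`, `qa_question`, and `found_in_sources` are hypothetical helpers.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def _flat_text(el) -> str:
    # itertext() walks nested tags (<italic>, <p>, <sec>); collapse the whitespace
    return " ".join(" ".join(el.itertext()).split()) if el is not None else ""


def title_and_abstract(xml_text: str) -> Dict[str, str]:
    """Pull the article title and abstract text out of a JATS XML string."""
    root = ET.fromstring(xml_text)
    title_el = root.find(".//article-meta/title-group/article-title")
    abstract_el = root.find(".//article-meta/abstract")
    return {"title": _flat_text(title_el), "abstract": _flat_text(abstract_el)}


def qa_question(meta: Dict[str, str]) -> str:
    """Ask the knowledge base to identify an article from its own findings."""
    return f"Which article reports the following findings? {meta['abstract']}"


def found_in_sources(expected_source: str, source_metadatas: List[dict]) -> bool:
    """True if the expected file appears among the retrieved chunks' metadata."""
    return any(m.get("source") == expected_source for m in source_metadatas)
```

In practice you would pass `qa_question(...)` to `p.run_kb_chat` and then check `found_in_sources(path, [d.metadata for d in answer["source_documents"]])`.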
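The `search_kwargs` in the code that follows look like LangChain's retriever options: `k` is how many chunks come back, and `fetch_k` is how many candidates are pulled first when the retriever uses maximal marginal relevance (MMR) search. Whether `BedrockPGWrapper` uses MMR isn't shown, so treat that as an assumption. MMR re-ranks the `fetch_k` candidates so the final `k` are relevant but not near-duplicates of one another. Below is a small pure-Python sketch of that selection rule, with `lambda_mult` weighting relevance against redundancy as in LangChain; with a `fetch_k` as large as 50,000, every candidate embedding has to be fetched and compared, which can slow queries noticeably.

```python
import math
from typing import List


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mmr(query: List[float], candidates: List[List[float]], k: int, lambda_mult: float = 0.5) -> List[int]:
    """Maximal marginal relevance: pick up to k candidate indices, trading off
    similarity to the query against similarity to chunks already picked."""
    selected: List[int] = []
    remaining = list(range(len(candidates)))

    def score(i: int) -> float:
        relevance = cosine(query, candidates[i])
        # Similarity to the closest already-selected chunk (0 when nothing is selected yet)
        redundancy = max((cosine(candidates[i], candidates[j]) for j in selected), default=0.0)
        return lambda_mult * relevance - (1 - lambda_mult) * redundancy

    while remaining and len(selected) < k:
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Candidates 0 and 1 are near-duplicates; MMR prefers the more distinct candidate 2.
print(mmr([1.0, 1.0], [[1.0, 0.9], [1.0, 0.85], [0.2, 1.0]], k=2))
```

With `lambda_mult=1.0` the redundancy term vanishes and this reduces to plain top-k similarity search.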

```python
# Make sure to keep the collection name consistent!
COLLECTION_NAME = "MY_COLLECTION"
MAX_DOCS_RETURNED = 50

p = BedrockPGWrapper(collection_name=COLLECTION_NAME)

# model = "anthropic.claude-3-sonnet-20240229-v1:0"
model = "anthropic.claude-3-haiku-20240307-v1:0"

queries = [
    "Tell me about T cell–derived cytokines in relation to rheumatoid arthritis and provide citations and article titles",
    "Tell me about single-cell research in rheumatoid arthritis.",
    "Tell me about protein-protein associations in rheumatoid arthritis.",
    "Tell me about the findings of GWAS studies in rheumatoid arthritis.",
]

ai_responses = []
for query in queries:
    answer = p.run_kb_chat(
        query=query,
        collection_name=COLLECTION_NAME,
        model_id=model,
        search_kwargs={"k": MAX_DOCS_RETURNED, "fetch_k": 50000},
    )
    ai_responses.append(answer)
    time.sleep(1)  # small pause between Bedrock calls

for answer in ai_responses:
    t = Markdown(f"""
### Query

{answer['query']}

### Response

{answer['result']}
""")
    display(t)
```

Part 3: Results!

We’ve built our knowledge base, run some queries, and now we’re ready to look at the results the LLM generated for us.

Each result is a dictionary with the original query, the response, and the relevant snippets of the source documents.

Tell me about T cell–derived cytokines in relation to rheumatoid arthritis and provide citations and article titles

T cell-derived cytokines play a key role in the pathogenesis of rheumatoid arthritis (RA). Some key findings include:

Elevated levels of IL-17, a cytokine produced by Th17 cells, have been found in the synovial fluid of RA patients. IL-17 can stimulate fibroblast-like synoviocytes (FLS) and macrophages to produce inflammatory mediators like VEGF, IL-1, IL-6, TNF-α, and prostaglandin E2, and can promote osteoclast formation, contributing to joint inflammation and destruction (Honorati et al. 2006, Schurgers et al. 2011).

Th1 cells, which produce IFN-γ, are also implicated in RA pathogenesis. IFN-γ can induce macrophages to polarize towards a pro-inflammatory M1 phenotype (Schurgers et al. 2011, Kebir et al. 2009, Boniface et al. 2010).

CD161+ Th17 cells, which can produce both IL-17 and IFN-γ, are enriched in the synovium of RA patients and may contribute to the inflammatory environment (Afzali et al. 2013, Bovenschen et al. 2011, Koenen et al. 2008, Pesenacker et al. 2013).

Regulatory T cells (Tregs), which normally suppress inflammation, show impaired function in RA, potentially contributing to the dysregulated immune response (Moradi et al. 2014, Samson et al. 2012, Zhang et al. 2018, Walter et al. 2013, Wang et al. 2018, Morita et al. 2016).

In summary, the imbalance between pro-inflammatory T cell subsets (Th1, Th17) and anti-inflammatory Tregs is a hallmark of RA pathogenesis, with cytokines like IL-17 and IFN-γ playing central roles in driving joint inflammation and destruction.

Tell me about single-cell research in rheumatoid arthritis.

Single-cell research has provided important insights into the pathogenesis of rheumatoid arthritis (RA):

Single-cell RNA sequencing (scRNA-seq) studies have identified distinct cell states and subpopulations within the RA synovium, including pathogenic T cell subsets like T peripheral helper (Tph) cells and cytotoxic CD8+ T cells.

Analyses of the T cell receptor (TCR) repertoire in the RA synovium have revealed clonal expansion of CD4+ and CD8+ T cell populations, suggesting antigen-driven responses.

scRNA-seq has also characterized expanded populations of activated B cells, plasmablasts, and plasma cells in the RA synovium that exhibit substantial clonal relationships.

Receptor-ligand analyses of scRNA-seq data have predicted key cell-cell interactions, such as those between Tph cells and B cells, that may drive synovial inflammation.

Overall, single-cell studies have uncovered the cellular and molecular heterogeneity within the RA synovium, identifying specific immune cell subsets and pathways that could serve as targets for more personalized therapeutic approaches.

Tell me about protein-protein associations in rheumatoid arthritis.

Based on the information provided in the context, some key protein-protein associations in rheumatoid arthritis (RA) include:

Rheumatoid factor (RF) and anti-citrullinated protein antibodies (ACPAs):

RF is found in about 80% of patients in the pre-articular phase of RA.

ACPAs are highly specific for RA and can be detected years before the onset of clinical symptoms.

Peptidylarginine deiminase (PAD) enzymes and anti-PAD antibodies:

Anti-PAD2 antibodies are associated with a moderate disease course, while anti-PAD4 antibodies are linked to more severe and rapidly progressive RA.

Anti-PAD3/4 antibodies may signal the development of RA-associated interstitial lung disease.

Anti-carbamylated protein (anti-CarP) antibodies:

Anti-CarP antibodies are present in 25–50% of RA patients, independent of RF or ACPA positivity.

Anti-CarP antibodies are associated with poor prognosis and increased morbidity, including RA-associated interstitial lung disease.

Malondialdehyde-acetaldehyde (MAA) adducts and anti-MAA antibodies:

Anti-MAA antibodies are associated with radiological progression in seronegative RA.

Protein-protein interactions in signaling pathways:

The JAK-STAT, MAPK, PI3K-AKT, and SYK signaling pathways are all implicated in the pathogenesis of RA and are potential targets for therapeutic intervention.

In summary, the context highlights several key protein-protein associations in RA, including autoantibodies (RF, ACPAs, anti-PAD, anti-CarP, anti-MAA) and signaling pathway components (JAK, STAT, MAPK, PI3K, SYK), which play important roles in the pathogenesis and progression of the disease.

Tell me about the findings of GWAS studies in rheumatoid arthritis.

Here are some key findings from GWAS studies in rheumatoid arthritis:

Genome-wide association studies (GWAS) have identified over 100 genetic risk loci associated with rheumatoid arthritis susceptibility.

The HLA-DRB1 gene is the strongest genetic risk factor, accounting for about 50% of the genetic component of rheumatoid arthritis. Specific HLA-DRB1 alleles containing the “shared epitope” sequence are strongly associated with increased RA risk.

Other notable genetic risk factors identified through GWAS include PTPN22, STAT4, CCR6, PADI4, CTLA4, and CD40. These genes are involved in immune regulation and inflammation pathways.

Genetic risk factors can differ between seropositive (ACPA-positive) and seronegative rheumatoid arthritis. For example, HLA-DRB1 alleles have a stronger association with seropositive RA.

GWAS have also identified genetic variants associated with disease severity and response to treatment in rheumatoid arthritis. For example, variants in the FCGR3A and PTPRC genes have been linked to response to anti-TNF therapy.

Overall, GWAS have provided important insights into the genetic architecture and pathogenesis of rheumatoid arthritis, which has implications for developing targeted therapies and personalized treatment approaches.

Check the Source Documents

Querying the knowledge base will return relevant snippets of the source documents. Sometimes the formatting returned by LangChain can be a bit off, but you can always go back to the source.

```python
# Print the metadata of the first few source documents (x runs 0..y, so 11 here)
x = 0
y = 10
for answer in ai_responses:
    for s in answer["source_documents"]:
        if x <= y:
            print(s.metadata)
        else:
            break
        x = x + 1
```

```
{'page': 6, 'source': '/home/jovyan/data/pubget_ra/papers/fimmu-12-790122.pdf'}
{'source': '/home/jovyan/data/pubget_ra/pubget_data/query_55c6003c0195b20fd4bdc411f67a8dcf/articles/d52/pmcid_11167034/article.xml'}
{'page': 7, 'source': '/home/jovyan/data/pubget_ra/papers/fimmu-12-790122.pdf'}
{'page': 17, 'source': '/home/jovyan/data/pubget_ra/papers/fimmu-12-790122.pdf'}
{'source': '/home/jovyan/data/pubget_ra/pubget_data/query_55c6003c0195b20fd4bdc411f67a8dcf/articles/657/pmcid_11151399/article.xml'}
{'page': 3, 'source': '/home/jovyan/data/pubget_ra/papers/41392_2023_Article_1331.pdf'}
{'page': 4, 'source': '/home/jovyan/data/pubget_ra/papers/41392_2023_Article_1331.pdf'}
{'page': 5, 'source': '/home/jovyan/data/pubget_ra/papers/fimmu-12-790122.pdf'}
{'source': '/home/jovyan/data/pubget_ra/pubget_data/query_55c6003c0195b20fd4bdc411f67a8dcf/articles/e02/pmcid_11219584/article.xml'}
{'page': 8, 'source': '/home/jovyan/data/pubget_ra/papers/fimmu-12-790122.pdf'}
{'source': '/home/jovyan/data/pubget_ra/pubget_data/query_55c6003c0195b20fd4bdc411f67a8dcf/articles/6fb/pmcid_11203675/article.xml'}
```
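Printing raw metadata gets noisy once several answers cite the same paper. A small, hypothetical helper that counts retrieved chunks per source file and lists the pages cited for PDFs (the sample dictionaries mirror the metadata shape printed above):

```python
from collections import Counter
from typing import Dict, Iterable, List, Set


def summarize_sources(metadatas: Iterable[Dict]) -> List[str]:
    """Count retrieved chunks per source file and list any cited pages."""
    counts: Counter = Counter()
    pages: Dict[str, Set[int]] = {}
    for meta in metadatas:
        source = meta.get("source", "<unknown>")
        counts[source] += 1
        if "page" in meta:
            pages.setdefault(source, set()).add(meta["page"])
    lines = []
    # most_common() keeps first-seen order for ties
    for source, n in counts.most_common():
        page_list = sorted(pages.get(source, ()))
        suffix = f" (pages {page_list})" if page_list else ""
        lines.append(f"{n} chunk(s): {source}{suffix}")
    return lines


sample = [
    {"page": 6, "source": "fimmu-12-790122.pdf"},
    {"source": "pmcid_11167034/article.xml"},
    {"page": 7, "source": "fimmu-12-790122.pdf"},
]
print("\n".join(summarize_sources(sample)))
```

Against the real results you would call `summarize_sources(d.metadata for answer in ai_responses for d in answer["source_documents"])`. As far as I know, PyPDFLoader's `page` values are zero-based, so add 1 before looking a page up in a PDF viewer.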

Wrap Up

There you have it! We created a knowledge base on the cheap, used AWS Bedrock to generate the embeddings, and then used a Claude LLM to run our queries. Here we used PubMed papers, but we could also have used meeting notes, PowerPoint slides, crawled websites, or in-house databases.

If you have any questions, comments, or tutorial requests, please don’t hesitate to reach out to me by email at jillian@dabbleofdevops.com

Using LLMs to Query PubMed Knowledge Bases for BioMedical Research was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.