A framework to select the simplest, fastest, cheapest architecture that will balance LLMs’ creativity and risk

Look at any LLM tutorial and the suggested usage involves invoking the API, sending it a prompt, and using the response. Suppose you want the LLM to generate a thank-you note, you could do:

import openai

recipient_name = "John Doe"
reason_for_thanks = "helping me with the project"
tone = "professional"

prompt = f"Write a thank you message to {recipient_name} for {reason_for_thanks}. Use a {tone} tone."

# Legacy Completions API (openai<1.0); the model is passed by keyword.
response = openai.Completion.create(model="text-davinci-003", prompt=prompt, n=1)
email_body = response.choices[0].text

While this is fine for PoCs, rolling to production with an architecture that treats an LLM as just another text-to-text (or text-to-image/audio/video) API results in an application that is under-engineered in terms of risk, cost, and latency.

The solution is not to go to the other extreme and over-engineer your application by fine-tuning the LLM and adding guardrails, etc. every time. The goal, as with any engineering project, is to find the right balance of complexity, fit-for-purpose, risk, cost, and latency for the specifics of each use case. In this article, I’ll describe a framework that will help you strike this balance.

The framework of LLM application architectures

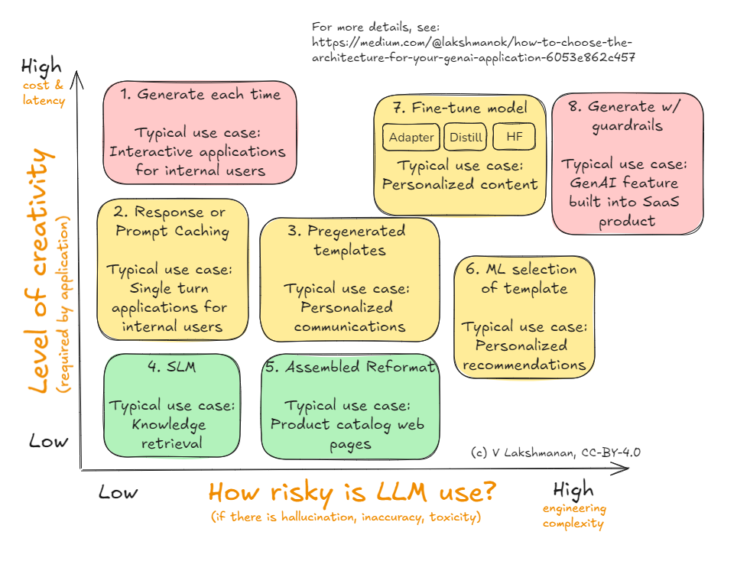

Here’s a framework that I suggest you use to decide on the architecture for your GenAI application or agent. I’ll cover each of the eight alternatives shown in the figure in the sections that follow.

The axes here (i.e., the decision criteria) are risk and creativity. For each use case where you are going to employ an LLM, start by identifying the creativity you need from the LLM and the amount of risk that the use case carries. This helps you narrow down the choice that strikes the right balance for you.

Note that whether or not to use Agentic Systems is a completely orthogonal decision to this: employ agentic systems when the task is too complex to be done by a single LLM call or if the task requires non-LLM capabilities. In such a situation, you’d break down the complex task into simpler tasks and orchestrate them in an agent framework. This article shows you how to build a GenAI application (or an agent) to perform one of those simple tasks.

Why the 1st decision criterion is creativity

Why are creativity and risk the axes? LLMs are a non-deterministic technology and are more trouble than they are worth if you don’t really need all that much uniqueness in the content being created.

For example, if you are generating a bunch of product catalog pages, how different do they really have to be? Your customers want accurate information on the products and may not really care that all SLR camera pages explain the benefits of SLR technology in the same way. In fact, some amount of standardization may be quite preferable for easy comparisons. This is a case where your creativity requirement on the LLM is quite low.

It turns out that architectures that reduce the non-determinism also reduce the total number of calls to the LLM, and so also have the side-effect of reducing the overall cost of using the LLM. Since LLM calls are slower than the typical web service, this also has the nice side-effect of reducing the latency. That’s why the y-axis is creativity, and why we have cost and latency also on that axis.

You could look at the illustrative use cases listed in the diagram above and argue whether they require low creativity or high. It really depends on your business problem. If you are a magazine or ad agency, even your informative content web pages (unlike the product catalog pages) may need to be creative.

Why the 2nd decision criterion is risk

LLMs have a tendency to hallucinate details and to reflect biases and toxicity in their training data. Given this, there are risks associated with directly sending LLM-generated content to end-users. Solving for this problem adds a lot of engineering complexity: you might have to introduce a human-in-the-loop to review content, or add guardrails to your application to validate that the generated content doesn’t violate policy.

If your use case allows end-users to send prompts to the model and the application takes actions on the backend (a common situation in many SaaS products) to generate a user-facing response, the risk associated with errors, hallucination, and toxicity is quite high.

The same use case (art generation) could carry different levels and kinds of risk depending on the context as shown in the figure below. For example, if you are generating background instrumental music to a movie, the risk associated might involve mistakenly reproducing copyrighted notes, whereas if you are generating ad images or videos broadcast to millions of users, you may be worried about toxicity. These different types of risk are associated with different levels of risk. As another example, if you are building an enterprise search application that returns document snippets from your corporate document store or technology documentation, the LLM-associated risks might be quite low. If your document store consists of medical textbooks, the risk associated with out-of-context content returned by a search application might be high.

As with the list of use cases ordered by creativity, you can quibble with the ordering of use cases by risk. But once you identify the risk associated with the use case and the creativity it requires, the suggested architecture is worth considering as a starting point. Then, if you understand the “why” behind each of these architectural patterns, you can select an architecture that balances your needs.

In the rest of this article, I’ll describe the architectures, starting from #1 in the diagram.

1. Generate each time (for High Creativity, Low Risk tasks)

This is the architectural pattern that serves as the default: invoke the API of the deployed LLM each time you want generated content. It’s the simplest, but it also involves making an LLM call each time.

Typically, you’ll use a PromptTemplate and templatize the prompt that you send to the LLM based on run-time parameters. It’s a good idea to use a framework that allows you to swap out the LLM.

For our example of sending an email based on the prompt, we could use langchain:

from langchain_core.prompts import PromptTemplate

prompt_template = PromptTemplate.from_template(
    """
    You are an AI executive assistant to {sender_name} who writes letters on behalf of the executive.
    Write a 3-5 sentence thank you message to {recipient_name} for {reason_for_thanks}.
    Extract the first name from {sender_name} and sign the message with just the first name.
    """
)

...

response = chain.invoke({
    "recipient_name": "John Doe",
    "reason_for_thanks": "speaking at our Data Conference",
    "sender_name": "Jane Brown",
})

Because you are calling the LLM each time, it’s appropriate only for tasks that require extremely high creativity (e.g., you want a different thank you note each time) and where you are not worried about the risk (e.g., if the end-user gets to read and edit the note before hitting “send”).

A common situation where this pattern is employed is for interactive applications (so it needs to respond to all kinds of prompts) meant for internal users (so low risk).

2. Response/Prompt caching (for Medium Creativity, Low Risk tasks)

You probably don’t want to send the same thank you note again to the same person. You want it to be different each time.

But what if you are building a search engine on your past tickets, such as to assist internal customer support teams? In such cases, you do want repeat questions to generate the same answer each time.

A way to drastically reduce cost and latency is to cache past prompts and responses. You can do such caching on the client side using langchain:

from langchain_core.caches import InMemoryCache
from langchain_core.globals import set_llm_cache

# Cache LLM responses in memory, keyed on the exact prompt text.
set_llm_cache(InMemoryCache())

prompt_template = PromptTemplate.from_template(
    """
    What are the steps to put a freeze on my credit card account?
    """
)

# model and parser are assumed to be defined already.
chain = prompt_template | model | parser

When I tried it, the cached response took 1/1000th of the time and avoided the LLM call completely.

Caching is useful beyond client-side caching of exact text inputs and the corresponding responses (see Figure below). Anthropic supports “prompt caching” whereby you can ask the model to cache part of a prompt (typically the system prompt and repetitive context) server-side, while continuing to send it new instructions in each subsequent query. Using prompt caching reduces cost and latency per query while not affecting the creativity. It is particularly helpful in RAG, document extraction, and few-shot prompting when the examples get large.

Gemini separates out this functionality into context caching (which reduces the cost and latency) and system instructions (which don’t reduce the token count, but do reduce latency). OpenAI recently announced support for prompt caching, with its implementation automatically caching the longest prefix of a prompt that was previously sent to the API, as long as the prompt is longer than 1024 tokens. Server-side caches like these do not reduce the capability of the model, only the latency and/or cost, as you will continue to potentially get different results to the same text prompt.

The built-in caching methods require exact text match. However, it is possible to implement caching in a way that takes advantage of the nuances of your case. For example, you could rewrite prompts to canonical forms to increase the chances of a cache hit. Another common trick is to store the hundred most frequent questions; for any question that is close enough, you could rewrite the prompt to ask the stored question instead. In a multi-turn chatbot, you could get user confirmation on such semantic similarity. Semantic caching techniques like this will reduce the capability of the model somewhat, since you will get the same responses to even similar prompts.
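The two tricks above (canonical forms and matching against stored frequent questions) can be sketched with the standard library alone. This is a simplified illustration: the stored questions and answers are made up, and string similarity from `difflib` stands in for the embedding-based similarity that a real semantic cache would more likely use.

```python
import difflib
import re
from typing import Optional

# Hypothetical store of frequent questions (already canonicalized) and their answers.
FREQUENT_QA = {
    "how do i freeze my credit card": "Open the app, choose Cards, then tap Freeze.",
    "how do i reset my password": "Use the 'Forgot password' link on the sign-in page.",
}

def canonicalize(prompt: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace."""
    text = re.sub(r"[^a-z0-9\s]", "", prompt.lower())
    return " ".join(text.split())

def lookup(prompt: str, threshold: float = 0.8) -> Optional[str]:
    """Return the answer for the closest stored question, or None on a cache miss."""
    canonical = canonicalize(prompt)
    matches = difflib.get_close_matches(
        canonical, list(FREQUENT_QA), n=1, cutoff=threshold
    )
    return FREQUENT_QA[matches[0]] if matches else None
```

On a miss (`None`), the application would fall through to a regular LLM call. The threshold controls the trade-off: a lower value yields more cache hits but makes it more likely that a genuinely different question receives a stored answer.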

3. Pregenerated templates (for Medium Creativity, Low-Medium Risk tasks)

Sometimes, you don’t really mind the same thank you note being generated to everyone in the same situation. Perhaps you are writing the thank you note to a customer who bought a product, and you don’t mind the same thank you note being generated to any customer who bought that product.

At the same time, there is a higher risk associated with this use case because these communications are going out to end-users and there is no internal staff person able to edit each generated letter before sending it out.

In such cases, it can be helpful to pregenerate templated responses. For example, suppose you are a tour company and you offer 5 different packages. All you need is one thank you message for each of these packages. Maybe you want different messages for solo travelers vs. families vs. groups. You still need only 3x as many messages as you have packages.

prompt_template = PromptTemplate.from_template(
    """
    Write a letter to a customer who has purchased a tour package.
    The customer is traveling {group_type} and the tour is to {tour_destination}.
    Sound excited to see them and explain some of the highlights of what they will see there
    and some of the things they can do while there.
    In the letter, use [CUSTOMER_NAME] to indicate the place to be replaced by their name
    and [TOUR_GUIDE] to indicate the place to be replaced by the name of the tour guide.
    """
)

# model and parser are assumed to be defined already.
chain = prompt_template | model | parser

print(chain.invoke({
    "group_type": "family",
    "tour_destination": "Toledo, Spain",
}))

The result is messages like this for a given group-type and tour-destination:

Dear [CUSTOMER_NAME],

We are thrilled to welcome you to Toledo on your upcoming tour! We can't wait to show you the beauty and history of this enchanting city.

Toledo, known as the "City of Three Cultures," boasts a fascinating blend of Christian, Muslim, and Jewish heritage. You'll be mesmerized by the stunning architecture, from the imposing Alcázar fortress to the majestic Toledo Cathedral.

During your tour, you'll have the opportunity to:

* **Explore the historic Jewish Quarter:** Wander through the narrow streets lined with ancient synagogues and traditional houses.

* **Visit the Monastery of San Juan de los Reyes:** Admire the exquisite Gothic architecture and stunning cloisters.

* **Experience the panoramic views:** Take a scenic walk along the banks of the Tagus River and soak in the breathtaking views of the city.

* **Delve into the art of Toledo:** Discover the works of El Greco, the renowned painter who captured the essence of this city in his art.

Our expert tour guide, [TOUR_GUIDE], will provide insightful commentary and share fascinating stories about Toledo's rich past.

We know you'll have a wonderful time exploring the city's treasures. Feel free to reach out if you have any questions before your arrival.

We look forward to welcoming you to Toledo!

Sincerely,

The [Tour Company Name] Team

You can generate these messages, have a human vet them, and store them in your database.

As you can see, we asked the LLM to insert placeholders in the message that we can replace dynamically. Whenever you need to send out a response, retrieve the message from the database and replace the placeholders with actual data.

Using pregenerated templates turns a problem that would have required vetting hundreds of messages per day into one that requires vetting a few messages only when a new tour is added.
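The retrieve-and-substitute step can be sketched as follows. The dictionary is a hypothetical stand-in for the database of vetted messages, the key structure (destination, group type) is an assumption, and the template text is shortened for illustration.

```python
import re

# Hypothetical in-memory stand-in for the database of human-vetted templates,
# keyed by (tour_destination, group_type).
VETTED_TEMPLATES = {
    ("Toledo, Spain", "family"): (
        "Dear [CUSTOMER_NAME],\n"
        "We are thrilled to welcome you to Toledo! "
        "Your guide, [TOUR_GUIDE], will meet you on arrival."
    ),
}

def render_message(destination: str, group_type: str,
                   customer_name: str, tour_guide: str) -> str:
    """Fetch the vetted template and fill in the placeholders; no LLM call is made."""
    template = VETTED_TEMPLATES[(destination, group_type)]
    message = (
        template
        .replace("[CUSTOMER_NAME]", customer_name)
        .replace("[TOUR_GUIDE]", tour_guide)
    )
    # Refuse to send a message that still contains an unfilled [UPPER_CASE] placeholder.
    leftover = re.search(r"\[[A-Z_]+\]", message)
    if leftover:
        raise ValueError(f"Unfilled placeholder: {leftover.group(0)}")
    return message

message = render_message("Toledo, Spain", "family", "Ana", "Luis")
```

Since nothing is generated at send time, the only text that reaches the customer is text a human has already approved, plus the substituted values.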

4. Small Language Models (Low Risk, Low Creativity)

Recent research shows that it is impossible to eliminate hallucination in LLMs because it arises from a tension between learning all the computable functions we desire. A smaller LLM for a more targeted task has less risk of hallucinating than one that’s too large for the desired task. You might be using a frontier LLM for tasks that don’t require the power and world-knowledge that it brings.

In use cases where you have a very simple task that doesn’t require much creativity and where your tolerance for risk is very low, you have the option of using a small language model (SLM). This does trade off accuracy: in a June 2024 study, a Microsoft researcher found that for extracting structured data from unstructured text corresponding to an invoice, their smaller text-based model (Phi-3 Mini 128K) could get 93% accuracy as compared to the 99% accuracy achievable by GPT-4o.
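To put that accuracy gap in operational terms, here is a small back-of-the-envelope calculation. The accuracy figures are the ones quoted above; the volume of 10,000 invoices is a made-up number chosen only for illustration.

```python
# Accuracy figures quoted above; the invoice volume is a hypothetical example.
ACCURACY_PERCENT = {"Phi-3 Mini 128K": 93, "GPT-4o": 99}
INVOICES = 10_000

def expected_errors(accuracy_percent: int, volume: int) -> int:
    """Number of documents expected to be extracted incorrectly."""
    return volume * (100 - accuracy_percent) // 100

errors = {
    name: expected_errors(accuracy, INVOICES)
    for name, accuracy in ACCURACY_PERCENT.items()
}
# errors == {"Phi-3 Mini 128K": 700, "GPT-4o": 100}
```

Under these assumptions, a six-point difference in accuracy means seven times as many extraction errors to catch downstream, which is worth weighing against the lower cost of the smaller model.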

The team at LLMWare evaluates a wide range of SLMs. At the time of writing (2024), they found that Phi-3 was the best, but that over time, smaller and smaller models were achieving this performance.

Representing these two studies pictorially, SLMs are increasingly achieving their accuracy with smaller and smaller sizes (so less and less hallucination) while LLMs have been focused on increasing task ability (so more and more hallucination). The difference in accuracy between these approaches for tasks like document extraction has stabilized (see Figure).

If this trend holds up, expect to be using SLMs and non-frontier LLMs for more and more enterprise tasks that require only low creativity and have a low tolerance for risk. Creating embeddings from documents, such as for knowledge retrieval and topic modeling, is a use case that tends to fit this profile. Use small language models for these tasks.

5. Assembled Reformat (Medium Risk, Low Creativity)

The underlying idea behind Assembled Reformat is to use pre-generation to reduce the risk on dynamic content, and use LLMs only for extraction and summarization, tasks that introduce only a low level of risk even though they are done “live”.

Suppose you are a manufacturer of machine parts and need to create a web page for each item in your product catalog. You are obviously concerned about accuracy. You don’t want to claim some item is heat-resistant when it’s not. You don’t want the LLM to hallucinate the tools required to install the part.

You probably have a database that describes the attributes of each part. A simple approach is to employ an LLM to generate content for each of the attributes. As with pre-generated templates (Pattern #3 above), make sure to have a human review them before storing the content in your content management system.

prompt_template = PromptTemplate.from_template(
    """
    You are a content writer for a manufacturer of paper machines.
    Write a one-paragraph description of a {part_name}, which is one of the parts of a paper machine.
    Explain what the part is used for, and reasons that might need to replace the part.
    """
)

# model and parser are assumed to be defined already.
chain = prompt_template | model | parser

print(chain.invoke({
    "part_name": "wet end",
}))

However, simply appending all the text generated will result in something that’s not very pleasing to read. You could, instead, assemble all of this content into the context of the prompt, and ask the LLM to reformat the content into the desired website layout:

from langchain_core.output_parsers import JsonOutputParser
from langchain_core.prompts import PromptTemplate
from pydantic import BaseModel, Field

class CatalogContent(BaseModel):
    # description= documents each field for the parser's format instructions
    part_name: str = Field(description="Common name of part")
    part_id: str = Field(description="unique part id in catalog")
    part_description: str = Field(description="short description of part")
    price: str = Field(description="price of part")

catalog_parser = JsonOutputParser(pydantic_object=CatalogContent)

prompt_template = PromptTemplate(
    template="""
    Extract the information needed and provide the output as JSON.
    {format_instructions}
    {database_info}
    Part description follows:
    {generated_description}
    """,
    input_variables=["generated_description", "database_info"],
    partial_variables={"format_instructions": catalog_parser.get_format_instructions()},
)

# model is assumed to be defined already.
chain = prompt_template | model | catalog_parser

If you need to summarize reviews, or trade articles about the item, you can have this be done in a batch processing pipeline, and feed the summary into the context as well.
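One way to do the “assemble” step is sketched below. It builds the `database_info` and `generated_description` prompt variables from the database record, the human-reviewed descriptions, and an optional batch-generated review summary. The function name, record fields, and sample values are hypothetical.

```python
import json

def assemble_inputs(db_row: dict, vetted_descriptions: list,
                    review_summary: str = "") -> dict:
    """Build the prompt variables from trusted, pre-reviewed sources only."""
    # Serialize the database record so the attributes reach the LLM verbatim.
    database_info = json.dumps(db_row, sort_keys=True)
    parts = list(vetted_descriptions)
    if review_summary:
        parts.append("Summary of reviews: " + review_summary)
    return {
        "database_info": database_info,
        "generated_description": "\n\n".join(parts),
    }

# Hypothetical example values.
inputs = assemble_inputs(
    db_row={"part_id": "WE-100", "part_name": "wet end", "price": "1200.00"},
    vetted_descriptions=["The wet end is where the paper sheet is formed."],
    review_summary="Customers report straightforward installation.",
)
```

The resulting dictionary has the keys that the reformatting prompt expects, so it could be passed to `chain.invoke(inputs)`. The LLM then only rearranges material that has already been reviewed or comes straight from the database.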

6. ML Selection of Template (Medium Creativity, Medium Risk)

The assembled reformat approach works for web pages where the content is quite static (as in product catalog pages). However, if you are an e-commerce retailer, and you want to create personalized recommendations, the content is much more dynamic. You need higher creativity out of the LLM. Your risk tolerance in terms of accuracy is still about the same.

What you can do in such cases is to continue to use pre-generated templates for each of your products, and then use machine learning to select which templates you will employ.

For personalized recommendations, for example, you’d use a traditional recommendations engine to select which products will be shown to the user, and pull in the appropriate pre-generated content (images + text) for that product.

This approach of combining pregeneration + ML can also be used if you are customizing your website for different customer journeys. You’ll pregenerate the landing pages and use a propensity model to choose what the next best action is.
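A minimal sketch of this division of labor follows. The `recommend` function is a hypothetical stand-in for a real recommendations engine (here it just ranks precomputed scores), and the product ids and content are made up.

```python
# Hypothetical store of pre-generated, human-vetted content per product.
PREGENERATED = {
    "camera-01": "A compact SLR for travel photography.",
    "tripod-02": "A lightweight tripod that folds to carry-on size.",
    "bag-03": "A padded bag with room for two lenses.",
}

def recommend(user_scores: dict, k: int = 2) -> list:
    """Stand-in for a recommendations engine: the k highest-scoring product ids."""
    ranked = sorted(user_scores, key=user_scores.get, reverse=True)
    return ranked[:k]

def personalized_page(user_scores: dict, k: int = 2) -> list:
    """Select products with the model, then pull vetted content for each one."""
    return [
        PREGENERATED[product_id]
        for product_id in recommend(user_scores, k)
        if product_id in PREGENERATED  # skip products without vetted content
    ]

page = personalized_page({"camera-01": 0.2, "tripod-02": 0.9, "bag-03": 0.7})
```

The personalization comes entirely from the selection model; no LLM is called at request time, so the risk profile stays the same as with pregenerated templates.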

7. Fine-tune (High Creativity, Medium Risk)

If your creativity needs are high, there is no way to avoid using LLMs to generate the content you need. But, generating the content every time means that you cannot scale human review.

There are two ways to address this conundrum. The simpler one, from an engineering complexity standpoint, is to teach the LLM to produce the kind of content that you want and not generate the kinds of content you don’t. This can be done through fine-tuning.

There are three methods to fine-tune a foundational model: adapter tuning, distillation, and human feedback. Each of these fine-tuning methods addresses different risks:

- Adapter tuning retains the full capability of the foundational model, but allows you to select for specific style (such as content that fits your company voice). The risk addressed here is brand risk.

- Distillation approximates the capability of the foundational model, but on a limited set of tasks, and using a smaller model that can be deployed on premises or behind a firewall. The risk addressed here is of confidentiality.

- Human feedback either through RLHF or through DPO allows the model to start off with reasonable accuracy, but get better with human feedback. The risk addressed here is of fit-for-purpose.

Common use cases for fine-tuning include being able to create branded content, summaries of confidential information, and personalized content.

8. Guardrails (High Creativity, High Risk)

What if you want the full spectrum of capabilities, and you have more than one type of risk to mitigate? Perhaps you are worried about brand risk, leakage of confidential information, and/or interested in ongoing improvement through feedback.

At that point, there is no alternative but to go whole hog and build guardrails. Guardrails may involve preprocessing the information going into the model, post-processing the output of the model, or iterating on the prompt based on error conditions.

Pre-built guardrails (e.g., Nvidia’s NeMo) exist for commonly needed functionality such as checking for jailbreak, masking sensitive data in the input, and self-check of facts.

However, it’s likely that you’ll have to implement some of the guardrails yourself (see Figure above). An application that needs to be deployed alongside programmable guardrails is the most complex way that you could choose to implement a GenAI application. Make sure that this complexity is warranted before going down this route.
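To illustrate the three kinds of guardrail mentioned above (preprocessing, post-processing, and iterating on the prompt), here is a deliberately simple hand-rolled sketch. It does not use NeMo or any other guardrails library; the email regex, the banned-phrase list, and the `stub_llm` function are all hypothetical placeholders for much more thorough checks.

```python
import re

EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
BANNED_PHRASES = ("guaranteed returns",)  # hypothetical policy list

def mask_sensitive(text: str) -> str:
    """Pre-processing guardrail: mask email addresses before they reach the model."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)

def violates_policy(text: str) -> bool:
    """Post-processing guardrail: flag output that contains a banned phrase."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in BANNED_PHRASES)

def guarded_generate(llm, prompt: str, max_attempts: int = 2) -> str:
    """Mask the input, check the output, and retry with a stricter prompt on failure."""
    safe_prompt = mask_sensitive(prompt)
    for _ in range(max_attempts):
        output = llm(safe_prompt)
        if not violates_policy(output):
            return output
        # Iterate on the prompt based on the error condition.
        safe_prompt += "\nDo not use the phrase 'guaranteed returns'."
    return "Sorry, I can't help with that request."

# Hypothetical stub model: misbehaves until the prompt carries the extra instruction.
def stub_llm(prompt: str) -> str:
    if "Do not use" in prompt:
        return "Here is a balanced overview for [EMAIL]."
    return "This fund offers guaranteed returns!"

result = guarded_generate(stub_llm, "Write to jane@example.com about our fund.")
```

Even this toy version shows where the complexity comes from: each guardrail adds code paths, retries add LLM calls (and therefore cost and latency), and a fallback response is needed for when all attempts fail.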

I suggest you use a framework that balances creativity and risk to decide on the architecture for your GenAI application or agent. Creativity refers to the level of uniqueness required in the generated content. Risk relates to the impact if the LLM generates inaccurate, biased, or toxic content. Addressing high-risk scenarios necessitates engineering complexity, such as human review or guardrails.

The framework consists of eight architectural patterns that address different combinations of creativity and risk:

1. Generate Each Time: Invokes the LLM API for every content generation request, offering maximum creativity but with higher cost and latency. Suitable for interactive applications that don’t have much risk, such as internal tools.

2. Response/Prompt Caching: For medium creativity, low-risk tasks. Caches past prompts and responses to reduce cost and latency. Useful when consistent answers are desirable, such as internal customer support search engines. Server-side techniques like prompt caching and context caching enhance efficiency without sacrificing creativity, while semantic caching trades away some of the model’s capability for more cache hits.

3. Pregenerated Templates: Employs pre-generated, vetted templates for repetitive tasks, reducing the need for constant human review. Suitable for medium creativity, low-medium risk situations where standardized yet personalized content is required, such as customer communication in a tour company.

4. Small Language Models (SLMs): Uses smaller models to reduce hallucination and cost as compared to larger LLMs. Ideal for low creativity, low-risk tasks like embedding creation for knowledge retrieval or topic modeling.

5. Assembled Reformat: Uses LLMs for reformatting and summarization, with pre-generated content to ensure accuracy. Suitable for content like product catalogs where accuracy is paramount on some parts of the content, while creative writing is required on others.

6. ML Selection of Template: Leverages machine learning to select appropriate pre-generated templates based on user context, balancing personalization with risk management. Suitable for personalized recommendations or dynamic website content.

7. Fine-tune: Involves fine-tuning the LLM to generate desired content while minimizing undesired outputs, addressing risks related to one of brand voice, confidentiality, or accuracy. Adapter tuning focuses on stylistic adjustments, distillation on specific tasks, and human feedback on ongoing improvement.

8. Guardrails: High creativity, high-risk tasks require guardrails to mitigate multiple risks, including brand risk and confidentiality, through preprocessing, post-processing, and iterative prompting. Off-the-shelf guardrails address common concerns like jailbreaking and sensitive data masking, while custom-built guardrails may be necessary for industry/application-specific requirements.

By using the above framework to architect GenAI applications, you will be able to balance complexity, fit-for-purpose, risk, cost, and latency for each use case.
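As a compact restatement, the eight patterns can be written as a lookup from (creativity, risk) to candidate architectures. The creativity and risk labels are taken from the section headings; encoding them as a table and the function name are illustrative assumptions, and the result is only a starting point, not a substitute for judgment about your use case.

```python
# (pattern name, creativity level, risk levels it is suggested for)
PATTERNS = [
    ("Generate each time", "high", {"low"}),
    ("Response/prompt caching", "medium", {"low"}),
    ("Pregenerated templates", "medium", {"low", "medium"}),
    ("Small language models", "low", {"low"}),
    ("Assembled reformat", "low", {"medium"}),
    ("ML selection of template", "medium", {"medium"}),
    ("Fine-tune", "high", {"medium"}),
    ("Guardrails", "high", {"high"}),
]

def candidate_architectures(creativity: str, risk: str) -> list:
    """Return the patterns whose headings match the given creativity and risk levels."""
    return [
        name
        for name, pattern_creativity, risks in PATTERNS
        if pattern_creativity == creativity and risk in risks
    ]
```

Some combinations return more than one candidate (for example, medium creativity with medium risk), and some return none, in which case the nearest neighboring pattern is the place to start.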

(Periodic reminder: these posts are my personal views, not those of my employers, past or present.)