is a fresh start. Unless you explicitly provide information from earlier sessions, the model has no built-in sense of continuity across requests or sessions. This stateless design is great for parallelism and safety, but it poses a huge challenge for chat applications that require user-level personalization.

If your chatbot treats the user as a stranger every time they log in, how can it ever generate personalized responses?

In this article, we'll build a simple memory system from scratch, inspired by the popular Mem0 architecture.

Unless otherwise mentioned, all illustrations embedded here were created by me, the author.

The goal of this article is to teach memory management as a context engineering problem. At the end of the article you will also find:

- A GitHub link to the full memory project, which you can host yourself

- An in-depth YouTube tutorial that goes over the concepts line by line.

Memory as a Context Engineering problem

Context engineering is the practice of filling the context of an LLM with all the relevant information it needs to complete a task. In my opinion, memory is one of the hardest and most interesting context engineering problems.

Tackling memory introduces you (as a developer) to some of the most important techniques required in almost all context engineering problems, namely:

- Extracting structured information from raw text streams

- Summarization

- Vector databases

- Query generation and similarity search

- Query post-processing and re-ranking

- Agentic tool calling

And much more.

Since we're building our memory layer from scratch, we'll have to apply all of these techniques! Read on.

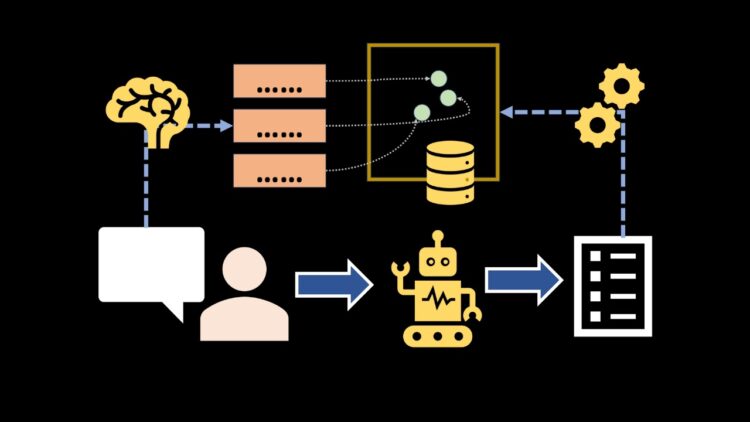

High-level architecture

At a glance, the system should be able to do four things: extract, embed, retrieve, and maintain. Let's scout the high-level plan before we begin the implementation.

Components

• Extraction: Extracts candidate atomic memories from the current user-assistant messages.

• Vector DB: Embeds the extracted factoids into continuous vectors and stores them in a vector database.

• Retrieval: When the user asks a question, we generate a query with an LLM and retrieve memories similar to that query.

• Maintenance: Using a ReAct (Reasoning and Acting) loop, the agent decides whether to add, update, delete, or no-op based on the latest turn and any contradictions with existing facts.

Importantly, every step above should be optional. If the LLM agent doesn't need access to previous memories to answer a question, it shouldn't search our vector database at all.

The strategy is to give the LLM all the tools it may need to accomplish its tasks, along with clear instructions on what each tool does, and then rely on the LLM's intelligence to use these tools autonomously!

Let's see this in action!

2) Memory Extraction with DSPy: From Transcript to Factoids

In this section, let's design a robust extraction step that converts conversation transcripts into a handful of atomic, categorized factoids.

What we're extracting and why it matters

The goal is to build a memory store: a per-user, persistent, vector-backed database.

What's a "good" memory?

A short, self-contained fact (an atomic unit) that can be embedded and later retrieved with high precision.

With DSPy, extracting structured information is very easy. Consider the code snippet below.

- We define a DSPy signature called MemoryExtract.
- The input of this signature (annotated as InputField) is the transcript,
- and the expected output (annotated as OutputField) is a list of strings, one per factoid.

Context string in, list of memory strings out.

```python
# ... other imports
import dspy
from pydantic import BaseModel

class MemoryExtract(dspy.Signature):
    """
    Extract relevant information from the conversation.
    Memories are atomic independent factoids that we must learn about the user.
    If transcript does not contain any information worth extracting, return empty list.
    """
    transcript: str = dspy.InputField()
    memories: list[str] = dspy.OutputField()

memory_extractor = dspy.Predict(MemoryExtract)
```

In DSPy, the signature's docstring is used as the task instructions in the system prompt. We can customize the docstring to explicitly tailor the kind of information the LLM extracts from the conversation.

Finally, to extract memories, we pass the conversation history into the memory extractor as a JSON string. Check out the code snippet below.

```python
import asyncio
import json

import dspy

async def extract_memories_from_messages(messages):
    transcript = json.dumps(messages)
    # MODEL_NAME: the model string configured elsewhere in the project
    with dspy.context(lm=dspy.LM(model=MODEL_NAME)):
        out = await memory_extractor.acall(transcript=transcript)
    return out.memories  # returns a list of memories
```

That's it! Let's run the code with a dummy conversation and see what happens.

```python
if __name__ == "__main__":
    messages = [
        {"role": "user", "content": "I like coffee"},
        {"role": "assistant", "content": "Got it!"},
        {"role": "user", "content": "actually, no I like tea more. I also like football"},
    ]
    memories = asyncio.run(extract_memories_from_messages(messages))
    print(memories)

'''
Outputs:
[
    "User used to like tea, but does not anymore",
    "User likes coffee",
    "User likes football"
]
'''
```

As you can see, we can extract independent factoids from conversations. What does this mean?

We can save the extracted factoids in a database that lives outside the chat session.

If DSPy interests you, check out this Context Engineering with DSPy article, which goes deeper into the concept. Or watch the video below.

Embedding extracted memories

So we can extract memories from conversations. Next, let's embed them so we can eventually store them in a vector database.

In this project, we'll use Qdrant as our vector database. It has a cool free tier, is extremely fast, and supports extra features like hybrid filtering (where you can pass SQL WHERE-like attribute filters along with your vector search query).

Choosing the embedding model and fixing the dimension

For cost, speed, and solid quality on short factoids, we choose text-embedding-3-small. We pin the vector size to 64, which lowers storage and speeds up search while remaining expressive enough for concise memories. This is a hyperparameter we can tune later to suit our needs.
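To make the 64-dimension choice concrete, here is a small stdlib-only sketch. It assumes a shortened vector behaves like a truncated, then L2-renormalized full embedding, which is how OpenAI describes manually shortening text-embedding-3 vectors. The random 1536-d vector is only a stand-in for a real embedding, and the storage figures count raw float32 values, ignoring index and payload overhead.

```python
import math
import random

FULL_DIM = 1536   # default output size of text-embedding-3-small
SHORT_DIM = 64    # the size pinned in this article
BYTES_PER_FLOAT32 = 4

def shorten(vec: list[float], dim: int) -> list[float]:
    """Truncate a vector to `dim` values and rescale it to unit L2 norm."""
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head]

def storage_bytes(n_vectors: int, dim: int) -> int:
    """Raw float32 storage for n vectors (no index or payload overhead)."""
    return n_vectors * dim * BYTES_PER_FLOAT32

random.seed(0)
full = [random.gauss(0.0, 1.0) for _ in range(FULL_DIM)]  # stand-in embedding
short = shorten(full, SHORT_DIM)

print(len(short))                              # 64
print(round(sum(x * x for x in short), 6))     # 1.0, so dot product equals cosine
print(storage_bytes(1_000_000, FULL_DIM))      # 6144000000 bytes (~6.1 GB)
print(storage_bytes(1_000_000, SHORT_DIM))     # 256000000 bytes (~256 MB)
```

The unit norm is also why a DOT distance behaves like cosine similarity for these vectors, assuming the API returns normalized embeddings as its documentation states.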

```python
import openai

# Named openai_client so it doesn't clash with the Qdrant client used below
openai_client = openai.AsyncClient()

async def generate_embeddings(strings: list[str]):
    out = await openai_client.embeddings.create(
        input=strings,
        model="text-embedding-3-small",
        dimensions=64,
    )
    embeddings = [item.embedding for item in out.data]
    return embeddings
```

To insert into Qdrant, let's first create our collection and an index on user_id. This lets us quickly filter our records by user.

```python
from qdrant_client import AsyncQdrantClient, models
from qdrant_client.models import Distance, VectorParams

COLLECTION_NAME = "memories"

# Assumption: a local Qdrant instance; pass your own url/api_key for Qdrant Cloud
client = AsyncQdrantClient(url="http://localhost:6333")

async def create_memory_collection():
    if not (await client.collection_exists(COLLECTION_NAME)):
        await client.create_collection(
            collection_name=COLLECTION_NAME,
            vectors_config=VectorParams(size=64, distance=Distance.DOT),
        )
        # Index user_id so that per-user filters stay fast
        await client.create_payload_index(
            collection_name=COLLECTION_NAME,
            field_name="user_id",
            field_schema=models.PayloadSchemaType.INTEGER,
        )
```

I like to define contracts with Pydantic up front so that other modules know the output shape of these functions.

```python
from pydantic import BaseModel

class EmbeddedMemory(BaseModel):
    user_id: int
    memory_text: str
    date: str
    embedding: list[float]

class RetrievedMemory(BaseModel):
    point_id: str
    user_id: int
    memory_text: str
    date: str
    score: float
```

Next, let's write helper functions to insert and delete memories. An update is simply a delete followed by an insert.

```python
from uuid import uuid4

from qdrant_client import models

async def insert_memories(memories: list[EmbeddedMemory]):
    """
    Given a list of memories, insert them into the database
    """
    await client.upsert(
        collection_name=COLLECTION_NAME,
        points=[
            models.PointStruct(
                id=uuid4().hex,
                payload={
                    "user_id": memory.user_id,
                    "memory_text": memory.memory_text,
                    "date": memory.date,
                },
                vector=memory.embedding,
            )
            for memory in memories
        ],
    )

async def delete_records(point_ids):
    """
    Delete a list of point ids from the database
    """
    await client.delete(
        collection_name=COLLECTION_NAME,
        points_selector=models.PointIdsList(points=point_ids),
    )
```

Similarly, let's write one for searching. It accepts a search vector and a user_id, and fetches the nearest neighbors of that vector.

```python
from qdrant_client import models
from qdrant_client.models import Filter

def convert_retrieved_records(point) -> RetrievedMemory:
    # Sketch of the helper: map a Qdrant ScoredPoint onto our RetrievedMemory contract
    return RetrievedMemory(
        point_id=str(point.id),
        user_id=point.payload["user_id"],
        memory_text=point.payload["memory_text"],
        date=point.payload["date"],
        score=point.score,
    )

async def search_memories(
    search_vector: list[float],
    user_id: int,
    topk_neighbors=5,
):
    # Filter by user_id
    must_conditions: list[models.Condition] = [
        models.FieldCondition(
            key="user_id",
            match=models.MatchValue(value=user_id),
        )
    ]
    outs = await client.query_points(
        collection_name=COLLECTION_NAME,
        query=search_vector,
        with_payload=True,
        query_filter=Filter(must=must_conditions),
        score_threshold=0.1,
        limit=topk_neighbors,
    )
    return [
        convert_retrieved_records(point)
        for point in outs.points
        if point is not None
    ]
```

Notice how we can set hybrid query filters like the models.MatchValue filter. The index on user_id lets us run these queries quickly against our data. You can extend this idea to category tags, date ranges, and any other metadata your application cares about. Just make sure to create an index on each field you filter by, for faster retrieval.
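To see what the filtered query does conceptually, here is a toy in-memory stand-in for the collection, written with the standard library only. It is not how Qdrant works internally: the ToyCollection class and its per-user index dict are hypothetical, and they only mimic the semantics used above (restrict to the user's points, score with a dot product, drop hits below score_threshold, keep the top-k).

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class ToyPoint:
    point_id: str
    user_id: int
    memory_text: str
    vector: list[float]

@dataclass
class ToyCollection:
    points: dict[str, ToyPoint] = field(default_factory=dict)
    # Hypothetical payload index: user_id -> ids of that user's points
    by_user: dict[int, set[str]] = field(default_factory=lambda: defaultdict(set))

    def upsert(self, point: ToyPoint) -> None:
        self.points[point.point_id] = point
        self.by_user[point.user_id].add(point.point_id)

    def query(self, vector, user_id, score_threshold=0.1, limit=5):
        # The index lets us score only this user's points instead of scanning everything
        candidates = (self.points[pid] for pid in self.by_user.get(user_id, ()))
        scored = [(sum(a * b for a, b in zip(vector, p.vector)), p) for p in candidates]
        # Keep hits at or above the threshold, best first, at most `limit`
        hits = sorted(
            ((s, p) for s, p in scored if s >= score_threshold),
            key=lambda sp: sp[0],
            reverse=True,
        )
        return [(p.memory_text, round(s, 3)) for s, p in hits[:limit]]

col = ToyCollection()
col.upsert(ToyPoint("a", 1, "User likes tea", [1.0, 0.0]))
col.upsert(ToyPoint("b", 1, "User likes football", [0.0, 1.0]))
col.upsert(ToyPoint("c", 2, "User likes tea too", [1.0, 0.0]))  # another user's memory
print(col.query([0.8, 0.6], user_id=1))
# [('User likes tea', 0.8), ('User likes football', 0.6)]
```

User 2's memory never appears in user 1's results, which is exactly the isolation the user_id filter provides.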

In the next chapter, we'll connect this storage layer to our agent loop using DSPy Signatures and ReAct (Reasoning and Acting).

Memory Retrieval

In this section, we build a clean retrieval interface that pulls the most relevant per-user memories for a given turn.

Our algorithm is simple: we'll create a tool-calling chatbot agent. At every turn, the agent receives the conversation transcript and must generate an answer. Let's define the DSPy signature.

```python
class ResponseGenerator(dspy.Signature):
    """
    You will be given a past conversation transcript between the user and an AI agent, along with the latest question from the user.
    You have the option to look up past memories from a vector database to fetch relevant context if required.
    If you can't find the answer to the user's question in the transcript or in your own internal knowledge, use the provided search tool calls to search for information.
    You must output the final response, and also decide whether the latest interaction needs to be recorded into the memory database. New memories are meant to store new information that the user provides.
    New memories should be made when the USER provides new information. They are not for saving information about the AI or the assistant.
    """
    transcript: list[dict] = dspy.InputField()
    question: str = dspy.InputField()
    response: str = dspy.OutputField()
    save_memory: bool = dspy.OutputField(
        desc="True if a new memory record needs to be created for the latest interaction"
    )
```

The docstring of the DSPy Signature acts as additional instructions that help the LLM choose its actions. Also, notice the save_memory flag we marked as an OutputField. Along with the answer, we ask the LLM to output whether the latest interaction warrants saving a new memory.

We also need to decide how to bring relevant memories into the agent's context. One option is to always execute the search_memories function, but this has two big problems:

- Not every user question needs a memory retrieval.

- The search_memories function expects a search vector, but it's not always obvious what text we should be embedding. It could be the entire transcript, just the user's latest message, or a transformation of the current conversation context.
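The three options in the last bullet can be sketched as plain functions. These helpers (latest_message, full_transcript, last_k_turns) are hypothetical and not part of the project; last_k_turns is just one simple transformation standing in for the third option. The point is that each produces a different string to embed, and picking among them is the decision we'd rather leave to the agent.

```python
import json

Message = dict[str, str]

def latest_message(messages: list[Message]) -> str:
    """Embed only the user's most recent message."""
    user_msgs = [m["content"] for m in messages if m["role"] == "user"]
    return user_msgs[-1] if user_msgs else ""

def full_transcript(messages: list[Message]) -> str:
    """Embed the whole conversation serialized as JSON."""
    return json.dumps(messages)

def last_k_turns(messages: list[Message], k: int = 2) -> str:
    """A simple transformation: 'role: content' lines for the last k messages."""
    return "\n".join(f'{m["role"]}: {m["content"]}' for m in messages[-k:])

messages = [
    {"role": "user", "content": "I like coffee"},
    {"role": "assistant", "content": "Got it!"},
    {"role": "user", "content": "What drink should I order?"},
]
print(latest_message(messages))  # What drink should I order?
print(last_k_turns(messages))
# assistant: Got it!
# user: What drink should I order?
```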

Luckily, we can default to tool calling. When the agent thinks it lacks the context to carry out a request, it can invoke a tool to fetch memories related to the conversation's context. In DSPy, tools can be created by simply writing a vanilla Python function with a docstring. The LLM reads this docstring to decide when and how to call the tool.
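DSPy builds its tool descriptions internally, in its own format. As a rough, stdlib-only illustration that the function name, parameters, and docstring are essentially all the LLM gets to see, here is a hypothetical describe_tool helper built on inspect, applied to a stub with the same signature as the tool below.

```python
import inspect

def annotation_name(annotation) -> str:
    """Readable name for a parameter annotation ('any' when it is missing)."""
    if annotation is inspect.Parameter.empty:
        return "any"
    return getattr(annotation, "__name__", str(annotation))

def describe_tool(fn) -> dict:
    """Collect what an LLM would need to decide when and how to call fn."""
    sig = inspect.signature(fn)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "args": {name: annotation_name(p.annotation) for name, p in sig.parameters.items()},
    }

async def fetch_similar_memories(search_text: str):
    """Search memories from the vector database if the conversation requires more context."""
    ...  # stub: only the signature and docstring matter here

print(describe_tool(fetch_similar_memories))
# prints the name, the docstring, and args {'search_text': 'str'}
```

This is why a precise, action-oriented docstring matters: a vague one leaves the model guessing about when the tool is useful.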

```python
async def fetch_similar_memories(search_text: str):
    """
    Search memories from the vector database if the conversation requires more context.
    Args:
    - search_text : The string to embed and do vector similarity search
    """
    search_vector = (await generate_embeddings([search_text]))[0]
    # user_id comes from the surrounding session scope, never from the LLM
    memories = await search_memories(search_vector, user_id=user_id)
    memories_str = [
        f"id={m_.point_id}\ntext={m_.memory_text}\ncreated_at={m_.date}"
        for m_ in memories
    ]
    return {"memories": memories_str}
```

Note that we track the user's id externally and take it from our own source of truth, without asking the LLM to generate it. This keeps every search isolated to the current user's chat session.
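One way to get that isolation is a factory that closes over the user_id, sketched here with hypothetical names (make_memory_tool, FAKE_DB). A dict stands in for the vector store and substring matching replaces embedding search, just to keep the example self-contained. The LLM-facing function exposes only search_text, so the model has no parameter through which it could ask for another user's memories.

```python
import asyncio

# Hypothetical stand-in for the vector DB: user_id -> list of memory texts
FAKE_DB = {
    1: ["User likes tea", "User likes football"],
    2: ["User likes coffee"],
}

def make_memory_tool(user_id: int):
    """Return a tool whose only argument is the search text; user_id is bound here."""
    async def fetch_similar_memories(search_text: str):
        """Search memories if the conversation requires more context."""
        # Substring match stands in for embedding + vector search
        hits = [m for m in FAKE_DB.get(user_id, []) if search_text.lower() in m.lower()]
        return {"memories": hits}
    return fetch_similar_memories

tool_for_user_1 = make_memory_tool(user_id=1)
print(asyncio.run(tool_for_user_1("likes")))
# {'memories': ['User likes tea', 'User likes football']}
```

In a per-session setup, one option would be to build the agent's tool list from such a factory for each logged-in user.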

Next, let's create a ReAct agent with DSPy. ReAct stands for "Reasoning and Acting". Essentially, the LLM agent observes the data (in this case, the conversation history), reasons about it, and then acts.

An action can be to generate an answer directly or to retrieve memories first.

```python
response_generator = dspy.ReAct(
    ResponseGenerator,
    tools=[fetch_similar_memories],
    max_iters=4,
)
```

In an agentic flow, the DSPy ReAct policy can craft a concise search_text from the current turn and the known task. The ReAct agent can call fetch_similar_memories up to 4 times to search for memories before it must answer the user's question.
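DSPy's ReAct module handles the prompting, parsing, and trajectory formatting for us. To show only the control flow that max_iters bounds, here is a toy loop in plain Python. A scripted policy stands in for the LLM, and the run_react function, the "finish" action name, and the trajectory tuple format are assumptions made for illustration, not DSPy internals.

```python
from typing import Callable

def run_react(policy: Callable, tools: dict[str, Callable], question: str, max_iters: int = 4):
    """Alternate thought -> action -> observation until 'finish' or max_iters."""
    trajectory = []
    for _ in range(max_iters):
        thought, action, arg = policy(question, trajectory)
        if action == "finish":
            return arg, trajectory
        observation = tools[action](arg)
        trajectory.append((thought, action, arg, observation))
    # Out of iterations: this toy returns a fixed fallback
    # (DSPy's ReAct instead asks the LLM to answer from the trajectory)
    return "I could not find enough context.", trajectory

def scripted_policy(question, trajectory):
    # Stand-in for the LLM: search once, then answer from the observation
    if not trajectory:
        return ("I need the user's preferences", "fetch_similar_memories", "drinks")
    memories = trajectory[-1][3]["memories"]
    return ("I have enough context", "finish", f"Based on memory: {memories[0]}")

tools = {"fetch_similar_memories": lambda text: {"memories": ["User likes tea"]}}
answer, steps = run_react(scripted_policy, tools, "What should I drink?")
print(answer)      # Based on memory: User likes tea
print(len(steps))  # 1
```

A policy that never chooses "finish" is cut off after max_iters tool calls, which is the safety net the max_iters=4 setting provides.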

Other Retrieval Strategies

You can also choose retrieval strategies other than similarity search. Here are some ideas:

- Keyword Search – Look into algorithms like BM25 or TF-IDF.

- Category Filtering – If you force every memory to carry clear metadata tags (like "food", "sports", "habits"), the agent can generate queries that search these specific subcategories instead of the whole memory stack.

- Time Queries – Allow the agent to retrieve information from specific time ranges!
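For the keyword-search idea, here is a compact BM25 scorer over memory strings in plain Python. It uses the common Okapi BM25 formula with the conventional defaults k1=1.5 and b=0.75 and the non-negative IDF variant log(1 + (N - n + 0.5) / (n + 0.5)). The regex tokenizer is deliberately naive, and none of this is part of the article's project.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Naive tokenizer: lowercase alphanumeric runs."""
    return re.findall(r"[a-z0-9]+", text.lower())

def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of every doc for the query."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: how many docs contain each term
    df = Counter(term for t in tokenized for term in set(t))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Length normalization: longer-than-average docs are penalized via b
            denom = tf[term] + k1 * (1 - b + b * len(tokens) / avgdl)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    return scores

memories = ["User likes tea", "User likes football", "User is allergic to peanuts"]
scores = bm25_scores("tea or coffee", memories)
best = max(range(len(memories)), key=scores.__getitem__)
print(memories[best])  # User likes tea
```

Unlike embeddings, BM25 only rewards exact term overlap, so it works best alongside vector search rather than as a replacement.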

These choices largely depend on your application.

Whatever your retrieval strategy, once the tool returns its results, the agent generates its answer from the retrieved data! Remember that it also outputs the save_memory flag? We can trigger our custom update logic whenever that flag is True.

```python
# Inside the async chat loop (past_messages, question, and user_id come from the session)
out = await response_generator.acall(
    transcript=past_messages,
    question=question,
)
response = out.response  # the response
save_memory = out.save_memory  # the LLM's decision to save a memory or not

past_messages.extend(
    [
        {"role": "user", "content": question},
        {"role": "assistant", "content": response},
    ]
)  # update the conversation stack

if save_memory:  # update memories only if the LLM sets this flag to True
    update_result = await update_memories(
        user_id=user_id,
        messages=past_messages,
    )
```

Let's see how the update step works.

Memory Maintenance

Memory isn't a simple log of records. It's an ever-evolving pool of information. Some memories should be deleted because they are no longer relevant. Others need to be updated because the underlying circumstances have changed.

For example, suppose we have the memory "user loves tea", and we just learned that "user hates tea". Instead of creating a brand-new memory alongside the old one, we should delete the old memory and create a new one.

When the response generator agent decides to save new memories, we use a separate agentic flow to decide how to apply the updates. The update memory agent receives the conversation messages (which contain the new information) and a list of existing memories similar to the current conversation state.

```python
# ... if save_memory is True
# similar_memories: existing memories retrieved for the latest turn
response = await update_memories_agent(
    user_id=user_id,
    existing_memories=similar_memories,
    messages=messages,
)
```

Once we have decided to update the memory database, there are four logical things the memory manager agent can do:

• add_memory(text): Inserts a brand-new atomic factoid. It computes a fresh embedding and writes the record for the current user. It should also apply deduplication logic before insertion.

• update_memory(id, updated_text): Replaces an existing memory's text. It deletes the old point, re-embeds the new text, and reinserts it under the same user, optionally preserving or adjusting categories. This is the canonical way to handle refinements or corrections.

• delete_memories(ids): Removes one or more memories that are no longer valid due to contradictions or obsolescence.

• no_op(): Explicitly does nothing if the maintenance agent decides that the new memory is irrelevant or already fully captured in the database.
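Stripped of the LLM and the vector database, the four operations reduce to simple edits on a store. The ToyMemoryStore below is a hypothetical in-memory sketch: update is a delete followed by an insert (mirroring the description of update_memory), and add skips exact duplicates after lowercasing and trimming, a deliberately crude stand-in for real deduplication logic. It replays the tea example from earlier in this section.

```python
import itertools

class ToyMemoryStore:
    def __init__(self):
        self._ids = itertools.count()
        self.memories: dict[int, str] = {}

    def add(self, text: str) -> str:
        # Crude dedup: skip if the normalized text is already stored
        normalized = text.strip().lower()
        if any(m.strip().lower() == normalized for m in self.memories.values()):
            return f"Skipped duplicate: '{text}'"
        mem_id = next(self._ids)
        self.memories[mem_id] = text
        return f"Added {mem_id}: '{text}'"

    def update(self, mem_id: int, new_text: str) -> str:
        # Delete the old record, then insert the new text under a fresh id
        self.delete([mem_id])
        return self.add(new_text)

    def delete(self, mem_ids: list[int]) -> str:
        for mem_id in mem_ids:
            self.memories.pop(mem_id, None)
        return f"Deleted {mem_ids}"

    def noop(self) -> str:
        return "No action done"

store = ToyMemoryStore()
store.add("User loves tea")
store.add("user loves tea ")        # skipped as a duplicate
store.update(0, "User hates tea")   # the user changed their mind
print(store.memories)               # {1: 'User hates tea'}
```

A production version would compare embeddings rather than exact strings, so that paraphrases like "User adores tea" also count as duplicates.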

Again, this architecture is inspired by the Mem0 research paper.

The code below shows these tools integrated into a DSPy ReAct agent with a structured signature and a tool-selection loop.

```python
class MemoryWithIds(BaseModel):
    memory_id: int
    memory_text: str

class UpdateMemorySignature(dspy.Signature):
    """
    You will be given the conversation between user and assistant and some similar memories from the database. Your goal is to decide how to combine the new memories into the database with the existing memories.
    Actions meaning:
    - ADD: add new memories into the database as a new memory
    - UPDATE: update an existing memory with richer information.
    - DELETE: remove memory items from the database that aren't required anymore due to new information
    - NOOP: No need to take any action
    If no action is required you can finish.
    Think less and do actions.
    """
    messages: list[dict] = dspy.InputField()
    existing_memories: list[MemoryWithIds] = dspy.InputField()
    summary: str = dspy.OutputField(
        desc="Summarize what you did. Very short (less than 10 words)"
    )
```

Next, let's write the tools our maintenance agent needs: functions to add, delete, and update memories, plus a dummy no_op function the LLM can call when it wants to "pass".

```python
from datetime import datetime

async def update_memories_agent(
    user_id: int,
    messages: list[dict],
    existing_memories: list[RetrievedMemory],
):
    def get_point_id_from_memory_id(memory_id):
        # The agent sees small integer ids; map them back to Qdrant point ids
        return existing_memories[memory_id].point_id

    async def add_memory(memory_text: str) -> str:
        """
        Add a new memory into the database.
        """
        embeddings = await generate_embeddings([memory_text])
        await insert_memories(
            memories=[
                EmbeddedMemory(
                    user_id=user_id,
                    memory_text=memory_text,
                    date=datetime.now().strftime("%Y-%m-%d %H:%M"),
                    embedding=embeddings[0],
                )
            ]
        )
        return f"Memory: '{memory_text}' was added to DB"

    async def update(memory_id: int, updated_memory_text: str):
        """
        Update memory_id to use updated_memory_text
        Args:
            memory_id: integer index of the memory to replace
            updated_memory_text: Simple atomic factoid to replace the old memory with the new memory
        """
        point_id = get_point_id_from_memory_id(memory_id)
        await delete_records([point_id])
        embeddings = await generate_embeddings([updated_memory_text])
        await insert_memories(
            memories=[
                EmbeddedMemory(
                    user_id=user_id,
                    memory_text=updated_memory_text,
                    date=datetime.now().strftime("%Y-%m-%d %H:%M"),
                    embedding=embeddings[0],
                )
            ]
        )
        return f"Memory {memory_id} has been updated to: '{updated_memory_text}'"

    async def noop():
        """
        Call this if no action is required
        """
        return "No action done"

    async def delete(memory_ids: list[int]):
        """
        Remove these memory_ids from the database
        """
        # Translate the agent-facing integer ids into Qdrant point ids first
        await delete_records([get_point_id_from_memory_id(m) for m in memory_ids])
        return f"Memory {memory_ids} deleted"

    memory_updater = dspy.ReAct(
        UpdateMemorySignature,
        tools=[add_memory, update, delete, noop],
        max_iters=3,
    )

    # Present existing memories to the LLM with simple integer ids
    memories_with_ids = [
        MemoryWithIds(memory_id=i, memory_text=m.memory_text)
        for i, m in enumerate(existing_memories)
    ]
    out = await memory_updater.acall(
        messages=messages,
        existing_memories=memories_with_ids,
    )
    return out
```

And that's it! Depending on which action the ReAct agent chooses, we simply insert, delete, update, or ignore the new memories. Below you can see a simple example of how things look when we run the code.

The full version of the code also has extra features, like metadata tagging for more accurate retrieval, which I didn't cover here to keep this article beginner-friendly. Be sure to check out the GitHub repo below or the YouTube tutorial to explore the full project!

What's next

You can watch the full video tutorial, which goes into more detail about building memory agents, here.

The code repo can be found here: https://github.com/avbiswas/mem0-dspy

This tutorial explained the building blocks of a memory system. Here are some ideas on how to expand on it:

- A Graph Memory system – Instead of a vector database, store memories in a graph database. This means your DSPy modules should extract triplets instead of flat strings to represent memories.

- Metadata – Alongside the text, insert additional attribute filters. For example, you can group all "food"-related memories. This allows the LLM agents to query specific tags when fetching memories, instead of querying all memories at once.

- Optimizing prompts per user: You can keep track of essential information in your memory database and inject it directly into the system prompt. It then gets passed along with every message as session memory.

- File-Based Systems: Another common pattern emerging is file-based retrieval. The core principles we discussed here stay the same, but instead of a vector database, you use a file system. Inserting and updating information means writing .md files, and querying usually involves extra indexing steps or simply tools like regex searches or grep.
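For the file-based direction, a minimal stdlib sketch might keep one Markdown file per user, append each memory as a dated bullet line, and query with a regular expression, roughly what a grep would do. The directory layout, file naming, and line format here are assumptions for illustration only.

```python
import re
import tempfile
from datetime import date
from pathlib import Path

def memory_file(root: Path, user_id: int) -> Path:
    """One Markdown file per user (hypothetical naming scheme)."""
    return root / f"user_{user_id}.md"

def add_memory(root: Path, user_id: int, text: str, on: date) -> None:
    """Append a memory as a dated Markdown bullet."""
    with memory_file(root, user_id).open("a", encoding="utf-8") as f:
        f.write(f"- [{on.isoformat()}] {text}\n")

def grep_memories(root: Path, user_id: int, pattern: str) -> list[str]:
    """Return memory lines matching a case-insensitive regex, like grep -i."""
    path = memory_file(root, user_id)
    if not path.exists():
        return []
    regex = re.compile(pattern, re.IGNORECASE)
    return [line for line in path.read_text(encoding="utf-8").splitlines() if regex.search(line)]

with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    add_memory(root, 1, "User likes tea", date(2024, 1, 5))
    add_memory(root, 1, "User likes football", date(2024, 1, 6))
    print(grep_memories(root, 1, r"\btea\b"))  # ['- [2024-01-05] User likes tea']
```

Because the files are plain Markdown, an agent could also read or edit them directly with ordinary file tools, which is part of this pattern's appeal.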

My Patreon:

https://www.patreon.com/NeuralBreakdownwithAVB

My YouTube channel:

https://www.youtube.com/@avb_fj

Follow me on Twitter:

https://x.com/neural_avb

Read my articles:

https://towardsdatascience.com/author/neural-avb/