we’re.

This is the model that motivated me, from the very beginning, to use Excel to better understand Machine Learning.

And today, you will see an explanation of SVM that is quite different from the usual one, which comes with:

- margin separators,

- distances to a hyperplane,

- geometric constructions first.

Instead, we will build the model step by step, starting from things we already know.

So maybe this is also the day you finally say “oh, I understand it better now.”

Building a New Model on What We Already Know

One of my main learning principles is simple:

always start from what we already know.

Before SVM, we already studied:

- logistic regression,

- penalization and regularization.

We will use these models and concepts today.

The idea is not to introduce a new model, but to transform an existing one.

Training datasets and label convention

We will use a one-feature dataset to explain the SVM.

Yes, I know, this is probably the first time you have seen someone explain SVM using only one feature.

Why not?

In fact, it is necessary, for several reasons.

For other models, such as linear regression or logistic regression, we usually start with a single feature. We should do the same with SVM, so that we can compare the models properly.

If you build a model with many features and think you understand how it works, but you cannot explain it with just one feature, then you do not really understand it yet.

Using a single feature makes the model:

- simpler to implement,

- easier to visualize,

- and much easier to debug.

So, we use two datasets that I generated to illustrate the two possible situations a linear classifier can face:

- one dataset is completely separable,

- the other is not completely separable.

You may already know why we use two datasets here, whereas we usually use only one, right?

We also use the label convention -1 and 1 instead of 0 and 1.

Why? We will see later that there is actually an interesting piece of history behind it, about how models are seen from the GLM and Machine Learning perspectives.

In logistic regression, before applying the sigmoid, we compute a logit, which we can call f. It is a linear score that can take any real value, from −∞ to +∞:

- positive values correspond to one class,

- negative values correspond to the other,

- zero is the decision boundary.

Using labels -1 and 1 matches this interpretation naturally.

It emphasizes the sign of the logit, without going through probabilities.

So, we are working with a pure linear model, not within the GLM framework.

There is no sigmoid, no probability, only a linear decision score.

A compact way to express this idea is to look at the quantity:

y(ax + b) = y f(x)

- If this value is positive, the point is correctly classified.

- If it is large, the classification is confident.

- If it is negative, the point is misclassified.

At this point, we are still not talking about SVMs.

We are only making explicit what good classification means in a linear setting.
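As a small illustration, here is a Python sketch that computes y·f(x) for a few made-up points, assuming hypothetical coefficients a = 0.5 and b = −4 (not values from the article). It also converts 0/1 labels into −1/+1 labels with y = 2t − 1, so the sign of the product directly says whether a point is on the correct side.

```python
# Hypothetical coefficients of the linear score f(x) = a*x + b (illustration only)
a, b = 0.5, -4.0

def score(x):
    """Linear decision score f(x) = a*x + b; it can take any real value."""
    return a * x + b

def to_pm1(t):
    """Map a 0/1 label t to a -1/+1 label: y = 2t - 1."""
    return 2 * t - 1

# Made-up points as (x, 0/1 label)
points = [(2.0, 0), (7.0, 1), (12.0, 1)]

# y * f(x): the sign tells correct vs misclassified, the size tells confidence
margins = [to_pm1(t) * score(x) for x, t in points]
for (x, t), m in zip(points, margins):
    status = "correct" if m > 0 else "misclassified"
    print(f"x={x:5.1f}  y={to_pm1(t):+d}  y*f(x)={m:+.2f}  {status}")
```

Here the point x = 7 gets y·f(x) = −0.5 < 0: it is misclassified, because the hypothetical boundary f(x) = 0 sits at x = 8.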

From log-loss to a new loss function

With this convention, we can write the log-loss for logistic regression directly as a function of the quantity:

y f(x) = y (ax+b)

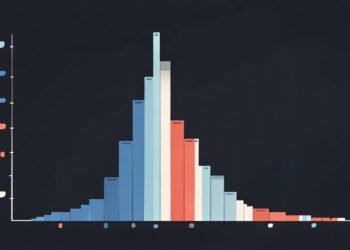

We can plot this loss as a function of yf(x).

Now, let us introduce a new loss function called the hinge loss.
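For reference, with labels y ∈ {−1, +1} and f(x) = ax + b, both losses can be written in terms of the single quantity yf(x). For the log-loss, −ln σ(f) when y = 1 and −ln(1 − σ(f)) = −ln σ(−f) when y = −1 combine into one expression. The hinge loss, in its standard form, uses a threshold of 1:

$$
\ell_{\log}\bigl(y f(x)\bigr) = \ln\left(1 + e^{-y f(x)}\right),
\qquad
\ell_{\text{hinge}}\bigl(y f(x)\bigr) = \max\bigl(0,\ 1 - y f(x)\bigr)
$$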

When we plot the two losses on the same graph, we can see that they are quite similar in shape.

Do you remember Gini vs. Entropy in Decision Tree Classifiers?

The comparison is very similar here.

In both cases, the idea is to penalize:

- points that are misclassified (yf(x) < 0),

- points that are too close to the decision boundary.

The difference is in how this penalty is applied.

- The log-loss penalizes errors in a smooth and progressive way. Even well-classified points are still slightly penalized.

- The hinge loss is more direct and abrupt. Once a point is correctly classified with a sufficient margin (yf(x) ≥ 1), it is no longer penalized at all.
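A quick numeric sketch of this difference evaluates both losses, in their standard forms ln(1 + e^(−m)) and max(0, 1 − m), at a few hand-picked values of m = y·f(x):

```python
import math

def log_loss(m):
    """Logistic loss written with the margin m = y*f(x): ln(1 + e^(-m))."""
    return math.log1p(math.exp(-m))

def hinge_loss(m):
    """Hinge loss written with the margin m = y*f(x): max(0, 1 - m)."""
    return max(0.0, 1.0 - m)

# From a clearly misclassified point (m < 0) to a very confident one (m >> 1)
for m in [-2.0, 0.0, 0.5, 1.0, 2.0, 4.0]:
    print(f"y*f(x)={m:+.1f}  log-loss={log_loss(m):.4f}  hinge={hinge_loss(m):.4f}")
```

From y·f(x) = 1 onward, the hinge column is exactly zero, while the log-loss keeps shrinking without ever reaching zero.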

So the goal is not to change what we consider a good or bad classification, but to simplify the way we penalize it.

One question naturally follows.

Could we also use a squared loss?

After all, linear regression can also be used as a classifier.

But when we do this, we immediately see the problem:

the squared loss keeps penalizing points that are already very well classified.

Instead of focusing on the decision boundary, the model tries to fit exact numeric targets.

This is why linear regression is usually a poor classifier, and why the choice of the loss function matters so much.
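A one-line derivation makes this visible. With labels y ∈ {−1, +1} we have y² = 1, so y − f(x) = y(1 − yf(x)), and the squared loss becomes a function of yf(x) as well:

$$
\bigl(y - f(x)\bigr)^2 = y^2\bigl(1 - y f(x)\bigr)^2 = \bigl(1 - y f(x)\bigr)^2
$$

For a point classified with yf(x) = 3, the squared loss is (1 − 3)² = 4, while the hinge loss max(0, 1 − 3) is 0: the squared loss pulls the score of a very well classified point back toward exactly ±1.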

Description of the new model

Let us now assume that the model is already trained and look directly at the results.

For both models, we compute exactly the same quantities:

- the linear score (called the logit for Logistic Regression),

- the probability (we can simply apply the sigmoid function in both cases),

- and the loss value.

This allows a direct, point-by-point comparison between the two approaches.

Although the loss functions are different, the linear scores and the resulting classifications are very similar on this dataset.

For the completely separable dataset, the result is immediate: all points are correctly classified and lie sufficiently far from the decision boundary. As a consequence, the hinge loss is equal to zero for every observation.

This leads to an important conclusion.

When the data is perfectly separable, there is no unique solution. In fact, there are infinitely many linear decision functions that achieve exactly the same result. We can shift the line, rotate it slightly, or scale the coefficients up, and the classification remains perfect, with zero loss everywhere.

So what do we do next?

We introduce regularization.

Just as in ridge regression, we add a penalty on the size of the coefficients. This additional term does not improve classification accuracy, but it allows us to select one solution among all the possible ones.

So, on our dataset, we get the one with the smallest slope a.
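A small sketch of this selection, on a hypothetical separable dataset whose closest points of opposite classes sit at x = 6 and x = 10 (made up for illustration, not the article's data). Every candidate line has zero total hinge loss, so only the L2 penalty a² can break the tie. Here a = 0.5 is in fact the smallest slope keeping every point at y·f(x) ≥ 1, since a·10 + b ≥ 1 and a·6 + b ≤ −1 together imply 4a ≥ 2.

```python
# Hypothetical one-feature, perfectly separable data (illustration only)
xs = [1.0, 3.0, 6.0, 10.0, 12.0, 15.0]
ys = [-1, -1, -1, 1, 1, 1]

def total_hinge(a, b):
    """Sum of max(0, 1 - y*(a*x + b)) over the dataset."""
    return sum(max(0.0, 1.0 - y * (a * x + b)) for x, y in zip(xs, ys))

# A few lines (shifted, steeper) that all separate the data with enough margin
candidates = [(0.5, -4.0), (1.0, -8.0), (2.0, -17.0), (3.0, -25.0)]
for a, b in candidates:
    print(f"a={a}, b={b}: hinge={total_hinge(a, b)}, boundary x={-b / a:.3f}")

# The data term cannot choose between them; the penalty a**2 can
zero_loss = [(a, b) for a, b in candidates if total_hinge(a, b) == 0.0]
a_best, b_best = min(zero_loss, key=lambda ab: ab[0] ** 2)
print("selected:", a_best, b_best, "boundary x =", -b_best / a_best)
```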

And congratulations, we have just built the SVM model.

We can now easily write down the cost functions of the two models: Logistic Regression and SVM.
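Written with the same score f(xᵢ) = axᵢ + b and the same L2 penalty on the slope (λ > 0 is the regularization strength; leaving the bias b unpenalized is a common choice assumed here), the two cost functions differ only in the loss term:

$$
J_{\text{LogReg}}(a, b) = \sum_{i=1}^{n} \ln\left(1 + e^{-y_i (a x_i + b)}\right) + \lambda a^2
$$

$$
J_{\text{SVM}}(a, b) = \sum_{i=1}^{n} \max\bigl(0,\ 1 - y_i (a x_i + b)\bigr) + \lambda a^2
$$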

Remember that Logistic Regression can be regularized and is still called Logistic Regression, right?

Now, why does the model include the term “Support Vectors”?

If you look at the dataset, you can see that only a few points, for example those with values 6 and 10, are enough to determine the decision boundary. These points are called support vectors.

At this stage, with the perspective we are using, we cannot identify them directly.

We will see later that another viewpoint makes them appear naturally.

We can do the same exercise with the other, non-separable dataset, and the principle is the same. Nothing changes.

But now, we can see that for some points the hinge loss is not zero. In our case below, we can see visually that four points are needed as support vectors.

SVM Model Training with Gradient Descent

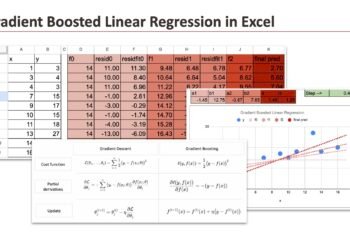

We now train the SVM model explicitly, using gradient descent.

Nothing new is introduced here. We reuse the same optimization logic we already applied to linear and logistic regression.

New convention: Lambda (λ) or C

In many models we studied previously, such as ridge or logistic regression, the objective function is written as:

data-fit loss + λ∥w∥²

Here, the regularization parameter λ controls the penalty on the size of the coefficients.

For SVMs, the usual convention is slightly different: we put C in front of the data-fit term instead.

Both formulations are equivalent.

They only differ by a rescaling of the objective function.
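Assuming the common SVM form with a factor ½ on the penalty, dividing the objective by C > 0 recovers the λ form. A positive rescaling does not move the minimizer, so both give the same (a, b):

$$
\frac{1}{2}a^2 + C\sum_{i=1}^{n}\max\bigl(0,\ 1 - y_i(a x_i + b)\bigr)
= C\left[\sum_{i=1}^{n}\max\bigl(0,\ 1 - y_i(a x_i + b)\bigr) + \frac{1}{2C}\,a^2\right],
\qquad \lambda = \frac{1}{2C}
$$

A large C therefore means a small λ: weak regularization and few tolerated margin violations.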

We keep the parameter C because it is the standard notation used for SVMs, and we will see later why this convention exists.

Gradient (subgradient)

We work with a linear decision function, and we can define the margin of each point as $m_i = y_i(a x_i + b)$.

Only observations with $m_i < 1$ contribute to the hinge loss.

The subgradients of the objective are as follows, and we can implement them in Excel using logical masks and SUMPRODUCT.
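As a sketch of what these subgradients look like, assuming the objective J(a, b) = ½a² + C Σᵢ max(0, 1 − mᵢ) (the ½ and the unpenalized bias are common choices, not confirmed by the text) and taking 0 as the subgradient at the kink mᵢ = 1:

$$
\frac{\partial J}{\partial a} = a - C \sum_{i:\, m_i < 1} y_i x_i,
\qquad
\frac{\partial J}{\partial b} = -C \sum_{i:\, m_i < 1} y_i
$$

In a spreadsheet, each masked sum can be a single SUMPRODUCT, for example SUMPRODUCT((M<1)*Y*X), where M, Y and X stand for hypothetical ranges holding mᵢ, yᵢ and xᵢ.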

Parameter update

With a learning rate (or step size) η, the gradient descent updates follow the usual formula:

We iterate these updates until convergence.

And, by the way, this training procedure also gives us something very nice to visualize. At each iteration, as the coefficients are updated, the width of the margin changes.

So we can visualize, step by step, how the margin evolves during the learning process.
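A minimal sketch of this training loop in plain Python. It assumes the objective ½a² + C Σ max(0, 1 − mᵢ), a constant learning rate, and a hypothetical, deliberately symmetric dataset whose hard-margin solution is a = 1, b = 0 (support vectors at x = −1 and x = 1), so that the result can be checked by hand. It also records the margin width 2/|a| at each iteration, which is the quantity to plot to watch the margin evolve:

```python
# Hypothetical, deliberately symmetric data so the optimum is easy to check
# by hand: the hard-margin solution is a = 1, b = 0 (support vectors x = -1, 1).
xs = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]
ys = [-1, -1, -1, 1, 1, 1]

C = 1.0       # weight of the hinge term (SVM convention)
lr = 0.01     # learning rate (step size) eta
n_iter = 1000

a, b = 0.0, 0.0
margin_widths = []  # distance between f(x) = -1 and f(x) = +1, i.e. 2/|a|

for _ in range(n_iter):
    # Margins m_i = y_i (a x_i + b); only points with m_i < 1 are kept (the mask)
    active = [(x, y) for x, y in zip(xs, ys) if y * (a * x + b) < 1.0]
    # Subgradients of J(a, b) = 0.5 a^2 + C * sum(max(0, 1 - m_i))
    grad_a = a - C * sum(y * x for x, y in active)
    grad_b = -C * sum(y for _, y in active)
    # Usual gradient descent update
    a -= lr * grad_a
    b -= lr * grad_b
    if a != 0.0:
        margin_widths.append(2.0 / abs(a))

print(f"a = {a:.3f}, b = {b:.3f}, margin width = {2.0 / abs(a):.3f}")
print("first widths:", [round(w, 2) for w in margin_widths[:3]])
```

The recorded widths start very large (a is tiny after the first step) and settle around 2 as a approaches 1. With a constant step, subgradient descent keeps oscillating slightly around the optimum instead of landing on it exactly; a decreasing step size is the usual remedy.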

Optimization vs. geometric formulation of SVM

The figure below shows the same objective function of the SVM model written in two different languages.

On the left, the model is expressed as an optimization problem.

We minimize a combination of two things:

- a term that keeps the model simple, by penalizing large coefficients,

- and a term that penalizes classification errors or margin violations.

This is the view we have been using so far. It is natural when we think in terms of loss functions, regularization, and gradient descent. It is the most convenient form for implementation and optimization.

On the right, the same model is expressed in a geometric way.

Instead of talking about losses, we talk about:

- margins,

- constraints,

- and distances to the separating boundary.

When the data is perfectly separable, the model looks for the separating line with the largest possible margin, without allowing any violation. This is the hard-margin case.

When perfect separation is impossible, violations are allowed, but they are penalized. This leads to the soft-margin case.

What is important to understand is that these two views are strictly equivalent.

The optimization formulation automatically enforces the geometric constraints:

- penalizing large coefficients corresponds to maximizing the margin,

- penalizing hinge violations corresponds to allowing, but controlling, margin violations.
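Written out with the one-feature notation used here, the standard geometric forms are as follows (the ξᵢ are slack variables, a textbook device not introduced in the text). For fixed (a, b), the smallest slack satisfying the soft-margin constraints is exactly the hinge loss, which is why substituting it back gives the optimization form:

$$
\text{hard margin:}\quad \min_{a,b}\ \tfrac{1}{2}a^2 \quad \text{s.t.}\quad y_i(a x_i + b) \ge 1 \ \ \text{for all } i
$$

$$
\text{soft margin:}\quad \min_{a,b,\xi}\ \tfrac{1}{2}a^2 + C\sum_{i} \xi_i \quad \text{s.t.}\quad y_i(a x_i + b) \ge 1 - \xi_i,\ \ \xi_i \ge 0
$$

$$
\xi_i^{\star} = \max\bigl(0,\ 1 - y_i(a x_i + b)\bigr)
$$

The margin side of the equivalence is just as short: the lines f(x) = +1 and f(x) = −1 lie at x = (1 − b)/a and x = (−1 − b)/a, so the margin width is 2/|a|, and making a² small makes the margin wide.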

So these are not two different models, nor two different ideas.

It is the same SVM, seen from two complementary perspectives.

Once this equivalence is clear, the SVM becomes much less mysterious: it is simply a linear model with a specific way of measuring errors and controlling complexity, which naturally leads to the maximum-margin interpretation everyone knows.

Unified Linear Classifier

From the optimization viewpoint, we can now take a step back and look at the bigger picture.

What we have built is not just “the SVM”, but a general linear classification framework.

A linear classifier is defined by three independent choices:

- a linear decision function,

- a loss function,

- a regularization term.

Once this is clear, many models appear as simple combinations of these components.

In practice, this is exactly what we can do with SGDClassifier in scikit-learn.

From the same viewpoint, we can:

- combine the hinge loss with L1 regularization,

- replace the hinge loss with the squared hinge loss,

- use log-loss, hinge loss, or other margin-based losses,

- choose L2 or L1 penalties depending on the desired behavior.

Each choice changes how errors are penalized or how coefficients are controlled, but the underlying model stays the same: a linear decision function trained by optimization.
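To make the three independent choices concrete, here is a hand-rolled sketch where the loss and the penalty are interchangeable parts of one full-batch subgradient-descent trainer. The names `LOSSES`, `PENALTIES`, `fit_linear` and `predict` are hypothetical and the toy data is made up. scikit-learn's `SGDClassifier` exposes the same kind of choices through its `loss` (for example `"hinge"`, `"squared_hinge"`, `"log_loss"` in recent versions) and `penalty` (`"l2"`, `"l1"`, `"elasticnet"`) parameters, but its stochastic implementation differs from this sketch.

```python
import math

# Losses written as functions of the margin m = y * f(x).
# Each returns d(loss)/dm; at a kink we pick one valid subgradient.
def hinge_grad(m):
    return -1.0 if m < 1.0 else 0.0

def squared_hinge_grad(m):
    return -2.0 * max(0.0, 1.0 - m)

def log_loss_grad(m):
    # derivative of ln(1 + e^(-m))
    return -1.0 / (1.0 + math.exp(m))

LOSSES = {"hinge": hinge_grad, "squared_hinge": squared_hinge_grad, "log_loss": log_loss_grad}

# Subgradients of the penalty on the slope a (the bias b is not penalized):
# "l2" is a**2, "l1" is |a|
PENALTIES = {
    "l2": lambda a: 2.0 * a,
    "l1": lambda a: math.copysign(1.0, a) if a != 0.0 else 0.0,
}

def fit_linear(xs, ys, loss="hinge", penalty="l2", lam=0.01, lr=0.05, n_iter=2000):
    """Subgradient descent on mean(loss(y*(a*x+b))) + lam * penalty(a)."""
    dloss, dpen = LOSSES[loss], PENALTIES[penalty]
    a, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_iter):
        grad_a = grad_b = 0.0
        for x, y in zip(xs, ys):
            g = dloss(y * (a * x + b))  # d loss / d m
            grad_a += g * y * x / n      # chain rule: dm/da = y*x
            grad_b += g * y / n          # chain rule: dm/db = y
        grad_a += lam * dpen(a)
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b

def predict(a, b, x):
    """Same linear decision function for every loss/penalty combination."""
    return 1 if a * x + b >= 0 else -1

# Hypothetical separable toy data (illustration only)
xs = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]
ys = [-1, -1, -1, 1, 1, 1]

for loss in LOSSES:
    for penalty in PENALTIES:
        a, b = fit_linear(xs, ys, loss=loss, penalty=penalty)
        acc = sum(predict(a, b, x) == y for x, y in zip(xs, ys)) / len(xs)
        print(f"{loss:>13} + {penalty}: a={a:.3f}, b={b:.3f}, accuracy={acc:.2f}")
```

Swapping a loss or a penalty only swaps one small function; the score a·x + b and the descent loop never change.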

Primal vs Dual Formulation

You may have already heard about the dual form of SVM.

So far, we have worked entirely in the primal form:

- we optimized the model coefficients directly,

- using loss functions and regularization.

The dual form is another way to write the same optimization problem.

Instead of assigning weights to features, the dual form assigns a coefficient, usually called alpha, to each data point.

We will not derive or implement the dual form in Excel, but we can still observe its result.

Using scikit-learn, we can compute the alpha values and verify that:

- the primal and dual forms lead to the same model,

- with the same decision boundary and the same predictions.

What makes the dual form particularly interesting for SVM is that:

- most alpha values are exactly zero,

- only a few data points have non-zero alpha.

These points are the support vectors.
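A minimal check with scikit-learn, assuming a recent version and hypothetical one-feature data (not the article's dataset). For a linear kernel, `SVC` exposes the dual solution through `support_`, `support_vectors_` and `dual_coef_` (which stores yᵢαᵢ for the support vectors only), and the primal slope through `coef_`:

```python
import numpy as np
from sklearn.svm import SVC

# Hypothetical separable one-feature data (illustration only)
X = np.array([[1.0], [3.0], [6.0], [10.0], [12.0], [15.0]])
y = np.array([-1, -1, -1, 1, 1, 1])

clf = SVC(kernel="linear", C=1.0).fit(X, y)

# dual_coef_ holds y_i * alpha_i, but only for the support vectors;
# every other point has alpha_i = 0 and is not stored at all.
alphas = np.zeros(len(X))
alphas[clf.support_] = np.abs(clf.dual_coef_[0])
print("alpha per point:", np.round(alphas, 3))
print("support vectors:", clf.support_vectors_.ravel())

# Primal slope rebuilt from the dual: w = sum_i alpha_i * y_i * x_i
w_from_dual = (alphas * y) @ X
print("slope from primal view:", clf.coef_.ravel(), " from dual:", w_from_dual)
print("intercept:", clf.intercept_)
```

Only the two points closest to the boundary receive a non-zero alpha; removing any other point would leave the fitted line unchanged.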

This behavior is specific to margin-based losses like the hinge loss.

Finally, the dual form also explains why SVMs can use the kernel trick.

By working with similarities between data points, we can build non-linear classifiers without changing the optimization framework.

We will see this tomorrow.

Conclusion

In this article, we did not approach SVM as a geometric object with complicated formulas. Instead, we built it step by step, starting from models we already know.

By changing only the loss function, then adding regularization, we naturally arrived at the SVM. The model did not change. Only the way we penalize errors did.

Seen this way, SVM is not a new family of models. It is a natural extension of linear and logistic regression, seen through a different loss.

We also showed that:

- the optimization view and the geometric view are equivalent,

- the maximum-margin interpretation comes directly from regularization,

- and the notion of support vectors emerges naturally from the dual perspective.

Once these links are clear, SVM becomes much easier to understand and to place among other linear classifiers.

In the next step, we will use this new perspective to go further, and see how kernels extend this idea beyond linear models.