In the context of Language Models and Agentic AI, memory and grounding are both hot and emerging fields of research. Although the two terms often appear side by side and are frequently related, they serve different functions in practice. In this article, I hope to clear up the confusion around these two terms and demonstrate how memory can play a role in the overall grounding of a model.

In my last article, we discussed the important role of memory in Agentic AI. Memory in language models refers to the ability of AI systems to retain and recall pertinent information, contributing to their ability to reason and continuously learn from their experiences. Memory can be thought of in four categories: short term memory, short long term memory, long term memory, and working memory.

It sounds complex, but let’s break them down simply:

Short Term Memory (STM):

STM retains information for a very brief period of time, which could be seconds to minutes. If you ask a language model a question, it needs to retain your messages for long enough to generate an answer to your question. Just like people, language models struggle to remember too many things simultaneously.

A common description of Miller’s law states that “Short-term memory is a component of memory that holds a small amount of information in an active, readily available state for a brief interval, typically a few seconds to a minute. The duration of STM seems to be between 15 and 30 seconds, and STM’s capacity is limited, often thought to be about 7±2 items.”

So if you ask a language model “what genre is that book that I mentioned in my previous message?” it needs to use its short term memory to reference recent messages and generate a relevant response.

Implementation:

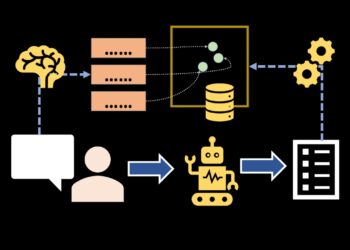

Context is stored in external systems, such as session variables or databases, which hold a portion of the conversation history. Each new user input and assistant response is appended to the existing context to create the conversation history. During inference, this context is sent along with the user’s new query to the language model to generate a response that considers the entire conversation. This research paper offers a more in-depth view of the mechanisms that enable short term memory.
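The append-and-resend loop described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the `ShortTermMemory` class, the `max_messages` limit, and the role/content message shape are all assumptions made for the example.

```python
from collections import deque


class ShortTermMemory:
    """Keeps only the most recent messages of a conversation (hypothetical helper)."""

    def __init__(self, max_messages: int = 6):
        # A deque with maxlen silently drops the oldest message once it is full
        self.messages = deque(maxlen=max_messages)

    def append(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

    def build_prompt(self, new_query: str) -> list:
        # The stored context is sent along with the user's new query
        return list(self.messages) + [{"role": "user", "content": new_query}]


memory = ShortTermMemory(max_messages=4)
memory.append("user", "I just finished reading Dune.")
memory.append("assistant", "Great choice! What did you think of it?")
prompt = memory.build_prompt("What genre is that book I mentioned?")
```

Capping the buffer mirrors the limited capacity of short term memory: once the limit is reached, the oldest messages fall out of the context that the model sees.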

Short Long Term Memory (SLTM):

SLTM retains information for a moderate period, which can be minutes to hours. For example, within the same session, you can pick back up where you left off in a conversation without having to repeat context because it has been stored as SLTM. Like STM, this is an external process rather than part of the language model itself.

Implementation:

Sessions can be managed using identifiers that link user interactions over time. Context data is stored somewhere it can persist across user interactions within a defined period, such as a database. When a user resumes a conversation, the system can retrieve the conversation history from previous sessions and pass it to the language model during inference. Much like in short term memory, each new user input and assistant response is appended to the existing context to keep the conversation history current.
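As a rough sketch of this pattern, the example below keys conversation history by a session identifier and treats it as expired after a retention window. The table layout, the two-hour `SESSION_TTL_SECONDS` value, and the function names are assumptions chosen for illustration; a production system would pick its own schema and expiry policy.

```python
import json
import sqlite3
import time

SESSION_TTL_SECONDS = 2 * 60 * 60  # assumed retention window: two hours


def open_store() -> sqlite3.Connection:
    # An in-memory database keeps the example self-contained;
    # a file path would persist across restarts
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE sessions (session_id TEXT PRIMARY KEY, history TEXT, updated_at REAL)"
    )
    return conn


def load_history(conn, session_id, now=None):
    """Return the stored history, or an empty list if it is missing or expired."""
    now = time.time() if now is None else now
    row = conn.execute(
        "SELECT history, updated_at FROM sessions WHERE session_id = ?", (session_id,)
    ).fetchone()
    if row is None or now - row[1] > SESSION_TTL_SECONDS:
        return []
    return json.loads(row[0])


def append_turn(conn, session_id, role, content, now=None):
    """Append one message to the session and refresh its timestamp."""
    now = time.time() if now is None else now
    history = load_history(conn, session_id, now)
    history.append({"role": role, "content": content})
    conn.execute(
        "INSERT OR REPLACE INTO sessions VALUES (?, ?, ?)",
        (session_id, json.dumps(history), now),
    )
    conn.commit()


conn = open_store()
append_turn(conn, "session-42", "user", "Let's plan my trip to Lisbon.", now=0)
append_turn(conn, "session-42", "assistant", "Sure, how many days?", now=60)
resumed = load_history(conn, "session-42", now=1800)       # within the window
expired = load_history(conn, "session-42", now=10 * 3600)  # past the window
```

Here `resumed` contains both earlier turns, so the user can pick up where they left off, while `expired` comes back empty because the session outlived the retention window.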

Long Term Memory (LTM):

LTM retains information for an admin-defined amount of time, which could be indefinite. For example, if we were to build an AI tutor, it would be important for the language model to understand which subjects the student performs well in, where they still struggle, which learning styles work best for them, and more. This way, the model can recall relevant information to inform its future teaching plans. Squirrel AI is an example of a platform that uses long term memory to “craft personalized learning pathways, engages in targeted teaching, and provides emotional intervention when needed”.

Implementation:

Information can be stored in structured databases, knowledge graphs, or document stores that are queried as needed. Relevant information is retrieved based on the user’s current interaction and past history. This provides context for the language model, which is passed in alongside the user’s message or as part of the system prompt.
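To make the AI tutor example concrete, here is a minimal sketch of a long term store that feeds a system prompt. The `StudentProfile` fields, the `profiles` dictionary standing in for a structured database, and the prompt wording are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class StudentProfile:
    strengths: list = field(default_factory=list)
    struggles: list = field(default_factory=list)
    learning_style: str = "unknown"


# Stand-in for a structured database keyed by student ID
profiles = {}


def remember(student_id, *, strength=None, struggle=None, learning_style=None):
    """Record a new observation about a student, creating the profile if needed."""
    profile = profiles.setdefault(student_id, StudentProfile())
    if strength and strength not in profile.strengths:
        profile.strengths.append(strength)
    if struggle and struggle not in profile.struggles:
        profile.struggles.append(struggle)
    if learning_style:
        profile.learning_style = learning_style


def build_system_prompt(student_id):
    """Turn the stored profile into context for the language model."""
    profile = profiles.get(student_id, StudentProfile())
    return (
        "You are a tutor. "
        f"Strengths: {', '.join(profile.strengths) or 'none recorded'}. "
        f"Struggles: {', '.join(profile.struggles) or 'none recorded'}. "
        f"Preferred learning style: {profile.learning_style}."
    )


remember("student-1", strength="algebra", learning_style="visual")
remember("student-1", struggle="fractions")
system_prompt = build_system_prompt("student-1")
```

Because the profile lives outside the model, it survives across sessions and can be updated whenever the tutor learns something new about the student.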

Working Reminiscence:

Working memory is a component of the language model itself (unlike the other types of memory, which are external processes). It enables the language model to hold information, manipulate it, and refine it, improving the model’s ability to reason. This is important because as the model processes the user’s ask, its understanding of the task and the steps it needs to take to execute it can change. You can think of working memory as the model’s own scratch pad for its thoughts. For example, when given a multistep math problem such as (5 + 3) * 2, the language model needs to compute the sum inside the parentheses (5 + 3 = 8), store that intermediate result, and then multiply it by 2 to get 16. If you’re interested in digging deeper into this subject, the paper “TransformerFAM: Feedback attention is working memory” offers a new approach to extending working memory and enabling a language model to process inputs of unlimited length.
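The scratch pad idea can be illustrated with the same arithmetic. This is only an analogy: a language model does not literally keep a Python dictionary, and the `scratchpad` name is made up for the example.

```python
scratchpad = {}

# Step 1: evaluate the parentheses and keep the intermediate result
scratchpad["sum"] = 5 + 3

# Step 2: reuse the stored value instead of recomputing it
scratchpad["result"] = scratchpad["sum"] * 2

print(scratchpad)  # {'sum': 8, 'result': 16}
```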

Implementation:

Mechanisms like attention layers in transformers or hidden states in recurrent neural networks (RNNs) are responsible for maintaining intermediate computations and provide the ability to manipulate intermediate results within the same inference session. As the model processes input, it updates its internal state, which enables stronger reasoning abilities.
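A toy recurrent update shows what it means for a model to carry an internal state from one input to the next. The scalar weights `w_hidden` and `w_input` are made-up values for illustration; real networks use learned weight matrices and much larger state vectors.

```python
import math


def rnn_step(hidden, x, w_hidden=0.5, w_input=1.0):
    """One recurrent update: the new state mixes the old state and the new input."""
    return math.tanh(w_hidden * hidden + w_input * x)


def run(sequence):
    hidden = 0.0  # empty state before any input is seen
    for x in sequence:
        hidden = rnn_step(hidden, x)
    return hidden


state_a = run([1.0, 0.0])  # the first input leaves a trace in the final state
state_b = run([0.0, 0.0])  # nothing was seen, so the state stays at zero
```

Both sequences end with the same input, yet `state_a` and `state_b` differ, because the state after the first step is carried into the second.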

All four types of memory are important components of an AI system that can effectively manage and use information across various timeframes and contexts.

The response from a language model should always make sense in the context of the conversation; it shouldn’t just be a collection of factual statements. Grounding measures the ability of a model to produce an output that is contextually relevant and meaningful. The process of grounding a language model can be a combination of language model training, fine-tuning, and external processes (including memory!).

Language Model Training and Fine Tuning

The data that the model is initially trained on will make a substantial difference in how grounded the model is. Training a model on a large corpus of diverse data enables it to learn language patterns, grammar, and semantics in order to predict the next most relevant word. The pre-trained model is then fine-tuned on domain-specific data, which helps it generate more relevant and accurate outputs for particular applications that require deeper domain-specific knowledge. This is especially important if you require the model to perform well on specific texts that it might not have been exposed to during its initial training. Although our expectations of a language model’s capabilities are high, we can’t expect it to perform well on something it has never seen before, just as we wouldn’t expect a student to perform well on an exam if they hadn’t studied the material.
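A toy bigram counter gives a feel for how training data shapes next-word prediction and how additional domain data shifts it. This is a deliberately simplified stand-in: real language models learn neural network weights rather than word-pair counts, and the two small corpora below are invented for the example.

```python
from collections import Counter, defaultdict


def train_bigram(corpus, counts=None):
    """Count which word follows which; pass existing counts to keep training."""
    counts = defaultdict(Counter) if counts is None else counts
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, word):
    followers = counts.get(word.lower())
    if not followers:
        return None  # the model has never seen this word followed by anything
    return followers.most_common(1)[0][0]


general_corpus = ["the patient is waiting", "the bus is late"]
domain_corpus = ["the patient presents with fever", "the patient presents with cough"]

model = train_bigram(general_corpus)
before = predict_next(model, "patient")    # "is"
unseen = predict_next(model, "presents")   # None: not in the general corpus

train_bigram(domain_corpus, counts=model)  # continue training on domain data
after = predict_next(model, "patient")     # "presents"
```

Before the domain data is added, the model has nothing to say about “presents”, which is the exam the student never studied for. Afterwards, its prediction for “patient” shifts toward the clinical phrasing.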

External Context

Providing the model with real-time or up-to-date context-specific information also helps it stay grounded. There are many ways of doing this, such as integrating it with external knowledge bases, APIs, and real-time data. Retrieving relevant information in this way and adding it to the prompt is known as Retrieval Augmented Generation (RAG).
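The retrieval step can be sketched as follows. This example ranks documents with a simple bag-of-words cosine similarity, which is a stand-in for the embedding-based search that RAG systems commonly use; the `knowledge_base` contents, the function names, and the prompt template are assumptions for illustration.

```python
import math
from collections import Counter


def vectorize(text):
    """Represent text as word counts (a crude substitute for embeddings)."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, vectorize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


knowledge_base = [
    "The library opens at 9 am on weekdays.",
    "Parking is free after 6 pm.",
    "The cafeteria serves lunch from noon to 2 pm.",
]
prompt = build_prompt("When does the library open?", knowledge_base)
```

The prompt that reaches the model now contains the library hours, so the answer can be grounded in retrieved text rather than in whatever the model absorbed during training.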

Memory Systems

Memory systems in AI play a crucial role in ensuring that the system remains grounded in its previously taken actions, lessons learned, performance over time, and experience with users and other systems. The four types of memory outlined earlier in this article help a language model stay context-aware and produce relevant outputs. Memory systems work in tandem with grounding techniques like training, fine-tuning, and external context integration to enhance the model’s overall performance and relevance.

Memory and grounding are interconnected elements that enhance the performance and reliability of AI systems. While memory enables AI to retain and manipulate information across different timeframes, grounding ensures that the AI’s outputs are contextually relevant and meaningful. By integrating memory systems and grounding techniques, AI systems can achieve a higher level of understanding and effectiveness in their interactions and tasks.