In this article, you’ll learn how vector databases power fast, scalable similarity search for modern machine learning applications and when to use them effectively.

Topics we will cover include:

- Why conventional database indexing breaks down for high-dimensional embeddings.

- The core ANN index families (HNSW, IVF, PQ) and their trade-offs.

- Production concerns: recall vs. latency tuning, scaling, filtering, and vendor choices.

Let’s get started!

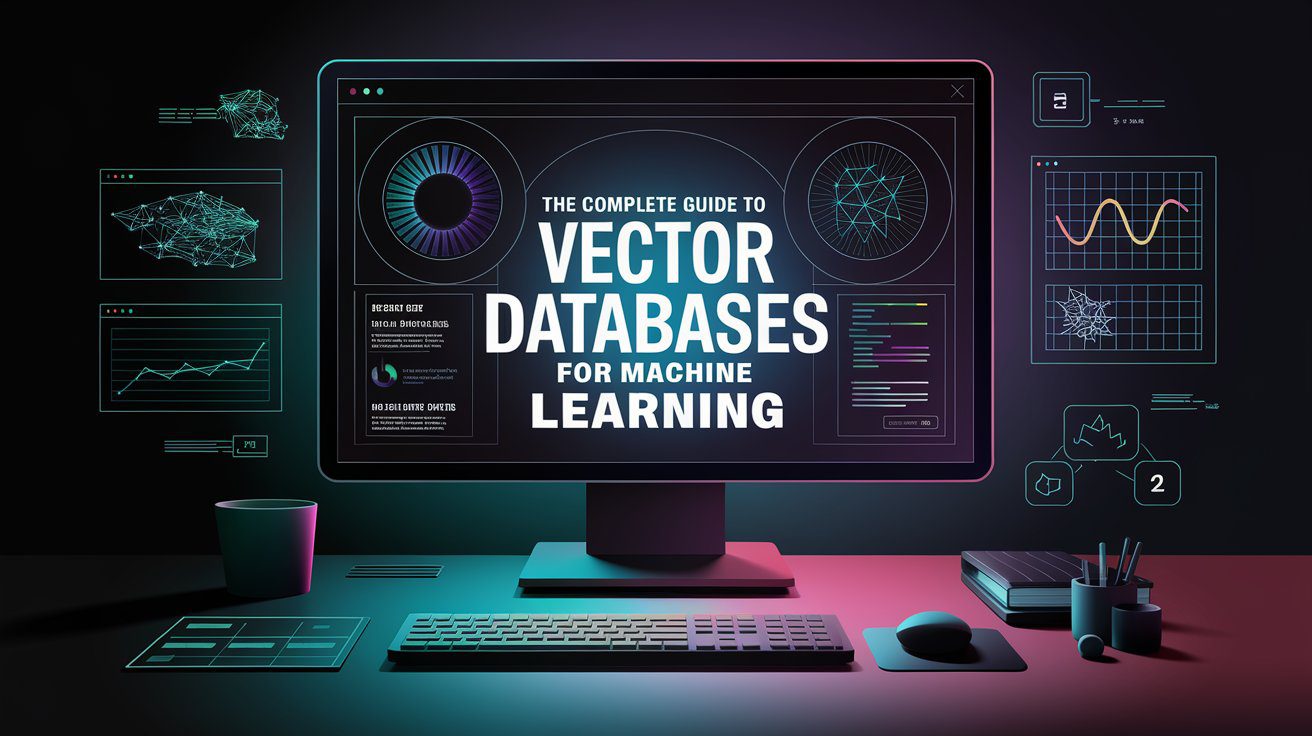

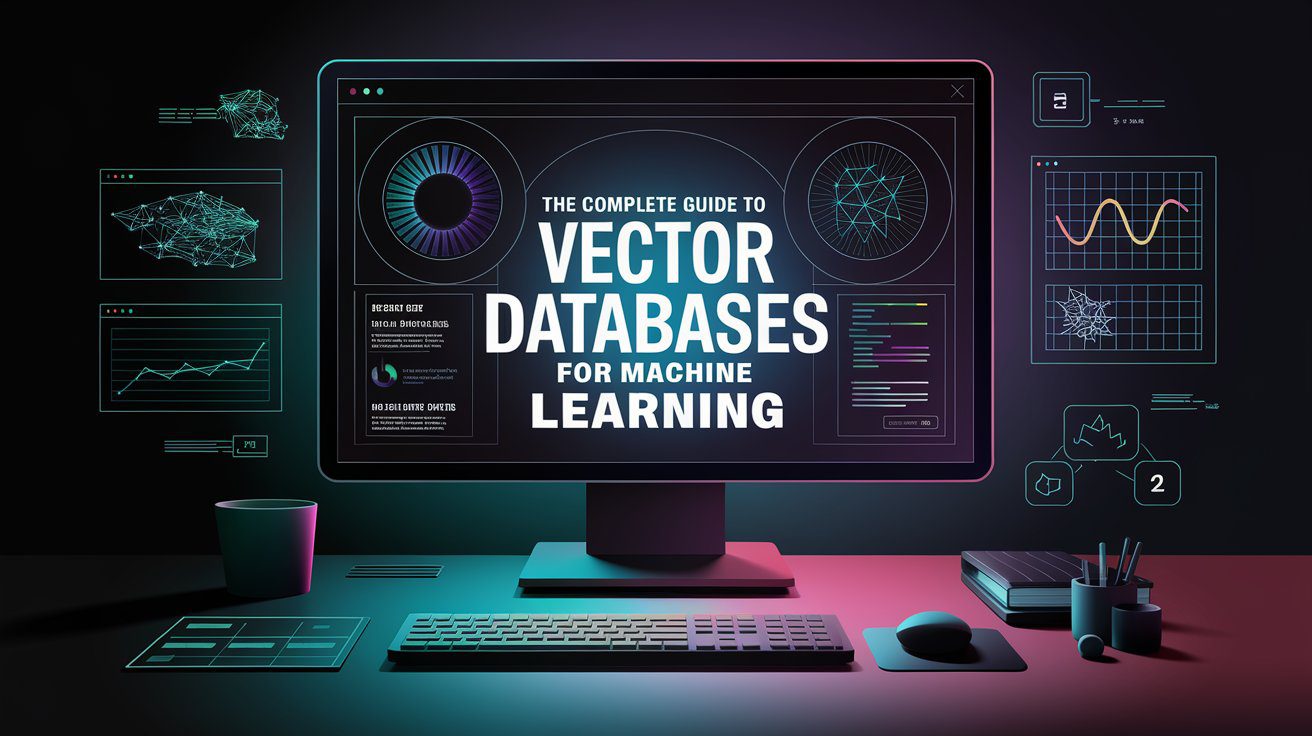

The Complete Guide to Vector Databases for Machine Learning

Image by Author

Introduction

Vector databases have become essential in most modern AI applications. If you’ve built anything with embeddings (semantic search, recommendation engines, RAG systems), you’ve likely hit the wall where traditional databases don’t quite suffice.

Building search applications sounds simple until you try to scale. When you move from a prototype to real data with millions of documents and hundreds of millions of vectors, you hit a roadblock. With brute-force search, each query compares your input against every vector in your database. With 1024- or 1536-dimensional vectors, that’s over a billion floating-point operations per million vectors searched. Your search feature becomes unusable.

Vector databases solve this with specialized algorithms that avoid brute-force distance calculations. Instead of checking every vector, they use techniques like hierarchical graphs and spatial partitioning to examine only a small percentage of candidates while still finding nearest neighbors. The key insight: you don’t need perfect results. Finding 10 highly similar items out of a million is nearly as good as finding the absolute top 10, but the approximate version can be a thousand times faster.

This article explains why vector databases are useful in machine learning applications, how they work under the hood, and when you actually need one. Specifically, it covers the following topics:

- Why traditional database indices fail for similarity search in high-dimensional spaces

- Key algorithms powering vector databases: HNSW, IVF, and Product Quantization

- Distance metrics and why your choice matters

- Understanding the recall-latency trade-off and tuning for production

- How vector databases handle scale through sharding, compression, and hybrid indices

- When you actually need a vector database versus simpler solutions

- An overview of major options: Pinecone, Weaviate, Chroma, Qdrant, Milvus, and others

Why Traditional Databases Aren’t Effective for Similarity Search

Traditional databases are highly efficient for exact matches. You do things like: find a user with ID 12345; retrieve products priced under $50. These queries rely on equality and comparison operators that map perfectly to B-tree indices.

But machine learning deals in embeddings, which are high-dimensional vectors that represent semantic meaning. Your search query “best Italian restaurants nearby” becomes a 1024- or 1536-dimensional array (typical sizes for the OpenAI and Cohere embedding models you’ll often use). Finding similar vectors, therefore, requires computing distances across hundreds or thousands of dimensions.

A naive approach would calculate the distance between your query vector and every vector in your database. For a million 1536-dimensional embeddings, that’s about 1.5 billion floating-point operations per query. Traditional indices can’t help because you’re not looking for exact matches; you’re looking for neighbors in high-dimensional space.
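To make that cost concrete, here is a minimal brute-force search sketch in NumPy. The function name `brute_force_search` and the random toy data are assumptions for illustration only; real embeddings would come from a model.

```python
import numpy as np

def brute_force_search(query, vectors, k=10):
    """Return indices and distances of the k stored vectors closest to `query`."""
    # One Euclidean distance per stored vector: O(N * d) work for every query.
    distances = np.linalg.norm(vectors - query, axis=1)
    k = min(k, len(vectors))
    # argpartition finds the k smallest distances without sorting all N of them.
    nearest = np.argpartition(distances, k - 1)[:k]
    nearest = nearest[np.argsort(distances[nearest])]
    return nearest, distances[nearest]

rng = np.random.default_rng(0)
vectors = rng.normal(size=(10_000, 128)).astype(np.float32)  # toy data, not real embeddings
# A query that is a slightly perturbed copy of stored vector 42.
query = vectors[42] + 0.01 * rng.normal(size=128).astype(np.float32)

ids, dists = brute_force_search(query, vectors, k=5)
print(ids[0])  # 42, the vector the query was derived from
```

Every query touches all N rows, which is why this approach stops being practical as N grows into the millions.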

This is where vector databases come in.

What Makes Vector Databases Different

Vector databases are purpose-built for similarity search. They organize vectors using specialized data structures that enable approximate nearest neighbor (ANN) search, trading perfect accuracy for dramatic speed improvements.

The key difference lies in the index structure. Instead of B-trees optimized for range queries, vector databases use algorithms designed for high-dimensional geometry. These algorithms exploit the structure of embedding spaces to avoid brute-force distance calculations.

A well-tuned vector database can search through millions of vectors in milliseconds, making real-time semantic search practical.

Some Core Concepts Behind Vector Databases

Vector databases rely on a handful of algorithmic approaches. Each makes different trade-offs between search speed, accuracy, and memory usage. I’ll go over three key vector index approaches here.

Hierarchical Navigable Small World (HNSW)

Hierarchical Navigable Small World (HNSW) builds a multi-layer graph structure where each layer contains a subset of vectors connected by edges. The top layer is sparse, containing only a few well-distributed vectors. Each lower layer adds more vectors and connections, with the bottom layer containing all vectors.

Search begins at the top layer and greedily navigates to the nearest neighbor. Once it can’t find anything closer, it moves down a layer and repeats. This continues until reaching the bottom layer, which returns the final nearest neighbors.

Hierarchical Navigable Small World (HNSW) | Image by Author

The hierarchical structure means you only examine a small fraction of vectors. Search complexity is roughly O(log N) instead of O(N), making it scale to millions of vectors efficiently.

HNSW offers excellent recall and speed but requires keeping the entire graph in memory. This makes it expensive for massive datasets but ideal for latency-sensitive applications.
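The layer-by-layer descent can be sketched in a few lines. This is a deliberately simplified toy, not the real HNSW algorithm. The helper names `build_layers` and `greedy_search` are hypothetical, neighbors are found by brute force at build time, and the search returns a single candidate. A real implementation inserts points incrementally and keeps a candidate list on the bottom layer (sized by a parameter such as `ef_search`).

```python
import numpy as np

def build_layers(vectors, num_layers=3, m=8, seed=0):
    """Toy layered graph. layers[0] holds every vector; each higher layer keeps
    about a quarter of the layer below. Neighbors are found by brute force,
    which is a simplification of how HNSW really builds its graph."""
    rng = np.random.default_rng(seed)
    members = np.arange(len(vectors))
    layers = []
    for _ in range(num_layers):
        graph = {}
        for i in members:
            d = np.linalg.norm(vectors[members] - vectors[i], axis=1)
            # Position 0 is the point itself (distance 0), so skip it.
            graph[int(i)] = [int(j) for j in members[np.argsort(d)[1:m + 1]]]
        layers.append(graph)
        members = rng.choice(members, size=max(1, len(members) // 4), replace=False)
    return layers

def greedy_search(query, vectors, layers):
    """Descend from the sparsest layer, walking to closer neighbors until stuck."""
    def dist(i):
        return float(np.linalg.norm(vectors[i] - query))

    current = next(iter(layers[-1]))  # arbitrary entry point in the top layer
    for graph in reversed(layers):
        improved = True
        while improved:
            improved = False
            for neighbor in graph[current]:
                if dist(neighbor) < dist(current):
                    current, improved = neighbor, True
    return current

rng = np.random.default_rng(1)
vectors = rng.normal(size=(1000, 16)).astype(np.float32)  # toy data
layers = build_layers(vectors)
query = rng.normal(size=16).astype(np.float32)
found = greedy_search(query, vectors, layers)
```

Because the walk is greedy, it can stop at a local minimum instead of the true nearest neighbor. That is the approximation being traded for speed.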

Inverted File Index (IVF)

Inverted File Index (IVF) partitions the vector space into regions using clustering algorithms like k-means. During indexing, each vector is assigned to its nearest cluster centroid. During search, you first identify the most relevant clusters, then search only within those clusters.

IVF: Partitioning Vector Space into Clusters | Image by Author

The trade-off is clear: search more clusters for better accuracy, fewer clusters for better speed. A typical configuration might search 10 out of 1,000 clusters, examining roughly 1% of vectors while maintaining over 90% recall.

IVF uses less memory than HNSW because it only loads relevant clusters during search. This makes it suitable for datasets too large for RAM. The downside is lower recall at the same speed, though adding product quantization can improve this trade-off.
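A minimal IVF sketch in NumPy follows, under stated assumptions: plain k-means for the centroids, everything held in memory, and hypothetical helper names (`train_centroids`, `build_ivf`, `ivf_search`). The `nprobe` argument mirrors the parameter name used by IVF implementations for the number of clusters to scan.

```python
import numpy as np

def assign_to_centroids(vectors, centroids):
    # Squared Euclidean distance from every vector to every centroid.
    d2 = ((vectors[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def train_centroids(vectors, n_clusters, n_iter=10, seed=0):
    """A few rounds of plain k-means (Lloyd's algorithm)."""
    rng = np.random.default_rng(seed)
    centroids = vectors[rng.choice(len(vectors), n_clusters, replace=False)].copy()
    for _ in range(n_iter):
        assign = assign_to_centroids(vectors, centroids)
        for c in range(n_clusters):
            members = vectors[assign == c]
            if len(members):  # keep the old centroid if a cluster empties out
                centroids[c] = members.mean(axis=0)
    return centroids

def build_ivf(vectors, centroids):
    """Inverted lists: cluster id -> indices of the vectors assigned to it."""
    assign = assign_to_centroids(vectors, centroids)
    return {c: np.flatnonzero(assign == c) for c in range(len(centroids))}

def ivf_search(query, vectors, centroids, lists, k=10, nprobe=4):
    # 1) Pick the nprobe clusters whose centroids are closest to the query.
    probe = np.argsort(np.linalg.norm(centroids - query, axis=1))[:nprobe]
    # 2) Run brute-force search over only the vectors in those clusters.
    candidates = np.concatenate([lists[int(c)] for c in probe])
    d = np.linalg.norm(vectors[candidates] - query, axis=1)
    return candidates[np.argsort(d)[:k]]

rng = np.random.default_rng(0)
vectors = rng.normal(size=(5000, 32)).astype(np.float32)  # toy data
centroids = train_centroids(vectors, n_clusters=32)
lists = build_ivf(vectors, centroids)
query = rng.normal(size=32).astype(np.float32)

approx = ivf_search(query, vectors, centroids, lists, k=5, nprobe=4)
exact = ivf_search(query, vectors, centroids, lists, k=5, nprobe=32)  # probing all clusters is exhaustive
```

Raising `nprobe` moves the result toward the exhaustive answer at the cost of scanning more vectors.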

Product Quantization (PQ)

Product quantization compresses vectors to reduce memory usage and speed up distance calculations. It splits each vector into subvectors, then clusters each subspace independently. During indexing, vectors are represented as sequences of cluster IDs rather than raw floats.

Product Quantization: Compressing High-Dimensional Vectors | Image by Author

A 1536-dimensional float32 vector requires about 6 KB (1536 × 4 bytes = 6,144 bytes). With PQ using compact codes (e.g., ~8 bytes per vector), this can drop by orders of magnitude: a ~768× compression in this example. Distance calculations use precomputed lookup tables, making them dramatically faster.

The cost is accuracy loss from quantization. PQ works best combined with other methods: IVF for initial filtering, PQ for scanning candidates efficiently. This hybrid approach dominates production systems.
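The encode-then-lookup idea can be sketched as follows. This is an illustrative toy with hypothetical function names (`pq_train`, `pq_encode`, `pq_distances`), plain k-means codebooks, and 64-dimensional random data. Production libraries use far more optimized training and scanning.

```python
import numpy as np

def kmeans(x, k, n_iter=5, seed=0):
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), k, replace=False)].copy()
    for _ in range(n_iter):
        d2 = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        assign = d2.argmin(axis=1)
        for c in range(k):
            members = x[assign == c]
            if len(members):
                centroids[c] = members.mean(axis=0)
    return centroids

def pq_train(vectors, m, k=256):
    """One codebook of k centroids per subspace; result has shape (m, k, d // m)."""
    return np.stack([kmeans(s, k) for s in np.split(vectors, m, axis=1)])

def pq_encode(vectors, codebooks):
    m = len(codebooks)
    codes = np.empty((len(vectors), m), dtype=np.uint8)  # one byte per subspace when k <= 256
    for j, s in enumerate(np.split(vectors, m, axis=1)):
        d2 = ((s[:, None, :] - codebooks[j][None, :, :]) ** 2).sum(axis=2)
        codes[:, j] = d2.argmin(axis=1)
    return codes

def pq_distances(query, codes, codebooks):
    """Approximate squared distances using one lookup table per subspace."""
    m = len(codebooks)
    q_sub = np.split(query, m)
    # table[j, c] = squared distance from the j-th query slice to centroid c.
    table = np.stack([((codebooks[j] - q_sub[j]) ** 2).sum(axis=1) for j in range(m)])
    # For each stored code, sum the m table entries it points at.
    return table[np.arange(m), codes].sum(axis=1)

rng = np.random.default_rng(0)
vectors = rng.normal(size=(2000, 64)).astype(np.float32)  # toy data
codebooks = pq_train(vectors, m=8)      # 8 subspaces of 8 dims, 256 centroids each
codes = pq_encode(vectors, codebooks)   # shape (2000, 8), 8 bytes per vector
approx_d2 = pq_distances(vectors[0], codes, codebooks)
print(vectors.nbytes // codes.nbytes)   # 32: 256 bytes per vector become 8
```

The same arithmetic gives the figure quoted above: 1536 × 4 = 6,144 bytes per raw vector, divided by an 8-byte code, is 768×. The distances returned are distances to the quantized reconstructions, which is where the accuracy loss comes from.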

How Vector Databases Handle Scale

Modern vector databases combine several techniques to handle billions of vectors efficiently.

Sharding distributes vectors across machines. Each shard runs an independent ANN search, and the per-shard results are merged using a heap. This parallelizes both indexing and search, scaling horizontally.
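The merge step can be sketched with Python's standard `heapq` module. The function name `merge_shard_results`, the shard contents, and the IDs below are made up for illustration. The sketch assumes each shard returns its own top results already sorted by ascending distance.

```python
import heapq
from itertools import islice

def merge_shard_results(shard_results, k):
    """Merge per-shard lists of (distance, vector_id) into a global top-k.
    Each shard's list must already be sorted by ascending distance."""
    merged = heapq.merge(*shard_results)  # lazy k-way merge backed by a heap
    return list(islice(merged, k))

# Hypothetical results from three shards.
shard_a = [(0.12, "a7"), (0.30, "a2"), (0.41, "a9")]
shard_b = [(0.05, "b1"), (0.33, "b4"), (0.90, "b8")]
shard_c = [(0.20, "c3"), (0.25, "c5"), (0.60, "c6")]

top = merge_shard_results([shard_a, shard_b, shard_c], k=4)
print(top)  # [(0.05, 'b1'), (0.12, 'a7'), (0.2, 'c3'), (0.25, 'c5')]
```

Because the merge is lazy, only as many elements as needed are pulled from each shard's list.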

Filtering integrates metadata filters with vector search. The database needs to apply filters without destroying index efficiency. Solutions include separate metadata indices that intersect with vector results, or partitioned indices that duplicate data across filter values.

Hybrid search combines vector similarity with traditional full-text search. BM25 scores and vector similarities are merged using weighted combinations or reciprocal rank fusion. This handles queries that need both semantic understanding and keyword precision.
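Reciprocal rank fusion is simple enough to sketch directly. Each document earns 1 / (k + rank) from every ranking it appears in, and the sums decide the final order. The function name and document IDs below are illustrative, and k = 60 is the commonly used default rather than a required value.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranked list."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

bm25_ranking = ["d3", "d1", "d7", "d2"]    # hypothetical keyword results
vector_ranking = ["d1", "d5", "d3", "d9"]  # hypothetical vector results

fused = reciprocal_rank_fusion([bm25_ranking, vector_ranking])
print(fused[:2])  # ['d1', 'd3'], the documents both rankings agree on
```

Because RRF uses only ranks, it avoids having to put BM25 scores and vector similarities on a common scale, which a weighted combination would require.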

Dynamic updates pose challenges for graph-based indices like HNSW, which optimize for read performance. Most systems queue writes and periodically rebuild indices, or use specialized data structures that support incremental updates with some performance overhead.

Key Similarity Measures

Vector similarity relies on distance metrics that quantify how close two vectors are in embedding space.

Euclidean distance measures straight-line distance. It’s intuitive but sensitive to vector magnitude. Two vectors pointing in the same direction but with different lengths are considered dissimilar.

Cosine similarity measures the angle between vectors, ignoring magnitude. This is ideal for embeddings where direction encodes meaning but scale doesn’t. Most semantic search uses cosine similarity because many embedding models produce normalized vectors.

Dot product is cosine similarity without normalization. When all vectors are unit length, it’s equivalent to cosine similarity but faster to compute. Many systems normalize once during indexing and then use dot product for search.

The choice matters because different metrics create different nearest-neighbor topologies. An embedding model trained with cosine similarity should be searched with cosine similarity.
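A small worked example shows how the three measures disagree on the same pair of vectors. The helper names and the vectors are chosen purely for illustration.

```python
import numpy as np

def euclidean(a, b):
    return float(np.linalg.norm(a - b))

def cosine_similarity(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def dot_product(a, b):
    return float(a @ b)

a = np.array([1.0, 2.0, 2.0])  # length 3
b = 3.0 * a                    # same direction, three times as long

print(euclidean(a, b))          # 6.0: far apart by straight-line distance
print(cosine_similarity(a, b))  # 1.0: identical direction
print(dot_product(a, b))        # 27.0: grows with magnitude

# After normalizing to unit length, dot product and cosine similarity coincide.
a_unit = a / np.linalg.norm(a)
b_unit = b / np.linalg.norm(b)
same = np.isclose(dot_product(a_unit, b_unit), cosine_similarity(a, b))
```

For unit-length vectors, squared Euclidean distance equals 2 − 2 × (dot product), so all three measures rank neighbors identically once vectors are normalized.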

Understanding Recall and Latency Trade-offs

Vector databases sacrifice perfect accuracy for speed through approximate search. Understanding this trade-off is essential for production systems.

Recall measures what percentage of true nearest neighbors your search returns. Ninety percent recall means finding 9 of the 10 actual closest vectors. Recall depends on index parameters: HNSW’s ef_search, IVF’s nprobe, or general exploration depth.

Latency measures how long queries take. It scales with how many vectors you examine. Higher recall requires checking more candidates, increasing latency.

The sweet spot is often 90–95% recall. Going from 95% to 99% might triple your query time while semantic search quality barely improves. Most applications can’t distinguish between the 10th and 12th nearest neighbors.

Benchmark your specific use case. Build a ground-truth set with exhaustive search, then measure how recall impacts your application metrics. You’ll often find that 85% recall produces results indistinguishable from 99% at a fraction of the cost.
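Measuring recall against a ground-truth set takes only a few lines. In this sketch the function names and ID lists are illustrative. In practice `exact` would come from an exhaustive search and `approx` from your ANN index at a given parameter setting.

```python
def recall_at_k(approx_ids, exact_ids, k):
    """Fraction of the true top-k neighbors that the approximate search returned."""
    exact = set(exact_ids[:k])
    return len(exact.intersection(approx_ids[:k])) / len(exact)

def mean_recall(approx_results, exact_results, k):
    """Average recall@k over a batch of queries."""
    scores = [recall_at_k(a, e, k) for a, e in zip(approx_results, exact_results)]
    return sum(scores) / len(scores)

exact = [4, 9, 1, 7, 3, 8, 2, 6, 0, 5]    # ground truth from exhaustive search
approx = [4, 9, 1, 7, 3, 8, 2, 6, 0, 11]  # ANN result that missed one true neighbor

print(recall_at_k(approx, exact, k=10))   # 0.9, i.e. 9 of the 10 true neighbors
```

Sweeping a parameter such as `nprobe` or `ef_search` and recording mean recall alongside query latency gives you the curve to pick an operating point from.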

When You Actually Need a Vector Database

Not every application with embeddings needs a specialized vector database.

You don’t really need a vector database when you:

- Have fewer than 100K vectors. Brute-force search with NumPy should be fast enough.

- Have vectors that change constantly. The indexing overhead might exceed search savings.

- Need perfect accuracy. Use exact search with optimized libraries like FAISS.

Use a vector database when you:

- Have millions of vectors and need low-latency search.

- Are building semantic search, RAG, or recommendation systems at scale.

- Need to filter vectors by metadata while maintaining search speed.

- Want infrastructure that handles sharding, replication, and updates.

Many teams start with simple solutions and migrate to vector databases as they scale. This is often the right approach.

Production Vector Database Options

The vector database landscape has exploded over the past few years. Here’s what you need to know about the major players.

Pinecone is a fully managed cloud service. You define your index configuration; Pinecone handles infrastructure. It uses a proprietary algorithm combining IVF and graph-based search. Best for teams that want to avoid operations overhead. Pricing scales with usage, which can get expensive at high volumes.

Weaviate is open-source and deployable anywhere. It combines vector search with GraphQL schemas, making it powerful for applications that need both unstructured semantic search and structured data relationships. The module system integrates with embedding providers like OpenAI and Cohere. A good choice if you need flexibility and control.

Chroma focuses on developer experience with an embedding database designed for AI applications. It emphasizes simplicity: minimal configuration and batteries-included defaults. Runs embedded in your application or as a server. Ideal for prototyping and small-to-medium deployments. The backing implementation uses HNSW via hnswlib.

Qdrant is built in Rust for performance. It supports filtered search efficiently through a payload index that works alongside vector search. The architecture separates storage from search, enabling disk-based operation for massive datasets. A strong choice for high-performance requirements.

Milvus handles large-scale deployments. It’s built on a disaggregated architecture separating compute and storage. It supports multiple index types (IVF, HNSW, DiskANN) and extensive configuration. More complex to operate but scales further than most alternatives.

Postgres with pgvector adds vector search to PostgreSQL. For applications already using Postgres, this eliminates a separate database. Performance is adequate for moderate scale, and you get transactions, joins, and familiar tooling. Support includes exact search and IVF; availability of other index types, such as HNSW, depends on the pgvector version and configuration.

Elasticsearch and OpenSearch added vector search through HNSW indices. If you already run these for logging or full-text search, adding vector search is straightforward. Hybrid search combining BM25 and vectors is particularly strong. They are not the fastest pure vector databases, but the integration value often outweighs that.

Beyond Simple Similarity Search

Vector databases are evolving beyond simple similarity search. If you follow those working in the search space, you might have seen several improvements and newer approaches tested and adopted by the developer community.

Hybrid vector indices combine multiple embedding models. Store both sentence embeddings and keyword embeddings, searching across both simultaneously. This captures different aspects of similarity.

Multimodal search indexes vectors from different modalities (text, images, audio) in the same space. CLIP-style models enable searching images with text queries or vice versa. Vector databases that handle multiple vector types per item enable this.

Learned indices use machine learning to optimize index structures for specific datasets. Instead of using generic algorithms, you train a model that predicts where vectors are located. This is experimental but shows promise for specialized workloads.

Streaming updates are becoming first-class operations rather than batch rebuilds. New index structures support incremental updates without sacrificing search performance, which is crucial for applications with rapidly changing data.

Conclusion

Vector databases solve a specific problem: fast similarity search over high-dimensional embeddings. They’re not a replacement for traditional databases but a complement for workloads centered on semantic similarity. The algorithmic foundation stays consistent across implementations. Differences lie in engineering: how systems handle scale, filtering, updates, and operations.

Start simple. When you do need a vector database, understand the recall–latency trade-off and tune parameters for your use case rather than chasing perfect accuracy. The vector database space is advancing quickly. What was experimental research three years ago is now production infrastructure powering semantic search, RAG applications, and recommendation systems at massive scale. Understanding how they work helps you build better AI applications.

So yeah, happy building! If you’d like specific hands-on tutorials, let us know what you’d like us to cover in the comments.
