1.

In one of my earlier articles, we explored Google’s Titans (Behrouz et al., 2024) [1] and how TTT (Test-Time Training) can be used to equip an LLM with a human-like, malleable memory that can update its information at test time.

Test-time training, as the name suggests, is a paradigm that lets the model update its parameters on unseen data. But at test time, there are no ground truth labels that can help steer the model in the right direction (because that would be overt cheating). Instead, it performs a task with the data (designed and baked into the model), which leads the model to “subconsciously” learn about it.

Examples of such tasks include:

- Rotation Prediction (Gidaris et al., 2018) [2]: The input images are rotated arbitrarily (e.g., by 90°, 180°, or 270°), and the model is made to predict the correct orientation. This enables it to recognize salient features and determine which way is “up”.

- Masked-Language Modeling (Devlin et al., 2019) [3]: One or more tokens are masked from the test instance. The model’s job is to predict the missing tokens, with the masked tokens serving as the ground truths, which incentivizes a multi-faceted understanding of language.

- Confidence Maximization (Sun et al., 2020) [4]: The model is incentivized to make its output distribution (e.g., class probabilities [0.3, 0.4, 0.3]) more peaked (e.g., [0.1, 0.8, 0.1]), hence curbing its diplomatic tendencies.
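To make the last task concrete, here is a minimal sketch that scores the two example vectors from the confidence-maximization bullet. It assumes the objective is implemented as entropy minimization, which is one common choice and not something this article specifies; the function name `entropy` is purely illustrative.

```python
import numpy as np

def entropy(probs):
    """Shannon entropy (in nats) of a probability vector."""
    probs = np.asarray(probs, dtype=float)
    return float(-np.sum(probs * np.log(probs)))

hesitant = [0.3, 0.4, 0.3]   # the "diplomatic" prediction from the text
peaked = [0.1, 0.8, 0.1]     # the confident prediction from the text

h_hesitant = entropy(hesitant)
h_peaked = entropy(peaked)

# A test-time objective that minimizes entropy (an assumption here)
# would push the model from the first vector toward the second.
print(f"hesitant: {h_hesitant:.3f} nats, peaked: {h_peaked:.3f} nats")
```

The peaked vector has the lower entropy, so no label is needed to compute a training signal: the prediction itself is enough.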

But these are all educated guesses as to which task might translate best to learning, because humans imagined them. And since humans are not the “smartest” ones these days, why don’t we let AI figure it out for itself?

Gradient descent and related optimization algorithms are generally considered among the most consequential algorithms humanity has ever invented. So, why not leave test-time training to these algorithms altogether and let the models learn about learning?

2. Motivation: Why was it needed?

At its heart, this research was driven by a core frustration with the existing Test-Time Training (TTT) paradigm. Prior TTT methods have historically relied on a form of artistry. A human “designer” (i.e., a creative researcher) must hand-craft a self-supervised task like the ones mentioned above and hope that practicing this specific task will somehow translate to better performance on the main objective. The paper aptly calls this an “art, combining ingenuity with trial and error,” a process that is extremely prone to human fallacies.

Not only can human-designed tasks perform suboptimally, but they can even be counter-productive. Imagine making a model an expert on rotation prediction as its TTT task. If an image with direction-specific traits, like a downward-pointing arrow that signifies “download this file,” gets flipped into an upward-pointing arrow by the TTT task (which signifies upload), it might completely corrupt the model’s understanding of that image.

Moreover, this fits a broader trend of ever-decreasing reliance on human ingenuity and increasing reliance on automation. Tasks like curating a word bank with thousands of “bad words” just to classify spam emails are a relic of the past that remind us how far we’ve come. Over the years, a classic rule has emerged: automation has always eclipsed the very human ingenuity that conceived it.

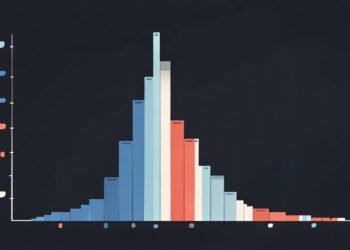

Visual depiction of why manual TTT design can be inferior to Meta-TTT via Gradient Descent.

3. Learning to (Learn at test-time)

Researchers at Meta, Stanford, and Berkeley (Sun et al., 2024) [5] came together for this monumental collaboration and successfully parameterized the TTT task itself. This means the model, instead of humans, can now choose which task will have the greatest impact on improving performance on the main objective.

In other words, the model can not only train on test data, but also choose how that test data is to be used to train itself!

3.1 How Does It Work?

The researchers split the entire process into two parts: an Inner Loop and an Outer Loop. The Outer Loop trains the model on its main objective and defines the TTT task, while the Inner Loop trains the hidden layers on the defined TTT task.

3.1.1. The Outer Loop: Taking Human Ingenuity Out of The Equation

This acts as the “meta-teacher” in this system. Apart from making the model learn how to classify images, it’s also assigned to create a curriculum for the inner loop to perform TTT on. It achieves this by transforming the entire TTT process into one giant, differentiable function and optimizing it end to end.

This multi-step process can be outlined as below:

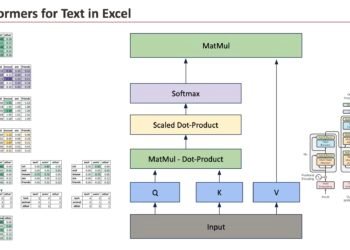

The full architectural diagram of the model, along with a zoomed-in view of the MTTT layer.

The numbers in black indicate the sequence of information flow in the model (Steps).

Steps 1 & 2: Input Preparation

First, the input image X is broken down into patches, and each patch is then converted into an embedding via Embedding Layers. This gives us a sequence of vectors, the patch embeddings, which we’ll call P = (P₁, P₂, …, Pₙ).
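The input preparation above can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' code: the 16×16 patch size is inferred from the 196 patches per 224×224 image mentioned later in the article, and `embed_dim`, `patchify`, and `W_embed` are hypothetical names and values.

```python
import numpy as np

rng = np.random.default_rng(0)

image_size, patch_size, channels = 224, 16, 3   # patch_size inferred: (224 / 16)^2 = 196
embed_dim = 64                                   # hypothetical embedding width

X = rng.standard_normal((image_size, image_size, channels))  # stand-in image

def patchify(image, patch):
    """Split an (H, W, C) image into non-overlapping, flattened patches."""
    h, w, c = image.shape
    grid_h, grid_w = h // patch, w // patch
    blocks = image.reshape(grid_h, patch, grid_w, patch, c)
    blocks = blocks.transpose(0, 2, 1, 3, 4)     # (grid_h, grid_w, patch, patch, c)
    return blocks.reshape(grid_h * grid_w, patch * patch * c)

patches = patchify(X, patch_size)                # one row per patch

# A single linear map stands in for the "Embedding Layers" (an assumption).
W_embed = rng.standard_normal((patches.shape[1], embed_dim)) * 0.02
P = patches @ W_embed                            # patch embeddings P_1 ... P_n

print(patches.shape, P.shape)
```

Each row of `P` corresponds to one patch, so the sequence length seen by the MTTT layers equals the number of patches.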

Step 3: The Overall Architecture

This sequence P is then fed through a series of stacked MTTT layers, which are the brain of the model. After passing through all the layers, the final representation is sent to a standard Classification Head to produce the final output. To understand what happens in each MTTT layer, we zoom into one and dissect its inner machinery.

Step 4: Learning From the Embeddings

Each MTTT layer has a set of learnable parameters W₀ (Step 4b), which act as a “generic” or “start-off” state, before it sees any data.

The original input patch embeddings (P) are marked as Step 4a.

Step 5: The Inner Loop and Data Transformation

The Outer Loop now invokes the Inner Loop, which we’ll treat as a black box for now. As per the diagram, it provides two key things:

- The Starting Point (5b): The initial layer weights, W₀, are fed to the Inner Loop, along with the current input. The Inner Loop outputs weights W_T for the layer, which are tuned specifically for the current input.

W_T, W₀: the input-specific weights and the baseline generic weights, respectively.

P: the patch embeddings.

θ_I: the learnable parameters of the Inner Loop.

- The Data (5a): The input embeddings P are prepared for the adapted layer by a simple linear transformation (ψ). This is done to increase expressivity and to let every MTTT layer learn a different set of attributes about the input.

Here, the new weights W_T, which are now specifically tuned for the pet image, are loaded into the layer.

Steps 6 & 7: The Main Task Forward Pass

Now that the feature extractor has the specialized weights W_T, it uses them to process the data for the main task.

The transformed input embeddings from Step 5a are finally processed by the input-specific feature extractor layer (Step 6) and emitted as the output of the first MTTT layer (Step 7). This output is then processed by the remaining MTTT layers, each repeating the same process.

Steps 8 & 9: The Final Output

After the data has passed through all the stacked MTTT layers (Step 8) and the final Classification Head (Step 9), we get a final prediction, ŷ.

Test vs Train:

If the model is being tested, ŷ remains the final output. If the model is being trained, the output (Step 9) is used to calculate a loss (typically cross-entropy) against the ground truth y.

The Outer Loop uses this loss to calculate the gradient with respect to all parameters, which is hence called the “meta-gradient”. This gradient, along with training the model on the main task, also trains the Inner Loop’s parameters, which define the TTT’s self-supervised task. In essence, it uses the final classification error signal to ask itself:

“How should I have set up the test-time learning problem so that the final result would have been better?”

This makes the model set up the most effective self-supervised task for improving performance on the main task, taking human guesswork and intuition completely out of the equation.
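The idea of a meta-gradient can be illustrated with a toy NumPy sketch. Everything here is an assumption made for illustration: the sizes, the single inner step, the linear feature extractor, the mean-pooled classification head, and the use of central finite differences in place of backpropagation. It only differentiates with respect to the encoder parameters (`theta_phi`), whereas the text says the real system trains all parameters end to end.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, num_classes = 6, 3, 2            # toy sizes, chosen only for illustration

P = rng.standard_normal((n, d))        # patch embeddings of one image
y = 1                                  # its ground-truth class
W0 = np.eye(d)                         # generic layer weights
theta_g = rng.standard_normal((d, d)) / np.sqrt(d)     # decoder (kept fixed here)
theta_psi = rng.standard_normal((d, d)) / np.sqrt(d)   # main-task input transform
head = rng.standard_normal((d, num_classes))           # toy classification head

def inner_loop(theta_phi, lr=0.1):
    """One gradient step (T=1) on the reconstruction task defined by theta_phi."""
    corrupted = P @ theta_phi.T                    # encoder creates the puzzle
    recon = (corrupted @ W0.T) @ theta_g.T         # feature extractor, then decoder
    d_recon = 2.0 * (recon - P) / recon.size       # gradient of the MSE loss
    grad_W = (d_recon @ theta_g).T @ corrupted     # gradient w.r.t. the layer weights
    return W0 - lr * grad_W                        # adapted weights W_T

def outer_loss(theta_phi):
    """Main-task cross-entropy, viewed as a function of the inner-loop task."""
    W_T = inner_loop(theta_phi)
    features = (P @ theta_psi.T) @ W_T.T
    logits = features.mean(axis=0) @ head
    logits = logits - logits.max()                 # numerical stability
    log_probs = logits - np.log(np.sum(np.exp(logits)))
    return -log_probs[y]

def numerical_meta_gradient(theta_phi, eps=1e-5):
    """Central finite differences; a stand-in for backpropagation."""
    grad = np.zeros_like(theta_phi)
    for idx in np.ndindex(*theta_phi.shape):
        step = np.zeros_like(theta_phi)
        step[idx] = eps
        grad[idx] = (outer_loss(theta_phi + step)
                     - outer_loss(theta_phi - step)) / (2 * eps)
    return grad

theta_phi = rng.standard_normal((d, d)) / np.sqrt(d)
meta_grad = numerical_meta_gradient(theta_phi)
print(meta_grad)
```

The point of the sketch is that the classification loss changes when the self-supervised task changes, so the task definition itself can be optimized. In practice an automatic differentiation framework would compute this gradient instead of finite differences.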

3.1.2 The Inner Loop: Unveiling the Black-Box

Now that we understand the Outer Loop, we unroll the black box, a.k.a. the Inner Loop.

Its goal is to take the generic layer weights (W₀) and quickly adapt them into specialized weights (W_T) for the input it’s currently observing.

It achieves this by solving the self-supervised reconstruction task that the Outer Loop designed for it. This self-contained learning procedure looks like this:

Zoomed-in view of the Inner Loop, describing its inner workings.

The numbers in black indicate the sequence of information flow (Steps).

Steps 1-3: Setting Up the Learning Problem

The Inner Loop gets two distinct inputs from the Outer Loop:

- The input patch embeddings (Step 2), and

- The generic weights for the feature extractor, W₀.

As shown in Step 3, the original embeddings P = (P₁, P₂, …) are turned into a “test-time dataset”, where each datapoint is a single patch’s embedding, yielded sequentially.

Steps 4 & 5: The Forward Pass – Creating a Puzzle

First, an input patch is passed through the Encoder (a linear layer whose parameters, θ_Φ, were learned by the Outer Loop). This function “corrupts” the input (Step 4), creating a puzzle that the subsequent network must solve. The corrupted patch is then fed into the Feature Extractor (the “Brain”), which processes it using its current generic weights (Step 5) to create a feature representation.

Steps 6 & 7: The Learning Step – Solving the Puzzle

The feature representation from the “Brain” is then passed to the Decoder (a linear layer whose parameters, θ_g, were also learned by the Outer Loop). The Decoder’s job is to use these features to reconstruct the original, uncorrupted patch (Step 6). The Inner Loop then measures how well it did by calculating a loss, typically Mean Squared Error (MSE), between its reconstruction and the original patch. This error signal drives the Gradient Step (Step 7), which calculates a small update for the Feature Extractor’s weights.

Steps 8-9: The Final Output

This update process, from the old weights to the new, is shown in Step 8a. After running for a set number of steps, T (until all patches are used sequentially), the final, adapted weights (W_T) are ready. The Inner Loop’s job is complete, and as shown in Step 8b, it outputs these new weights to be used by the Outer Loop for the main task prediction.
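The inner loop described above can be sketched in a few lines of NumPy. This is a simplified illustration rather than the paper's implementation: it assumes a purely linear feature extractor, uses all patches in every gradient step (a batch update) instead of one patch at a time, and the sizes, learning rate, and names (`theta_phi`, `theta_g`, `theta_psi`, `inner_loop`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 196, 8                                           # patches and toy embedding width

P = rng.standard_normal((n, d))                         # patch embeddings
theta_phi = rng.standard_normal((d, d)) / np.sqrt(d)    # encoder (set by the outer loop)
theta_g = rng.standard_normal((d, d)) / np.sqrt(d)      # decoder (set by the outer loop)
theta_psi = rng.standard_normal((d, d)) / np.sqrt(d)    # main-task input transform
W0 = np.eye(d)                                          # generic starting weights

def reconstruct(W, P):
    corrupted = P @ theta_phi.T        # Step 4: encoder creates the puzzle
    features = corrupted @ W.T         # Step 5: linear feature extractor
    recon = features @ theta_g.T       # Step 6: decoder rebuilds the patch
    return corrupted, recon

def inner_loop(W0, P, T=4, lr=0.05):
    """Run T gradient steps on the MSE reconstruction loss and return W_T."""
    W = W0.copy()
    losses = []
    for _ in range(T):
        corrupted, recon = reconstruct(W, P)
        losses.append(np.mean((recon - P) ** 2))
        d_recon = 2.0 * (recon - P) / recon.size    # dLoss / d(recon)
        d_features = d_recon @ theta_g              # back through the decoder
        grad_W = d_features.T @ corrupted           # dLoss / dW
        W = W - lr * grad_W                         # Step 7: gradient step
    losses.append(np.mean((reconstruct(W, P)[1] - P) ** 2))
    return W, losses

W_T, losses = inner_loop(W0, P)

# Main-task forward pass: the adapted weights process the psi-transformed input.
layer_output = (P @ theta_psi.T) @ W_T.T
print([round(float(l), 4) for l in losses])
```

Note that no label is involved anywhere in `inner_loop`: the patches supervise themselves, which is what allows the same procedure to run at test time.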

3.2 Attention as a Special Case of the MTTT Framework

So far, we’ve treated MTTT as a novel framework. But here is where the paper delivers its most elegant insight: the attention mechanisms, which are globally accepted as the de facto standard, are just simple versions of this very same “learning to learn” process. This also makes sense because the model is no longer constrained to adhere to a particular schema; rather, it can choose and curate the right framework for itself, which makes MTTT a superset that encompasses everything, including attention.

The authors prove this with a series of mathematical derivations (which are well beyond the scope of this article). They show that if you make specific choices for the “Brain” of the inner loop (the Feature Extractor), the entire complex, two-loop MTTT procedure simplifies and becomes an attention mechanism.

Case 1: Feature Extractor = Simple Linear Model

Linear attention (Katharopoulos et al., 2020) [6] is a much faster relative of the self-attention (Vaswani et al., 2017) [7] we use widely today. Self-attention computes the N×N attention matrix (where N is the number of tokens), which results in an O(N²) bottleneck. Linear attention instead computes the KᵀV matrix (D×D, where D is the hidden dimension), whose cost is linear in N.

By multiplying the Kᵀ and V matrices first, we circumvent the O(N²) attention matrix that we calculate in standard self-attention.
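The reordering trick can be checked numerically. The sketch below uses the simplest, unnormalized form of linear attention with no feature map, which is an assumption made to keep the example short; the sizes `N` and `D` are arbitrary toy values.

```python
import numpy as np

rng = np.random.default_rng(0)
N, D = 512, 16                       # toy sequence length and hidden size

Q = rng.standard_normal((N, D))
K = rng.standard_normal((N, D))
V = rng.standard_normal((N, D))

# Quadratic order: materialize the N x N score matrix first.
scores = Q @ K.T                     # shape (N, N), grows with N squared
out_quadratic = scores @ V

# Linear order: multiply K^T and V first.
kv = K.T @ V                         # shape (D, D), independent of N
out_linear = Q @ kv

print(scores.shape, kv.shape, np.allclose(out_quadratic, out_linear))
```

Both orders give the same result because matrix multiplication is associative; only the size of the intermediate matrix changes. Softmax attention cannot be reordered this way, since the softmax sits between the two products.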

When “the brain” is just a single linear layer that takes one learning step (T=1, aka just one patch), its “correction” (the gradient step) is mathematically linear regression. The researchers showed that this entire process collapses exactly into the formula for Linear Attention. The Encoder learns the role of the Key (K), the Decoder learns the role of the Value (V), and the main task’s Input Transformation (ψ) learns the role of the Query (Q)!
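A simplified version of this collapse can be verified in NumPy. The sketch makes several assumptions that are not taken from this article: the inner loss is written as a regression from keys to values, 0.5 · Σᵢ ‖W kᵢ − vᵢ‖², the layer starts from W₀ = 0, and a single batch gradient step with learning rate 1 is used. The names `theta_K`, `theta_V`, and `theta_Q` are illustrative stand-ins for the encoder, decoder, and ψ.

```python
import numpy as np

rng = np.random.default_rng(0)
N, D = 32, 8

X = rng.standard_normal((N, D))            # token / patch embeddings
theta_K = rng.standard_normal((D, D))      # plays the Encoder's role (Key)
theta_V = rng.standard_normal((D, D))      # plays the Decoder's role (Value)
theta_Q = rng.standard_normal((D, D))      # plays psi's role (Query)

K, V, Q = X @ theta_K.T, X @ theta_V.T, X @ theta_Q.T

def inner_loss_grad(W):
    """Gradient of 0.5 * sum_i ||W k_i - v_i||^2 with respect to W."""
    return (K @ W.T - V).T @ K

W0 = np.zeros((D, D))                      # assumed zero initialization
W1 = W0 - 1.0 * inner_loss_grad(W0)        # one batch step, learning rate 1

ttt_output = Q @ W1.T                      # adapted layer applied to the queries
linear_attention = (Q @ K.T) @ V           # unnormalized linear attention

print(np.allclose(ttt_output, linear_attention))
```

After the single step, the layer weights equal VᵀK, so applying them to a query sums every value weighted by its key-query dot product, which is exactly what unnormalized linear attention computes.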

Case 2: Feature Extractor = Kernel Estimator

Now, if the learning layer (feature extractor) is replaced with a Kernel Estimator (which computes a weighted average), specifically the Nadaraya-Watson estimator (Nadaraya, 1964) [8] & (Watson, 1964) [9], the MTTT process becomes identical to standard Self-Attention. The kernel’s similarity function collapses to the Query-Key dot product, and its normalization step becomes the Softmax function.

The standard self-attention formula is also just an instantiation of the “learning to learn” superset.
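The correspondence between the Nadaraya-Watson estimator and softmax attention can also be checked numerically. This sketch assumes an exponential kernel over scaled dot products, exp(q·k / √D); the scaling factor and the function name `nadaraya_watson` are choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
N, D = 16, 8
Q = rng.standard_normal((N, D))
K = rng.standard_normal((N, D))
V = rng.standard_normal((N, D))

def nadaraya_watson(query, keys, values):
    """Kernel-weighted average of the values, using an exponential dot-product kernel."""
    weights = np.exp(keys @ query / np.sqrt(keys.shape[1]))
    return weights @ values / weights.sum()    # normalization = softmax denominator

nw_output = np.stack([nadaraya_watson(q, K, V) for q in Q])

# Standard scaled dot-product self-attention for comparison.
scores = Q @ K.T / np.sqrt(D)
scores = scores - scores.max(axis=1, keepdims=True)   # stabilize the exponentials
attn = np.exp(scores)
attn = attn / attn.sum(axis=1, keepdims=True)
softmax_attention = attn @ V

print(np.allclose(nw_output, softmax_attention))
```

The kernel weights divided by their sum are the softmax attention weights, so the two computations agree row by row.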

What does this mean?

The authors state that over the past three decades of machine learning and AI, a clear pattern in the performance of algorithms can be observed.

We know that:

- When the feature extractor is a linear model, we get fast but not so impressive linear attention.

- When the feature extractor is a kernel, we get the ubiquitous self-attention.

- When the feature extractor is a deep-learning model (an MLP, for example), we get…?

What happens if we put an even better learner (like an MLP) inside the Inner Loop? Would it perform better?

4. MTTT-MLP: The Main Contribution

The answer to the above question is the main contribution of the authors in this paper. They equip the inner loop with a small, 2-layer Multi-Layer Perceptron (MLP) as the feature extractor.

4.1 Self-Attention vs. MTTT-MLP vs. Linear-Attention

The authors put MTTT-MLP to the test in two drastically different scenarios on the ImageNet dataset:

Scenario 1: The Standard Scenario (ImageNet with Patches)

First, they tested a Vision Transformer (ViT) on standard 224×224 images, broken into 196 patches. In this configuration, the O(N²) methods are practical as well, which makes it a level playing field for all models.

- The Results:

  - MTTT-MLP (74.6% acc.) beat its theoretical predecessor, MTTT-Linear (72.8% acc.), supporting the hypothesis that more complex learners perform better.

  - However, standard self-attention (76.5% acc.) still reigned supreme. Although this runs contrary to our hypothesis, it still makes sense: when you can afford the expensive quadratic computation on short sequences, the original is hard to top.

Scenario 2: The Non-Standard Scenario (ImageNet with Raw Pixels)

The researchers drastically changed the setting by feeding the model raw pixels instead of patches. This inflates the sequence length from a manageable 196 to a massive 50,176 tokens, which is the very arch-nemesis of standard attention algorithms.

- The Results:

  - This comparison could only be made between linear attention and MTTT-MLP because self-attention didn’t even run. Modeling 50,176 tokens results in roughly 2.5 billion entries in the attention matrix, which immediately threw an OOM (Out-Of-Memory) error on any standard GPU.

  - Linear Attention performed only moderately, achieving around 54-56% accuracy.

  - MTTT-MLP won this round by a large margin, reaching 61.9% accuracy.

  - Even when pitted against a larger Linear Attention model with 3x the parameters and 2x the FLOPs, MTTT-MLP still won by a margin of around 10%.
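The figure of roughly 2.5 billion attention entries follows from simple arithmetic, sketched below. The bytes-per-entry values (4 for float32, 2 for float16) are assumptions about storage precision, and the 16×16 patch size is inferred from the 196-patch setting; the count is for a single attention matrix, before multiplying by heads, layers, or batch size.

```python
side = 224
tokens_patches = (side // 16) ** 2     # 16x16 patches (inferred from 196 patches)
tokens_pixels = side * side            # one token per pixel

entries = tokens_pixels ** 2           # entries in one N x N attention matrix
gib_fp32 = entries * 4 / 2**30         # assuming 4 bytes per entry
gib_fp16 = entries * 2 / 2**30         # assuming 2 bytes per entry

print(f"{tokens_patches} patch tokens, {tokens_pixels} pixel tokens")
print(f"{entries:,} entries: {gib_fp32:.2f} GiB (fp32), {gib_fp16:.2f} GiB (fp16)")
```

Even before counting heads, layers, activations, or gradients, a single such matrix occupies several gibibytes, which is consistent with the out-of-memory failure described above.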

The key takeaway from these experiments is that although self-attention reigned supreme in terms of raw performance, MTTT-MLP provides a large boost in modeling power over linear attention while retaining the same O(N) linear complexity that allows it to scale to massive inputs.

4.2 Watching How the Inner Loop Learns

To interpret the traits of their novel approach, the authors provide a pair of graphs that let us peek into how the inner loop learns and how the outer loop makes it learn the best possible lessons.

Steps vs. Accuracy: The More The Merrier, But Not Always

The x-axis shows the number of inner-loop gradient steps (T), and the y-axis shows the final classification accuracy on the ImageNet dataset.

As T increases from 1 to 4, the model’s accuracy on the main classification task increases commensurately. This demonstrates that allowing the layer to perform a few steps of self-adaptation on each image directly translates to better overall performance. The inner loop does indeed help the main task, but the benefit isn’t infinite.

The performance peaks at T=4 and then dips slightly. This means that T=4 is the sweet spot, where the model learns enough to help the main task, but not so much that it focuses too heavily on the current input and loses generalizability.

Epochs vs. Loss: Synergy Between the Two Loops

The x-axis shows the training epochs, and the y-axis shows the inner loop’s reconstruction loss on the TTT task. The colors of the different lines indicate the number of inner-loop training steps (T).

This graph is the most information-dense. It gives us a look at how the performance of the inner loop changes as the outer loop learns to design a more sophisticated TTT task.

There are two key trends to observe:

Inner-Loop Optimization (The Vertical Trend)

If you look at the blue line (T=0) as a whole, you’ll notice that it has the highest loss. This is the case where the outer loop keeps getting better at designing the TTT task (as epochs progress), while the inner loop doesn’t learn anything from it.

If you look at any single epoch (a vertical slice of the graph), the loss for all the other lines (T ∈ [1,4]) is lower than the blue line, and the loss decreases with every increment in T. This indicates that the more the inner loop is allowed to learn, the better its performance gets (which is the expected behavior).

Outer-Loop Meta-Learning (The Horizontal Trend)

This may be a bit counterintuitive, as every single line trends upwards in loss over the course of training. Notice that all the lines except the blue (T=0) start from roughly the same loss value (at the 0th epoch), which is much lower than the blue line’s loss. This is because the inner loop is allowed to train on a “not-hard” TTT task. After all, the outer loop hasn’t had the chance to design it yet, which lets every line except the blue ace it.

But as soon as the outer loop starts to pick up pace (as epochs go by), the inner loop finds it harder and harder to complete the now increasingly difficult but useful task, causing the inner loop’s loss to slowly creep up.

References:

[1] Behrouz, Ali, Peilin Zhong, and Vahab Mirrokni. “Titans: Learning to memorize at test time.” arXiv preprint arXiv:2501.00663 (2024).

[2] Gidaris, Spyros, Praveer Singh, and Nikos Komodakis. “Unsupervised representation learning by predicting image rotations.” arXiv preprint arXiv:1803.07728 (2018).

[3] Devlin, Jacob, et al. “BERT: Pre-training of deep bidirectional transformers for language understanding.” Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers). 2019.

[4] Sun, Yu, et al. “Test-time training with self-supervision for generalization under distribution shifts.” International Conference on Machine Learning. PMLR, 2020.

[5] Sun, Yu, et al. “Learning to (learn at test time): RNNs with expressive hidden states.” arXiv preprint arXiv:2407.04620 (2024).

[6] Katharopoulos, Angelos, et al. “Transformers are RNNs: Fast autoregressive transformers with linear attention.” International Conference on Machine Learning. PMLR, 2020.

[7] Vaswani, Ashish, et al. “Attention is all you need.” Advances in Neural Information Processing Systems 30 (2017).

[8] Nadaraya, Elizbar A. “On estimating regression.” Theory of Probability & Its Applications 9.1 (1964): 141-142.

[9] Watson, Geoffrey S. “Smooth regression analysis.” Sankhyā: The Indian Journal of Statistics, Series A (1964): 359-372.