Never miss a new edition of The Variable, our weekly newsletter featuring a top-notch selection of editors’ picks, deep dives, community news, and more.

Like so many LLM-based workflows before it, vibe coding has attracted strong opposition and sharp criticism not because it offers no value, but because of unrealistic, hype-based expectations.

The idea of leveraging powerful AI tools to experiment with app-building, generate quick-and-dirty prototypes, and iterate quickly seems noncontroversial. The problems usually begin when human practitioners take whatever output the model produced and assume it’s robust and error-free.

To help us sort through the good, bad, and ambiguous aspects of vibe coding, we turn to our experts. The lineup we prepared for you this week offers nuanced and pragmatic takes on how AI code assistants work, and when and how to use them.

The Unbearable Lightness of Coding

“The amount of technical doubt weighs heavily on my shoulders, much more than I’m used to.” In her powerful, brutally honest “confessions of a vibe coder,” Elena Jolkver takes an unflinching look at what it means to be a developer in the age of Cursor, Claude Code, et al. She also argues that the path forward involves acknowledging both vibe coding’s speed and productivity benefits and its (many) potential pitfalls.

How to Run Claude Code for Free with Local and Cloud Models from Ollama

If you’re already sold on the promise of AI-assisted coding but are concerned about its nontrivial costs, you shouldn’t miss Thomas Reid’s new tutorial.

How Cursor Actually Indexes Your Codebase

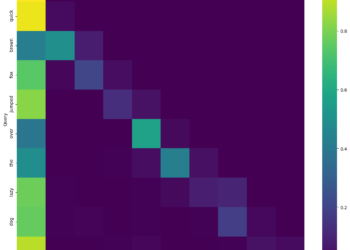

Curious about the inner workings of one of the most popular vibe-coding tools? Kenneth Leung presents a detailed look at the Cursor RAG pipeline that ensures coding agents are efficient at indexing and retrieval.

This Week’s Most-Read Stories

In case you missed them, here are three articles that resonated with a wide audience in the past week.

Going Beyond the Context Window: Recursive Language Models in Action, by Mariya Mansurova

Explore a practical approach to analysing massive datasets with LLMs.

Causal ML for the Aspiring Data Scientist, by Ross Lauterbach

An accessible introduction to causal inference and ML.

Optimizing Vector Search: Why You Should Flatten Structured Data, by Oleg Tereshin

An analysis of how flattening structured data can improve precision and recall by up to 20%.

Other Recommended Reads

Python skills, MLOps, and LLM evaluation are just a few of the topics we’re highlighting with this week’s selection of top-notch stories.

Why SaaS Product Management Is the Best Domain for Data-Driven Professionals in 2026, by Yassin Zehar

Creating an Etch A Sketch App Using Python and Turtle, by Mahnoor Javed

Machine Learning in Production? What This Really Means, by Sabrine Bendimerad

Evaluating Multi-Step LLM-Generated Content: Why Customer Journeys Require Structural Metrics, by Diana Schneider

Google Trends is Misleading You: How to Do Machine Learning with Google Trends Data, by Leigh Collier

Meet Our New Authors

We hope you take the time to explore excellent work from TDS contributors who recently joined our community:

- Luke Stuckey looked at how neural networks approach the question of musical similarity in the context of recommendation apps.

- Aneesh Patil walked us through a geospatial-data project aimed at estimating neighborhood-level pedestrian risk.

- Tom Narock argues that the best way to tackle data science’s “identity crisis” is by reframing it as an engineering practice.

We love publishing articles from new authors, so if you’ve recently written an interesting project walkthrough, tutorial, or theoretical reflection on any of our core topics, why not share it with us?