My name is Kirill Khrylchenko, and I lead the RecSys R&D team at Yandex. One of our goals is to develop transformer technologies in the context of recommender systems, an objective we’ve been pursuing for five years now. Not long ago, we reached a new milestone in the development of recommendation technologies, which I would like to share with you in this article.

The relevance of recommender systems is easy to justify: the amount of content is growing incredibly fast, making it impossible to view in its entirety, and we need recommender systems to cope with the information overload. This applies to music, movies, books, products, videos, posts, and friends alike, and it’s important to remember that these services benefit not only users but also content creators who need to find their target audience.

We’ve deployed a new generation of transformer recommenders in several services and are actively integrating them into others. We’ve significantly improved the quality of recommendations across the board.

If you’re an ML engineer working with recommendations, this article will give you some ideas on how to implement a similar approach in your recommender system. And if you’re a user, you have an opportunity to learn more about how that very recommender system works.

How Recommender Systems Work

The recommendation problem itself has a simple mathematical definition: for each user, we want to select items (objects, documents, or products) that they’re likely to like.

But there’s a catch:

- Item catalogs are vast (up to billions of items).

- There’s a significant number of users, and their interests are constantly shifting.

- Interactions between users and items are very sparse.

- It’s unclear how to define actual user preferences.

To tackle the recommendation problem effectively, we need to leverage non-trivial models that use machine learning.

Neural networks are a potent machine learning tool, especially when there’s a large amount of unstructured data, such as text or images. While classical machine learning relies on expert domain knowledge and considerable manual work (feature engineering), neural networks can extract complex relationships and patterns from raw data almost automatically.

In the RecSys domain, we have a large amount of mostly unstructured data (literally trillions of anonymized user-item interactions), as well as entities that are content-based (items consist of titles, descriptions, images, videos, and audio; users can be represented as sequences of events). Additionally, it’s crucial that the recommender system performs well for new items and cold users, and encoding users and items through content helps achieve this.

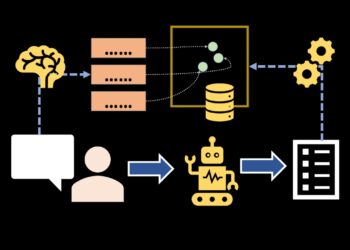

The time we have to generate recommendations for the user is very strictly limited. Every millisecond counts! Additionally, we don’t have infinite resources (in terms of hardware), and the catalogs we need to recommend from are quite large. This is why recommendations are usually formed in several stages:

- First, we select a relatively small set of candidates from the entire catalog using lightweight models (retrieval stage).

- Then, we run these candidates through more complex models that utilize additional information and more intensive computations for each candidate (ranking stage).

Architecturally, models vary significantly between stages, making it difficult to discuss any aspect without referring to specific stages of the recommender system.

The two-tower neural network architecture is very popular for the retrieval stage. Users and items (in information retrieval, these would be queries and documents) are independently encoded into vector representations, and the dot product is used to calculate the similarity between them.

You could also say that such models “embed” users and items into a shared “semantic space”, where “semantic” means that the closer a user-item pair is in the vector space, the better the item matches the user.

Two-tower models are very fast. Let’s assume the user requests recommendations. The two-tower model then needs to calculate:

- The “user tower” once per request.

- Vectors of all candidate items for which you want to calculate user-item affinity.

- Dot products.

You don’t even need to recalculate the vectors of candidate items for each user query, because they’re the same for all users and rarely change; for instance, we don’t assume that a movie or a music track often changes its title. In practice, we regularly recalculate item vectors for the entire catalog offline (for example, daily) and upload them either to the service where we need to calculate the dot product or to another service that we access online to retrieve the required item vectors.
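The request-time flow described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the catalog is random, and `user_tower` is a hypothetical stand-in (a mean of history item vectors) for a real user encoder.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 8

# Offline: item vectors are computed once for the whole catalog (e.g., daily).
# The catalog here is random and purely hypothetical.
item_vectors = rng.normal(size=(1000, dim))

def user_tower(history_item_ids):
    """Hypothetical stand-in for the user encoder: mean of history item vectors."""
    return item_vectors[history_item_ids].mean(axis=0)

def score_candidates(user_vector, candidate_ids):
    """Late fusion: one dot product per candidate, no item tower call online."""
    return item_vectors[candidate_ids] @ user_vector

user_vector = user_tower([3, 17, 42])        # computed once per request
candidate_ids = np.array([5, 17, 256, 999])  # a small candidate set
scores = score_candidates(user_vector, candidate_ids)
ranked = candidate_ids[np.argsort(-scores)]  # best candidate first
```

The point of the design is that the only per-request model call is the user tower; everything on the item side is a lookup plus a dot product.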

But that’s me describing a use case where you have some reasonable, small number of candidates you want to calculate user-item affinities for. This is true for the ranking stage. However, at the candidate generation stage, the problem becomes more complicated: we need to calculate affinities for all items in the catalog, select the top-N (where N is typically in the hundreds to thousands) with the highest affinity values, and then forward them to the next stages.

This is where two-tower models are invaluable: we can quickly generate an approximate top-N by dot product, even for huge catalogs, using approximate nearest neighbor search methods. We build a special “index” (typically a graph structure, as in the HNSW method) over the set of already calculated item vectors, store it in the service, and query it with user vectors to extract an approximate top-N for them.

Building this index is difficult and time-consuming (with a separate challenge of quickly updating and rebuilding an index). That said, it can still be done offline, and then the binary and the index can be uploaded to the service, where we’ll search for candidates at runtime.
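For reference, here is a minimal sketch of the exact top-N that an approximate index such as HNSW is meant to approximate. It is a brute-force baseline, not an ANN implementation; the function name `exact_top_n` and the random data are assumptions for illustration.

```python
import numpy as np

def exact_top_n(user_vector, item_vectors, n):
    """Exact top-n item indices by dot product, highest score first."""
    scores = item_vectors @ user_vector
    # argpartition finds the n largest scores without sorting the whole catalog.
    top = np.argpartition(-scores, n - 1)[:n]
    return top[np.argsort(-scores[top])]

rng = np.random.default_rng(1)
item_vectors = rng.normal(size=(10_000, 16))  # hypothetical catalog
user_vector = rng.normal(size=16)
top_ids = exact_top_n(user_vector, item_vectors, n=100)
```

This scan touches every item vector on every request, which is exactly the cost that an offline-built index avoids for catalogs with millions or billions of items.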

How Do We Encode a User Into a Vector?

Classical algorithms solved this problem quite simply: in matrix factorization methods (like ALS), the user vector was “trainable”, represented by the model parameters, and determined during the optimization procedure. In user-item collaborative filtering methods, a user was assigned a vector of catalog dimensionality in which the i-th coordinate corresponded to a specific item and represented how often the user interacted with that item (e.g., how frequently they bought it or how they rated it).

The modern approach is to encode users with transformers. We take the user’s anonymized history (that is, a sequence of events), encode these events into vectors, and then apply a transformer. In the most basic case, events are purchases or likes; in other cases, it could be the entire history of interactions within a company’s ecosystem.

Initially, when transformers were first used in recommendations, researchers drew analogies with NLP: a user is like a sentence, and the words in it represent purchases, likes, and other interactions.

Another type of neural network recommender model is the early-fusion model. These models don’t separate user and item information into two towers but rather process all information together. That is, we fuse all information about the user, the item, and their interaction at an early stage. In contrast, two-tower models are said to feature late fusion through the dot product. Early-fusion models are more expressive than two-tower models: they can capture more complex signals and learn more non-trivial dependencies.

However, it’s difficult to apply them outside the ranking stage because of their computational burden and the need to recalculate the entire model for each user query and each candidate. Unlike two-tower models, they don’t support the factorization of computations.

We utilize various architecture types, including two-tower models with transformers and models with early fusion. We use two-tower architectures more often because they’re highly efficient, suitable for all stages at once, and still yield good quality gains with considerably fewer resources.

We used to train two-tower models in two stages:

- Pre-training with contrastive learning. We train the model to align users with their positive user-item interactions using contrastive learning.

- Task-specific fine-tuning. As in NLP, fine-tuning is task-specific. If the model will be used for ranking, we train it to correctly rank the recommendations shown to the user: if we showed two items and the user liked one and disliked the other, we want the model to rank them in the same order. For retrieval, the task resembles pre-training but employs additional techniques that increase candidate recall.

In the next section, we’ll explore how this process has changed with our newer models.

Scaling Recommender Systems

Is there a limit to the size of recommender models, after which we no longer see size-related improvements in the quality of recommendations?

For a long time, our recommender models (and not just ours, but models across industry and academia) were very small, which suggested that the answer to this question was “yes”.

However, in deep learning, there’s the scaling hypothesis, which states that as models become larger and the volume of data increases, the model quality should improve significantly.

Much of the progress in deep learning over the past decade can be attributed to this hypothesis. Even the earliest successes in deep learning were based on scaling, with the emergence of an extensive dataset for image classification, ImageNet, and the good performance of neural networks (AlexNet) on that dataset.

The scaling hypothesis is even more evident in language models and natural language processing (NLP): you can predict how quality improves with the amount of computation and express the corresponding scaling laws.

What do I mean when I say recommender models can be made bigger?

There are as many as four different axes to scale.

Embeddings. We have a variety of information about users and items, so we have access to a wide range of features, and a large portion of these features are categorical. Examples of categorical features are item ID, artist ID, genre, and language.

Categorical features have a very high cardinality (number of unique values), reaching billions, so if you make large trainable embeddings (vector representations) for them, you get huge embedding matrices.

That said, embeddings are the bottleneck between the input data and the model, so you need to make them large for good quality. For example, Meta* has embedding matrices with sizes ranging from 675 billion to 13 trillion parameters, while Google reported at least 1 billion parameters in YoutubeDNN back in 2016. Even Pinterest, which had long promoted inductive graph embeddings from PinSage [1, 2], has recently started using large embedding matrices.

Context length. For decades, recommender system engineers have been busy generating features. In modern ranking systems, the number of features can reach hundreds or even thousands, and Yandex also has services like this.

Another example of “context” in a model is the user’s history in a transformer. Here, the size of the context is determined by the length of the history. In both industry and academia, this number tends to be very small, with only a few hundred events at best.

Training dataset size. I already mentioned that we have a lot of data. Recommender systems produce hundreds of datasets that are comparable in size to the GPT-3 training dataset.

The industry has several showcases of massive datasets with billions of training examples: 2 billion, 2.1 billion, 3 billion, 60 billion, 100 billion, 146 billion, 500 billion.

Encoder size. The standard for early-fusion models is millions or tens of millions of parameters. According to the Google papers, “simplified” versions of their Wide&Deep models had 1 to 68 million parameters for the experiments [1, 2]. And if we use a two-layer DCN-v2 (a popular neural network layer for early-fusion models) over a thousand continuous features, we’ll get no more than 10 million parameters.

Two-tower models most often use tiny transformers to encode the user: for example, two transformer blocks with hidden layer dimensionality not exceeding a couple of hundred. This configuration will have at most a few million parameters.
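A quick back-of-the-envelope check of these orders of magnitude. The formulas below are rough assumptions, not the exact configurations from the cited papers: a full-rank DCN-v2 cross layer is counted as a d × d weight matrix plus a bias, a transformer block as four attention projections plus a two-layer MLP with a 4× expansion, and a hidden size of 256 is picked as an illustrative value.

```python
def dcn_v2_cross_params(num_features: int, num_layers: int) -> int:
    """Full-rank DCN-v2 cross layer: a d x d weight matrix plus a bias of size d."""
    d = num_features
    return num_layers * (d * d + d)

def transformer_block_params(hidden: int, ff_mult: int = 4) -> int:
    """Rough count for one block: attention (Q, K, V, output) plus a two-layer MLP.
    Biases and layer norms are ignored."""
    attention = 4 * hidden * hidden
    mlp = 2 * ff_mult * hidden * hidden
    return attention + mlp

print(dcn_v2_cross_params(num_features=1000, num_layers=2))  # 2002000
print(2 * transformer_block_params(hidden=256))              # 1572864
```

Both numbers land in the low millions, consistent with the “no more than 10 million” and “at most a few million” estimates above.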

And while the sizes of the embedding matrices and training datasets are already quite large, scaling the length of user history and the capacity of the encoder part of the model remains an open question. Is there any significant scaling along these axes or not?

This was the question on our minds in February 2024. Then an article from researchers at Meta, titled Actions Speak Louder than Words, cheered us all up a bit.

The authors presented a new encoder architecture called HSTU and formulated both the ranking problem and the candidate generation problem as generative modeling. The model had a very long history length (8000 events!) along with an extensive training dataset (100 billion examples), and the user history encoder was much larger than the few million parameters mentioned above. However, even here, the largest encoder configuration mentioned has only 176 million parameters, and it’s unclear whether they deployed it (judging by the subsequent articles, they didn’t).

Are 176 million parameters in an encoder a lot or a little? If we look at language models, the answer is clear: an LLM with 176 million parameters in the encoder will be vastly inferior in capability and problem-solving quality to modern SOTA models with billions or even trillions of parameters.

Why, then, do we have such small models in recommender systems?

Why can’t we achieve a similar leap in quality if we replace natural language texts with anonymized user histories in which actions act as words? Have recommender models already reached the ceiling of their baseline quality, so that all we have left is to make small incremental improvements, tweaking features and target values?

These were the existential questions we asked ourselves when designing our own new ARGUS approach.

RecSys × LLM × RL

After plowing through the extensive literature on scaling, we found that three main conditions determine the success of neural network scaling:

- Lots of data.

- A sufficiently expressive architecture with a large model capacity.

- The most general, fundamental learning task possible.

For example, LLMs are very expressive and powerful transformers that learn from literally all the text data on the internet. Moreover, the task of predicting the next word is a fundamental task that, in reality, decomposes into various tasks related to different fields, including grammar, erudition, mathematics, physics, and programming. All three conditions are met!

If we look at recommender systems:

- We also have lots of data: trillions of interactions between users and items.

- We can just as easily use transformers.

- We just need to find the right learning task to scale the recommender model.

That’s what we did.

There’s an interesting aspect of pre-training large language models. If you just ask a pre-trained LLM about something, it will give an average answer: the most likely answer it has encountered in the training data. That answer won’t necessarily be good or right.

But if you add a prompt before the question, like “Imagine you’re an expert in X”, it will start providing much more relevant and correct answers.

That’s because LLMs don’t just learn to imitate answers from the internet; they also acquire a more fundamental understanding of the world in an attempt to condense all the information from the training set. They learn patterns and abstractions. And it’s precisely because the LLM knows a lot of answers and yet possesses a fundamental understanding of the world that we can obtain good answers from it.

We tried to apply this logic to recommender systems. First, you need to express recommendations as a reinforcement learning task:

- A recommender system is an agent.

- Actions are recommendations. In the most basic case, the recommender system recommends one item at a time (for example, one music track in the music streaming app each time).

- The environment is the users, their behaviors, patterns, preferences, and interests.

- The policy is a probability distribution over items.

- The reward is a user’s positive feedback in response to a recommendation.

There’s a direct analogy to the LLM example. “Answers from the internet” are the actions of past recommender systems (logging policies), and general knowledge about the world is understanding users, their patterns, and preferences. We want our new model to be able to:

- Imitate the actions of past recommender systems.

- Have a good understanding of the users.

- Adjust its actions to achieve a better outcome.

Before we move on to our new approach, let’s examine the most popular setup for training recommendation transformers: next-item prediction. The SASRec model is very representative here. The system accumulates a user’s history of positive interactions with the service (for example, purchases), and the model learns to predict which purchase is likely to come next in the sequence. That is, instead of next-token prediction, as in NLP, we go for next-item prediction.

This approach (SASRec and standard next-item prediction) is not consistent with the philosophy I described earlier, which focused on adjusting the logging policy based on fundamental knowledge of the world. It would seem that to predict what the user will buy next, the model should operate under this philosophy:

- It should understand what could be shown to the user by the recommender system that was in production at the time for which the prediction needs to be made. That is, it should have a good model of logging policy behavior (i.e., a model that can be used to imitate it).

- It needs to understand what the user might have liked from the things shown by the past recommender system, meaning that it needs to understand their preferences, which are that very general knowledge about the world.

But models like SASRec don’t explicitly model any of these things. They lack complete information about past logging policies (we only see recommendations with positive outcomes), and they also don’t learn how to replicate these logging policies. There’s no way to know what the past recommender system could have offered. At the same time, they don’t fully learn the model of the world or the user: all negative feedback is ignored, and only positive feedback is considered.

ARGUS: AutoRegressive Generative User Sequential Modeling

AutoRegressive Generative User Sequential modeling (ARGUS) is our new approach to training recommendation transformers.

First, we examine the entire anonymized user history, including not only positive interactions but also all other interactions. We capture the essence of the interaction context: the time it occurred, the device used, the product page the user was on, their My Vibe personalization settings, and other relevant details.

User history is a specific sequence of triples (context, item, feedback), where context refers to the interaction context, item represents the object the user interacts with, and feedback denotes the user’s response to the interaction (such as whether the user liked the item, bought it, etc.).

Next, we identify two new learning tasks, both of which extend beyond the conventional next-item prediction widely used in industry and academia.

Next item prediction

Our first task is also called next item prediction. Looking at the history and the current interaction context, we predict which item will be interacted with: P(item | history, context).

- If the history contains only recommendation traffic (events generated directly by the recommender system), then the model learns to imitate the logging policy (recommendations from the past recommender system).

- If there’s also organic traffic (any traffic other than recommendation traffic, such as traffic from search engines, or when the user visits the library and listens to their favorite track), we also gain more general knowledge about the user, unrelated to the logging policy.

Important: even though this task has the same name as in SASRec (next item prediction), it’s not the same task at all. We predict not only positive but also negative interactions, and also take into account the current context. The context helps us understand whether the action is organic or not, and if it’s a recommendation, what surface it’s on (place, page, or carousel). It also generally reduces the noise level during model training.

Context is essential for music recommendations: the user’s mood and their current situation have a significant impact on the type of music they want to listen to.

The task of predicting an element from a set is typically expressed as a classification problem, where the elements of the original set serve as classes. Then, we need to use a cross-entropy loss function for training, where the softmax function is applied to the logits (unnormalized outputs of the neural network). Softmax calculation requires computing the sum of exponentials of the logits across all classes.

While vocabulary sizes in LLMs reach hundreds of thousands of tokens in the worst case, so softmax calculation is not a significant problem there, it becomes a concern in recommender systems. Here, catalogs consist of millions or even billions of items, and calculating the full softmax is an impossible task. This is a topic for a separate big article, but ultimately, we have to use a tricky loss function called “sampled softmax” with a logQ correction:

- N is a mixture of in-batch and uniform negatives.

- logQ(n) is the logQ correction.

- Temperature T is a trained parameter Eᵀ clipped to [0.01, 100].
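To make the terms above concrete, here is a minimal NumPy sketch of a generic sampled softmax with a logQ correction. It is an assumption-laden illustration, not the exact loss used in ARGUS: the function name, the choice to correct the positive logit as well, and the example sampling probabilities are all hypothetical.

```python
import numpy as np

def sampled_softmax_loss(user_vec, pos_vec, neg_vecs,
                         log_q_pos, log_q_neg, temperature):
    """Cross-entropy over {positive} + sampled negatives with logQ-corrected logits.

    log_q_* are log-probabilities of each item being sampled as a negative.
    """
    temperature = np.clip(temperature, 0.01, 100.0)
    pos_logit = user_vec @ pos_vec / temperature - log_q_pos
    neg_logits = neg_vecs @ user_vec / temperature - log_q_neg
    logits = np.concatenate([[pos_logit], neg_logits])
    # Numerically stable log-sum-exp over the sampled set only.
    m = logits.max()
    log_z = m + np.log(np.exp(logits - m).sum())
    return log_z - pos_logit

rng = np.random.default_rng(0)
user_vec = rng.normal(size=8)
pos_vec = rng.normal(size=8)
neg_vecs = rng.normal(size=(5, 8))  # stand-in for in-batch + uniform negatives
loss = sampled_softmax_loss(
    user_vec, pos_vec, neg_vecs,
    log_q_pos=np.log(0.01), log_q_neg=np.full(5, np.log(0.01)),
    temperature=0.05,
)
```

The sum in the denominator runs over a handful of sampled items instead of the whole catalog, and subtracting logQ compensates for popular items being sampled as negatives more often.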

Feedback prediction

Feedback prediction is the second learning task. Considering the history, the current context, and the item, we predict user feedback: P(feedback | history, context, item).

The first task, next item prediction, teaches the model how to imitate logging policies (and to understand users if there’s organic traffic). The feedback prediction task, on the other hand, is focused exclusively on acquiring general knowledge about users, their preferences, and interests.

It is very similar to how the ranking variant of the model from “Actions Speak Louder than Words” learns on a sequence of pairs (item, action). However, here the context token is handled separately, and there are more than just recommender contexts present.

Feedback can have several components: whether a track was liked, disliked, added to a playlist, and what portion of the track was listened to. We predict all types of feedback by decomposing them into individual loss functions. Any loss function can be used for a specific component, including cross-entropy or regression. For example, binary cross-entropy is sufficient to predict whether a like was present or not.

Although some feedback is much more common than other feedback (there are usually far fewer likes than long listens), the model does a good job of learning to predict all signals. The larger the model, the easier it is to learn all tasks at once, without conflicts. Moreover, frequent feedback (listens) actually helps the model learn how to predict rare, sparse feedback (likes).
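As an illustration of decomposing feedback into per-component losses, here is a small sketch. The component names, the choice of binary cross-entropy for like/dislike and squared error for the listened fraction, and the equal default weights are assumptions for the example, not the actual ARGUS configuration.

```python
import numpy as np

def binary_cross_entropy(logit, label):
    """Stable BCE on a raw logit: log(1 + exp(logit)) - label * logit."""
    return np.logaddexp(0.0, logit) - label * logit

def feedback_loss(pred, target, weights=None):
    """Sum of per-component losses for one (history, context, item) example."""
    weights = weights or {"like": 1.0, "dislike": 1.0, "listened_fraction": 1.0}
    like = binary_cross_entropy(pred["like_logit"], target["like"])
    dislike = binary_cross_entropy(pred["dislike_logit"], target["dislike"])
    listened = (pred["listened_fraction"] - target["listened_fraction"]) ** 2
    return (weights["like"] * like
            + weights["dislike"] * dislike
            + weights["listened_fraction"] * listened)

pred = {"like_logit": 0.0, "dislike_logit": 0.0, "listened_fraction": 0.5}
target = {"like": 1.0, "dislike": 0.0, "listened_fraction": 1.0}
total = feedback_loss(pred, target)
```

With both logits at zero, each binary component contributes log 2, and the regression component contributes 0.25.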

If we combine all this into a single learning task, we get the following:

- Create histories for the user from triples (context, item, feedback).

- Use the transformer.

- Predict the next item based on the hidden state of the context.

- Predict the user’s feedback after interacting with the item based on the item’s hidden state.
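The steps above can be sketched as plain data manipulation: flatten the triples into a token sequence and record which position feeds which head. The function names and the toy history are hypothetical; the transformer itself is left out.

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]  # (context, item, feedback)

def flatten_history(triples: List[Triple]) -> List[Tuple[str, str]]:
    """Turn each triple into three consecutive tokens tagged with their kind."""
    tokens = []
    for context, item, feedback in triples:
        tokens += [("context", context), ("item", item), ("feedback", feedback)]
    return tokens

def training_targets(tokens):
    """For each position, which head is trained and what it should predict.

    In a causal transformer, position t sees only tokens 0..t.
    """
    targets = []
    for pos in range(len(tokens) - 1):
        kind, _ = tokens[pos]
        _, next_value = tokens[pos + 1]
        if kind == "context":   # context hidden state -> next item prediction
            targets.append((pos, "next_item", next_value))
        elif kind == "item":    # item hidden state -> feedback prediction
            targets.append((pos, "feedback", next_value))
    return targets

history = [("recs_feed", "track_1", "like"), ("search", "track_2", "skip")]
tokens = flatten_history(history)
targets = training_targets(tokens)
```

Feedback tokens produce no target of their own here; they only serve as input for later positions.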

Let me also comment on how this differs from HSTU. In Actions Speak Louder than Words, the authors train two separate models for candidate generation and ranking. The candidate generation model sees the entire history, but, like SASRec, it models only positive interactions and doesn’t compute the loss function in cases where there’s a negative interaction. The ranking model, as mentioned earlier, learns a task similar to our feedback prediction.

Our solution offers a more comprehensive next item prediction task and a more comprehensive feedback prediction task, and the model learns both tasks simultaneously.

Simplified ARGUS

Our approach has one big drawback: we’re inflating the user’s history. Because each interaction with an item is represented by three tokens at once (context, item, feedback), we have to feed almost 25,000 tokens (3 × 8192 = 24,576) into the transformer to analyze 8192 recent user listens.

One could argue that this is still not significant and that the context length is much longer in LLMs; however, this isn’t entirely accurate. LLMs, on average, have much smaller numbers, typically hundreds of tokens, especially during pre-training.

In contrast, on our music streaming platform, for example, users often have thousands or even tens of thousands of events. We already have much longer context lengths, and inflating these lengths by a factor of three has an even greater impact on training speed. To tackle this, we created a simplified version of the model, in which each triple (context, item, feedback) is condensed into a single vector. In terms of input format, it resembles our previous generations of transformer models; however, we maintain the same two learning tasks: next item prediction and feedback prediction.

To predict the next item, we take the hidden state from the transformer corresponding to the triple (c, i, f) at the previous point in time, concatenate the current context vector to it, compress it to a lower dimension using an MLP, and then use the sampled softmax to learn to predict the next item.

To predict the feedback, we additionally concatenate the vector of the current item and then use an MLP to predict all the required target variables. In terms of recommender transformer architectures, our model becomes less target-aware and less context-aware; however, it still performs well, enabling a three-fold acceleration.
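A minimal shape-level sketch of the two heads in the simplified model follows. All dimensions, the two-layer ReLU MLP, the random weights, and the assumption that the feedback head takes the concatenation of hidden state, context vector, and item vector are illustrative choices, not details confirmed by the text.

```python
import numpy as np

rng = np.random.default_rng(0)
hidden_dim, context_dim, item_dim, query_dim = 32, 8, 16, 16

def mlp(x, w1, w2):
    """Two-layer MLP with ReLU; biases omitted for brevity."""
    return np.maximum(x @ w1, 0.0) @ w2

# Hypothetical, randomly initialized weights.
nip_w1 = rng.normal(size=(hidden_dim + context_dim, 64))
nip_w2 = rng.normal(size=(64, query_dim))
fp_w1 = rng.normal(size=(hidden_dim + context_dim + item_dim, 64))
fp_w2 = rng.normal(size=(64, 2))  # e.g., like logit and listened fraction

def next_item_query(prev_hidden, context_vec):
    """NIP head: previous triple's hidden state + current context -> query vector."""
    return mlp(np.concatenate([prev_hidden, context_vec]), nip_w1, nip_w2)

def feedback_outputs(prev_hidden, context_vec, item_vec):
    """FP head: same inputs plus the current item vector -> feedback targets."""
    return mlp(np.concatenate([prev_hidden, context_vec, item_vec]), fp_w1, fp_w2)

prev_hidden = rng.normal(size=hidden_dim)  # transformer output for triple t-1
context_vec = rng.normal(size=context_dim)
item_vec = rng.normal(size=item_dim)

query = next_item_query(prev_hidden, context_vec)
item_logit = query @ item_vec              # one logit for the sampled softmax
feedback = feedback_outputs(prev_hidden, context_vec, item_vec)
```

Because the current context and item enter only after the transformer, the sequence stays at one token per interaction, which is where the speed-up comes from.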

ARGUS Implementation

A model trained in this two-headed mode for both tasks simultaneously (next item prediction and feedback prediction) can be deployed as is. The NIP head is responsible for candidate selection, and the FP head for final ranking.

But we didn’t want to do that, at least not for our first deployment:

- Our goal was to deploy a very large model, so we initially focused on offline deployment. With offline deployment, user and item vectors are recalculated daily within a separate regular process, and you only need to calculate the dot product in the runtime environment.

- The pre-trained version of ARGUS implies access to the user’s history without any delay: we see all events in their history up to the current point in time when the prediction is made. That is, it needs to be applied at runtime.

- The NIP head predicts all user interactions, whereas a model is usually trained to predict only future positive interactions to generate candidates. But predicting positive interactions is a heuristic, a surrogate learning task. It might even be better to use a head that predicts all interactions because it learns to be consistent with the ranking: if an item has been recommended, it means the ranking liked it. But in this situation, we weren’t ready to experiment with that and instead wanted to follow the well-trodden path.

- The FP head learns with pointwise losses: whether a track will be liked or not, what portion of the track will be listened to, and so on. But we still often train models for pairwise ranking: we learn to rank items that were recommended “next to each other” and received different feedback. Some argue that pointwise losses are sufficient for training ranking models, but in this case, we don’t replace the entire ranking stack. Instead, we aim to add a new, powerful, neural-network-based feature to the final ranking model. If the final ranking model is trained for a specific task (such as pairwise ranking), then the neural network that generates the feature is most efficiently trained for that task; otherwise, the final model will rely less on our feature. Accordingly, we’d like to pre-train ARGUS for the same task as the original ranking model, allowing us to utilize it in ranking.

There are different deployment use instances past the standard candidate technology and rating phases, and we’re actively researching these as properly. Nevertheless, for our first deployment, we went with an offline two-tower rating:

- We decided to fine-tune ARGUS so that it could be used as an offline two-tower model. We use it to recalculate user and item vectors daily, and user preferences are determined via the dot product of the user vector with the item vectors.

- We pre-trained ARGUS for a pairwise ranking task similar to the one on which the final ranking model is trained. This means that we select, in one way or another, pairs of tracks that the user heard and rated differently in terms of positive feedback, and we want to learn how to rank them correctly.
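To make the two-tower pairwise setup above more concrete, here is a minimal sketch. It assumes a logistic (RankNet-style) pairwise loss over dot-product scores; the article does not state which pairwise loss is actually used, and the function names are hypothetical.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors: the two-tower score."""
    return sum(a * b for a, b in zip(u, v))


def pairwise_logistic_loss(user_vec, better_item_vec, worse_item_vec):
    """Assumed pairwise loss: small when the track with better feedback
    gets a higher dot-product score than the other track in the pair."""
    margin = dot(user_vec, better_item_vec) - dot(user_vec, worse_item_vec)
    # log(1 + exp(-margin)), written in a numerically stable form
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Toy vectors standing in for the outputs of the user and item towers.
user = [1.0, 0.0]
liked_track = [2.0, 0.0]
skipped_track = [0.0, 1.0]
loss = pairwise_logistic_loss(user, liked_track, skipped_track)
```

The loss shrinks as the preferred track's score pulls ahead, so minimizing it pushes the model toward ordering each pair correctly.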

We build models like these quite often: they’re easy to train and cheap to deploy in terms of resources and development costs. However, our previous models were significantly smaller and were trained differently: not with the ARGUS procedure, but first with the usual contrastive learning between users and positives, and then with fine-tuning for the task.

Our previous contrastive pre-training procedure involved compiling multiple training examples per user: if the user had n purchases, there would be n samples in the dataset. We didn’t use autoregressive learning, which means we ran the transformer n times during training. This approach let us be very flexible in creating (user, item) pairs for training, use any history format, encode context together with the user, and account for lags. For example, when predicting likes, we could use a one-day lag in the user’s history. However, training was quite slow.

ARGUS pre-training employs autoregressive learning, where we learn from all events in the user’s activity simultaneously in a single transformer run. This is a powerful acceleration that allowed us to train much larger models using the same resources.

During fine-tuning, we also used to run the transformer many times for a single user. This is known as impression-level learning, the approach Meta used before HSTU. If a user is shown an item at a particular moment, we generate a sample of the form (user, item). The dataset can contain a large number of such impressions for a single user, and we rerun the transformer for each one of them. For pairwise ranking, we considered triples of the form (user, item1, item2). These are the samples we used before.

Having seen the acceleration at the pre-training stage, we decided to take a similar approach to fine-tuning. We developed a fine-tuning procedure that teaches the two-tower model to rank while running the transformer only once.

Let’s say we have the user’s entire history for a year, and all the recommendations shown to the user during the same period. By applying a transformer with a causal mask over the entire history, we get vector representations of the user for all the moments in that year at once, and so we can:

- Separately calculate the vectors of the shown items.

- Compare the timestamps and map recommendation impressions to the user vectors that correspond to the required lag in user history delivery.

- Calculate all the required dot products and all terms of the loss function.

And all of this happens at once for the entire year, in a single transformer run.
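The timestamp-matching step above can be sketched as follows. This is a minimal illustration, not the production pipeline: `user_states` stands in for the per-event outputs of a causally masked transformer, and the function names and the lag value are assumptions.

```python
from bisect import bisect_right


def align_impressions(event_times, impression_times, lag_seconds):
    """For each impression, find the index of the latest history event that
    is at least `lag_seconds` older than the impression (None if no such
    event exists). `event_times` must be sorted in ascending order."""
    indices = []
    for t in impression_times:
        pos = bisect_right(event_times, t - lag_seconds)
        indices.append(pos - 1 if pos > 0 else None)
    return indices


def score_impressions(user_states, item_vecs, indices):
    """Dot product between each shown item and the lagged user state."""
    scores = []
    for idx, item in zip(indices, item_vecs):
        if idx is None:
            scores.append(None)  # not enough history before this impression
        else:
            scores.append(sum(a * b for a, b in zip(user_states[idx], item)))
    return scores


# One forward pass yields a user state per event; here they are toy vectors.
event_times = [0, 100, 200, 300]
user_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0]]
impression_times = [50, 250, 400]
item_vecs = [[1.0, 1.0], [3.0, 2.0], [0.5, 4.0]]

indices = align_impressions(event_times, impression_times, lag_seconds=100)
scores = score_impressions(user_states, item_vecs, indices)
```

Because every impression only looks up a precomputed user state, the number of transformer runs no longer depends on the number of impressions.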

Previously, we would rerun the transformer for each pair of impressions; now, we process all the impressions at once in a single run. This is a huge acceleration: by a factor of tens, hundreds, or even thousands. To use such a two-tower model, we can simply take the user’s vector representation at the last moment in time (corresponding to the last event in the history) as the current vector representation. For the items, we can use the encoder that was applied to the impressions during training. In training, we simulate a one-day lag in user history and then run the model offline, recalculating user vectors daily.

When I say that we process the user’s entire year of history in a single transformer run, I’m simplifying somewhat. In reality, we enforce a limit on the maximum history length, so a user can have multiple samples, or chunks, in the dataset. For pre-training, these chunks don’t overlap.

During fine-tuning, however, there are limits not only on the maximum history length but also on its minimum length, as well as on the maximum number of recommendation impressions in a single training example used to train the model for ranking.
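As a rough illustration of the non-overlapping pre-training chunks mentioned above, here is a minimal sketch. The function name and the handling of a short final chunk are assumptions; the article does not say how leftover events are treated, and the fine-tuning limits are not modeled here.

```python
def split_into_chunks(events, max_len):
    """Split a chronologically ordered history into consecutive,
    non-overlapping chunks of at most `max_len` events each."""
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    return [events[i:i + max_len] for i in range(0, len(events), max_len)]


# A toy history of seven events with a maximum chunk length of three.
history = list(range(7))
chunks = split_into_chunks(history, max_len=3)
```

Each chunk then becomes a separate training sample for the same user.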

Results

We chose our music streaming service as the first one to experiment with. Recommendations are crucial here, and the service has a large number of active users. We’ve built a huge training dataset with over 300 billion listens from millions of users. This is tens or even hundreds of times larger than the training datasets we’d used before.

What is a (context, item, feedback) triple in a music streaming service?

- Context: whether the current interaction is a recommendation or organic. If it’s a recommendation, which surface it’s on, and if it’s My Vibe, what the settings are.

- Item: a music track. The most important feature for item encoding is the item ID. We use unified embeddings to encode high-cardinality features. In this case, we take three 512K hashes per item. We use a fixed unified embedding matrix with 130 million parameters in our experiments.

- User feedback: whether a track was liked, and what portion of the track was heard.
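The idea of encoding an item ID through several hashes into a shared embedding table can be sketched roughly as below. Everything concrete here is an assumption made for illustration: the hash function, the tiny table size, and summing the looked-up rows (the article only says that three 512K hashes are taken per item).

```python
import hashlib


def bucket_ids(item_id, num_buckets, num_hashes=3):
    """Map one item ID to `num_hashes` bucket indices using seeded hashes."""
    ids = []
    for seed in range(num_hashes):
        digest = hashlib.blake2b(
            f"{seed}:{item_id}".encode("utf-8"), digest_size=8
        ).digest()
        ids.append(int.from_bytes(digest, "big") % num_buckets)
    return ids


def embed(item_id, table):
    """Look up one row per hash in the shared table and sum them
    (summation is an assumed way of combining the rows)."""
    rows = [table[b] for b in bucket_ids(item_id, len(table))]
    return [sum(values) for values in zip(*rows)]


# Toy shared table: 16 buckets, 2 dimensions (real tables are far larger).
table = [[float(i), float(-i)] for i in range(16)]
vector = embed("track_42", table)
```

Two different items rarely collide on all three hashes at once, which is what lets a fixed-size table serve a very large catalog.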

For offline quality evaluation, we use data from the week following the training period, that is, a global temporal split.

To assess the quality of the pre-trained model, we look at the loss function values for the pre-training tasks: next item prediction and feedback prediction. That is, we measure how well the model learned to solve the tasks we created for it. The smaller the value, the better.

Important: We consider the user’s history over a long period, but the loss function is only calculated for events that occur within the test period.

During fine-tuning, we learn to correctly rank item pairs based on user feedback, which makes PairAccuracy (a metric that measures the share of pairs correctly ordered by the model) a suitable offline metric for us. In practice, we also weight pairs slightly differently depending on the feedback: for example, pairs in which the person liked one track and skipped the other have a higher weight than pairs in which the person listened to one track and skipped the other.
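A weighted version of PairAccuracy can be sketched as follows. The weights in the example and the convention of counting ties as incorrect are assumptions for illustration, not the values used in production.

```python
def weighted_pair_accuracy(pairs):
    """Weighted share of pairs where the item with better feedback is scored
    higher. Each pair is (score_preferred, score_other, weight)."""
    total = 0.0
    correct = 0.0
    for score_preferred, score_other, weight in pairs:
        total += weight
        if score_preferred > score_other:  # ties count as incorrect here
            correct += weight
    if total == 0:
        raise ValueError("no pairs to evaluate")
    return correct / total


# Hypothetical weights: a liked-vs-skipped pair counts twice as much
# as a listened-vs-skipped pair.
pairs = [
    (0.9, 0.1, 2.0),  # liked vs skipped, ordered correctly
    (0.2, 0.4, 1.0),  # listened vs skipped, ordered incorrectly
    (0.7, 0.3, 1.0),  # listened vs skipped, ordered correctly
]
accuracy = weighted_pair_accuracy(pairs)
```

With all weights equal to one, this reduces to the plain share of correctly ordered pairs.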

Our deployment scenario involves adding a powerful new feature to the final ranker. For this reason, we measure the relative increase in PairAccuracy for the final ranker with the new feature added, compared to the final ranker without it. The final ranker in our music streaming platform is a gradient boosting model.

A/B Test Results and Measurements

Our initial goal was to scale recommendation transformers. To test the scaling, we selected four transformer configurations of different sizes, ranging from 3.2 million to 1.007 billion parameters.

We also decided to test the performance of the HSTU architecture. In “Actions Speak Louder than Words”, the authors proposed a new encoder architecture, which is quite different from the transformer architecture. According to the authors’ experiments, this architecture outperforms transformers in recommendation tasks.

There is scaling! Each new jump in architecture size results in a quality gain, both in pre-training and in fine-tuning.

HSTU proved to be no better than transformers. We used the largest configuration mentioned by the authors in “Actions Speak Louder than Words.” It has one and a half times more parameters than our medium transformer, while delivering roughly the same quality.

Let’s visualize the metrics from the table as a graph. There, we can observe a scaling law for our four points: quality appears to depend linearly on the logarithm of the number of parameters.

We conducted a small ablation study to find out whether we could simplify our model or remove any parts of the training.

If you remove pre-training, the model’s quality drops.

If you reduce the duration of fine-tuning, the drop becomes even more pronounced.

At the beginning of this article, I mentioned that the authors of “Actions Speak Louder than Words” trained a model with a history length of 8,000 items. We decided to give it a try: it turns out that handling such a deep musical history for a user results in a noticeable improvement in recommendations. Previously, our models used a maximum of 1,500–2,000 events. This was the first time we had the opportunity to cross this threshold.

Implementation Results

We’ve been developing transformers for music recommendations for about three years now, and we’ve come a long way. Here’s what we have learned and how our transformer-based models for music recommendations have progressed over this time.

- Our first three transformers were all offline. User and item vectors were recalculated daily. User vectors were then loaded into a key-value store, and item vectors were stored in the service’s RAM, so only the dot product was calculated at runtime. We used some of these models not only for ranking, but also for candidate generation (we’re familiar with building multi-head models that perform both tasks). In such cases, the HNSW index, from which candidates can be retrieved, also resides in the service’s RAM.

- The first model only had a signal about likes, the second model had a signal about listens (including skips), and in the third model, we combined both signal types (explicit and implicit).

- The v4 model is an adaptation of v3 that runs at runtime with a slight lag in user history; its encoder is 6x smaller than that of the v3 model.

- The new ARGUS model has eight times the user history length and ten times the encoder size. It also uses the new training procedure I described earlier.

TLT is the total listening time. The “like” likelihood is the chance that a user likes a recommendation when it’s shown to them. Each deployment resulted in a metrics boost for our personalized recommendations. And the first ARGUS deployment gave about the same increase in metrics as all the previous deployments combined!

My Vibe also has a special setting, Unfamiliar, for which we use a separate ranking stack. We had a separate ARGUS deployment for this setting, achieving a 12% increase in total listening time and a 10% increase in “like” likelihood. The Unfamiliar setting is used by people who are interested in discovering new music. The fact that we saw a significant increase in this category confirms that ARGUS is more effective at handling non-trivial scenarios.

We deployed ARGUS in music scenarios on smart devices and increased the total time users spend with an active speaker by 0.75%. Here, the final ranker is not a gradient boosting model, but a full-scale ranking neural network. Thanks to this, we were able not only to feed a single scalar feature from ARGUS but also to pass full user and item vectors as input to the final ranker. Compared to a single scalar feature, this made the quality gain another 1.5 to 2 times larger.

ARGUS has already been deployed not only as a ranking feature, but also for candidate generation. The team has also adapted the offline ARGUS into a runtime version. These deployments yielded significant gains in key metrics. Neural networks are the future of recommender systems, but there’s still a long journey ahead.

Thanks for reading.