We discuss MELON, a technique that can determine object-centric camera poses entirely from scratch while reconstructing the object in 3D. MELON can easily be integrated into existing NeRF methods and requires as few as 4–6 images of an object.

A person’s prior experience and understanding of the world generally enable them to easily infer what an object looks like as a whole, even when looking at only a few 2D pictures of it. Yet the capacity for a computer to reconstruct the shape of an object in 3D from only a few images has remained a difficult algorithmic problem for years. This fundamental computer vision task has applications ranging from the creation of 3D models for e-commerce to autonomous vehicle navigation.

A key part of the problem is determining the exact positions from which the images were taken, known as pose inference. If camera poses are known, a range of successful techniques, such as neural radiance fields (NeRF) or 3D Gaussian Splatting, can reconstruct an object in 3D. But if these poses are not available, we face a difficult “chicken and egg” problem: we could determine the poses if we knew the 3D object, but we can’t reconstruct the 3D object until we know the camera poses. The problem is made harder by pseudo-symmetries, i.e., many objects look similar when viewed from different angles. For example, square objects like a chair tend to look similar after every 90° rotation. Pseudo-symmetries of an object can be revealed by rendering it on a turntable from various angles and plotting its photometric self-similarity map.

Self-similarity map of a toy truck model. Left: The model is rendered on a turntable from various azimuthal angles, θ. Right: The average L2 RGB similarity of a rendering from θ with that of θ*. The pseudo-symmetries are indicated by the dashed red lines.
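A minimal sketch of how such a map can be computed, using a hypothetical toy “renderer” in place of a real NeRF: `render_toy` returns four numbers whose dominant terms repeat every 90°, plus a weak term that differs between those views, roughly mimicking a square object. The map here is the mean squared difference from the reference view θ*, so lower means more similar, and its dips mark pseudo-symmetric viewpoints.

```python
import math

def render_toy(theta):
    """Hypothetical stand-in for rendering an object at azimuth theta (radians).
    The cos/sin(4*theta) terms repeat every 90 degrees (a pseudo-symmetry);
    the small 0.2*cos/sin(theta) terms differ between those look-alike views."""
    return [math.cos(4 * theta), math.sin(4 * theta),
            0.2 * math.cos(theta), 0.2 * math.sin(theta)]

def self_similarity_map(theta_ref_deg, step_deg=1):
    """Mean squared difference between the view at each azimuth and the reference view."""
    ref = render_toy(math.radians(theta_ref_deg))
    dists = []
    for deg in range(0, 360, step_deg):
        img = render_toy(math.radians(deg))
        dists.append(sum((a - b) ** 2 for a, b in zip(img, ref)) / len(ref))
    return dists

def pseudo_symmetric_views(dists, step_deg=1):
    """Azimuths (degrees) where the circular map has a strict local minimum."""
    n = len(dists)
    return [i * step_deg for i in range(n)
            if dists[i] < dists[i - 1] and dists[i] < dists[(i + 1) % n]]

dists = self_similarity_map(theta_ref_deg=30)
print(pseudo_symmetric_views(dists))  # [30, 120, 210, 300]
```

With θ* = 30°, the map dips at the true view and at the three 90° look-alikes; only the true view reaches zero, and that small residual difference is all a pose estimator has to go on.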

The diagram above only visualizes one dimension of rotation. The problem becomes even more complex (and difficult to visualize) when more degrees of freedom are introduced. Pseudo-symmetries make the problem ill-posed, with naïve approaches often converging to local minima. In practice, such an approach might mistake the back view for the front view of an object, because they share a similar silhouette. Previous techniques (such as BARF or SAMURAI) side-step this problem by relying on an initial pose estimate that starts close to the global minimum. But how can we approach this if such estimates aren’t available?

Methods such as GNeRF and VMRF leverage generative adversarial networks (GANs) to overcome the problem. These techniques can artificially “amplify” a limited number of training views, aiding reconstruction. GAN techniques, however, often have complex, sometimes unstable, training processes, making robust and reliable convergence difficult to achieve in practice. A range of other successful methods, such as SparsePose or RUST, can infer poses from a limited number of views, but they require pre-training on a large dataset of posed images, which isn’t always available, and can suffer from “domain-gap” issues when inferring poses for different types of images.

In “MELON: NeRF with Unposed Images in SO(3)”, spotlighted at 3DV 2024, we present a technique that can determine object-centric camera poses entirely from scratch while reconstructing the object in 3D. MELON (Modulo Equivalent Latent Optimization of NeRF) is one of the first techniques that can do this without initial camera pose estimates, complex training schemes or pre-training on labeled data. MELON is a relatively simple technique that can easily be integrated into existing NeRF methods. We demonstrate that MELON can reconstruct a NeRF from unposed images with state-of-the-art accuracy while requiring as few as 4–6 images of an object.

MELON

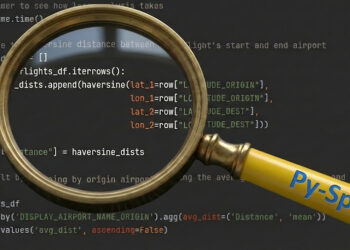

We leverage two key techniques to aid convergence on this ill-posed problem. The first is a very lightweight, dynamically trained convolutional neural network (CNN) encoder that regresses camera poses from training images. We pass a downscaled training image to a four-layer CNN that infers the camera pose. This CNN is initialized from noise and requires no pre-training. Its capacity is so small that it forces similar-looking images to similar poses, providing an implicit regularization that greatly aids convergence.
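The sketch below shows only the forward pass of such an encoder, in plain Python so it runs without a deep-learning framework. Everything architectural here is an assumption for illustration rather than MELON’s published configuration: the 2× average-pool downscaling, 3×3 stride-2 convolutions with ReLU, eight channels per layer, a 32×32 input (which the four layers reduce to 1×1), and a head that maps the features to latitude and longitude. Training the weights from photometric gradients would need an autodiff framework and is not shown.

```python
import math
import random

def downscale(image, factor):
    """Average-pool a [C][H][W] image (nested lists) by an integer factor."""
    c, h, w = len(image), len(image[0]), len(image[0][0])
    return [[[sum(image[ch][i * factor + di][j * factor + dj]
                  for di in range(factor) for dj in range(factor)) / factor ** 2
              for j in range(w // factor)]
             for i in range(h // factor)]
            for ch in range(c)]

def conv3x3_relu(x, weights, bias, stride=2):
    """Valid 3x3 convolution with the given stride, followed by ReLU. x is [C_in][H][W]."""
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    h_out, w_out = (h - 3) // stride + 1, (w - 3) // stride + 1
    out = []
    for w_co, b_co in zip(weights, bias):
        out.append([[max(0.0, b_co + sum(w_co[ci][di][dj] * x[ci][i * stride + di][j * stride + dj]
                                         for ci in range(c_in) for di in range(3) for dj in range(3)))
                     for j in range(w_out)]
                    for i in range(h_out)])
    return out

def init_encoder(channels=(3, 8, 8, 8, 8), seed=0):
    """Random He-style initialisation: the encoder starts from noise, with no pre-training."""
    rng = random.Random(seed)
    layers = []
    for c_in, c_out in zip(channels[:-1], channels[1:]):
        std = math.sqrt(2.0 / (c_in * 9))
        weights = [[[[rng.gauss(0.0, std) for _ in range(3)] for _ in range(3)]
                    for _ in range(c_in)] for _ in range(c_out)]
        layers.append((weights, [0.0] * c_out))
    head = [[rng.gauss(0.0, 1.0 / math.sqrt(channels[-1])) for _ in range(channels[-1])]
            for _ in range(3)]
    return layers, head

def encode_pose(params, image):
    """Map a downscaled 3x32x32 image to (latitude, longitude) in radians."""
    layers, head = params
    x = image
    for weights, bias in layers:  # spatial size 32 -> 15 -> 7 -> 3 -> 1
        x = conv3x3_relu(x, weights, bias)
    feats = [sum(sum(row) for row in plane) / (len(plane) * len(plane[0])) for plane in x]
    out = [sum(w * f for w, f in zip(row, feats)) for row in head]
    lat = 0.5 * math.pi * math.tanh(out[0])  # bounded to (-90, 90) degrees
    lon = math.atan2(out[1], out[2])         # angle of a 2D output: no seam in the raw outputs
    return lat, lon

# Usage with a random stand-in for a 64x64 RGB training image.
rng = random.Random(1)
frame = [[[rng.random() for _ in range(64)] for _ in range(64)] for _ in range(3)]
lat, lon = encode_pose(init_encoder(seed=0), downscale(frame, 2))
print(math.degrees(lat), math.degrees(lon))
```

Predicting longitude as the angle of a two-component output is one way to avoid a discontinuity at ±180° in what the network has to produce; it is a design choice of this sketch.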

The second technique is a modulo loss that simultaneously considers the pseudo-symmetries of an object. We render the object from a fixed set of viewpoints for each training image, backpropagating the loss only through the view that best fits the training image. This effectively considers the plausibility of multiple views for each image. In practice, we find that N=2 views (viewing an object from the other side) is all that’s required in most cases, but we sometimes get better results with N=4 for square objects.
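A minimal sketch of the modulo loss, assuming (as the N=2 “other side” description suggests) that the N replicas share the predicted latitude and differ by rotations of 360°/N in longitude. `render_toy` is a hypothetical renderer whose front and back views differ only in a weak cue; in MELON the renderer would be the NeRF itself.

```python
import math

def wrap(angle):
    """Wrap an angle in radians to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi

def replicate_pose(lat, lon, n):
    """n candidate poses: same latitude, longitudes rotated by multiples of 360/n degrees."""
    return [(lat, wrap(lon + 2 * math.pi * k / n)) for k in range(n)]

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def modulo_loss(pred_pose, target, render, n=2):
    """Render every replica and keep only the best-fitting one (loss and pose)."""
    candidates = replicate_pose(*pred_pose, n)
    losses = [mse(render(pose), target) for pose in candidates]
    best = min(range(n), key=lambda k: losses[k])
    return losses[best], candidates[best]

def render_toy(pose):
    """Hypothetical renderer: views at lon and lon + pi differ only in the weak third value."""
    lat, lon = pose
    return [math.cos(2 * lon), math.sin(2 * lon), 0.3 * math.cos(lon), math.sin(lat)]

target = render_toy((0.2, 1.0))
guess = (0.2, 1.0 - math.pi)  # a prediction stuck on the "back" of the object
print(modulo_loss(guess, target, render_toy, n=1))  # naive loss: the error stays visible
print(modulo_loss(guess, target, render_toy, n=2))  # flipped replica matches: loss ~ 0
```

Taking the minimum over replicas is what restricts backpropagation to the best-fitting view: in an autodiff framework, the gradient of `min` flows only through the selected term.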

These two techniques are integrated into standard NeRF training, except that instead of fixed camera poses, poses are inferred by the CNN and duplicated by the modulo loss. Photometric gradients back-propagate through the best-fitting cameras into the CNN. We observe that cameras generally converge quickly to globally optimal poses (see animation below). After the neural field is trained, MELON can synthesize novel views using standard NeRF rendering methods.
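To illustrate why routing gradients through the best-fitting replica helps, here is a hypothetical one-parameter toy rather than MELON’s training loop: a single azimuth stands in for the CNN’s output, `render_toy` for the NeRF, and central finite differences for backpropagation. Both runs start near the pseudo-symmetric back view; plain gradient descent (n=1) settles in the local minimum, while the modulo version (n=2) recovers the true azimuth through its flipped replica.

```python
import math

def render_toy(theta):
    """Hypothetical 'image' of an object at azimuth theta (radians): the first two values
    repeat every 180 degrees (a front/back pseudo-symmetry); the weak third value does not."""
    return [math.cos(2 * theta), math.sin(2 * theta), 0.3 * math.cos(theta)]

def l2(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def wrap(angle):
    """Wrap an angle in radians to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi

def fit_azimuth(theta0, target, n, lr=0.1, steps=500, eps=1e-5):
    """Gradient descent on a single azimuth. n=1 is the naive photometric loss;
    n>1 replicates the pose n times and steps only through the best-fitting replica."""
    shifts = [2 * math.pi * k / n for k in range(n)]

    def replica_loss(theta, shift):
        return l2(render_toy(theta + shift), target)

    theta = theta0
    for _ in range(steps):
        best = min(shifts, key=lambda s: replica_loss(theta, s))
        # Central-difference gradient of the selected replica's loss only.
        grad = (replica_loss(theta + eps, best) - replica_loss(theta - eps, best)) / (2 * eps)
        theta -= lr * grad
    best = min(shifts, key=lambda s: replica_loss(theta, s))
    return wrap(theta + best)  # the best-fitting replica is the recovered pose

true_theta = 0.5
target = render_toy(true_theta)
start = true_theta + math.pi - 0.2  # initialised near the pseudo-symmetric "back" view

naive = fit_azimuth(start, target, n=1)
modulo = fit_azimuth(start, target, n=2)
print(abs(wrap(naive - true_theta)))   # roughly pi: stuck in the local minimum
print(abs(wrap(modulo - true_theta)))  # close to 0: the flipped replica found the true view
```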

We simplify the problem by using the NeRF-Synthetic dataset, a popular benchmark for NeRF research that is common in the pose-inference literature. This synthetic dataset has cameras at precisely fixed distances and a consistent “up” orientation, requiring us to infer only the polar coordinates of the camera. This is equivalent to an object at the center of a globe with a camera that always points at it while moving along the surface. We then only need the latitude and longitude (2 degrees of freedom) to specify the camera pose.
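The globe analogy can be written as a short function, sketched here under assumptions the post does not state: a z-up world, a hypothetical default radius of 4.0, and camera-to-world columns ordered [right, up, back, centre] in the OpenGL style where the camera looks along its -z axis. Latitude and longitude are the only free parameters; keeping the camera’s right axis horizontal fixes the remaining roll.

```python
import math

def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]

def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def camera_to_world(lat, lon, radius=4.0, world_up=(0.0, 0.0, 1.0)):
    """3x4 camera-to-world matrix (list of rows) for a camera on a sphere looking at the origin.
    Columns are [right, up, back, centre]; 'back' points away from the object, so the
    camera looks along its -z axis. Undefined exactly at the poles."""
    centre = [radius * math.cos(lat) * math.cos(lon),
              radius * math.cos(lat) * math.sin(lon),
              radius * math.sin(lat)]
    forward = _normalize([-c for c in centre])           # unit vector towards the object
    right = _normalize(_cross(forward, list(world_up)))  # horizontal: keeps "up" consistent
    up = _cross(right, forward)
    back = [-f for f in forward]
    return [[right[i], up[i], back[i], centre[i]] for i in range(3)]

print(camera_to_world(math.radians(30), math.radians(45)))
```

The construction breaks down exactly at the poles, where the viewing direction is parallel to the world up vector and the cross product vanishes.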

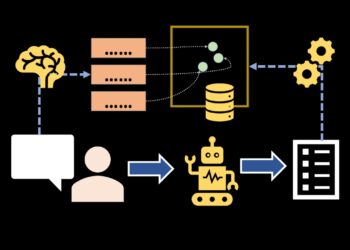

MELON uses a dynamically trained lightweight CNN encoder that predicts a pose for each image. Predicted poses are replicated by the modulo loss, which only penalizes the smallest L2 distance from the ground-truth color. At evaluation time, the neural field can be used to generate novel views.

Results

We compute two key metrics to evaluate MELON’s performance on the NeRF-Synthetic dataset. The error in orientation between the ground-truth and inferred poses can be quantified as a single angular error, the pose error, which we average across all training images. We then test the accuracy of MELON’s rendered objects from novel views by measuring the peak signal-to-noise ratio (PSNR) against held-out test views. We see that MELON quickly converges to the approximate poses of most cameras within the first 1,000 steps of training, and achieves a competitive PSNR of 27.5 dB after 50k steps.

Convergence of MELON on a toy truck model during optimization. Left: Rendering of the NeRF. Right: Polar plot of predicted (blue x) and ground-truth (red dot) cameras.
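The two metrics can be sketched as follows. The post does not give exact formulas, so this assumes the common geodesic distance between rotation matrices for the pose error and pixel values in [0, 1] for PSNR. It also assumes predicted and ground-truth poses are already expressed in the same frame; evaluation protocols for pose-free methods often align the two frames first, a step omitted here.

```python
import math

def rotation_angle_deg(r_a, r_b):
    """Geodesic angle (degrees) between two 3x3 rotation matrices given as lists of rows."""
    # trace(R_a^T R_b) equals the element-wise sum of R_a * R_b.
    trace = sum(r_a[i][j] * r_b[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp against round-off
    return math.degrees(math.acos(cos_angle))

def mean_pose_error_deg(pred_rotations, gt_rotations):
    """Angular error averaged over all training images."""
    errors = [rotation_angle_deg(p, g) for p, g in zip(pred_rotations, gt_rotations)]
    return sum(errors) / len(errors)

def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB for equal-length pixel lists (requires MSE > 0)."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    return 10.0 * math.log10(max_val ** 2 / mse)

def rot_z(deg):
    """Rotation about the z axis, used here only to build example poses."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

print(mean_pose_error_deg([rot_z(30), rot_z(10)], [rot_z(0), rot_z(0)]))  # about 20 degrees
print(psnr([0.5] * 4, [0.6] * 4))  # MSE of 0.01 gives about 20 dB
```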

MELON achieves similar results for other scenes in the NeRF-Synthetic dataset.

Reconstruction quality comparison between ground truth (GT) and MELON on NeRF-Synthetic scenes after 100k training steps.

Noisy images

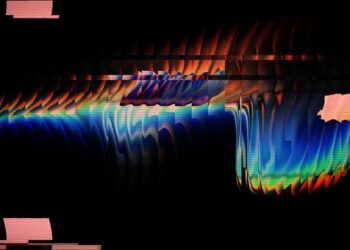

MELON also works well when performing novel view synthesis from extremely noisy, unposed images. We add varying amounts, σ, of white Gaussian noise to the training images. For example, the object at σ=1.0 below is impossible to make out, yet MELON can determine the pose and generate novel views of the object.

Novel view synthesis from noisy, unposed 128×128 images. Top: Example of the noise level present in training views. Bottom: Reconstructed model from noisy training views and mean angular pose error.
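A sketch of the corruption step, assuming pixel values in [0, 1] and no clipping after the noise is added (the post specifies neither). Under that assumption, σ=1.0 means the noise standard deviation equals the entire intensity range, which is why the object cannot be seen.

```python
import random

def add_white_gaussian_noise(pixels, sigma, seed=None):
    """Add i.i.d. zero-mean Gaussian noise with standard deviation sigma to every value.
    Values are assumed to lie in [0, 1] and are deliberately not clipped afterwards."""
    rng = random.Random(seed)
    return [p + rng.gauss(0.0, sigma) for p in pixels]

clean = [0.5] * 128 * 128 * 3  # a flat grey 128x128 RGB image, flattened
noisy = add_white_gaussian_noise(clean, sigma=1.0, seed=0)
```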

This perhaps shouldn’t be too surprising, given that techniques like RawNeRF have demonstrated NeRF’s excellent de-noising capabilities with known camera poses. That MELON works so robustly on noisy images with unknown camera poses, however, was unexpected.

Conclusion

We present MELON, a technique that can determine object-centric camera poses to reconstruct objects in 3D without the need for approximate pose initializations, complex GAN training schemes or pre-training on labeled data. MELON is a relatively simple technique that can easily be integrated into existing NeRF methods. Though we have only demonstrated MELON on synthetic images, we are adapting our technique to work in real-world conditions. See the paper and MELON site to learn more.

Acknowledgements

We would like to thank our paper co-authors Axel Levy, Matan Sela, and Gordon Wetzstein, as well as Florian Schroff and Hartwig Adam for continuous help in building this technology. We also thank Matthew Brown, Ricardo Martin-Brualla and Frederic Poitevin for their helpful feedback on the paper draft. We also acknowledge the use of the computational resources at the SLAC Shared Scientific Data Facility (SDF).
