Contributions of This Work

This paper offers both an illuminating analysis of token-level training dynamics and a new technique called SLM (Selective Language Modeling):

Token Loss Analysis:

They show that a majority of tokens contribute little beyond the initial training phase, while a small subset remains persistently high-loss.

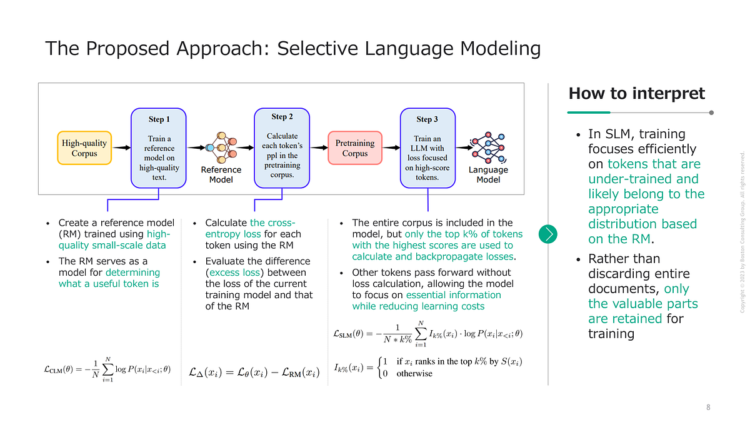
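
One simple way to make this kind of analysis concrete is to label each token by whether its loss is high (H) or low (L) at an early and at a late checkpoint. The sketch below is not the paper's code. The single threshold of 1.0 and the toy loss values are illustrative assumptions.

```python
from collections import Counter

def classify_token_trajectories(early_losses, late_losses, threshold=1.0):
    """Label each token "H->L", "L->L", etc. by comparing its loss at an
    early and a late checkpoint against one (assumed) fixed threshold."""
    if len(early_losses) != len(late_losses):
        raise ValueError("loss lists must be aligned token-for-token")
    labels = []
    for early, late in zip(early_losses, late_losses):
        start = "H" if early > threshold else "L"
        end = "H" if late > threshold else "L"
        labels.append(f"{start}->{end}")
    return labels

# Made-up per-token losses, not results from the paper.
early = [3.2, 0.4, 2.8, 0.3, 4.1, 0.2]
late = [0.5, 0.3, 2.9, 0.2, 3.8, 0.1]
labels = classify_token_trajectories(early, late)
print(Counter(labels))
```

Tokens that stay "L->L" were already easy and add little further signal. "H->H" tokens are the persistently high-loss subset the text describes.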

SLM for Focused Learning:

By leveraging a reference model to gauge how “useful” each token is, they manage to train on drastically fewer tokens without sacrificing quality, and in many cases they even boost downstream performance.
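
A minimal sketch of the selection step follows. It assumes the “usefulness” score is the excess loss (training-model loss minus reference-model loss) and that a fixed fraction of the highest-scoring tokens is kept. The function names, `keep_ratio`, and the toy numbers are hypothetical.

```python
def select_tokens(train_losses, ref_losses, keep_ratio=0.6):
    """Return sorted indices of the tokens with the largest excess loss.

    Excess loss = training-model loss - reference-model loss: a token the
    reference model finds easy but the training model still gets wrong
    scores high. keep_ratio is an illustrative hyperparameter."""
    if len(train_losses) != len(ref_losses):
        raise ValueError("loss lists must be aligned token-for-token")
    excess = [t - r for t, r in zip(train_losses, ref_losses)]
    k = max(1, round(keep_ratio * len(excess)))
    ranked = sorted(range(len(excess)), key=lambda i: excess[i], reverse=True)
    return sorted(ranked[:k])

def selective_loss(train_losses, selected):
    """Average the training loss over the selected tokens only."""
    return sum(train_losses[i] for i in selected) / len(selected)

train = [2.0, 0.5, 3.0, 1.2, 4.0]
ref = [0.4, 0.6, 2.9, 0.2, 1.0]
chosen = select_tokens(train, ref, keep_ratio=0.6)
print(chosen, selective_loss(train, chosen))
```

In a real training loop these would be per-token tensors, and the unselected tokens would simply be masked out of the loss so that only the chosen tokens contribute gradients.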

Broad Demonstration of Effectiveness:

SLM works not only on math-specific tasks but also in more general domains, using either a meticulously curated reference dataset or a reference model drawn from the same large corpus.

Where Could This Go Next?

SLM opens up various potential directions for future research. For example:

Scaling Up Further:

Although the paper primarily focuses on models of around 1B to 7B parameters, it remains an open question how SLM performs at the 30B, 70B, or 100B+ scale. If the token-level approach generalizes well, the cost savings could be enormous for truly massive LLMs.

Reference Models via API:

If you can’t gather curated data, perhaps you could use an API-based language model as your reference. That might make SLM more practical for smaller research teams who lack the resources to train a dedicated reference model.

Reinforcement Learning Extensions:

Imagine coupling SLM with reinforcement learning. The reference model could act as a “reward model,” and token selection might then be optimized through something akin to policy gradients.
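
To illustrate the idea, here is a toy sketch of REINFORCE (a basic policy-gradient method) applied to token selection. Each token gets an independent Bernoulli “keep” policy, and a stand-in reward scores the sampled mask. The reward (sum of hypothetical reference scores of kept tokens minus a fixed per-token `cost`) and all names and hyperparameters are assumptions, not anything proposed in the paper.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def reinforce_token_selection(ref_scores, cost=0.5, steps=2000, lr=0.1, seed=0):
    """Learn a keep-probability for each token with REINFORCE.

    Toy, hypothetical reward: the sum of reference scores of the kept
    tokens minus a fixed `cost` per kept token, so tokens scoring above
    `cost` are worth keeping."""
    rng = random.Random(seed)
    logits = [0.0] * len(ref_scores)  # policy parameters, one per token
    baseline = 0.0  # running mean reward, used to reduce variance
    for _ in range(steps):
        probs = [sigmoid(z) for z in logits]
        # Sample a selection mask from independent Bernoulli policies.
        mask = [1 if rng.random() < p else 0 for p in probs]
        reward = sum(s - cost for s, m in zip(ref_scores, mask) if m)
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)
        # Score-function gradient: d/dz log Bernoulli(m; sigmoid(z)) = m - sigmoid(z).
        for i, (m, p) in enumerate(zip(mask, probs)):
            logits[i] += lr * advantage * (m - p)
    return [sigmoid(z) for z in logits]

probs = reinforce_token_selection([0.9, 0.1, 0.8, 0.2])
print([round(p, 2) for p in probs])
```

Because this toy reward decomposes over tokens, the best mask could be read off directly. The sketch only shows the shape of the update when the reward is a black box, such as a score from a reference or reward model.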

Multiple Reference Models:

Instead of a single reference model (RM), you could train or gather several, each specializing in a different domain or style, and then combine their token scores to produce a more robust multi-domain filtering system.
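
One way the combination step might look is sketched below, assuming each reference model supplies per-token losses over the same tokenization. Taking the per-token minimum (trusting whichever specialist finds a token easiest) and a weighted mean are two illustrative choices. The function names and toy “math” and “code” reference models are hypothetical.

```python
def combine_reference_losses(ref_losses_per_model, mode="min", weights=None):
    """Merge per-token losses from several reference models into one list.

    mode="min" trusts whichever specialist finds the token easiest;
    mode="mean" takes an (optionally weighted) average."""
    n_tokens = len(ref_losses_per_model[0])
    if any(len(r) != n_tokens for r in ref_losses_per_model):
        raise ValueError("all reference models must score the same tokens")
    if mode == "min":
        return [min(col) for col in zip(*ref_losses_per_model)]
    if mode == "mean":
        w = weights if weights is not None else [1.0] * len(ref_losses_per_model)
        total = sum(w)
        return [sum(wi * x for wi, x in zip(w, col)) / total
                for col in zip(*ref_losses_per_model)]
    raise ValueError(f"unknown mode: {mode!r}")

def excess_losses(train_losses, ref_losses):
    """Per-token training loss minus combined reference loss."""
    return [t - r for t, r in zip(train_losses, ref_losses)]

math_rm = [0.3, 2.5, 0.4]  # hypothetical math-specialist losses
code_rm = [2.2, 0.6, 0.5]  # hypothetical code-specialist losses
train = [1.5, 1.8, 0.6]
combined = combine_reference_losses([math_rm, code_rm], mode="min")
print(excess_losses(train, combined))
```

With `mode="min"`, a token only needs one specialist to find it easy for it to count as learnable, which is what lets a mix of narrow reference models cover several domains.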

Alignment and Safety:

There’s a growing trend toward factoring in alignment and truthfulness. One might train a reference model to give higher scores to well-supported statements and zero out tokens that look factually incorrect or harmful.
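
A minimal sketch of that weighting is shown below. It assumes a hypothetical truthfulness-oriented reference model that emits a per-token support score, plus a separate boolean flag for tokens judged incorrect or harmful. Flagged tokens get weight zero, and the rest are weighted by their (non-negative) support score. All names and numbers are illustrative.

```python
def alignment_weights(support_scores, flagged):
    """Per-token loss weights: zero for flagged tokens, otherwise the
    support score clipped at zero."""
    if len(support_scores) != len(flagged):
        raise ValueError("scores and flags must be aligned token-for-token")
    return [0.0 if bad else max(0.0, s) for s, bad in zip(support_scores, flagged)]

def weighted_loss(train_losses, weights):
    """Weighted average of per-token losses; 0.0 if every token is zeroed out."""
    total = sum(weights)
    if total == 0:
        return 0.0
    return sum(l * w for l, w in zip(train_losses, weights)) / total

support = [0.9, 0.7, 0.8, -0.2]
flags = [False, True, False, False]  # token 1 flagged as harmful or false
train = [1.0, 2.0, 3.0, 4.0]
w = alignment_weights(support, flags)
print(w, weighted_loss(train, w))
```

Returning 0.0 when every weight is zero means a fully filtered sequence contributes nothing to the update, rather than raising a division error.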