The Enduring Recognition of AI’s Most Prestigious Conference

By all accounts, this year’s NeurIPS, the world’s premier AI conference, was one of the largest and most energetic in its history. This year’s conference was held at the San Diego Convention Center in San Diego, California, from Sunday, November 30, 2025 through Sunday, December 7, 2025. To give a sense of the scale, NeurIPS 2025 received 21,575 valid paper submissions. From 2023 (~12.3k) to 2025 (~21.6k), this reflects a ~75–80% jump over two years, or roughly ~30% per year on average. In-person attendance has been equally impressive, usually in the tens of thousands of people and often capped by venue size, with past locations operating near the upper limit of what the physical venue can handle. Reinforcement learning dominated the conversation this year, with the field shifting from scaling models to tuning them for specific use cases. Industry momentum appeared to centre strongly around Google, with Google DeepMind in particular surging and pushing new and refreshing research directions, for example continual learning and nested learning, rather than just “bigger LLMs”. The scale and intensity of the conference are perhaps a reflection of both the pace of AI progress and the cultural peak of the modern AI gold rush.

This year, the exhibitor hall was packed, with major industry players from technology, finance, and AI infrastructure all setting out their stalls to showcase their latest breakthroughs, highlight open roles to talented delegates, and hand out the ever-coveted branded “swag”: pens, T-shirts, water bottles, and more. The especially fortunate conference-goer might even receive an invitation to one of the company-hosted “after-parties”, from Konwinski’s Laude Lounge to the invite-only Model Ship cruise packed with top researchers. These parties have become a staple of the NeurIPS experience and an ideal opportunity to decompress, shed the information overload, and network. Diamond sponsors this year included Ant Group, Google, Apple, ByteDance, Tesla, and Microsoft. The buy-side presence was particularly strong, with major firms such as Citadel, Citadel Securities, Hudson River Trading, Jane Street, Jump Trading, and The D. E. Shaw Group represented. On the infrastructure and tooling side, Lambda showcased its GPU cloud platform, while companies like Ollama and Poolside highlighted advances in local LLM runtimes and frontier model development.

The NeurIPS Expo showcased many equally fascinating applied-AI demos. Highlights included BeeAI, demonstrating how autonomous agents can behave reliably across different LLM backends; a multimodal forensic search system capable of scanning large video corpora with AI; an AI-accelerated LiDAR processing demo that showed how heterogeneous compute can dramatically speed up 3D perception; and LLM-driven data-engineering workflows that automate ingestion, transformation, and quality checks. It’s clear from the Expo that AI is heading full steam ahead towards agents, multimodal intelligence, accelerated perception, and end-to-end automated data systems.

The NeurIPS Best Paper Award ceremony arguably represents the pinnacle of the conference and a celebration of its most impactful work. The best paper awards are given to exceptionally innovative and impactful research that is likely to have an immediate and long-lasting effect on the field of AI. It goes without saying that a best paper award is a major professional accomplishment in a highly competitive and fast-paced research field. It’s even more impressive when we take into account the sheer number of papers submitted to NeurIPS. Standing out in that crowd is exceptionally difficult.

The Anatomy of a NeurIPS Best Paper: Exploring the Benefits of Gated Attention in LLMs

Gating Explained: How a Tiny Valve Controls Large Neural Models

In the remainder of this article, we take a deep dive into one of this year’s best papers from NeurIPS: “Gated Attention for Large Language Models: Non-linearity, Sparsity, and Attention-Sink-Free” by the Qwen team. Arguably, this dense paper title packs a lot of information into a very small footprint, so, in what follows, I’ll unpack the paper piece by piece with the objective of giving practising Data Scientists a clear mental model of attention gating and concrete takeaways from the paper that they can immediately apply to their own work.

First, we begin with an understanding of the gate, the core module under study in the paper. What exactly is a gate in the context of neural networks? A gate is nothing more than a signal modulation mechanism: a computational unit that takes the output of an existing transformation in the network and regulates it by selectively amplifying, attenuating, or suppressing parts of the input signal.

Instead of allowing every activation to flow unchanged through the network, a gate introduces a learned control pathway that determines how much of the transformed information should pass forward.

Operationally speaking, a gate computes a vector of coefficients, typically using a sigmoid, a softmax, or occasionally a ReLU-based function, and these coefficients are applied multiplicatively to another vector of activations originating from an upstream computation. This has the effect of regulating how much of that input makes its way downstream, a bit like twisting a tap handle back and forth to regulate the amount of water passing through. That’s all there is to it: you now understand what gating is and how it’s applied.
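A minimal NumPy sketch makes the tap analogy concrete. The activation and logit values below are made up for illustration: a sigmoid turns each gate logit into a coefficient in (0, 1), and those coefficients scale the upstream activations elementwise.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Activations produced by some upstream layer (made-up values)
upstream = np.array([2.0, -1.0, 0.5, 3.0])

# Gate logits: large positive -> tap open, large negative -> tap closed
gate_logits = np.array([10.0, -10.0, 0.0, 2.0])
gate = sigmoid(gate_logits)  # coefficients in (0, 1)

# The gate is applied multiplicatively, element by element
gated = gate * upstream
print(np.round(gate, 3))
print(np.round(gated, 3))
```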

Because the gating weights are typically learnable parameters, the network can discover during training how to modulate internal signals in ways that minimise the overall network loss. In this way, the gate becomes a dynamic filter, adjusting the internal information flow based on the input context, the model’s continually evolving parameters, and the gradients received during optimisation.
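The sketch below illustrates this learning process on a deliberately tiny, made-up task: two input channels, where channel 0 carries the target signal and channel 1 is pure noise. The gate logits `w` are hypothetical learnable parameters, and plain gradient descent on a mean-squared-error loss (with the gradient written out by hand) learns to open the gate on the useful channel and close it on the noisy one.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Toy data: channel 0 is the signal we want, channel 1 is noise
n = 1024
x = rng.standard_normal((n, 2))
target = x[:, 0]

w = np.zeros(2)  # learnable gate logits, one per channel (gates start at 0.5)
lr = 1.0

for step in range(500):
    g = sigmoid(w)        # gate coefficients in (0, 1)
    out = x @ g           # gated channels summed: sum_j g_j * x_j
    err = out - target
    # dL/dg_j for L = mean(err^2), then the chain rule through the sigmoid
    grad_g = 2.0 * (err[:, None] * x).mean(axis=0)
    grad_w = grad_g * g * (1.0 - g)
    w -= lr * grad_w

gate = sigmoid(w)
print("learned gate:", np.round(gate, 3))
```

The gate never reaches exactly 1 or 0 because the sigmoid saturates, but it moves steadily towards keeping channel 0 and suppressing channel 1.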

A Brief Tour Down Memory Lane: The Long History of Gating

It’s worth taking in a little of the history of gating before we move to the main contributions of the paper. Gating is really nothing new, and the Qwen paper did not invent this standard component; their contribution lies elsewhere and will be covered shortly. In fact, gating has been a core mechanism in deep architectures for decades. For example, Long Short-Term Memory (LSTM) networks, introduced in 1997, pioneered the systematic use of multiplicative gates (the input and output gates, joined shortly afterwards by the forget gate) to regulate the flow of information through time. These gates act as learned filters that decide which signals should be written to memory, which should be retained, and which should be exposed to downstream layers. By controlling information flow in this fine-grained way, LSTMs effectively mitigated the multiplicative explosion or vanishing of gradients that hampered early recurrent networks, enabling stable long-term credit assignment during backpropagation through time (BPTT).
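For comparison with the attention gates discussed later, here is a minimal NumPy sketch of one step of an LSTM cell in its common modern form, with input, forget and output gates. The weight layout (four stacked blocks in the order input, forget, output, candidate) is an assumption for illustration; libraries order these blocks differently.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One step of an LSTM cell (modern form, with forget gate).

    W: [4H, D] input weights, U: [4H, H] recurrent weights, b: [4H] bias,
    stacked in the assumed order input, forget, output, candidate.
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much old memory to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much memory to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate content
    c = f * c_prev + i * g       # gated memory update
    h = o * np.tanh(c)           # gated read-out to downstream layers
    return h, c

rng = np.random.default_rng(0)
D, H = 3, 5
W = rng.standard_normal((4 * H, D))
U = rng.standard_normal((4 * H, H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x_t in rng.standard_normal((6, D)):  # a sequence of 6 inputs
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)
```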

Applying Gating to the LLM Attention Block

The Qwen team’s contribution focuses on applying gating directly to the transformer’s softmax attention mechanism, a specific configuration known as attention gating. In this article, I won’t spend too much time on the what of attention, as there are many resources out there to learn about it, including this recent course by the DeepLearning.ai team and this prior article I’ve written on the subject. In a super brief summary, attention is the core mechanism in the transformer architecture that lets each input sequence token gather contextual information from any other token in the sequence, enabling tokens to ‘communicate’ during training and inference, sharing information regardless of how far apart they appear in the input. The computational graph for the popular scaled dot product attention (SDPA), which computes softmax(QKᵀ/√d_k)·V from the query (Q), key (K) and value (V) projections, is shown below:
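As a quick reference, here is a minimal single-head NumPy sketch of SDPA; the function name `sdpa` and the random inputs are illustrative. Each row of `weights` is a probability distribution over the keys, and each output row is the corresponding weighted mix of value vectors.

```python
import numpy as np

def softmax(logits, axis=-1):
    logits = logits - logits.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(logits)
    return e / e.sum(axis=axis, keepdims=True)

def sdpa(Q, K, V):
    """Scaled dot product attention: softmax(Q K^T / sqrt(d_k)) V.

    Q: [T_q, d_k], K: [T_k, d_k], V: [T_k, d_v]
    """
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # [T_q, T_k] query-key similarities
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights         # weighted mix of value vectors

rng = np.random.default_rng(0)
T, d = 5, 4
Q, K, V = (rng.standard_normal((T, d)) for _ in range(3))
out, weights = sdpa(Q, K, V)
print(out.shape, weights.sum(axis=-1))
```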

Although attention gating has been used for many years, the Qwen team highlight a surprising gap in our body of knowledge: as AI practitioners, we have widely applied attention gating without really understanding why it works or how it shapes learning dynamics. The Qwen team’s work shows that we have been benefiting from this module for a long time without a rigorous, systematic account of its effectiveness or of the conditions under which it performs best. The Qwen paper does just that and plugs the gap, with the NeurIPS best paper selection committee citation noting:

“This paper represents a substantial amount of work that is possible only with access to industrial scale computing resources, and the authors’ sharing of the results of their work, which will advance the community’s understanding of attention in large language models, is highly commendable, especially in an environment where there has been a move away from open sharing of scientific results around LLMs.”

NeurIPS 2025 Selection Committee statement.

Given the sheer amount of money flowing in and the huge commercial interest in AI these days, it’s very nice to see that the Qwen team decided to share this rich batch of lessons learned with the wider community, rather than keep these informational nuggets behind closed doors. In doing so, the Qwen team have delivered a beautiful paper packed with practical lessons and clear explanations of the why behind attention gating, all distilled in a way that Data Scientists can immediately take and apply in real-world models.

The Qwen team’s systematic study makes several concrete contributions to knowledge that can be easily and immediately applied to improve many standard LLM architectures:

- Positioning of Gating: Placing a gating module right after the scaled dot product attention (SDPA) output improves LLM performance, through the introduction of a non-linearity and the induction of input-dependent sparsity. The authors also study key parameterisations of the gating module, such as the type of activation function (SiLU or sigmoid) and the combination function (multiplication or addition).

- Attention Sink and Massive Activations: Gating can radically curtail the attention sink phenomenon, where most if not all of the attention in a layer concentrates on a single token (I cover this phenomenon in detail later). By suppressing these extreme activations, the model becomes much more numerically stable during optimisation, eliminating the loss spikes that typically appear in deep or long training runs. This increased stability allows the model to tolerate significantly higher learning rates, unlocking better scaling without the divergence seen in ungated transformers.

- Context Length Extension: Gating also facilitates context-length extension without requiring full model retraining. In practice, this means a model can be trained with a relatively short context window and later scaled to much longer sequences by retrospectively adjusting components such as the RoPE base. This adjustment effectively reparameterises the positional embedding geometry, allowing the model to operate at extended context lengths (e.g., up to 32k tokens) while preserving stability and without degrading previously learned representations.

Leveraging Gating to Improve Performance, Learning Stability and Attention Mechanics

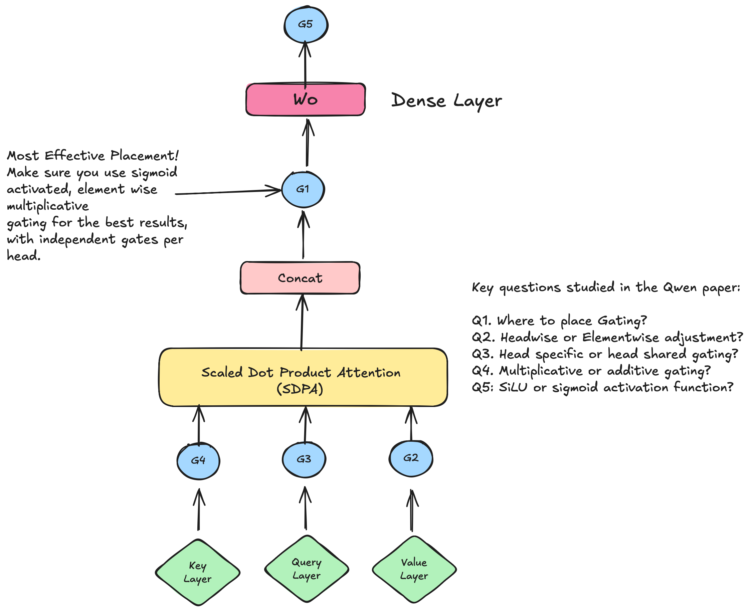

The Qwen team focus their investigation on how gating interacts with the LLM’s softmax attention module, aiming to understand its impact on the module’s learning dynamics and to identify the optimal placement of the gate: for example, after the Q, K, or V projections, after the attention computation, or after the dense layers. The setup of this study is illustrated in the diagram above.

The authors evaluate both mixture-of-experts (MoE) models (15B total, 2.54B active parameters) and dense feed-forward network (FFN) models (1.7B). The MoE variant uses 128 experts, top-8 softmax gating and fine-grained experts. Models are trained on subsets of a 4T-token high-quality corpus covering multilingual, math, and general knowledge data, with a sequence length of 4096. Training uses AdamW defaults, with specific learning-rate and batch-size details provided per experiment. The authors find that gating adds minimal overhead (<2% latency). Evaluation covers standard few-shot benchmarks (HellaSwag, MMLU, GSM8K, HumanEval, C-Eval, and CMMLU), plus perplexity tests across domains including English, Chinese, code, math, law, and literature.
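For readers less familiar with MoE routing, the NumPy sketch below shows the general idea of top-k softmax gating, using the paper's 128 experts and top-8 selection. It is a toy under stated assumptions (random router and expert weights, each expert reduced to a single linear map, softmax over all experts followed by renormalisation over the selected ones); real MoE implementations differ in details such as normalisation and load-balancing losses.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

n_experts, top_k, d = 128, 8, 16
W_router = rng.standard_normal((d, n_experts)) * 0.1   # hypothetical router weights
experts = rng.standard_normal((n_experts, d, d)) * 0.1  # toy experts: one linear map each

def moe_layer(x):
    """Route each token to its top-k experts and mix their outputs."""
    logits = x @ W_router                               # [T, E]
    probs = softmax(logits, axis=-1)                    # softmax over all experts
    top_idx = np.argsort(-probs, axis=-1)[:, :top_k]    # [T, k] chosen experts
    top_p = np.take_along_axis(probs, top_idx, axis=-1)
    top_p = top_p / top_p.sum(axis=-1, keepdims=True)   # renormalise over chosen experts
    out = np.zeros_like(x)
    for t in range(x.shape[0]):
        for j, e in enumerate(top_idx[t]):
            out[t] += top_p[t, j] * (x[t] @ experts[e])
    return out, top_idx

x = rng.standard_normal((4, d))
y, chosen = moe_layer(x)
print(y.shape, chosen.shape)
```

Only 8 of the 128 experts run for each token, which is how an MoE model can have far fewer active parameters than total parameters.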

The experimental evaluation is organised to study the following questions in a systematic way. I also add the key takeaways under each research question, which apply equally to the MoE and dense FFN models tested by the authors:

Q1: Where is it best to place the gating in the attention head? After the K, Q, V projections? After the scaled dot product attention? After the final multi-head attention concatenation?

- The authors find that placing the gate at the output of the scaled dot product attention (SDPA) module (G1) or after the value map (G2) are the most effective placements.

- Furthermore, SDPA-output placement is more effective than G2. To explain this, the authors show that gating at the SDPA output induces very low, sparse gating scores, which correlates with superior task performance.

- Value gating (G2) produces higher, less sparse scores and performs worse than SDPA-output gating (G1). Sparsity is key to performance. This suggests that sparsity is most beneficial when the gating depends on the current query, allowing the model to filter irrelevant context. The gate decides what to suppress or amplify based on what the current token needs.

- Their experiments with input-independent gating confirm this: it provides minor gains through the added non-linearity but lacks the selective sparsity offered by query-dependent gating.

This finding is best explained through an example. Although the K and V maps are technically input-dependent, they are not conditioned on the current query token. For example, if the query is “Paris,” the value tokens might be “France,” “capital,” “weather,” or “Eiffel Tower,” but each value token only knows its own representation and not what “Paris” is asking for. G2 gating bases its decision on the source tokens themselves, which may be irrelevant to the query’s needs. In contrast, G1 gating is computed from the query representation, so it is able to selectively suppress or amplify context based on what the query is actually trying to retrieve. This leads to sparser, cleaner gating and better performance for G1, whereas the Qwen team find that G2 tends to produce higher, noisier scores and weaker results.
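To make the contrast concrete, here is a small NumPy sketch (not the paper's code; reusing a single hypothetical gate projection `W_gate` for both placements is a simplification for comparison). With G2, the gate row for source token s scales that token's value vector before mixing, so every query sees the same gated values; with G1, the gate row for query q scales q's own attention output.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

T, d = 4, 8
x = rng.standard_normal((T, d))  # hidden states, one row per token
W_q, W_k, W_v = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
W_gate = rng.standard_normal((d, d)) / np.sqrt(d)  # hypothetical gate projection

Q, K, V = x @ W_q, x @ W_k, x @ W_v
attn = softmax(Q @ K.T / np.sqrt(d))  # [T_query, T_source]
gate = sigmoid(x @ W_gate)            # one gate row per token

# G2 (value gating): row s of `gate` scales source token s's value vector.
# Every query sees the same gated values, so the gate cannot depend on
# what a particular query is looking for.
out_g2 = attn @ (gate * V)

# G1 (SDPA-output gating): row q of `gate` scales query q's attention output,
# so each query decides for itself how much retrieved context to let through.
out_g1 = gate * (attn @ V)
print(out_g1.shape, out_g2.shape)
```

Closing the G1 gate for one token silences only that token's own attention output, whereas closing the G2 gate for one token removes its value from every query's context.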

Q2: Do we regulate the output through elementwise multiplication for fine-grained control, or do we just learn a scalar that coarsely adjusts the output?

Granularity turns out to matter less than one might expect. As discussed under Q3 below, the authors find that the granularity of the gate has only a minor impact on performance compared with whether the gate is head-specific, so both fine-grained elementwise gates and coarser scalar gates are viable options.

Q3: As attention in LLMs is usually multi-headed, do we share gates across heads or do we learn head-specific gating?

The authors are unequivocal that gating should be learned per head rather than shared across heads. They find that when gates are shared, the model tends to produce larger, less selective gating values, which dilutes head-level specialisation and harms performance. In contrast, head-specific gating preserves each head’s distinct role and consistently yields better results. Interestingly, the authors state that head-specific gating is the most critical design choice, with the granularity of the gating and the choice of activation function having a more minor impact.
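The gating granularities discussed in Q2 and Q3 differ mainly in the shape of the gate. The hypothetical NumPy sketch below uses random weights, so it says nothing about performance; it only compares a head-specific elementwise gate, a head-specific scalar ("headwise", a label chosen here for convenience) gate, and an elementwise gate shared across heads, and prints how many gate parameters each needs.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

T, n_heads, head_dim = 4, 2, 4
d_model = n_heads * head_dim
x = rng.standard_normal((T, d_model))                   # layer input
sdpa_out = rng.standard_normal((n_heads, T, head_dim))  # stand-in for per-head SDPA outputs

# Head-specific, elementwise: every head gets its own gate for every channel.
W_elem = rng.standard_normal((d_model, n_heads * head_dim)) / np.sqrt(d_model)
g_elem = sigmoid(x @ W_elem).reshape(T, n_heads, head_dim).transpose(1, 0, 2)  # [H, T, Dh]

# Head-specific, scalar: one gate value per head per token (fewer parameters).
W_head = rng.standard_normal((d_model, n_heads)) / np.sqrt(d_model)
g_head = sigmoid(x @ W_head).T[:, :, None]  # [H, T, 1], broadcast over channels

# Shared across heads: the same elementwise gate is reused by every head.
W_shared = rng.standard_normal((d_model, head_dim)) / np.sqrt(d_model)
g_shared = sigmoid(x @ W_shared)[None, :, :]  # [1, T, Dh], broadcast over heads

for name, g, W in [("elementwise", g_elem, W_elem),
                   ("headwise", g_head, W_head),
                   ("shared", g_shared, W_shared)]:
    gated = g * sdpa_out
    print(f"{name:12s} gate params={W.size:3d} gated shape={gated.shape}")
```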

Q4: We can modulate the output either multiplicatively or additively. Which approach works better?

The results in the paper show that multiplicative SDPA gating is better than additive gating. When using a gating function in softmax attention, we are better off multiplying its output rather than adding it.
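A toy comparison helps build intuition for this result. In the NumPy sketch below (illustrative values, not from the paper), a multiplicative gate can drive a channel close to zero, which is what makes sparse gating possible, whereas an additive gate can only shift a value and never switch it off. This is one plausible reading of why multiplication wins; the paper's conclusion rests on its experiments.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

attn_out = np.array([4.0, -2.0, 0.5])       # a toy SDPA output vector
gate = sigmoid(np.array([-8.0, 0.0, 8.0]))  # gate nearly closed, half-open, nearly open

multiplicative = gate * attn_out  # can shrink a channel towards zero (sparsity)
additive = attn_out + gate        # only shifts values; a channel is never switched off
print(multiplicative)
print(additive)
```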

Q5: Which activation function makes more sense in the gating module, a sigmoid or a SiLU?

Sigmoid outperforms SiLU when used in the best-performing configuration, namely elementwise gating applied to the SDPA output (G1). Replacing sigmoid with SiLU in this setup consistently leads to worse results, indicating that sigmoid is the more effective activation for gating.
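One property worth noting, offered as intuition rather than as the authors' explanation: sigmoid is bounded in (0, 1), so a sigmoid gate can only attenuate its input, while SiLU, z·sigmoid(z), is unbounded above and dips below zero, so it can amplify or flip the sign of the signal. The short NumPy check below makes the two ranges visible.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def silu(z):
    return z * sigmoid(z)

z = np.linspace(-6.0, 6.0, 13)
print("sigmoid range:", sigmoid(z).min(), "to", sigmoid(z).max())
print("SiLU range:   ", silu(z).min(), "to", silu(z).max())
```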

Mitigating the Scourge of Attention Sinks

A key problem in LLMs is attention sinking, where the first token absorbs most of the attention weight and overwhelms the rest of the sequence, leading to disproportionately large activations that can destabilise training and warp the model’s representations. Importantly, the Qwen team show that gating can mitigate this effect, with SDPA-output gating reducing both the massive activations and the attention sink.
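A simple way to spot an attention sink is to measure how much attention mass queries place on the first token. The NumPy sketch below is a hypothetical diagnostic, not the paper's methodology: it builds a causal attention map from random scores, then simulates a sink by adding an arbitrary bonus of 10 to every query's score for token 0.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def first_token_share(attn):
    """Average fraction of attention mass each query places on token 0.

    attn: [T_query, T_key] causal attention weights (rows sum to 1).
    """
    return attn[:, 0].mean()

T = 8
rng = np.random.default_rng(0)
causal_mask = np.triu(np.ones((T, T), dtype=bool), k=1)  # True above the diagonal

scores = rng.standard_normal((T, T))
scores[causal_mask] = -np.inf  # block attention to future tokens
healthy = softmax(scores)

sink_scores = scores.copy()
sink_scores[:, 0] += 10.0  # simulate a sink: token 0 dominates every query
sinky = softmax(sink_scores)

print(f"healthy: {first_token_share(healthy):.2f}  sink: {first_token_share(sinky):.2f}")
```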

Extending Context Length by Changing the Rotary Position Embeddings (RoPE) Base

To build long-context models, the Qwen team follow a three-stage training strategy, detailed below. This strategy also offers an interesting insight into how frontier labs train large-scale models, and which tools they find effective:

- Expanding the RoPE base: First, they expand the Rotary Position Embeddings (RoPE) base from 10k to 1M, which flattens the positional frequency curve and allows stable attention at much longer positions.

- Mid-training: The Qwen team then continue training the model for an additional 80B tokens using 32k-length sequences. This continuation phase (commonly known as “mid-training”) lets the model adapt naturally to the new RoPE geometry without relearning everything.

- YaRN extension: Finally, they apply Yet another RoPE extensioN method (YaRN) to expand the context length up to 128k, without further training.
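To see what expanding the base does, the NumPy sketch below computes the standard RoPE frequencies θ_i = base^(-2i/d) and the corresponding wavelengths (tokens per full rotation) for the 10k and 1M bases. The head dimension of 128 is an assumption for illustration, not a figure from the paper.

```python
import numpy as np

def rope_frequencies(head_dim, base):
    """Standard RoPE rotation frequencies theta_i = base^(-2i/d), i = 0..d/2-1."""
    i = np.arange(head_dim // 2)
    return base ** (-2.0 * i / head_dim)

head_dim = 128  # assumed head dimension for illustration
for base in (10_000, 1_000_000):
    theta = rope_frequencies(head_dim, base)
    wavelengths = 2 * np.pi / theta  # tokens needed for one full rotation
    print(f"base={base:>9,}: slowest wavelength ~ {wavelengths.max():,.0f} tokens")
```

The fastest pair always rotates at θ_0 = 1 regardless of the base, while every slower pair rotates more slowly under the larger base, which is the "flattening" described above.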

Let’s step back and briefly clarify what RoPE is and why it matters in LLMs. Without injected positional information, a Transformer’s attention mechanism has no sense of where tokens appear in a sequence. As with many techniques in AI, there is a simple, underlying geometric intuition to how it works that makes everything clear. In a simple 2D analogy, you can imagine token embeddings as a cloud of points scattered in space, with no indication of their order or relative spacing in the original sequence.

RoPE encodes position by rotating each 2D slice of the query/key embedding by an angle proportional to the token’s position. The embedding is partitioned into many 2D sub-vectors, each assigned its own rotation frequency (θ₁, θ₂, …), so different slices rotate at different speeds. Low-frequency slices rotate slowly and capture broad, long-range positional structure, whereas high-frequency slices rotate rapidly and capture fine-grained, short-range relationships. Together, these multi-scale rotations allow attention to infer relative distances between tokens across both local and global contexts. This is a beautiful idea and implementation, and it’s techniques like these that make me grateful to be working in the field of AI.
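The rotation itself is only a few lines of code. Below is a minimal NumPy sketch of RoPE applied to a set of query (or key) vectors, assuming the interleaved-pair convention and the classic base of 10,000; it is an illustration, not the Qwen implementation.

```python
import numpy as np

def apply_rope(x, positions, base=10_000.0):
    """Rotate consecutive pairs (x[2i], x[2i+1]) by angle position * theta_i.

    x: [T, d] queries or keys (d even); positions: [T] integer positions.
    Uses the interleaved-pair convention; some libraries split the vector into
    two halves instead, which is equivalent up to a fixed permutation of dims.
    """
    d = x.shape[-1]
    theta = base ** (-np.arange(0, d, 2) / d)     # [d/2] per-pair frequencies
    angles = positions[:, None] * theta[None, :]  # [T, d/2]
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = x_even * cos - x_odd * sin     # standard 2D rotation
    out[:, 1::2] = x_even * sin + x_odd * cos
    return out

rng = np.random.default_rng(0)
q = rng.standard_normal((6, 8))
q_rot = apply_rope(q, np.arange(6))
# Rotations preserve vector length; only the direction encodes position
print(np.allclose(np.linalg.norm(q, axis=-1), np.linalg.norm(q_rot, axis=-1)))
```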

The key insight here is that the relative angle between two rotated embeddings naturally encodes their relative distance in the sequence, allowing the attention mechanism to infer ordering and spacing through geometry alone. This makes positional information a property of how queries and keys interact. For example, if two tokens are close in the sequence, their rotations will be similar, so for similar content their query-key dot product tends to be large, giving a higher attention weight. Conversely, when tokens are farther apart, their rotations differ more, so the dot product between their queries and keys changes in a position-dependent way, typically reducing attention to distant tokens unless the model has learned that long-range interactions are important.
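The relative-distance property can be checked numerically. Because rotations compose, rotating q by position m and k by position n gives a dot product equal to q·R(n−m)k, which depends only on the offset n−m. The NumPy sketch below (a self-contained re-implementation of RoPE for single vectors, with an assumed base of 10,000) compares two query-key pairs that are three tokens apart at very different absolute positions.

```python
import numpy as np

def apply_rope_single(v, pos, base=10_000.0):
    """Apply RoPE to one vector v (even length) at integer position pos."""
    d = v.shape[0]
    theta = base ** (-np.arange(0, d, 2) / d)
    ang = pos * theta
    cos, sin = np.cos(ang), np.sin(ang)
    out = np.empty_like(v)
    out[0::2] = v[0::2] * cos - v[1::2] * sin
    out[1::2] = v[0::2] * sin + v[1::2] * cos
    return out

rng = np.random.default_rng(1)
q, k = rng.standard_normal(8), rng.standard_normal(8)

# Same relative offset (3 tokens), very different absolute positions
s_near = apply_rope_single(q, 5) @ apply_rope_single(k, 2)
s_far = apply_rope_single(q, 1005) @ apply_rope_single(k, 1002)
print(np.isclose(s_near, s_far))
```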

YaRN is a modern and flexible way to extend an LLM’s context window without retraining, and without causing the instabilities seen in naïvely extrapolated RoPE. RoPE begins to fail at long range because its lowest-frequency rotational dimensions never complete a full rotation within the training horizon. Once positions exceed that horizon, these dimensions produce rotation angles the model never encountered during training, so queries and keys land in unfamiliar regions of positional space. This out-of-distribution behaviour destabilises attention and can cause it to collapse. YaRN fixes this by smoothly reshaping the RoPE frequency spectrum: it preserves the model’s short-range positional behaviour by leaving the high-frequency dimensions essentially unchanged, while progressively interpolating the low-frequency dimensions so that long-range positions map back into the range of angles seen during training. The result is a positional embedding scheme that behaves naturally up to 32k, 64k, or even 128k tokens, with far less distortion than older NTK-aware or linear-scaling methods. Once their model was found to be stable at 32k, the Qwen team applied YaRN to further interpolate the RoPE frequencies, extending the effective context window to 128k.
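The core of YaRN's frequency reshaping (its "NTK-by-parts" interpolation) fits in a short function. The NumPy sketch below is a simplified reading of the YaRN paper, not the Qwen team's code: it assumes a head dimension of 128, the 1M base from the first stage, a 32k training context and a 4x extension to 128k, uses ramp thresholds alpha = 1 and beta = 32 as example values, and omits YaRN's attention-temperature adjustment. Dimensions that complete many rotations within the training context keep their frequency, those that complete less than one are divided by the scale factor, and the rest are blended linearly.

```python
import numpy as np

def yarn_frequencies(head_dim, base, scale, orig_ctx, alpha=1.0, beta=32.0):
    """Simplified YaRN ("NTK-by-parts") RoPE frequency interpolation.

    scale: context extension factor s (e.g. 128k / 32k = 4).
    orig_ctx: context length L the model was trained on.
    alpha, beta: ramp thresholds (assumed example values).
    The attention temperature term of YaRN is omitted.
    """
    i = np.arange(head_dim // 2)
    theta = base ** (-2.0 * i / head_dim)  # original RoPE frequencies
    wavelength = 2 * np.pi / theta
    r = orig_ctx / wavelength              # full rotations completed within L
    gamma = np.clip((r - alpha) / (beta - alpha), 0.0, 1.0)  # ramp in [0, 1]
    # gamma = 1: fast dims keep their frequency (short-range behaviour preserved)
    # gamma = 0: slow dims are divided by s, i.e. positions are interpolated
    return (1 - gamma) * theta / scale + gamma * theta

theta = 1_000_000 ** (-2.0 * np.arange(64) / 128)
theta_yarn = yarn_frequencies(head_dim=128, base=1_000_000, scale=4, orig_ctx=32_768)
print("fastest dim ratio:", theta_yarn[0] / theta[0])
print("slowest dim ratio:", theta_yarn[-1] / theta[-1])
```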

In their evaluation, the Qwen team find that, within the trained 32k window, SDPA-gated models slightly outperform the baseline, indicating that gating improves attention dynamics without harming long-context stability, even under substantial positional scaling.

Furthermore, with the YaRN extension and in the large-context regime, they find that the SDPA-output gated network significantly outperforms the baseline at context lengths between 64k and 128k. The authors tie this performance boost to the mitigation of the attention sink phenomenon, which they surmise the baseline model depends on to distribute attention scores across tokens. They hypothesise that the SDPA-output gated model is much less sensitive to the RoPE- and YaRN-induced changes to the position encoding scheme and context length. Applying YaRN, which requires no further training, may disrupt these learned sink patterns, leading to the observed degradation in the baseline model’s performance. The SDPA-gated model, in contrast, does not rely on the attention sink to stabilise attention.

Coding Up Our Own Gating Implementation

Before we conclude, it can be instructive to code up an implementation of an AI technique directly from a paper; it’s a great way to solidify the key concepts. To this end, we’ll walk through a simple Python implementation of scaled dot product attention with sigmoid gating.

We’ll first define our key hyperparameters, such as the sequence length (seq_len), the hidden dimension of the model (d_model), the number of heads (n_heads) and the head dimension (head_dim).

```python
import numpy as np

np.random.seed(0)

# ---- Toy config ----
seq_len = 4  # tokens
d_model = 8  # model dim
n_heads = 2
head_dim = d_model // n_heads
```

We next define some (fake) token embeddings (simply generated randomly here), alongside our randomly initialised projection weights (not learned, for the purposes of this simple example).

```python
# Fake token embeddings
x = np.random.randn(seq_len, d_model)  # [T, D]

# ---- Projection weights ----
W_q = np.random.randn(d_model, d_model)
W_k = np.random.randn(d_model, d_model)
W_v = np.random.randn(d_model, d_model)
W_o = np.random.randn(d_model, d_model)  # output projection
```

We then define the usual suspects, softmax and sigmoid, and also a method to split the dimension D into n_heads:

```python
def softmax(logits, axis=-1):
    # Subtract the max for numerical stability before exponentiating
    logits = logits - np.max(logits, axis=axis, keepdims=True)
    exp = np.exp(logits)
    return exp / np.sum(exp, axis=axis, keepdims=True)


def sigmoid(z):
    return 1 / (1 + np.exp(-z))


# ---- Helpers: split/concat heads ----
def split_heads(t):  # [T, D] -> [H, T, Dh]
    return t.reshape(seq_len, n_heads, head_dim).transpose(1, 0, 2)


def concat_heads(t):  # [H, T, Dh] -> [T, D]
    return t.transpose(1, 0, 2).reshape(seq_len, d_model)
```

Now we can dive into the core gating implementation and see exactly how it works in practice. In all of the examples below, we use random tensors as stand-ins for the learned gate parameters that a real model would train end-to-end.

```python
# ============================================================
# Forward pass
# ============================================================

# Random gate projections stand in for learned parameters. Each gate is
# computed from the layer input, G = sigmoid(x @ W_g), so it is input-dependent.
W_g = {name: np.random.randn(d_model, d_model) for name in ("G1", "G2", "G3", "G4", "G5")}


def attention_with_gates(x):
    # 1) Linear projections
    Q = x @ W_q  # [T, D]
    K = x @ W_k
    V = x @ W_v

    # ----- G4: gate on Queries (after W_q) -----
    G4 = sigmoid(x @ W_g["G4"])
    Q = G4 * Q

    # ----- G3: gate on Keys (after W_k) -----
    G3 = sigmoid(x @ W_g["G3"])
    K = G3 * K

    # ----- G2: gate on Values (after W_v) -----
    G2 = sigmoid(x @ W_g["G2"])
    V = G2 * V

    # 2) Split into heads
    Qh = split_heads(Q)  # [H, T, Dh]
    Kh = split_heads(K)
    Vh = split_heads(V)

    # 3) Scaled dot product attention per head
    scale = np.sqrt(head_dim)
    scores = Qh @ Kh.transpose(0, 2, 1) / scale  # [H, T, T]
    attn = softmax(scores, axis=-1)
    head_out = attn @ Vh  # [H, T, Dh]

    # 4) Concat heads
    multi_head_out = concat_heads(head_out)  # [T, D]

    # ----- G1: gate on the SDPA output (concatenated heads, before W_o) -----
    G1 = sigmoid(x @ W_g["G1"])
    multi_head_out = G1 * multi_head_out

    # 5) Output projection
    y = multi_head_out @ W_o  # [T, D]

    # ----- G5: gate on the final dense output -----
    G5 = sigmoid(x @ W_g["G5"])
    y = G5 * y

    return {
        "Q": Q, "K": K, "V": V,
        "G2": G2, "G3": G3, "G4": G4,
        "multi_head_out": multi_head_out,
        "G1": G1, "final_out": y, "G5": G5,
    }


out = attention_with_gates(x)
print("Final output shape:", out["final_out"].shape)
```

The code above inserts gating modules at five locations, replicating the placements studied in the Qwen paper: the query map (G4), the key map (G3), the value map (G2), the output of the SDPA module (G1), and the final dense output (G5). Although the Qwen team recommend using only the G1 configuration in practice (a single gate on the SDPA output), we include all five here for illustration. The goal is to show that gating is simply a lightweight modulation mechanism applied to different pathways within the attention block. Hopefully this makes the overall concept feel more concrete and intuitive.
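Since the Qwen team recommend the G1 placement, it may help to see it in isolation. The NumPy sketch below is a hypothetical, minimal multi-head attention layer with a single elementwise sigmoid gate on the SDPA output, computed from the layer input so that it is query-dependent and head-specific. The causal mask, the parameter names in `params` and the 1/sqrt(D) initialisation scale are assumptions for illustration, not the official implementation. With random weights the gate scores sit around 0.5; the sparse, low scores reported in the paper only emerge through training.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def g1_gated_attention(x, params, n_heads, causal=True):
    """Multi-head attention with one elementwise sigmoid gate on the SDPA output (G1).

    x: [T, D]. params: dict of [D, D] matrices W_q, W_k, W_v, W_o, W_g.
    Each head owns its own slice of W_g's output columns, so gates are head-specific.
    """
    T, D = x.shape
    Dh = D // n_heads

    def split(t):  # [T, D] -> [H, T, Dh]
        return t.reshape(T, n_heads, Dh).transpose(1, 0, 2)

    Q, K, V = (split(x @ params[w]) for w in ("W_q", "W_k", "W_v"))
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(Dh)  # [H, T, T]
    if causal:
        future = np.triu(np.ones((T, T), dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    heads = softmax(scores) @ V                  # SDPA output [H, T, Dh]
    gate = split(sigmoid(x @ params["W_g"]))     # query-dependent, per head
    heads = gate * heads                         # G1 gating
    return heads.transpose(1, 0, 2).reshape(T, D) @ params["W_o"], gate

rng = np.random.default_rng(0)
T, D, H = 6, 16, 4
params = {name: rng.standard_normal((D, D)) / np.sqrt(D)
          for name in ("W_q", "W_k", "W_v", "W_o", "W_g")}
x = rng.standard_normal((T, D))
y, gate = g1_gated_attention(x, params, n_heads=H)
print(y.shape, f"mean gate score: {gate.mean():.3f}")
```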

Conclusions & Final Thoughts

In this article, we took a whistle-stop tour of gating for softmax attention in LLMs and covered the key lessons learned from the NeurIPS 2025 paper “Gated Attention for Large Language Models: Non-linearity, Sparsity, and Attention-Sink-Free”.

The Qwen paper is an AI tour de force and a treasure trove of practical findings that are immediately applicable to improving most contemporary LLM architectures. The Qwen team have produced an exhaustive study of how to configure gating for LLM softmax attention, shedding light on this important component. There’s little doubt in my mind that most, if not all, frontier AI labs will be furiously scrambling to update their architectures in line with the guidance coming out of the Qwen paper, one of this year’s NeurIPS best papers and a highly coveted achievement in the field. As we speak, there are probably thousands of GPUs crunching away at training LLMs with gating configurations inspired by the clear lessons in the Qwen paper.

Kudos to the Qwen team for making this knowledge public for the benefit of the entire community. The original code can be found here if you are interested in incorporating the Qwen team’s implementation into your own models, or in driving their research further (every great research contribution leads to more questions; it’s turtles all the way down!) to address open questions such as which internal dynamics change when a gate is added, and why this leads to the observed robustness across positional regimes.

Disclaimer: The views and opinions expressed in this article are solely my own and do not represent those of my employer or any affiliated organisations. The content is based on personal reflections and speculative thinking about the future of science and technology. It should not be interpreted as professional, academic, or investment advice. These forward-looking views are intended to spark discussion and imagination, not to make predictions with certainty.

📚 Further Reading

- Alex Heath (2025), “Google’s Rise, RL Mania, and a Party Boat”: A first-hand roundup of NeurIPS 2025 takeaways, highlighting the surge of reinforcement learning, Google/DeepMind’s momentum, and the increasingly extravagant conference party culture. Published in Sources, a newsletter analysing AI industry trends.

- Jianlin Su et al. (2024), “RoFormer: Enhanced Transformer with Rotary Position Embedding”: The original RoPE paper that introduced rotary position embeddings, now used in most modern LLMs. It explains how rotational encoding preserves relative position information and clarifies why changing the RoPE base affects long-range attention behaviour.

- Bowen Peng et al. (2023), “YaRN: Efficient Context Window Extension of Large Language Models”: The core reference behind YaRN interpolation. This work shows how adjusting RoPE frequencies through smooth interpolation can extend models to 128k+ contexts without full retraining.

- Zihan Qiu et al. (2025), “Gated Attention for Large Language Models: Non-linearity, Sparsity, and Attention-Sink-Free”: The definitive study on gating in softmax attention, reviewed in this article. It introduces SDPA-output gating (G1), explains why sigmoid gating introduces non-linearity and sparsity, shows how gating eliminates attention sinks, and demonstrates superior context-length generalisation under RoPE/YaRN modifications.

- Guangxuan Xiao et al. (2023), “StreamingLLM: Efficient Streaming Language Models with Attention Sinks”: The paper that formally identifies the “attention sink” phenomenon, in which early tokens attract disproportionately large attention weights. It explains why baseline transformers often collapse attention onto the first token.

- Mingjie Sun et al. (2024), “Massive Activations in Large Language Models”: Shows that extremely large hidden activations in specific layers propagate through the residual stream and cause pathological attention distributions. The Qwen paper empirically validates this link and demonstrates how gating suppresses massive activations.

- Noam Shazeer (2020), “GLU Variants Improve Transformer”: The foundational reference for gating within feed-forward blocks (SwiGLU, GEGLU). Modern LLMs rely heavily on this family of gated FFN activations; the Qwen paper connects this lineage to gating within attention itself.

- Hochreiter & Schmidhuber (1997), “Long Short-Term Memory”: The earliest and most influential gating architecture. LSTMs use input and output gates (with the forget gate added in follow-up work) for selective information passage, the conceptual precursor to all modern gating methods, including SDPA-output gating in transformers.

- Xiangming Gu et al. (2024), “When Attention Sink Emerges in Language Models”: Provides a modern empirical treatment of attention sinks, key biases, and non-informative early-token dominance.

- Dong et al. (2025), “LongRed: Mitigating Short-Text Degradation of Long-Context LLMs”: Presents a mathematical derivation (referenced in the Qwen paper) showing how modifying RoPE changes attention distributions and hidden-state geometry.