Feature The explosive growth of datacenters that followed ChatGPT’s debut in 2022 has shone a spotlight on the environmental impact of these power-hungry facilities.

But it’s not just power we have to worry about. These facilities are capable of sucking down prodigious quantities of water.

In the US, datacenters can consume anywhere between 300,000 and 4 million gallons of water a day to keep the compute housed within them cool, Austin Shelnutt of Texas-based Strategic Thermal Labs explained in a presentation at SC24 in Atlanta this fall.

We’ll get to why some datacenters use more water than others in a bit, but in some regions rates of consumption are as high as 25 percent of the municipality’s water supply.

This level of water consumption, understandably, has led to concerns over water scarcity and desertification, which were already problematic due to climate change, and have only been exacerbated by the proliferation of generative AI. Today, the AI datacenters built to train these models often require tens of thousands of GPUs, each capable of drawing 1,200 watts of power and turning it into heat.

However, over the next few years, hyperscalers, cloud providers, and model builders plan to deploy millions of GPUs and other AI accelerators requiring gigawatts of power, and that means even higher rates of water consumption.

According to researchers at UC Riverside and the University of Texas Arlington, by 2027 global AI demand could account for the withdrawal of 4.2-6.6 billion cubic meters of water annually. That’s roughly the equivalent of half the UK’s water withdrawal over the course of a year.

However, mitigating datacenter water consumption isn’t as simple as ditching evaporative cooling towers for waterless alternatives.

The datacenter water cycle

Datacenters consume water in a couple of ways. The first, and the area we’ll focus most of our attention on, is direct water consumption. This is water that’s pulled from local sources including water and wastewater treatment plants.

This water is pumped into cooling towers, where it evaporates, transferring heat to the air. If you’ve ever used a swamp cooler to chill your house or apartment, cooling towers work in a similar way.

Evaporative cooling has become popular among datacenter operators for a couple of reasons, but the big one is that these systems are really good at removing heat and don’t require a ton of electricity to do it.

According to Shelnutt, evaporating ten gallons a minute is enough to cool roughly 1.5 megawatts of compute.
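Shelnutt’s figure can be sanity-checked from the latent heat of vaporization. The sketch below is a back-of-the-envelope check, not his method. It assumes roughly 2.4 MJ is absorbed per kilogram of water evaporated (a typical value at cooling-tower temperatures), that a litre of water weighs one kilogram, and that the gallons are US gallons. The function name is illustrative.

```python
# Back-of-the-envelope check of the "ten gallons a minute" figure.
# Assumptions (not from the presentation): latent heat of ~2.4 MJ/kg,
# 1 kg per litre of water, US gallons.

LITRES_PER_US_GALLON = 3.785411784
LATENT_HEAT_J_PER_KG = 2.4e6   # assumed latent heat of vaporization
KG_PER_LITRE = 1.0             # assumed density of water


def evaporative_cooling_mw(gallons_per_minute: float) -> float:
    """Heat carried away (in MW) by evaporating water at the given rate."""
    kg_per_second = gallons_per_minute * LITRES_PER_US_GALLON * KG_PER_LITRE / 60
    watts = kg_per_second * LATENT_HEAT_J_PER_KG
    return watts / 1e6


if __name__ == "__main__":
    print(f"{evaporative_cooling_mw(10):.2f} MW")
```

Under those assumptions, ten gallons a minute works out to about 1.5 MW of heat rejection, in line with the figure quoted above.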

When we talk about “consumption,” we’re referring to water that’s been evaporated. It’s not actually consumed so much as it’s removed from the local watershed by the prevailing winds. This can be problematic given evaporative coolers are most effective in arid climates where water scarcity is often a problem.

According to researchers, about 70-80 percent of the water that enters a cooling tower is actually consumed [PDF]; the rest is used to flush out mineral deposits similar to those found when cleaning a humidifier. The brine that’s left behind is recycled through the system until it exceeds a certain concentration, at which point it’s flushed away to a holding pond or treatment plant run onsite or by the local municipality before it’s returned to the local watershed.

For this to work, the wastewater treatment plant needs to be sized correctly to handle the volume and concentration of brine generated by datacenters in the region. Things can get complicated pretty quickly when this isn’t done, as was the case for Microsoft’s campus in Goodyear, Arizona.

Why datacenters’ drinking habit is so hard to quit

One of the reasons that datacenter operators have gravitated toward evaporative coolers is that they’re so cheap to operate compared to other technologies.

“It’s always of a higher coefficient of performance (COP), meaning less energy required, to evaporate water, regardless of what cooling medium is being utilized,” Shelnutt said.

In fact, COP, which refers to the amount of heat removed for a given amount of power, comes in at 1,230 for evaporative cooling, while dry coolers and chillers manage a COP of about 12 and 4, respectively, he explained.
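To put those COP figures in perspective, the sketch below computes the electrical power each approach would need to reject the same heat load, taking COP as heat removed divided by power consumed. The 1 MW heat load and the names are illustrative assumptions; the COP values are those quoted by Shelnutt.

```python
# Electrical power needed to remove a given heat load at each quoted COP.
# COP = heat removed / power consumed, so power = heat / COP.

COP_BY_TECHNOLOGY = {
    "evaporative cooling": 1230,
    "dry cooler": 12,
    "chiller": 4,
}


def cooling_power_kw(heat_load_kw: float, cop: float) -> float:
    """Power (kW) a cooling system with the given COP draws to remove heat_load_kw."""
    if cop <= 0:
        raise ValueError("COP must be positive")
    return heat_load_kw / cop


if __name__ == "__main__":
    heat_load_kw = 1000  # hypothetical 1 MW of heat to reject
    for name, cop in COP_BY_TECHNOLOGY.items():
        print(f"{name}: {cooling_power_kw(heat_load_kw, cop):.1f} kW")
```

For a 1 MW heat load that is under 1 kW for evaporative cooling, roughly 83 kW for a dry cooler, and 250 kW for a chiller.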

In terms of energy consumption, this makes an evaporatively cooled datacenter far more energy efficient than one that doesn’t consume water, and that translates to a lower operating cost.

The problem is that not every location and climate is well suited to evaporative cooling. In hotter climates where water is scarce, or in places with high humidity where evaporative coolers are ineffective, chillers, which function much like your AC unit, may be used instead.

In cooler climates such as the Nordic regions, datacenters often employ free cooling and dry coolers, which take advantage of the lower ambient air temperature to eject heat into the atmosphere without consuming any water.

Whether or not evaporative cooling is used is highly dependent on location and climate, Digital Realty CTO Chris Sharp told The Register.

“You have to understand water is a scarce resource. Everybody has to start at that base point,” he explained. “You have to be good stewards of that resource just to ensure that you’re utilizing it effectively.”

The colocation giant operates more than 300 bit barns around the globe, and uses a variety of designs based on predicted capacity requirements and environmental factors. The company’s standard datacenter design, Sharp says, doesn’t consume any water at all, instead relying on chillers to pull heat from the facility. However, in some regions, evaporative cooling and dry coolers are employed instead.

Most datacenter water isn’t consumed onsite

While dry coolers and chillers may not consume water onsite, they aren’t without compromise. These technologies consume substantially more power from the local grid and potentially result in higher indirect water consumption.

According to the US Energy Information Administration, the US sources roughly 89 percent of its power from natural gas, nuclear, and coal plants. Many of these plants employ steam turbines to generate power, which consumes a lot of water in the process.

Ironically, while evaporative coolers are why datacenters consume so much water onsite, the same technology is commonly employed to reduce the amount of water lost to steam. Even so, the amount of water consumed through energy generation far exceeds that of modern datacenters.

A 2016 study [PDF] by Lawrence Berkeley National Lab (LBL) found that roughly 83 percent of water consumption attributable to datacenters can be attributed to power generation. As a result, reducing onsite water consumption at the expense of higher power draw could lead to an increase in the overall amount of water consumed.

However, just because power plants may pull more water than datacenters, that doesn’t mean they’re pulling the same water, Shaolei Ren, associate professor of electrical and computer engineering at UC Riverside, told The Register, adding that many power plants get their water from sources like rivers and lakes that may not be suitable for datacenters.

Ren and his team have been studying datacenters’ environmental impact on water consumption and air quality.

This, again, is highly dependent on location and the grid mix. For example, datacenters located in regions with an abundance of hydroelectric, solar, or wind power will have lower indirect water consumption than those powered by fossil fuel combustion.
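The trade-off between onsite and indirect water can be framed as a break-even question: how much water would the grid need to consume per kilowatt-hour before swapping evaporative cooling for a waterless system uses more water overall? The sketch below is a rough illustration, not a model from the LBL study or from Ren’s team. It counts only cooling energy and evaporated water (no blowdown), assumes about 2.4 MJ absorbed per kilogram evaporated and one kilogram per litre, and uses the COP figures quoted by Shelnutt. All names are hypothetical.

```python
# Break-even grid water intensity (litres per kWh) above which replacing
# evaporative cooling with a waterless system raises total water use.
# Assumptions: ~2.4 MJ/kg latent heat, 1 kg per litre, cooling energy only.

LATENT_HEAT_J_PER_KG = 2.4e6  # assumed latent heat of vaporization
J_PER_KWH = 3.6e6


def evaporated_litres(heat_kwh: float) -> float:
    """Litres of water evaporated onsite to reject heat_kwh of heat."""
    return heat_kwh * J_PER_KWH / LATENT_HEAT_J_PER_KG


def breakeven_grid_intensity(heat_kwh: float, cop_wet: float, cop_dry: float) -> float:
    """Grid water intensity (L/kWh) at which both options use equal water."""
    onsite_water_saved = evaporated_litres(heat_kwh)
    extra_electricity_kwh = heat_kwh / cop_dry - heat_kwh / cop_wet
    return onsite_water_saved / extra_electricity_kwh


if __name__ == "__main__":
    heat_kwh = 1000  # hypothetical: 1 MW of heat rejected for one hour
    print(f"dry cooler: {breakeven_grid_intensity(heat_kwh, 1230, 12):.2f} L/kWh")
    print(f"chiller:    {breakeven_grid_intensity(heat_kwh, 1230, 4):.2f} L/kWh")
```

Under these assumptions the break-even lands at roughly 18 L/kWh for dry coolers and 6 L/kWh for chillers. Whether a given grid sits above or below those values depends on its generation mix.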

What can be done to curb datacenter water consumption?

Understanding that datacenters are, with few exceptions, always going to use some amount of water, there are still plenty of ways operators are looking to reduce direct and indirect consumption.

One of the most obvious is matching water flow rates to facility load and utilizing free cooling wherever possible. Using a combination of sensors and software automation to monitor pumps and filters at facilities employing evaporative cooling, Sharp says Digital Realty has observed a 15 percent reduction in overall water usage.

“That equates to about 126 million gallons of avoided withdrawal from the system because we’re just running it more efficiently,” he said.

We’re also seeing datacenters built in colder climates that can take advantage of free cooling most of the year. Better yet, in many Nordic countries, large quantities of hydroelectric power mean that even when auxiliary dry coolers or chillers are required, indirect water consumption isn’t as much of an issue.

We’ve also seen heat generated by datacenters used to warm local offices, support district heating grids, and even heat greenhouses to grow produce year round.

In regions where free cooling and heat reuse aren’t practical, shifting to direct-to-chip and immersion liquid cooling (DLC) for AI clusters, which, by the way, is a closed loop that doesn’t really consume water, can facilitate the use of dry coolers. While dry coolers are still more energy-intensive than evaporative coolers, the substantially lower and therefore better power usage effectiveness (PUE) of liquid cooling could make up the difference.

If you’re not familiar, PUE describes how much power consumed by datacenters goes toward compute, storage, or networking equipment – stuff that makes money – versus things like facility cooling, which don’t. The closer the PUE is to 1.0, the more efficient the facility.

This is possible because a sizable chunk, upward of 20 percent, of the energy used by air-cooled AI systems goes to chassis fans. On top of that, water is a much better conductor of heat than air. Shifting to DLC, something that’s already happening with Nvidia’s top-specced Blackwell parts, has the potential to drop PUE from 1.69-1.44 to around 1.1 or lower.

However, as Shelnutt noted in his SC24 presentation, this balancing act depends heavily on the power saved by DLC not being reallocated to support more compute.
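To see what that PUE drop means in absolute terms, here is a minimal sketch using the standard definition of PUE as total facility power divided by IT equipment power. The 100 MW IT load is a hypothetical figure, and the calculation assumes the IT load stays fixed, which is exactly the condition Shelnutt’s caveat is about.

```python
# Facility power at a given PUE, where PUE = total facility power / IT power.
# The IT load below is hypothetical and is assumed to stay constant.


def facility_power_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power (MW) for a given IT load and PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * pue


def power_saved_mw(it_load_mw: float, pue_before: float, pue_after: float) -> float:
    """Facility power saved (MW) by lowering PUE at a fixed IT load."""
    return facility_power_mw(it_load_mw, pue_before) - facility_power_mw(it_load_mw, pue_after)


if __name__ == "__main__":
    it_load_mw = 100  # hypothetical IT load
    for pue_before in (1.69, 1.44):
        saved = power_saved_mw(it_load_mw, pue_before, 1.1)
        print(f"PUE {pue_before} -> 1.1 saves {saved:.0f} MW")
```

At that load, the move from a PUE of 1.69 or 1.44 down to 1.1 frees up about 59 MW or 34 MW respectively, provided none of it is spent on extra compute.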

Water-aware computing

While many of these water-saving technologies require changes to facility infrastructure to implement, another approach may be to change the way workloads are distributed across datacenters.

The idea here isn’t that different from carbon-aware computing, where workloads are routed to different regions based on the time and carbon-intensity of the grid, Ren explained. “They can do something similar based on the water stress level and real-time water efficiency, because this water evaporation rate does change over time – an hourly noon time versus the night time.”

This, he admits, is something that the cloud providers and hyperscalers will have an easier time achieving as they maintain a tight grip on the orchestration of their infrastructure. “Colocation providers have more challenges due to limited control over the servers and workloads.”

This approach might not be appropriate for latency-sensitive workloads, like AI inferencing, where proximity to users is essential for real-time data processing. However, workloads like AI training don’t have these same limitations. One can imagine an AI training workload, which might run for weeks or months, could be queued up to run in a far-flung datacenter located in the polar regions that can take advantage of free cooling.

Fine-tuning workloads, which involve altering the behavior of a pre-trained model, are far less computationally intensive. Depending on the size of the base model and the dataset used, a fine-tuning job may only require a few hours to complete. In this case, the job could be scheduled to run at night when temperatures are lower and less water is lost to evaporation.
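As a rough illustration of how such scheduling might work, the sketch below picks the start hour that minimizes water use for a short job, given an hourly forecast of water consumed per kilowatt-hour of compute. It is not Ren’s method or any cloud provider’s scheduler: the forecast values, the function name, and the choice to ignore windows that wrap past midnight are all assumptions made for the example.

```python
# Pick the start hour with the lowest total forecast water intensity for a
# job of fixed length. The forecast values are hypothetical; windows that
# wrap past the end of the forecast are not considered.
from typing import Sequence


def best_start_hour(water_l_per_kwh: Sequence[float], job_hours: int) -> int:
    """Index of the contiguous window of job_hours with the lowest summed intensity."""
    if not 0 < job_hours <= len(water_l_per_kwh):
        raise ValueError("job_hours must be between 1 and the forecast length")
    total = sum(water_l_per_kwh[:job_hours])
    best_start, best_total = 0, total
    for start in range(1, len(water_l_per_kwh) - job_hours + 1):
        # Slide the window: add the hour entering, drop the hour leaving.
        total += water_l_per_kwh[start + job_hours - 1] - water_l_per_kwh[start - 1]
        if total < best_total:
            best_start, best_total = start, total
    return best_start


# Hypothetical 24-hour forecast (index 0 = midnight): low at night, peak midday.
FORECAST = [1.0, 0.9, 0.8, 0.8, 0.9, 1.0, 1.2, 1.5, 1.8, 2.1, 2.4, 2.6,
            2.8, 2.7, 2.5, 2.3, 2.0, 1.7, 1.5, 1.3, 1.2, 1.1, 1.0, 1.0]

if __name__ == "__main__":
    print("Start a 4-hour job at hour", best_start_hour(FORECAST, 4))
```

A real scheduler would also need to weigh electricity price, carbon intensity, and capacity, but the same sliding-window comparison could be applied across several sites to pick both a location and a start time.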

Is water the new oil?

While datacenter water consumption remains a topic of concern, particularly in drought-prone areas, Shelnutt argues the bigger issue is where the water used by these facilities is coming from.

“Planet Earth has no shortage of water. What planet Earth has a shortage of, in some cases, is regional drinkable water, and there is a water distribution scarcity issue in certain parts of the world,” he said.

To address these concerns, Shelnutt suggests datacenter operators should be investing in desalination plants, water distribution networks, on-premises wastewater treatment facilities, and non-potable storage to support broader adoption of evaporative coolers.

While the idea of first desalinating and then shipping water by pipeline or train might sound cost-prohibitive, many hyperscalers have already committed hundreds of millions of dollars to securing onsite nuclear power over the next few years. As such, investing in water desalination and transportation may not be so far fetched.

More importantly, Shelnutt claims that desalinating and shipping water from the coasts is still more efficient than using dry coolers or refrigerant-based cooling tech.

“Desalinate ocean water right now at three kilowatts per cubic meter – that’s an average over the last ten years; there are a lot of installations of desalination plants that are down below one kilowatt hour per cubic meter – that’s a COP of 222,” he said.

Ship that 1,000 miles by pipeline and Shelnutt says the COP drops to 132. Shipped by train, the COP falls yet further to 38, far less than evaporating water sourced from a municipal treatment plant, but still far more efficient than using dry coolers. ®
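Shelnutt’s COP of 222 can be reproduced with simple arithmetic if the desalination figure is read as three kilowatt-hours per cubic meter and each kilogram of water is assumed to absorb about 2.4 MJ as it evaporates. The sketch below works under those assumptions, which are ours rather than stated in the presentation, and runs the calculation in reverse to show the energy budgets implied by the pipeline and train COPs. Those implied budgets are inferences, not figures Shelnutt gave.

```python
# Reproduce the quoted desalination COP and infer the energy budgets implied
# by the pipeline and train COPs.
# Assumptions: ~2.4 MJ/kg latent heat, 1000 kg of water per cubic meter.

LATENT_HEAT_J_PER_KG = 2.4e6  # assumed latent heat of vaporization
KG_PER_M3 = 1000
J_PER_KWH = 3.6e6

# Heat removed by evaporating one cubic meter of water, in kWh (about 667).
HEAT_REMOVED_KWH_PER_M3 = LATENT_HEAT_J_PER_KG * KG_PER_M3 / J_PER_KWH


def cop_from_energy(kwh_per_m3: float) -> float:
    """COP when supplying each cubic meter of water costs kwh_per_m3."""
    return HEAT_REMOVED_KWH_PER_M3 / kwh_per_m3


def energy_from_cop(cop: float) -> float:
    """Energy per cubic meter (kWh) implied by a given COP."""
    return HEAT_REMOVED_KWH_PER_M3 / cop


if __name__ == "__main__":
    print(f"Desalination at 3 kWh/m3: COP {cop_from_energy(3):.0f}")
    print(f"Pipeline (COP 132): {energy_from_cop(132):.2f} kWh/m3 implied")
    print(f"Train (COP 38): {energy_from_cop(38):.2f} kWh/m3 implied")
```

On these assumptions, the pipeline and train figures correspond to total energy budgets of roughly 5 and 17.5 kWh per cubic meter of water delivered.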

Function The explosive development of datacenters that adopted ChatGPT’s debut in 2022 has shone a highlight on the environmental influence of those power-hungry services.

But it surely’s not simply energy we now have to fret about. These services are able to sucking down prodigious portions of water.

Within the US, datacenters can eat wherever between 300,000 and 4 million gallons of water a day to maintain the compute housed inside them cool, Austin Shelnutt of Texas-based Strategic Thermal Labs defined in a presentation at SC24 in Atlanta this fall.

We’ll get to why some datacenters use extra water than others in a bit, however in some areas charges of consumption are as excessive as 25 p.c of the municipality’s water provide.

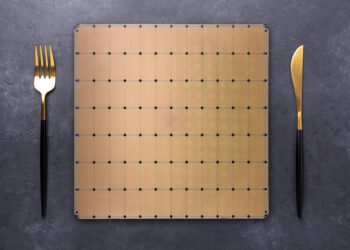

This stage of water consumption, understandably, has led to considerations over water shortage and desertification, which had been already problematic resulting from local weather change, and have solely been exacerbated by the proliferation of generative AI. Right now, the AI datacenters constructed to coach these fashions usually require tens of 1000’s of GPUs, every able to producing 1,200 watts of energy and warmth.

Nonetheless, over the following few years, hyperscalers, cloud suppliers, and mannequin builders plan to deploy hundreds of thousands of GPUs and different AI accelerators requiring gigawatts of power, and meaning even larger charges of water consumption.

In response to researchers at UC Riverside and the College of Texas Arlington, by 2027 international AI demand might account for the withdrawal of 4.2-6.6 billion cubic meters of water yearly. That is roughly the equal of half the UK’s water withdrawal over the course of a yr.

Nonetheless, mitigating datacenter water consumption is not so simple as ditching evaporative cooling towers for waterless options.

The datacenter water cycle

Datacenters eat water in a few methods. The primary, and the world we’ll focus most of our consideration on, is direct water consumption. That is water that is pulled from native sources together with water and wastewater remedy crops.

This water is pumped into cooling towers, the place it evaporates, transferring warmth to the air. In the event you’ve ever used a swamp cooler to sit back your own home or residence, cooling towers work in an identical method.

Evaporative cooling has turn into standard amongst datacenter operators for a few causes, however the massive one is that they’re actually good at eliminating warmth and do not require a ton of electrical energy to do it.

In response to Shelnutt, evaporating ten gallons a minute is sufficient to cool roughly 1.5 megawatts of compute.

After we discuss “consumption,” we’re referring to water that is been evaporated. It is not truly consumed a lot because it’s faraway from the native watershed by the prevailing winds. This may be problematic given evaporative coolers are only in arid climates the place water shortage is usually an issue.

In response to researchers, about 70-80 p.c of the water that enters a cooling tower is definitely consumed [PDF], the remaining is used to flush out mineral deposits much like these discovered when cleansing a humidifier. The brine that is left behind is recycled by means of the system till it exceeds a sure focus, at which level it is flushed away to a holding pond or remedy plant run onsite or by the native municipality earlier than it is returned to the native watershed.

For this to work, the wastewater remedy plant must be sized accurately to deal with the amount and focus of brine generated by datacenters within the area. Issues can get difficult fairly shortly when this is not carried out, as was the case for Microsoft’s campus in Goodyear, Arizona.

Why datacenters’ consuming behavior is so arduous to give up

One of many causes that datacenter operators have gravitated towards evaporative coolers is as a result of they’re so low-cost to function in comparison with different applied sciences.

“It’s at all times of a better coefficient of efficiency (COP), that means much less power required, to evaporate water, no matter what cooling medium is being utilized,” Shelnutt stated.

Actually, COP, which refers back to the quantity of warmth eliminated for a given quantity of energy, for evaporative cooling is available in at 1,230 whereas dry coolers and chillers handle a COP of about 12 and 4, respectively, he defined.

When it comes to power consumption, this makes an evaporatively cooled datacenter much more power environment friendly than one that does not eat water, and that interprets to a decrease working value.

The problem is that not each location and local weather is effectively suited to evaporative cooling. In hotter climates the place water is both scarce or locations with excessive humidity the place evaporative coolers are ineffective, chillers, which operate much like your AC unit, could also be used as a substitute.

In cooler climates such because the Nordic areas, datacenters usually make use of free cooling and dry coolers, which reap the benefits of the decrease ambient air temperature to eject warmth into the environment with out consuming any water.

Whether or not or not evaporating cooling is used is extremely depending on location and local weather, Digital Realty CTO Chris Sharp instructed The Register.

“It’s a must to perceive water is a scarce useful resource. All people has to start out at that base level,” he defined. “It’s a must to be good stewards of that useful resource simply to make sure that you are using it successfully.”

The colocation big operates greater than 300 bit barns across the globe, and makes use of a wide range of designs based mostly on predicted capability necessities and environmental elements. The corporate’s normal datacenter design, Sharp says, would not eat any water in any respect, as a substitute counting on chillers to tug power from the power. Nonetheless, in some areas, evaporative cooling and dry coolers are employed as a substitute.

Most datacenter water is not consumed onsite

Whereas dry coolers and chillers might not eat water onsite, they don’t seem to be with out compromise. These applied sciences eat considerably extra energy from the native grid and probably lead to larger oblique water consumption.

In response to the US Power Data Administration, the US sources roughly 89 p.c of its energy from pure fuel, nuclear, and coal crops. Many of those crops make use of steam generators to generate energy, which consumes loads of water within the course of.

Paradoxically, whereas evaporative coolers are why datacenters eat a lot water onsite, the identical know-how is usually employed to cut back the quantity of water misplaced to steam. Even nonetheless the quantity of water consumed by means of power technology far exceeds that of contemporary datacenters.

A 2016 examine [PDF] by Lawrence Berkeley Nationwide Lab (LBL) discovered that roughly 83 p.c of water consumption attributable to datacenters might be attributed to energy technology. Because of this, decreasing onsite water consumption on the expense of upper energy draw might result in a rise within the quantity of water consumed.

Nonetheless, simply because energy crops might pull extra water than datacenters, that does not imply they’re pulling the identical water, Shaolei Ren, affiliate professor {of electrical} and laptop engineering at UC Riverside, instructed The Register, including that many energy crops get their water from sources like rivers and lakes that is probably not appropriate for datacenters.

Ren and his staff have been finding out the datacenter’s environmental influence on water consumption and air high quality.

This, once more, is extremely depending on location and the grid combine. For instance, datacenters positioned in areas with an abundance of hydroelectric, photo voltaic, or wind energy could have decrease oblique water consumption than one powered by fossil fuels or combustion.

What may be carried out to curb datacenter water consumption?

Understanding that datacenters are, with few exceptions, at all times going to make use of some quantity of water, there are nonetheless loads of methods operators want to cut back direct and oblique consumption.

Some of the apparent is matching water stream charges to facility load and using free cooling wherever potential. Utilizing a mixture of sensors and software program automation to watch pumps and filters at services using evaporative cooling, Sharp says Digital Realty has noticed a 15 p.c discount in total water utilization.

“That equates to about 126 million gallons of prevented withdrawal from the system as a result of we’re simply operating it extra effectively,” he stated.

We’re additionally seeing datacenters inbuilt colder climates that may reap the benefits of free cooling a lot of the yr. Higher but, in lots of Nordic nations, giant portions of hydroelectric energy imply that even when auxiliary dry coolers or chillers are required, oblique water consumption is not as a lot of a difficulty.

We have additionally seen warmth generated by datacenters used to heat native places of work, help district heating grids, and even greenhouses to develop produce yr spherical.

In areas the place free cooling and warmth reuse aren’t sensible, shifting to direct-to-chip and immersion liquid cooling (DLC) for AI clusters, which, by the best way, is a closed loop that does not actually eat water, can facilitate the usage of dry coolers. Whereas dry coolers are nonetheless extra energy-intensive than evaporative coolers, the considerably decrease and due to this fact higher energy use effectiveness (PUE) of liquid cooling might make up the distinction.

In the event you’re not acquainted, PUE describes how a lot energy consumed by datacenters goes towards compute, storage, or networking gear – stuff that makes cash – versus issues like facility cooling, which do not. The nearer the PUE is to 1.0, the extra environment friendly the power.

That is potential as a result of a large chunk, upward of 20 p.c, of the power utilized by air-cooled AI techniques goes to chassis followers. On prime of that, water is a significantly better conductor of warmth. Shifting to DLC, one thing that is already occurring with Nvidia’s top-specced Blackwell components, has the potential to drop PUE from 1.69-1.44 to round 1.1 or decrease.

Nonetheless, as Shelnutt famous in his SC24 presentation, this balancing act relies upon closely on the facility saved by DLC not being reallocated to help extra compute.

Water-aware computing

Whereas many of those water-saving applied sciences require modifications to facility infrastructure to implement, one other method may be to vary the best way workloads are distributed throughout datacenters.

The thought right here is not that completely different from carbon-aware computing, the place workloads are routed to completely different areas based mostly on the time and carbon-intensity of the grid, Ren defined. “They will do one thing comparable based mostly on the water stress stage and real-time water effectivity, as a result of this water evaporation charge does change over time – an hourly midday time versus the evening time.”

This, he admits, is not one thing that the cloud suppliers and hyperscalers could have a neater time reaching as they keep a decent grip on the orchestration of their infrastructure. “Colocation suppliers have extra challenges resulting from restricted management over the servers and workloads.”

This method may not be acceptable for latency-sensitive workloads, like AI inferencing, the place proximity to customers is crucial for real-time information processing. Nonetheless, workloads like AI coaching do not have these identical limitations. One can think about an AI coaching workload, which could run for weeks or months, might be queued as much as run in a far-flung datacenter positioned within the polar areas that may reap the benefits of free cooling.

Superb-tuning workloads, which contain altering the conduct of a pre-trained mannequin, are far much less computationally intensive. Relying on the dimensions of the bottom mannequin and the dataset used, a fine-tuning job might solely require a number of hours to finish. On this case, the job might be scheduled to run at evening when temperatures are decrease and fewer water is misplaced to evaporation.

Is water the brand new oil?

Whereas datacenter water consumption stays a subject of concern, significantly in drought-prone areas, Shelnutt argues the larger difficulty is the place the water utilized by these services is coming from.

“Planet Earth has no scarcity of water. What planet Earth has a scarcity of, in some instances, is regional drinkable water, and there’s a water distribution shortage difficulty in sure components of the world,” he stated.

To handle these considerations, Shelnutt suggests datacenter operators must be investing in desalination crops, water distribution networks, on-premises wastewater remedy services, and non-potable storage to help broader adoption of evaporative coolers.

Whereas the concept of first desalinating after which delivery water by pipeline or prepare may sound cost-prohibitive, many hyperscalers have already dedicated a whole bunch of hundreds of thousands of {dollars} to securing onsite nuclear energy over the following few years. As such, investing in water desalination and transportation is probably not to this point fetched.

Extra importantly, Shelnutt claims that desalinating and delivery water from the coasts remains to be extra environment friendly than utilizing dry coolers or refrigerant-based cooling tech.

“Desalinate ocean water proper now at three kilowatts per cubic meter – that is a median over the past ten years; there are lots of installations of desalination crops which can be down under one kilowatt hour per cubic meter – that is a COP of 222,” he stated.

Ship that 1,000 miles by pipeline and Shelnutt says the COP drops to 132. Shipped by prepare, the COP falls but additional to 38, far lower than evaporating water sourced from a municipal remedy plant, however nonetheless much more environment friendly than utilizing dry coolers. ®