I work as an AI Engineer in a particular niche: document automation and information extraction. In my industry, using Large Language Models has presented a number of challenges when it comes to hallucinations. Imagine an AI misreading an invoice amount as $100,000 instead of $1,000, leading to a 100x overpayment. When faced with such risks, preventing hallucinations becomes a critical aspect of building robust AI solutions. These are some of the key principles I focus on when designing solutions that may be prone to hallucinations.

There are various ways to incorporate human oversight in AI systems. In some systems, extracted information is always presented to a human for review. For instance, a parsed resume might be shown to a user before submission to an Applicant Tracking System (ATS). More often, the extracted information is automatically added to a system and only flagged for human review if potential issues arise.

A crucial part of any AI platform is determining when to include human oversight. This often involves different types of validation rules:

1. Simple rules, such as ensuring line-item totals match the invoice total.

2. Lookups and integrations, like validating the total amount against a purchase order in an accounting system or verifying payment details against a supplier’s previous records.
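As a minimal sketch of the two rule types above, the following checks an extracted invoice and returns the reasons it should be flagged for review. The field names, the `purchase_orders` dictionary (a stand-in for a real accounting-system lookup), and the function names are all assumptions made for illustration.

```python
from decimal import Decimal

def line_items_match_total(line_items, invoice_total):
    """Simple rule: the line-item amounts must sum to the invoice total."""
    return sum(line_items, Decimal("0")) == invoice_total

def matches_purchase_order(invoice_total, po_number, purchase_orders):
    """Lookup rule: the total must equal the amount on the referenced purchase order."""
    expected = purchase_orders.get(po_number)
    return expected is not None and expected == invoice_total

def review_flags(invoice, purchase_orders):
    """Return the failed checks; an empty list means nothing needs flagging."""
    flags = []
    if not line_items_match_total(invoice["line_items"], invoice["total"]):
        flags.append("line items do not sum to invoice total")
    if not matches_purchase_order(invoice["total"], invoice["po_number"], purchase_orders):
        flags.append("total does not match purchase order")
    return flags

# Hypothetical data: the dictionary stands in for an accounting-system lookup.
purchase_orders = {"PO-1001": Decimal("1000.00")}
invoice = {
    "po_number": "PO-1001",
    "line_items": [Decimal("400.00"), Decimal("600.00")],
    "total": Decimal("100000.00"),  # a misread amount, 100x too large
}
print(review_flags(invoice, purchase_orders))
```

Using `Decimal` rather than floats keeps the equality checks exact for currency amounts.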

These processes are a good thing. But we also don’t want an AI that constantly triggers safeguards and forces manual human intervention. Hallucinations can defeat the purpose of using AI if they keep triggering these safeguards.

One solution to preventing hallucinations is to use Small Language Models (SLMs) which are “extractive”. This means that the model labels parts of the document and we collect these labels into structured outputs. I recommend trying to use SLMs where possible rather than defaulting to LLMs for every problem. For example, in resume parsing for job boards, waiting 30+ seconds for an LLM to process a resume is often unacceptable. For this use case we’ve found an SLM can provide results in 2–3 seconds with higher accuracy than larger models like GPT-4o.
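To illustrate what “collecting labels into structured outputs” can look like, here is a minimal sketch. It assumes the extractive model returns one label per token, with `"O"` meaning unlabelled; the label names and the `collect_labels` helper are hypothetical and not taken from any particular model.

```python
from itertools import groupby

def collect_labels(tagged_tokens):
    """Group consecutive tokens sharing a label into fields; 'O' means unlabelled."""
    fields = {}
    for label, group in groupby(tagged_tokens, key=lambda pair: pair[1]):
        if label == "O":
            continue
        text = " ".join(token for token, _ in group)
        fields.setdefault(label, []).append(text)
    return fields

# Hypothetical output of an extractive model over a resume snippet.
tagged = [
    ("Jane", "NAME"), ("Doe", "NAME"), ("worked", "O"), ("at", "O"),
    ("Acme", "EMPLOYER"), ("Corp", "EMPLOYER"), ("as", "O"), ("an", "O"),
    ("Engineer", "JOB_TITLE"),
]
print(collect_labels(tagged))
```

Every value in the result is assembled from tokens that exist in the document, so the model can attach the wrong label to a span but cannot produce text that was never there.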

An example from our pipeline

In our startup a document can be processed by up to 7 different models, only 2 of which might be an LLM. That’s because an LLM isn’t always the best tool for the job. Some steps, such as Retrieval Augmented Generation, rely on a small multimodal model to create useful embeddings for retrieval. The first step, detecting whether something is even a document, uses a small and super-fast model that achieves 99.9% accuracy. It’s vital to break a problem down into small chunks and then work out which parts LLMs are best suited for. This way, you reduce the chances of hallucinations occurring.

Distinguishing Hallucinations from Errors

I make a point of differentiating between hallucinations (the model inventing information) and errors (the model misinterpreting existing information). For instance, selecting the wrong dollar amount as a receipt total is an error, while generating a non-existent amount is a hallucination. Extractive models can only make errors, while generative models can make both errors and hallucinations.
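The distinction can be made concrete with a small sketch. It assumes we have a known-correct value to compare against (for example, in an evaluation set) and the document split into tokens; the function name and the receipt data are hypothetical.

```python
def classify_extraction(extracted, expected, document_tokens):
    """Label an extracted value against a known-correct value and the source tokens."""
    if extracted == expected:
        return "correct"
    if extracted in document_tokens:
        return "error"          # real text from the document, but the wrong pick
    return "hallucination"      # text that appears nowhere in the document

# Hypothetical receipt tokens; the true total is $54.00.
receipt = ["Subtotal", "$50.00", "Tax", "$4.00", "Total", "$54.00"]

print(classify_extraction("$54.00", "$54.00", receipt))
print(classify_extraction("$50.00", "$54.00", receipt))
print(classify_extraction("$540.00", "$54.00", receipt))
```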

When using generative models we need some way of eliminating hallucinations.

Grounding refers to any technique which forces a generative AI model to justify its outputs with reference to some authoritative information. How grounding is managed is a matter of risk tolerance for each project.

For example, a company with a general-purpose inbox might look to identify action items. Usually, emails requiring actions are sent directly to account managers. A general inbox that’s full of invoices, spam, and simple replies (“thank you”, “OK”, etc.) has far too many messages for humans to check. What happens when actions are mistakenly sent to this general inbox? Actions regularly get missed. If a model makes errors but is generally accurate, it is already doing better than doing nothing. In this case the tolerance for errors and hallucinations can be high.

Other situations might warrant particularly low risk tolerance: think financial documents and “straight-through processing”. This is where extracted information is automatically added to a system without review by a human. For example, a company might not allow invoices to be automatically added to an accounting system unless (1) the payment amount exactly matches the amount in the purchase order, and (2) the payment method matches the previous payment method of the supplier.
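A straight-through processing gate along these lines might look like the sketch below. The `ExtractedInvoice` type, the route names, and the way the purchase order amount and previous payment method are passed in are assumptions for illustration; in practice they would come from the accounting system.

```python
from dataclasses import dataclass
from decimal import Decimal

@dataclass(frozen=True)
class ExtractedInvoice:
    supplier_id: str
    amount: Decimal
    payment_method: str

def route_invoice(invoice, po_amount, last_payment_method):
    """Return 'auto_post' only when both straight-through conditions hold."""
    amount_ok = po_amount is not None and invoice.amount == po_amount
    method_ok = invoice.payment_method == last_payment_method
    return "auto_post" if amount_ok and method_ok else "human_review"

invoice = ExtractedInvoice("SUP-7", Decimal("1000.00"), "bank_transfer")
print(route_invoice(invoice, Decimal("1000.00"), "bank_transfer"))
print(route_invoice(invoice, Decimal("1000.00"), "cheque"))
```

A supplier with no payment history (`last_payment_method=None`) falls through to human review, which is the cautious default for a low risk tolerance.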

Even when risks are low, I still err on the side of caution. Whenever I’m focused on information extraction I follow a simple rule:

If text is extracted from a document, then it must exactly match text found in the document.

This is tricky when the information is structured (e.g. a table), especially because PDFs don’t carry any information about the order of words on a page. For example, a description of a line-item might split across multiple lines, so the aim is to draw a coherent box around the extracted text regardless of the left-to-right order of the words (or right-to-left in some languages).

Forcing the model to point to exact text in a document is “strong grounding”. Strong grounding isn’t limited to information extraction. For example, customer service chat-bots might be required to quote (verbatim) from standardised responses in an internal knowledge base. This isn’t always ideal, given that standardised responses might not actually be able to answer a customer’s question.
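A minimal sketch of a strong grounding check follows, assuming the document is available as plain text and each extracted field is a string. The helper names are hypothetical, and whitespace is normalised so that a value split across lines can still match.

```python
def normalise(text):
    """Collapse runs of whitespace so line breaks in the source do not matter."""
    return " ".join(text.split())

def is_strongly_grounded(extracted, document_text):
    """True only if the extracted value appears verbatim in the document."""
    needle = normalise(extracted)
    return bool(needle) and needle in normalise(document_text)

def reject_ungrounded(fields, document_text):
    """Keep only grounded fields; return the rejected field names separately."""
    kept = {k: v for k, v in fields.items() if is_strongly_grounded(v, document_text)}
    rejected = sorted(set(fields) - set(kept))
    return kept, rejected

# Hypothetical document text and model output.
document = "Invoice INV-42\nConsulting services,\nMarch 2024\nTotal due: $1,000.00"
fields = {"description": "Consulting services, March 2024", "total": "$100,000.00"}
print(reject_ungrounded(fields, document))
```

This is a deliberately crude version: a plain substring test ignores token boundaries and assumes the text is already in reading order, which, as noted above, PDFs do not guarantee. A fuller approach would likely match against tokens with their positions on the page.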

Another tricky situation is when information needs to be inferred from context. For example, a medical assistant AI might infer the presence of a condition based on its symptoms without the medical condition being expressly stated. Identifying where those symptoms were mentioned would be a form of “weak grounding”. The justification for a response must exist in the context, but the exact output can only be synthesised from the supplied information. A further grounding step could be to force the model to look up the medical condition and justify that those symptoms are relevant. This may still need weak grounding because symptoms can often be expressed in many ways.
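Weak grounding can be sketched in a similar way. Here the model is assumed to return a synthesised conclusion together with a list of evidence quotes; only the quotes are checked against the context, not the conclusion itself. The output shape (`condition`, `evidence`) and the example text are hypothetical.

```python
def check_weak_grounding(answer, context):
    """Return evidence quotes missing from the context (empty list = grounded)."""
    evidence = answer.get("evidence", [])
    if not evidence:
        return ["<no evidence supplied>"]
    lowered = context.lower()
    return [quote for quote in evidence if quote.lower() not in lowered]

# Hypothetical context and model output.
context = "Patient reports excessive thirst and frequent urination over the past month."
answer = {
    "condition": "possible diabetes",  # synthesised, not quoted from the context
    "evidence": ["excessive thirst", "frequent urination"],
}
print(check_weak_grounding(answer, context))
```

An answer that cites a symptom the context never mentions, or that cites no evidence at all, comes back with a non-empty list and can be rejected or routed to review.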

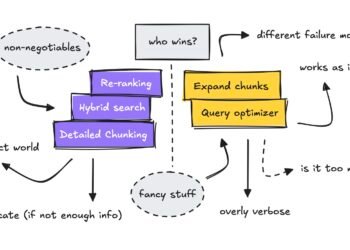

Using AI to solve increasingly complex problems can make it difficult to use grounding. For example, how do you ground outputs if a model is required to perform “reasoning” or to infer information from context? Here are some considerations for adding grounding to complex problems:

- Identify complex decisions which could be broken down into a set of rules. Rather than having the model generate an answer to the final decision, have it generate the components of that decision. Then apply rules to those components to produce the result. (Caveat: this can sometimes make hallucinations worse. Asking the model multiple questions gives it multiple opportunities to hallucinate. Asking it one question could be better. But we’ve found current models are generally worse at complex multi-step reasoning.)

- If something can be expressed in many ways (e.g. descriptions of symptoms), a first step could be to get the model to tag text and standardise it (usually referred to as “coding”). This might open opportunities for stronger grounding.

- Set up “tools” for the model to call which constrain the output to a very specific structure. We don’t want to execute arbitrary code generated by an LLM. We want to create tools that the model can call and restrict what those tools can do.

- Wherever possible, include grounding in tool use, e.g. by validating responses against the context before sending them to a downstream system.

- Is there a way to validate the final output? If handcrafted rules are out of the question, could we craft a prompt for verification? (And follow the above rules for the verifier model as well.)

- When it comes to information extraction, we don’t tolerate outputs not found in the original context.

- We follow this up with verification steps that catch errors as well as hallucinations.

- Anything we do beyond that is about risk assessment and risk minimisation.

- Break complex problems down into smaller steps and identify if an LLM is even needed.

- For complex problems use a systematic approach to identify verifiable tasks:

  - Strong grounding forces LLMs to quote verbatim from trusted sources. Strong grounding is always preferred.

  - Weak grounding forces LLMs to reference trusted sources but allows synthesis and reasoning.

  - Where a problem can be broken down into smaller tasks, use strong grounding on tasks where possible.
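The two tool-related considerations above (constraining a tool call to a specific structure, and validating it against the context before anything reaches a downstream system) can be sketched together. This is an illustration under stated assumptions rather than a description of any particular framework: the argument names, the allowed payment methods, and the `parse_tool_call` function are all hypothetical, and the model is assumed to return its tool arguments as a JSON string.

```python
import json
from decimal import Decimal, InvalidOperation

ALLOWED_METHODS = {"bank_transfer", "card", "cheque"}  # hypothetical allowed values

def parse_tool_call(raw_json, context):
    """Validate a model's tool call; raise ValueError instead of passing bad data on."""
    try:
        args = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    # Structure: exactly the expected arguments, nothing more and nothing less.
    if not isinstance(args, dict) or set(args) != {"amount", "payment_method"}:
        raise ValueError("unexpected or missing arguments")
    if args["payment_method"] not in ALLOWED_METHODS:
        raise ValueError("payment_method outside the allowed set")
    # Grounding: the amount must be quoted verbatim from the context.
    if not isinstance(args["amount"], str) or args["amount"] not in context:
        raise ValueError("amount is not quoted from the context")
    try:
        amount = Decimal(args["amount"].replace("$", "").replace(",", ""))
    except InvalidOperation as exc:
        raise ValueError("amount is not a number") from exc
    return {"amount": amount, "payment_method": args["payment_method"]}

# Hypothetical context and model output.
context = "Total due: $1,000.00 payable by bank transfer"
call = '{"amount": "$1,000.00", "payment_method": "bank_transfer"}'
print(parse_tool_call(call, context))
```

Only the validated, typed result would be handed to the downstream system; a call that quotes `$100,000.00` against this context raises `ValueError` and never gets that far.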