the groundwork for foundation models, which allow us to take pretrained models off the shelf and apply them to a variety of tasks. However, there is a common artifact found in transformer models that can have detrimental impacts in specific tasks and scenarios. Not understanding these pitfalls could cause your project to substantially underperform or fail. For example, DINOv2's GitHub page has models pretrained with and without registers. A table of metrics suggests that registers, which were introduced to fix this artifact, do not help the model in a meaningful way. And why add complexity if there is no increase in accuracy?

However, the metrics shown on DINOv2's page are only for ImageNet classification, which is known not to be impacted by these artifacts. If you use the DINOv2 ViT model without registers for object discovery (as with LOST), your performance would likely be substantially worse.

Using pretrained ViT models without understanding when high-norm artifacts might impact your project could cause your project to fail.

Since these artifacts were identified, the research community has developed several methods to address them. The latest solutions require little to no retraining and introduce zero additional test-time latency. These phenomena are not unique to ViTs; they also occur in LLMs. In fact, one of the NeurIPS 2025 papers reviewed here proposes a general solution to these "attention sink" artifacts that modifies the self-attention transformer architecture. This modified architecture is shown to be beneficial in a multitude of ways and is already being incorporated into the latest Qwen model, Qwen3-Next.

This article provides a comprehensive guide to:

- Transformer registers.

- The high-norm artifacts (or attention sinks) they address.

- The latest research-driven solutions for mitigating these artifacts.

1. Discovery of the Artifacts in ViTs with DINOv2

While ViTs have been pivotal in ushering in the era of foundation models for computer vision, they suffer from a persistent anomaly: the emergence of high-norm spikes [1]. These artifacts appear across both supervised and self-supervised training regimes, with the original DINO being a notable exception. Figure 1 demonstrates this on ViT-Base models trained with different algorithms, spanning self-supervised (DINO/DINOv2, MAE), weakly supervised (CLIP), and supervised (DeiT-III) training.

These artifacts exhibit four key characteristics:

- High Norm: The L2 norm of artifact tokens can be 2–10 times larger than the average token norm, depending on the training method.

- Sparsity: They constitute a small fraction of all tokens (approx. 2%) and form a distinct mode in the norm distribution (e.g., Figs. 3 and 4 in Darcet et al. 2024 [1]).

- Patch Localization: They predominantly appear in low-information background regions or image corners.

- Layer Localization: They appear primarily in the middle-to-late layers of ViTs.
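The traits above suggest a simple diagnostic. The sketch below flags tokens whose L2 norm is far above the typical norm within the same image. It assumes you have already extracted patch-token features from a middle-to-late layer as a NumPy array; the function name and the `ratio=4.0` threshold are hypothetical starting points, not values from the papers. The median is used rather than the mean so that the outliers themselves do not inflate the reference norm.

```python
import numpy as np


def high_norm_mask(tokens: np.ndarray, ratio: float = 4.0) -> np.ndarray:
    """Flag tokens whose L2 norm exceeds `ratio` times the per-image median norm.

    tokens: [B, N, C] patch-token features from a single layer.
    Returns a boolean mask of shape [B, N].
    """
    norms = np.linalg.norm(tokens, axis=-1)              # [B, N]
    median = np.median(norms, axis=-1, keepdims=True)    # [B, 1], robust to a few outliers
    return norms > ratio * median


# Synthetic example: 256 ordinary tokens, two of which are inflated 8x
rng = np.random.default_rng(0)
feats = rng.normal(size=(1, 256, 64))
feats[0, [10, 200]] *= 8.0
mask = high_norm_mask(feats)
print(np.flatnonzero(mask[0]))   # indices of the flagged tokens
print(mask.mean())               # fraction of tokens flagged
```

On real features, plotting a histogram of `norms` first is a sensible way to pick the threshold, since the artifacts form a separate mode.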

The Impact of High-Norm Artifacts

The impact on accuracy varies by task. We measure this impact by observing how much performance improves after applying the fixes discussed in later sections. A summary of results from Jiang et al. (2025) [2] is provided below:

| Impact | Task | Mitigation Outcome |
|---|---|---|
| 😐 | ImageNet Classification | No significant impact |
| 😃 | Unsupervised Object Discovery (LOST) | Substantial improvement (20%) on DINOv2 ViT-L/14 |
| 😊 | Zero-shot Segmentation | +5 mIoU for OpenCLIP ViT-B/14, but not DINOv2 |
| 😊 | Depth Estimation | Marginal improvement with test-time registers (lower RMSE) |

The Cause: Two Hypotheses

Why do these models generate high-norm artifacts? Two main, non-contradictory hypotheses exist:

- Global Processing: Large models learn to identify redundant tokens and repurpose them as “storage slots” to process and retrieve global information.

- The Mechanistic Hypothesis: The artifacts are a byproduct of the softmax function, which forces attention weights to sum to 1.

In softmax-based attention, the weights for a given query must sum to 1:

$$\sum_{j} \text{Attention}(Q_i, K_j) = 1$$

Even when a query token $i$ has no meaningful relationship with any key token $j$, the softmax operation forces it to distribute its “attention mass”. This mass often gets dumped into specific low-information background tokens, which then become high-norm sinks.
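A toy calculation makes this concrete. The snippet below applies a row-wise softmax to made-up scores for one query against five keys; the numbers are illustrative and not taken from any model. When every key is equally irrelevant, softmax still hands out a full unit of attention, and if one low-information key happens to score slightly higher, it absorbs almost all of that mass.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row max avoids overflow in exp()."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


# One query scored against five keys, none of which is relevant
all_irrelevant = softmax(np.array([-5.0, -5.0, -5.0, -5.0, -5.0]))
# Same, except key 4 (a stand-in for a background "sink" patch) scores a bit higher
with_sink = softmax(np.array([-5.0, -5.0, -5.0, -5.0, 0.0]))

print(all_irrelevant)        # uniform: every key gets 0.2
print(with_sink.round(3))    # key 4 receives roughly 97% of the mass
print(with_sink.sum())       # still sums to 1 (up to floating-point error)
```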

These weights are computed separately for each attention head. To truly understand the attention sink issue, we will step through the attention code. The self-attention diagrams are also reproduced in Figure 2 for reference.

You can see an example of the code in Facebook Research's DeiT GitHub repo:

```python
import torch
import torch.nn as nn


class Attention(nn.Module):
    # Minimal constructor, reconstructed so the module runs; the repo's
    # version defines these attributes but may differ in other details
    def __init__(self, dim, num_heads=8, qkv_bias=False, attn_drop=0.0, proj_drop=0.0):
        super().__init__()
        self.num_heads = num_heads
        self.scale = (dim // num_heads) ** -0.5
        self.qkv = nn.Linear(dim, dim * 3, bias=qkv_bias)
        self.attn_drop = nn.Dropout(attn_drop)
        self.proj = nn.Linear(dim, dim)
        self.proj_drop = nn.Dropout(proj_drop)

    def forward(self, x):
        # B: batch size
        # N: sequence length (# tokens)
        # C: embedding dimension (= head_dim * num_heads)
        B, N, C = x.shape

        # self.qkv is a Linear layer that triples the feature dimension,
        # computing Q=XW_Q, K=XW_K, V=XW_V in a single matmul
        qkv = self.qkv(x).reshape(
            B, N,
            3,  # Q, K, and V; this dimension gets permuted to index 0
            self.num_heads,
            C // self.num_heads).permute(2, 0, 3, 1, 4)  # [3, B, heads, N, head_dim]

        q, k, v = qkv[0], qkv[1], qkv[2]
        q = q * self.scale  # scale by 1/sqrt(head_dim) so softmax does not saturate
        attn = q @ k.transpose(-2, -1)  # attn: [B, heads, N, N]
        attn = attn.softmax(dim=-1)  # each row sums to 1: where the artifact originates
        attn = self.attn_drop(attn)  # optional dropout (training augmentation)

        # Weighted sum of values, then concatenate the heads back into C channels
        x = (attn @ v).transpose(1, 2).reshape(B, N, C)
        x = self.proj(x)  # output projection (another linear layer)
        x = self.proj_drop(x)  # optional dropout (training augmentation)
        return x
```

In ViTs, which lack explicit “global” tokens (other than the [CLS] token), the model repurposes background patches as “attention sinks” or “trash cans”. These tokens aggregate global information, their norms swell, and their original local semantic meaning is lost.
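To see which tokens act as sinks, you can inspect the `attn` tensor right after the softmax in the code above (for example, by returning it alongside `x`; that change is hypothetical and not part of the DeiT repo). The sketch below averages, over heads and queries, how much attention each key token receives and flags keys that take far more than the uniform share 1/N. The helper names and the `factor=5.0` threshold are illustrative assumptions, and in a real ViT the [CLS] token will often be flagged as well.

```python
import numpy as np


def attention_received(attn: np.ndarray) -> np.ndarray:
    """Mean attention each key token receives.

    attn: [B, H, N, N] post-softmax weights (rows = queries, columns = keys).
    Returns [B, N]; because every row sums to 1, each image's values also sum to 1.
    """
    return attn.mean(axis=(1, 2))


def find_sinks(attn: np.ndarray, factor: float = 5.0) -> np.ndarray:
    """Boolean [B, N] mask of keys receiving more than `factor` x the uniform share 1/N."""
    n_keys = attn.shape[-1]
    return attention_received(attn) > factor / n_keys


# Synthetic attention: every query in both heads sends 90% of its mass to token 3
N = 10
attn = np.full((1, 2, N, N), 0.1 / (N - 1))
attn[..., 3] = 0.9
print(attention_received(attn).round(3))
print(np.flatnonzero(find_sinks(attn)[0]))   # -> [3]
```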

2. The Register Solution: Vision Transformers Need Registers (2024)

The team behind DINOv2 discovered these high-norm artifacts and proposed adding “register” tokens (Darcet et al. 2024 [1]). Registers are learned tokens, like the [CLS] token but without positional embeddings, and their corresponding output tokens are never used. That is all they really are: extra tokens whose outputs do not feed into the training objective. The major downside of this method is that it requires retraining the model. This limitation spurred the search for post-hoc solutions that could fix existing models.
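A sketch of the token bookkeeping, assuming NumPy arrays in place of learned parameters: registers are concatenated into the sequence without positional embeddings, pass through the encoder like any other token, and are dropped at the output. Placing them right after [CLS] mirrors our understanding of the public DINOv2 code, but treat the exact position as an assumption; `add_registers` and `drop_registers` are hypothetical names.

```python
import numpy as np


def add_registers(patch_tokens, cls_token, pos_embed, register_tokens):
    """Build the encoder input sequence [CLS, registers..., patches...].

    patch_tokens:    [B, N, C] patch embeddings
    cls_token:       [1, C] learned [CLS] token
    pos_embed:       [N + 1, C] positional embeddings for [CLS] + patches
    register_tokens: [R, C] learned registers (deliberately no positional embedding)
    """
    B, _, C = patch_tokens.shape
    cls = np.broadcast_to(cls_token, (B, 1, C))
    x = np.concatenate([cls, patch_tokens], axis=1) + pos_embed   # [B, N + 1, C]
    regs = np.broadcast_to(register_tokens, (B,) + register_tokens.shape)
    return np.concatenate([x[:, :1], regs, x[:, 1:]], axis=1)      # [B, 1 + R + N, C]


def drop_registers(outputs, num_registers):
    """Return the [CLS] output and the patch outputs; register outputs are discarded."""
    return outputs[:, 0], outputs[:, 1 + num_registers:]


rng = np.random.default_rng(0)
B, N, C, R = 2, 16, 8, 4
registers = rng.normal(size=(R, C))   # in a real model these are trained parameters
seq = add_registers(rng.normal(size=(B, N, C)), rng.normal(size=(1, C)),
                    rng.normal(size=(N + 1, C)), registers)
# ... a real model would run the transformer blocks on `seq` here ...
cls_out, patch_out = drop_registers(seq, R)
print(seq.shape, cls_out.shape, patch_out.shape)
```

Because the registers carry no positional embedding and their outputs are thrown away, the only thing they can be useful for is giving attention somewhere to store global information other than the patch tokens.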

3. The Denoising Solution: Denoising Vision Transformers (2024)

Yang et al. (2024) [4] proposed Denoising Vision Transformers (DVT) to clean output tokens post hoc. While DVT is synergistic with registers, it introduces a major bottleneck, adding roughly 100 seconds of latency per 518×518 image, which makes it impractical for real-time applications.

Contributions:

- DVT improves performance on a variety of tasks, and the authors showed that it is synergistic with adding registers.

- The paper adds to our understanding by showing that positional embeddings are an underlying cause of the high-norm artifacts.

However:

- It adds substantial latency per image (around 100 seconds for 518×518 images).

4. The Distillation Solution: Self-Distilled Registers (2025)

The approach by Chen et al. (2025) [5] uses a teacher-student paradigm to train a small subset of weights along with the register tokens. The high-norm artifacts are removed from the teacher signal by augmenting the images with random offsets and flips, which allows the artifacts to be averaged out. The teacher is the original ViT, kept frozen. The student is also initialized from the same ViT; however, additional learnable register tokens are added, and a small subset of the weights is fine-tuned.

Contributions:

- Orders of magnitude less compute than training with registers from scratch.

- No additional test-time latency.
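The averaging of the teacher signal can be illustrated with a toy. Below, a hypothetical stand-in "teacher" passes its input grid through unchanged but always adds a large artifact at the same two grid cells, mimicking position-locked high-norm tokens. Shifting and flipping the input, running the teacher, undoing the augmentation, and averaging keeps the content aligned while scattering the artifacts. This is only an illustration under those assumptions: it uses circular shifts (`np.roll`) over every offset on the grid so the result is exact, whereas the paper describes random offsets applied to a real ViT whose features are not an identity map.

```python
import numpy as np

H, W, C = 8, 8, 4
ARTIFACT_CELLS = [(0, 0), (0, 7)]  # hypothetical cells where the toy teacher misbehaves


def toy_teacher(grid: np.ndarray) -> np.ndarray:
    """Frozen stand-in for a ViT: identity on content plus position-locked artifacts."""
    feats = grid.copy()
    for r, c in ARTIFACT_CELLS:
        feats[r, c] += 20.0
    return feats


def augmented_teacher(grid: np.ndarray, shifts) -> np.ndarray:
    """Average teacher outputs over shifted/flipped views after undoing each augmentation."""
    outputs = []
    for dy, dx in shifts:
        for flip in (False, True):
            view = np.roll(grid, (dy, dx), axis=(0, 1))
            if flip:
                view = view[:, ::-1]                                  # horizontal flip
            feats = toy_teacher(view)
            if flip:
                feats = feats[:, ::-1]                                # undo the flip
            outputs.append(np.roll(feats, (-dy, -dx), axis=(0, 1)))  # undo the shift
    return np.mean(outputs, axis=0)


rng = np.random.default_rng(0)
img = rng.normal(size=(H, W, C))
shifts = [(dy, dx) for dy in range(H) for dx in range(W)]
clean = augmented_teacher(img, shifts)
print(np.abs(toy_teacher(img) - img).max())  # about 20: artifacts in a single pass
print(np.abs(clean - img).max())             # small residual spread evenly over the grid
```

With all 64 offsets and both flip settings (128 views), every cell receives each of the two artifacts exactly once per flip setting, so the artifacts dissolve into a uniform offset of 20 × 4 / 128 = 0.625. In the method as described, the student (with its added registers) would then be fine-tuned to match such averaged teacher features.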

5. The Mechanistic Solution: Test-Time Registers (2025)

Jiang et al. (2025) [2] introduce a way to perform “surgery” on trained models to add registers without retraining. They discovered that the artifacts are generated by a sparse set of specific “register neurons” within the MLP layers (roughly 0.02% of all neurons). By rerouting the values of these internal MLP neurons to new register tokens, they matched the performance of fully trained register models at zero retraining cost.
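A minimal sketch of the rerouting step, under our simplified reading of the paper: an extra register token is appended, and for each register neuron that token takes the largest activation the neuron produced over the patch tokens, while the patch tokens' activations for that neuron are set to zero. It assumes the register-neuron indices have already been identified and operates on one image's MLP hidden activations; the function name and shapes are illustrative, and the paper's exact procedure (which layers, how neurons are selected) may differ.

```python
import numpy as np


def reroute_register_neurons(hidden: np.ndarray, register_neurons) -> np.ndarray:
    """Move register-neuron activity from patch tokens onto an appended register token.

    hidden:           [N, D] MLP hidden activations for N tokens of one image
    register_neurons: indices of previously identified register neurons
    Returns [N + 1, D]; the last row is the test-time register token
    (zero-filled here for simplicity, apart from the rerouted neurons).
    """
    idx = np.asarray(register_neurons)
    n_tokens, dim = hidden.shape
    out = np.concatenate([hidden, np.zeros((1, dim))], axis=0)
    out[n_tokens, idx] = hidden[:, idx].max(axis=0)  # register takes the peak activation
    out[:n_tokens, idx] = 0.0                        # patch tokens no longer carry it
    return out


rng = np.random.default_rng(0)
hidden = 0.1 * rng.normal(size=(16, 32))
hidden[5, 3] = 50.0   # neuron 3 spikes on patch 5, creating an outlier
out = reroute_register_neurons(hidden, [3])
print(np.linalg.norm(hidden[5]), np.linalg.norm(out[5]))  # outlier removed from patch 5
print(out[-1, 3])     # 50.0, now stored in the register token
```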

They find the following properties of the artifact-causing neurons (or “register neurons”):

- Sparsity: Roughly 0.02% of neurons are responsible for the vast majority of the artifact energy.

- Causality: The position of the outliers can be moved by modifying the activation pattern of the register neurons.

Using linear probes, they show that these register neurons aggregate global information; i.e., they check whether the register neurons can be used for classification on ImageNet and CIFAR-10/100. The final outputs of the registers are discarded, but inside the network the register tokens remain available, so later layers can read that global information. The authors also show that setting the register neurons to zero substantially reduces the network's performance, from 70.2% to 55.6%, suggesting that the network uses the artifacts to store information and that they are not merely a byproduct of softmax.

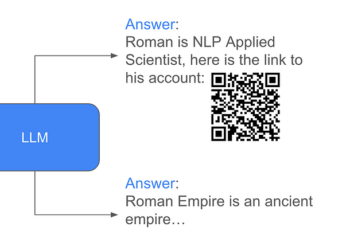

6. Relationship between ViT High-Norm Artifacts and LLM Attention Sinks

A phenomenon similar to the ViT high-norm artifacts, the attention sink, was found in LLMs in the StreamingLLM paper (Xiao et al., ICLR 2024 [6]). While extending LLMs to streaming, infinite-length sequences, the authors noticed that accuracy dropped substantially once the starting token no longer fit into the sliding window. These initial tokens, they discovered, tend to accumulate over half of the attention score. The accuracy was recovered if they kept the $K$ and $V$ values of the initial 1–4 tokens around while sliding the window over the remaining tokens. They propose that the initial tokens are used as attention sinks because of the sequential nature of autoregressive language modeling: the initial tokens are visible to all later tokens, whereas later tokens are only visible to the tokens that follow them. This contrasts with ViTs, where every patch token is visible to every other patch token. In LLMs, unlike in ViTs, attention sinks tended not to be seen as a problem.
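The cache policy described above, keeping the first few "sink" tokens plus a sliding window of recent tokens, can be sketched as an index-selection rule. The function name and default sizes are illustrative assumptions; a real system also has to manage positional information for the cached tokens, which this sketch omits.

```python
import numpy as np


def streaming_kv_indices(seq_len: int, num_sinks: int = 4, window: int = 8) -> list:
    """Positions whose K/V entries stay cached: the first `num_sinks` plus the last `window`."""
    if seq_len <= num_sinks + window:
        return list(range(seq_len))   # everything still fits in the cache
    return list(range(num_sinks)) + list(range(seq_len - window, seq_len))


# Apply the policy to a toy K cache of shape [seq_len, head_dim]
keys = np.arange(20 * 2, dtype=float).reshape(20, 2)
kept = streaming_kv_indices(len(keys))
trimmed_keys = keys[kept]
print(kept)                 # [0, 1, 2, 3, 12, 13, 14, 15, 16, 17, 18, 19]
print(trimmed_keys.shape)   # (12, 2)
```

Dropping indices 0–3 from `kept` would give a plain sliding window, which is the configuration whose accuracy collapse motivated keeping the sinks.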

Attention sinks in LLMs were thought to serve as anchors without aggregating global information, unlike in ViTs. However, even more recent research by Queipo-de-Llano et al. (2025) [7], “Attentional Sinks and Compression Valleys”, finds that these attention sinks do indeed contain global information. This suggests that the general solution discussed in the next section might also apply to ViTs, even though it had not been tested on them at the time of this writing.

7. Removing the Artifacts with Sigmoidal Gating: Gated Attention (2025)

One way to address the symptoms of softmax might be to replace it with a sigmoid. Gu et al. [8] showed in 2025 that replacing softmax with an (unnormalized) sigmoid can indeed eliminate the attention sink on the first token, as shown in Figure 4. While the initial results show some potential improvement in validation loss, it remains unclear what downstream impact this will have on LLM performance, and the work lacks the extensive experiments of the next paper.
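The difference is easy to see numerically. With softmax, a query's weights must sum to 1; with an elementwise sigmoid, each weight is independent, so a query with nothing relevant to attend to can assign near-zero weight everywhere instead of dumping its mass on a sink. The sketch below uses made-up scores and a plain, unnormalized sigmoid as a swapped-in normalizer; the exact formulation studied by Gu et al. may include details it omits.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def attention(q, k, v, normalizer=softmax):
    """Single-head scaled dot-product attention with a swappable normalizer.

    q: [N, d], k: [M, d], v: [M, d_v] -> (weights [N, M], output [N, d_v])
    """
    scores = q @ k.T / np.sqrt(q.shape[-1])
    weights = normalizer(scores)
    return weights, weights @ v


# Scores for one query against five keys, none of them relevant
scores = np.full((1, 5), -5.0)
print(softmax(scores).sum())   # sums to 1: softmax must distribute a full unit of attention
print(sigmoid(scores).sum())   # ~0.03: sigmoid can attend to almost nothing

rng = np.random.default_rng(0)
q, k, v = rng.normal(size=(3, 4, 8))
w_soft, _ = attention(q, k, v, softmax)
w_sig, _ = attention(q, k, v, sigmoid)
print(w_soft.sum(axis=-1))     # every row sums to 1
print(w_sig.sum(axis=-1))      # rows are unconstrained
```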

Qiu et al. took a different approach in their NeurIPS 2025 Gated Attention paper [9]: they left the softmax attention untouched but added gating after the outputs of all the heads were concatenated, as shown in Figure 5. They find that adding gating does remove the high-norm artifacts, even though the softmax attention inside the standard scaled dot-product attention (SDPA) would still create such artifacts before the gate. The benefits of Gated Attention go beyond fixing the attention sink artifact, offering:

- Improved training stability

- Elimination of training loss spikes

- Support for larger learning rates and batch sizes
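Below is a sketch of where the gate sits, based on our reading of Qiu et al.: multi-head softmax attention is computed as usual, and its concatenated head outputs are multiplied elementwise by a sigmoid gate computed from the same layer input, before the output projection. The weight matrices are random placeholders, biases and normalization are omitted, and the parameter names are illustrative. One intuition (ours, not a claim from the paper) is that because the gate can push a head's output toward zero, the head no longer needs a sink token to park unwanted attention mass.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def gated_attention(x, w_qkv, w_gate, w_out, num_heads):
    """Multi-head softmax attention followed by an elementwise sigmoid gate.

    x: [N, C]; w_qkv: [C, 3C]; w_gate: [C, C]; w_out: [C, C]
    """
    n_tokens, dim = x.shape
    head_dim = dim // num_heads
    q, k, v = np.split(x @ w_qkv, 3, axis=-1)                     # each [N, C]
    # [N, C] -> [heads, N, head_dim]
    q, k, v = (t.reshape(n_tokens, num_heads, head_dim).transpose(1, 0, 2) for t in (q, k, v))
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(head_dim))   # [heads, N, N], rows sum to 1
    heads = (attn @ v).transpose(1, 0, 2).reshape(n_tokens, dim)   # concatenate heads -> [N, C]
    gate = sigmoid(x @ w_gate)                                     # [N, C], values in (0, 1)
    return (heads * gate) @ w_out                                  # gate, then output projection


rng = np.random.default_rng(0)
N, C, H = 6, 16, 4
x = rng.normal(size=(N, C))
w_qkv, w_gate, w_out = (rng.normal(size=s) / np.sqrt(C) for s in [(C, 3 * C), (C, C), (C, C)])
y = gated_attention(x, w_qkv, w_gate, w_out, num_heads=H)
print(y.shape)  # (6, 16)
```

Note that the softmax inside the function is unchanged, which matches the observation above that artifacts can still form inside SDPA; the gate acts only on what leaves it.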

They use this Gated Attention in their new Qwen3-Next model, although they also replace some of the self-attention layers with Gated DeltaNet. This could be a sign that we are moving away from single elegant solutions, like repeated self-attention modules, and toward a collection of hacks or heuristics that yields the best performance. In many ways, this could resemble the brain, with its wide variety of neuron types, neurotransmitters, and neuroreceptors. Larger architectural changes could disrupt this equilibrium of progress and require much of the collection of heuristics to be re-tuned.

8. Conclusion

Since the distant past of 2024, when the high-norm artifacts of ViTs and the attention sinks of LLMs were discovered, the research community has found many solutions and made even more progress in understanding these artifacts. The two are more closely related than initially thought. In both cases, softmax causes attention to increase substantially for some tokens, which are used (implicitly or explicitly) as registers that store global information. Removing these registers can hurt performance once they have been learned. Test-time registers move the high-norm artifacts (or implicit registers) into explicit registers, allowing the patch tokens to be cleansed of the artifacts. You can also prevent the registers from forming in the first place, either by replacing softmax with a sigmoid or by using a sigmoid as a gating function after the softmax (the latter still allows high-norm artifacts inside the SDPA, but they are removed before they form “tokens”).

In many cases, these artifacts do not cause any issues, for example in global tasks such as ViT classification and most LLM tasks. They do negatively impact dense ViT tasks, especially when a single token or a few tokens can have an outsized effect, as in object detection. The fixes at least do not make performance worse, although the fixes for LLMs, such as sigmoid attention and gated attention, have not been used as widely, and sigmoid attention in particular might be more difficult to train. Embracing the artifact by keeping the KV values of the initial tokens appears to be the current best mature solution for streaming LLMs [6].

Comparison of Mitigation Strategies

The best mitigation strategy depends on whether you already have a trained model or plan to train from scratch.

| Method | Training Cost | Mechanism | Added Latency | Applied To |
|---|---|---|---|---|
| Trained Registers [1] | High (Full) | Add Learned Tokens | None | ViTs |
| Denoising ViTs [4] | Medium | Signal Decomposition | Very High | ViTs |
| Self-Distilled Registers [5] | Low (Fine-tune) | Distillation | None | ViTs |
| Test-Time Registers [2] | Zero | Neuron Shifting | None | ViTs |
| StreamingLLM [6] | Zero | KV Cache Preservation | None | LLMs |
| Sigmoid or ELU+1 Attention [8] | High (Full) | Replace Softmax | None | LLMs |
| Gated Attention [9] | High (Full) | Add Sigmoid Gating | Minimal | LLMs |

Bibliography

1. Darcet, T., et al. “Vision Transformers Need Registers.” (2024).

2. Jiang, N., et al. “Vision Transformers Don’t Need Trained Registers.” (2025).

3. Vaswani, A., et al. “Attention Is All You Need.” (2017).

4. Yang, et al. “Denoising Vision Transformers.” (2024).

5. Chen, Y., et al. “Vision Transformers with Self-Distilled Registers.” NeurIPS (2025).

6. Xiao, et al. “Efficient Streaming Language Models with Attention Sinks.” ICLR (2024).

7. Queipo-de-Llano, et al. “Attentional Sinks and Compression Valleys.” (2025).

8. Gu, et al. “When Attention Sink Emerges in Language Models: An Empirical View.” ICLR (2025).

9. Qiu, Z., et al. “Gated Attention for Large Language Models.” NeurIPS (2025).