I first came across the idea of federated learning (FL) through a comic published by Google in 2019. It was a brilliant piece and did a great job of explaining how products can improve without sending user data to the cloud. Lately, I have been wanting to understand the technical side of this field in more detail. Training data has become such an important commodity because it is essential for building good models, yet a lot of it goes unused because it is fragmented, unstructured or locked inside silos.

As I started exploring this field, I found the Flower framework to be the most straightforward and beginner-friendly way to get started in FL. It is open source, the documentation is clear, and the community around it is very active and helpful. It is one of the reasons for my renewed interest in this field.

This article is the first part of a series in which I explore federated learning in more depth, covering what it is, how it is implemented, the open problems it faces, and why it matters in privacy-sensitive settings. In the next instalments, I will go deeper into practical implementation with the Flower framework, discuss privacy in federated learning and examine how these ideas extend to more advanced use cases.

When Centralised Machine Learning Is Not Ideal

We know AI models depend on large amounts of data, yet much of the most useful data is sensitive, distributed, and hard to access. Think of data inside hospitals, phones, cars, sensors, and other edge systems. Privacy concerns, local regulations, limited storage, and network limits make moving this data to a central place very difficult or even impossible. As a result, large amounts of valuable data remain unused. In healthcare, this problem is especially visible. Hospitals generate tens of petabytes of data every year, yet studies estimate that up to 97% of this data goes unused.

Traditional machine learning assumes that all training data can be collected in one place, usually on a centralized server or data center. This works when data can be freely moved, but it breaks down when data is private or protected. In practice, centralised training also depends on stable connectivity, enough bandwidth, and low latency, which are difficult to guarantee in distributed or edge environments.

In such cases, two common choices appear. One option is to not use the data at all, which means valuable information stays locked inside silos.

The other option is to let each local entity train a model on its own data and share only what the model learns, while the raw data never leaves its original location. This second option forms the basis of federated learning, which allows models to learn from distributed data without moving it. A well-known example is Google Gboard on Android, where features like next-word prediction and Smart Compose run across hundreds of millions of devices.

Federated Learning: Moving the Model to the Data

Federated learning can be thought of as a collaborative machine learning setup where training happens without collecting data in one central place. Before looking at how it works under the hood, let’s see a few real-world examples that show why this approach matters in high-risk settings, spanning domains from healthcare to security-sensitive environments.

Healthcare

In healthcare, federated learning enabled early COVID screening through Curial AI, a system trained across multiple NHS hospitals using routine vital signs and blood tests. Because patient data could not be shared across hospitals, training was done locally at each site and only model updates were exchanged. The resulting global model generalized better than models trained at individual hospitals, especially when evaluated on unseen sites.

Medical Imaging

Federated learning is also being explored in medical imaging. Researchers at UCL and Moorfields Eye Hospital are using it to fine-tune large vision foundation models on sensitive eye scans that cannot be centralized.

Defence

Beyond healthcare, federated learning is also being applied in security-sensitive domains such as defence and aviation. Here, models are trained on distributed physiological and operational data that must remain local.

Different types of Federated Learning

At a high level, federated learning can be grouped into a few common types based on who the clients are and how the data is split.

• Cross-Device vs Cross-Silo Federated Learning

Cross-device federated learning involves many clients, potentially millions, such as personal devices or phones, each with a small amount of local data and unreliable connectivity. Only a small fraction of devices participate in any given round. Google Gboard is a typical example of this setup.

Cross-silo federated learning, on the other hand, involves a much smaller number of clients, usually organizations like hospitals or banks. Each client holds a large dataset and has stable compute and connectivity. Most real-world enterprise and healthcare use cases look like cross-silo federated learning.

• Horizontal vs Vertical Federated Learning

This axis describes how data is split across clients. In horizontal federated learning, all clients share the same feature space, but each holds different samples. For example, multiple hospitals may record the same medical variables, but for different patients. This is the most common form of federated learning.

Vertical federated learning is used when clients share the same set of entities but have different features. For example, a hospital and an insurance provider may both have data about the same individuals, but with different attributes. Training in this case requires secure coordination because the feature spaces differ, and this setup is less common than horizontal federated learning.

These categories are not mutually exclusive. A real system is often described using both axes, for example, a cross-silo, horizontal federated learning setup.
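To make the horizontal and vertical splits concrete, here is a minimal sketch using a toy NumPy table. The table, the variable names and the split points are all hypothetical and chosen only for illustration; rows stand for entities (for example patients) and columns for features.

```python
import numpy as np

# Hypothetical toy table: 6 entities (rows) x 4 features (columns).
data = np.arange(24).reshape(6, 4)

# Horizontal split: same columns (features), different rows (samples).
hospital_a, hospital_b = data[:3, :], data[3:, :]

# Vertical split: same rows (entities), different columns (features).
hospital_view, insurer_view = data[:, :2], data[:, 2:]

print(hospital_a.shape, hospital_b.shape)       # (3, 4) (3, 4)
print(hospital_view.shape, insurer_view.shape)  # (6, 2) (6, 2)
```

In the horizontal case each party could train the same model architecture on its own rows, whereas in the vertical case no single party holds a complete feature vector, which is why extra coordination is needed.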

How Federated Learning works

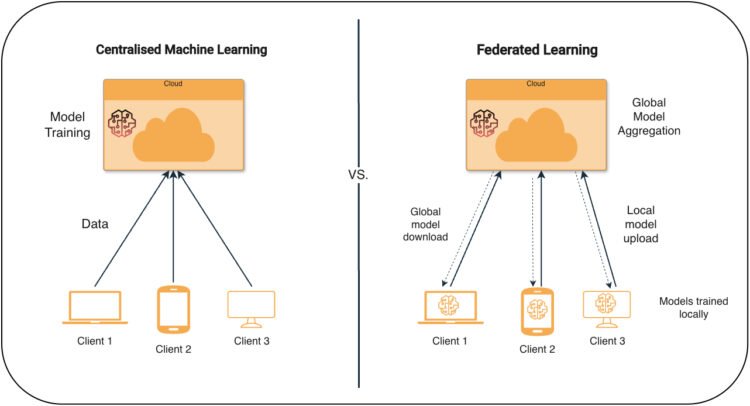

Federated learning follows a simple, repeated process coordinated by a central server and executed by multiple clients that hold data locally, as shown in the diagram.

Training in federated learning proceeds through repeated rounds. In each round, the server selects a small random subset of clients, sends them the current model weights, and waits for updates. Each client trains the model locally using stochastic gradient descent, usually for several local epochs on its own batches, and returns only the updated weights. At a high level, it follows these five steps:

1. Initialisation

A global model is created on the server, which acts as the coordinator. The model may be randomly initialized or start from a pretrained state.

2. Model distribution

In each round, the server selects a set of clients (based on random sampling or a predefined strategy) to take part in training and sends them the current global model weights. These clients can be phones, IoT devices or individual hospitals.

3. Local training

Each selected client then trains the model locally using its own data. The data never leaves the client, and all computation happens on the device or inside an organization such as a hospital or a bank.

4. Model update communication

After local training, clients send only the updated model parameters (weights or gradients) back to the server, while raw data is never shared at any point.

5. Aggregation

The server aggregates the client updates to produce a new global model. While Federated Averaging (FedAvg) is a common approach for aggregation, other strategies are also used. The updated model is then sent back to clients, and the process repeats until convergence.

Federated learning is an iterative process, and each pass through this loop is called a round. Training a federated model usually requires many rounds, sometimes hundreds, depending on factors such as model size, data distribution and the problem being solved.
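The five steps can be sketched as a small simulation. This is only an illustration under stated assumptions: the "model" is a three-parameter linear regression, the client datasets are synthetic, local training uses full-batch gradient descent instead of mini-batch SGD to keep the code short, and the helper names `make_client` and `local_train` are hypothetical rather than part of any framework.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_client(n_samples, true_w):
    """Hypothetical client dataset for a noisy linear model y = X @ true_w."""
    X = rng.normal(size=(n_samples, true_w.size))
    y = X @ true_w + 0.01 * rng.normal(size=n_samples)
    return X, y

def local_train(w, X, y, epochs=5, lr=0.1):
    """Step 3: a few epochs of full-batch gradient descent on local data."""
    w = w.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w

true_w = np.array([2.0, -1.0, 0.5])
clients = [make_client(n, true_w) for n in (40, 80, 120, 200, 60, 100)]

w_global = np.zeros(3)  # Step 1: initialisation on the server
for round_idx in range(20):
    # Step 2: sample a subset of clients and send them the current weights
    selected = rng.choice(len(clients), size=3, replace=False)
    updates, sizes = [], []
    for k in selected:
        X, y = clients[k]
        updates.append(local_train(w_global, X, y))  # Step 3: local training
        sizes.append(len(y))  # Step 4: only weights and sample counts are returned
    # Step 5: sample-weighted average of the returned client models
    w_global = np.average(updates, axis=0, weights=sizes)

print("Global model after 20 rounds:", w_global)
```

Because every synthetic client here draws from the same underlying model, the global weights settle close to `true_w` within a few rounds; real federations rarely behave this neatly.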

Mathematical Intuition behind Federated Averaging

The workflow described above can also be written more formally. The figure below shows the original Federated Averaging (FedAvg) algorithm from Google’s seminal paper. It demonstrated that federated learning can work in practice, and its formulation became the reference point for most federated learning systems today.

The original Federated Averaging algorithm, showing the server–client training loop and weighted aggregation of local models.

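At the core of Federated Averaging is the aggregation step, where the server updates the global model by taking a weighted average of the locally trained client models. This can be written as:

$$w_{t+1} = \sum_{k \in S_t} \frac{n_k}{m_t} \, w_{t+1}^{k}$$

Here $S_t$ is the set of clients selected in round $t$, $w_{t+1}^{k}$ is the model returned by client $k$, $n_k$ is the number of samples at client $k$, and $m_t = \sum_{k \in S_t} n_k$ is the total number of samples across the selected clients.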

This equation makes it clear how each client contributes to the global model. Clients with more local data have a larger influence, while those with fewer samples contribute proportionally less. In practice, this simple idea is the reason why FedAvg became the default baseline for federated learning.

A simple NumPy implementation

Let’s look at a minimal example where five clients have been selected. For simplicity, we assume that each client has already finished local training and returned its updated model weights along with the number of samples it used. Using these values, the server computes a weighted sum that produces the new global model for the next round. This mirrors the FedAvg equation directly, without introducing training or client-side details.

```python
import numpy as np

# Client models after local training (w_{t+1}^k)
client_weights = [
    np.array([1.0, 0.8, 0.5]),    # client 1
    np.array([1.2, 0.9, 0.6]),    # client 2
    np.array([0.9, 0.7, 0.4]),    # client 3
    np.array([1.1, 0.85, 0.55]),  # client 4
    np.array([1.3, 1.0, 0.65]),   # client 5
]

# Number of samples at each client (n_k)
client_sizes = [50, 150, 100, 300, 4000]

# m_t = total number of samples across selected clients S_t
m_t = sum(client_sizes)  # 50 + 150 + 100 + 300 + 4000 = 4600

# Initialize global model w_{t+1}
w_t_plus_1 = np.zeros_like(client_weights[0])

# FedAvg aggregation:
# w_{t+1} = sum_{k in S_t} (n_k / m_t) * w_{t+1}^k
# (50/4600) * w_1 + (150/4600) * w_2 + ...
for w_k, n_k in zip(client_weights, client_sizes):
    w_t_plus_1 += (n_k / m_t) * w_k

print("Aggregated global model w_{t+1}:", w_t_plus_1)
```

```
Aggregated global model w_{t+1}: [1.27173913 0.97826087 0.63478261]
```

How the aggregation is computed

To put things into perspective, we can expand the aggregation step for just two clients and see how the numbers line up.
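The original worked expansion is not reproduced here, so the following is a sketch of one way to do it. It assumes, purely for illustration, that clients 1 and 2 from the example above are the only two clients selected, so the total sample count is 50 + 150 = 200. The variable names are hypothetical.

```python
import numpy as np

w_1, n_1 = np.array([1.0, 0.8, 0.5]), 50    # client 1 from the example above
w_2, n_2 = np.array([1.2, 0.9, 0.6]), 150   # client 2 from the example above
m = n_1 + n_2                               # 200 samples in total

# (50/200) * w_1 + (150/200) * w_2 = 0.25 * w_1 + 0.75 * w_2
w_two_clients = (n_1 / m) * w_1 + (n_2 / m) * w_2
print(w_two_clients)  # approximately [1.15, 0.875, 0.575]
```

Client 2 holds three times as many samples as client 1, so the result sits three quarters of the way from client 1's weights to client 2's.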

Challenges in Federated Learning Environments

Federated learning comes with its own set of challenges. One of the major issues when implementing it is that the data across clients is often non-IID (not independent and identically distributed). This means different clients may see very different data distributions, which in turn can slow training and make the global model less stable. For instance, hospitals in a federation can serve different populations that follow different patterns.
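A small sketch can show what non-IID data looks like in practice. The dataset below is synthetic, with four hypothetical classes, and label skew (sorting by label before splitting) is just one simple way to simulate non-IID clients; real federations can differ in other ways, such as feature distributions or dataset sizes.

```python
import numpy as np

rng = np.random.default_rng(0)
labels = rng.integers(0, 4, size=400)  # hypothetical dataset with 4 classes
n_clients = 4

# IID split: shuffle the indices, then deal them out evenly.
iid_parts = np.array_split(rng.permutation(len(labels)), n_clients)

# Label-skewed split: sort by label first, so each client sees mostly one class.
skewed_parts = np.array_split(np.argsort(labels, kind="stable"), n_clients)

for name, parts in (("IID", iid_parts), ("skewed", skewed_parts)):
    for k, idx in enumerate(parts):
        counts = np.bincount(labels[idx], minlength=4)
        print(f"{name} client {k}: class counts {counts}")
```

With the IID split every client sees a similar mix of classes, while with the skewed split each client's local optimum pulls the model towards its own dominant class.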

Federated systems can involve anything from a few organizations to millions of devices, and managing participation, dropouts and aggregation becomes harder as the system scales.

While federated learning keeps raw data local, it does not fully solve privacy on its own. Model updates can still leak private information if not protected, so additional privacy techniques are often needed. Finally, communication can be a bottleneck, since networks can be slow or unreliable and sending frequent updates can be costly.
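To get a feel for the communication cost, a back-of-the-envelope estimate helps. Every number below is hypothetical and chosen only to show the arithmetic; the sketch also assumes uncompressed float32 weights and that each selected client downloads the full model and uploads an update of the same size.

```python
# All values are hypothetical, chosen only to illustrate the calculation.
num_params = 5_000_000   # model parameters
bytes_per_param = 4      # float32
clients_per_round = 100
rounds = 200

model_mb = num_params * bytes_per_param / 1e6
# Each selected client downloads the model and uploads an update of equal size.
per_round_mb = 2 * model_mb * clients_per_round
total_gb = per_round_mb * rounds / 1e3

print(f"model: {model_mb:.0f} MB, per round: {per_round_mb:.0f} MB, total: {total_gb:.0f} GB")
# model: 20 MB, per round: 4000 MB, total: 800 GB
```

Even a modest model adds up quickly across clients and rounds, which is why the number of rounds and the size of each update matter so much.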

Conclusion and what’s next

In this article, we saw how federated learning works at a high level and walked through a simple NumPy implementation. However, instead of writing the core logic by hand, we can use frameworks like Flower, which provide a simple and flexible way to build federated learning systems. In the next part, we will use Flower to do the heavy lifting so that we can focus on the model and the data rather than the mechanics of federated learning. We will also look at federated LLMs, where model size, communication cost, and privacy constraints become even more important.

Note: All images, unless otherwise stated, are created by the author.