has collected data on the outcomes of patients who’ve contracted “Pathogen A”, which is responsible for an infectious respiratory illness. Available are 8 features of each patient and the outcome: (a) treated at home and recovered, (b) hospitalized and recovered, or (c) died.

It has proven trivial to train a neural net to predict one of the three outcomes from the 8 features with almost complete accuracy. However, the health authorities would like to predict something that was not captured: Of the patients who can be treated at home, who are the ones most at risk of having to go to hospital? And of the patients who are predicted to be hospitalized, who are the ones most at risk of not surviving the infection? Can we get a numeric score that represents how serious the infection will be?

In this note I’ll cover a neural net with a bottleneck and a special head to learn a scoring system from a few categories, and cover some properties of small neural networks one is likely to encounter. The accompanying code can be found at https://codeberg.org/csirmaz/category-scoring.

The dataset

To be able to illustrate the work, I developed a toy example, which is a non-linear but deterministic piece of code calculating the outcome from the 8 features. The calculation is for illustration only and is not supposed to be faithful to the science; the names of the features were chosen merely to be in line with the medical example. The 8 features used in this note are:

- Previous infection with Pathogen A (boolean)

- Previous infection with Pathogen B (boolean)

- Acute / current infection with Pathogen B (boolean)

- Cancer diagnosis (boolean)

- Weight deviation from common, arbitrary unit (-100 ≤ x ≤ 100)

- Age, years (0 ≤ x ≤ 100)

- Blood pressure deviation from average, arbitrary unit (0 ≤ x ≤ 100)

- Years smoked (0 ≤ x ≤ ~88)

When generating sample data, the features are chosen independently and from a uniform distribution, except for years smoked, which depends on the age, and a cohort of non-smokers (50%) was built in. We checked that with this sampling the 3 outcomes occur with roughly equal probability, and measured the mean and variance of the number of years smoked so we could normalize all the inputs to zero mean and unit variance.
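As a hedged illustration of the normalization step: a feature drawn uniformly from [lo, hi] has mean (lo + hi) / 2 and variance (hi - lo)² / 12, so such inputs can be standardized analytically, while years smoked has no closed form here and needs measured statistics. The sketch below is not taken from the accompanying repository; the function names are hypothetical, and it assumes the boolean features are drawn as 0 or 1 with equal probability.

```python
import math
import random
import statistics

def standardize_uniform(x, lo, hi):
    """Map a value drawn uniformly from [lo, hi] to zero mean, unit variance."""
    mean = (lo + hi) / 2.0
    std = (hi - lo) / math.sqrt(12.0)
    return (x - mean) / std

def standardize_boolean(flag):
    """A fair 0/1 flag has mean 0.5 and standard deviation 0.5 (assumed 50/50)."""
    return (float(flag) - 0.5) / 0.5

def standardize_measured(x, samples):
    """Fallback for features without a closed form: use measured statistics."""
    return (x - statistics.fmean(samples)) / statistics.pstdev(samples)

# Quick empirical check on the age feature (0 <= x <= 100).
rng = random.Random(0)
ages = [standardize_uniform(rng.uniform(0, 100), 0, 100) for _ in range(100_000)]
print(round(statistics.fmean(ages), 2), round(statistics.pstdev(ages), 2))
```

The printed mean and standard deviation should come out close to 0 and 1 respectively.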

As an illustration of the toy example, below is a plot of the outcomes with the weight on the horizontal axis and age on the vertical axis, and other parameters fixed. “.” stands for home treatment, “o” for hospitalization and “+” for death.

....................

....................

....................

....................

...............ooooo

............oooooooo

............oooooooo

............oooooooo

............oooooooo

............oooooooo

............ooooooo+

...........ooooooo++

...........oooooo+++

...........oooooo+++

...........ooooo++++

.......oooooooo+++++

..oooooooooooo++++++

ooooooooooooo+++++++

oooooooooooo++++++++

ooooooooooo+++++++++

A traditional classifier

The data is nonlinear but very neat, and so it’s no surprise that a small classifier network can learn it to 98-99% validation accuracy. Launch train.py --classifier to train a simple neural network with 6 layers (each 8 wide) and ReLU activation, defined in ScoringModel.build_classifier_model().

But how to train a scoring system?

Our goal is then to train a system that, given the 8 features as inputs, can produce a score corresponding to the danger the patient is in when infected with Pathogen A. The complication is that we have no scores available in our training data, only the three outcomes (categories). To ensure that the scoring system is meaningful, we would like certain score ranges to correspond to the three main outcomes.

The first thing someone may try is to assign a numeric value to each category, like 0 to home treatment, 1 to hospitalization and 2 to death, and use it as the target. Then set up a neural network with a single output, and train it with e.g. MSE loss.

The problem with this approach is that the model will learn to contort (condense and expand) the projection of the inputs around the three targets, so ultimately the model will always return a value close to 0, 1 or 2. You can try this by running train.py --predict-score, which trains a model with 2 dense layers with ReLU activations and a final dense layer with a single output, defined in ScoringModel.build_predict_score_model().

As can be seen in the following histogram of the output of the model on a random batch of inputs, this is indeed what is happening, and this is with 2 layers only.

..................................................#.........

..................................................#.........

.........#........................................#.........

.........#........................................#.........

.........#........................................#.........

.........#...................#....................#.........

.........#...................#...................##.........

.........#...................#...................##.........

.........###....#............##.#................##.........

........####.#.##.#..#..##.####.##..........#...###.........

Step 1: A low-capacity network

To prevent this from happening and get a more continuous score, we want to drastically reduce the capacity of the network to contort the inputs. We’ll go to the extreme and use a linear regression; in a previous TDS article I already described how to use the components offered by Keras to “train” one. We’ll reuse that idea here, and build a “degenerate” neural network out of a single dense layer with no activation. This will allow the score to move more in line with the inputs, and also has the advantage that the resulting network is highly interpretable, as it simply provides a weight for each input, with the resulting score being their linear combination.

However, with this simplification, the model loses all ability to condense and expand the result to match the target scores for each category. It will try to do so, but especially with more output categories, there is no guarantee that they will occur at regular intervals in any linear combination of the inputs.

We want to enable the model to determine the best thresholds between the categories, that is, to make the thresholds trainable parameters. This is where the “category approximator head” comes in.

Step 2: A category approximator head

To be able to train the model using the categories as targets, we add a head that learns to predict the category based on the score. Our goal is simply to establish two thresholds (for our three categories), t0 and t1, such that

- if the score < t0, then we predict treatment at home and recovery,

- if t0 < score < t1, then we predict treatment in hospital and recovery,

- if t1 < score, then we predict that the patient does not survive.
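The three rules above amount to finding which interval between sorted thresholds a score falls into. A minimal sketch, assuming made-up threshold values and a hypothetical helper name (this is not code from the accompanying repository):

```python
from bisect import bisect_right

OUTCOMES = ["home", "hospital", "death"]

def category_from_score(score, thresholds):
    """Return the index of the score range that contains `score`.

    `thresholds` must be sorted in increasing order, e.g. [t0, t1].
    A score exactly on a threshold is assigned to the higher category here,
    which is an arbitrary choice; the rules above leave ties unspecified.
    """
    return bisect_right(thresholds, score)

thresholds = [-1.0, 2.0]  # made-up values for t0 and t1
for s in (-3.0, 0.5, 4.0):
    print(s, OUTCOMES[category_from_score(s, thresholds)])
```

The same function works unchanged for more than three categories, as long as one threshold is supplied per boundary.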

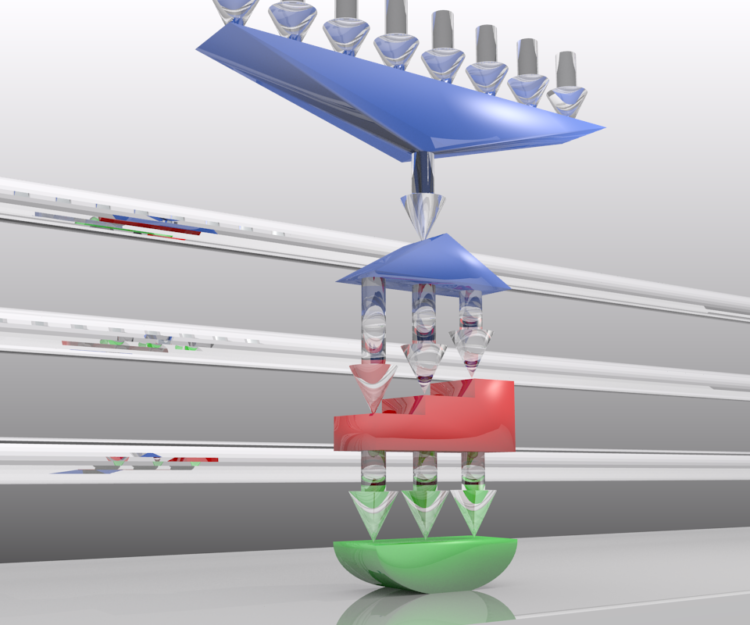

The model takes the shape of an encoder-decoder, where the encoder part produces the score, and the decoder part allows comparing and training the score against the categories.

One approach is to add a dense layer on top of the score, with a single input and as many outputs as there are categories. This can learn the thresholds, and predict the probabilities of each category via softmax. Training can then happen as usual using a categorical cross-entropy loss.
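To make the head concrete, here is a framework-free sketch of what such a layer computes for a single score: one linear output per category, a softmax, and the cross-entropy against the true category. The parameter values and function names are invented for illustration; the real model would build this from Keras layers and learn the parameters.

```python
import math

def head_logits(score, weights, biases):
    """Dense layer with a single input: one straight line per category."""
    return [w * score + b for w, b in zip(weights, biases)]

def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probabilities, target_index):
    """Categorical cross-entropy for a one-hot target."""
    return -math.log(probabilities[target_index])

# Made-up head parameters for three categories.
weights, biases = [-1.0, 0.0, 1.0], [0.0, 1.0, 0.0]
probs = softmax(head_logits(0.2, weights, biases))
print([round(p, 3) for p in probs], round(cross_entropy(probs, 1), 3))
```

With these parameters the middle category has the largest output at a score of 0.2, so the loss is lowest when the target is category 1.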

Clearly, the dense layer won’t learn the thresholds directly; instead, it will learn N weights and N biases given N output categories. So let’s figure out how to get the thresholds from these.

Step 3: Extracting the thresholds

Notice that the output of the softmax layer is the vector of probabilities for each category; the predicted category is the one with the highest probability. Furthermore, softmax always maps the largest input value to the largest probability. Therefore, the largest output of the dense layer corresponds to the category that it predicts based on the incoming score.

If the dense layer has learnt the weights [w1, w2, w3] and the biases [b1, b2, b3], then its outputs are

o1 = w1*score + b1

o2 = w2*score + b2

o3 = w3*score + b3

These are all just straight lines as a function of the incoming score (e.g. y = w1*x + b1), and whichever is on top at a given score is the winning category. Here is a quick illustration:

The thresholds are then the intersection points between the neighboring lines. Assuming the order of categories to be o1 (home) → o2 (hospital) → o3 (death), we need to solve the o1 = o2 and o2 = o3 equations, yielding

t0 = (b2 - b1) / (w1 - w2)

t1 = (b3 - b2) / (w2 - w3)
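The two formulas generalize to any number of categories. The following standalone sketch (a hypothetical helper, not the repository's ScoringModel.extract_thresholds()) computes the intersections of neighboring lines and checks them against made-up parameters. It assumes the categories are already listed in their winning order and that every category wins somewhere.

```python
def thresholds_from_head(weights, biases):
    """Intersections of neighboring lines o_i = w_i * score + b_i."""
    thresholds = []
    for i in range(len(weights) - 1):
        dw = weights[i] - weights[i + 1]
        if dw == 0:
            raise ValueError("parallel lines never intersect")
        thresholds.append((biases[i + 1] - biases[i]) / dw)
    return thresholds

def winning_category(score, weights, biases):
    """Index of the largest dense-layer output at this score."""
    outputs = [w * score + b for w, b in zip(weights, biases)]
    return outputs.index(max(outputs))

# Made-up head parameters for three categories.
weights, biases = [-1.0, 0.0, 1.0], [0.0, 1.0, 0.0]
t0, t1 = thresholds_from_head(weights, biases)
print(t0, t1)  # -1.0 1.0
print([winning_category(s, weights, biases) for s in (-2.0, 0.0, 2.0)])  # [0, 1, 2]
```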

This is implemented in ScoringModel.extract_thresholds() (though there is some additional logic there, explained below).

Step 4: Ordering the categories

But how do we know what the right order of the categories is? Clearly we have a preferred order (home → hospital → death), but what will the model say?

It’s worth noting a couple of things about the lines that represent which category wins at each score. As we are interested in whichever line is the highest, we are talking about the boundary of the region that is above all lines:

Since this area is the intersection of all half-planes that are above each line, it is necessarily convex. (Note that no line can be vertical.) This means that each category wins over at most one contiguous range of scores; once it has lost the top spot, it cannot get back to the top again later.

It also means that these ranges are necessarily in the order of the slopes of the lines, which are the weights. The biases influence the values of the thresholds, but not the order. We first have negative slopes, followed by small and then big positive slopes.

This is because given any two lines, towards negative infinity the one with the smaller slope (weight) will win, and towards positive infinity, the other. Algebraically speaking, given two lines

f1(x) = w1*x + b1 and f2(x) = w2*x + b2 where w2 > w1,

we already know they intersect at (b2 - b1) / (w1 - w2), and below this, if x < (b2 - b1) / (w1 - w2), then

(w1 - w2)*x > b2 - b1 (the inequality flips because w1 - w2 is negative!)

w1*x + b1 > w2*x + b2

f1(x) > f2(x),

and so f1 wins. The same argument holds in the other direction.
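The claim that the winning ranges follow the order of the slopes can be checked numerically. The sketch below scans a range of scores, records the order in which categories take the top spot, and compares it to the categories sorted by weight. The parameters and the helper name are invented for the demonstration, and it assumes every category wins somewhere in the scanned range.

```python
def winning_order(weights, biases, lo=-100.0, hi=100.0, steps=20001):
    """Scan scores from lo to hi and record the order in which categories win."""
    order = []
    for k in range(steps):
        x = lo + (hi - lo) * k / (steps - 1)
        outputs = [w * x + b for w, b in zip(weights, biases)]
        winner = outputs.index(max(outputs))
        if not order or order[-1] != winner:
            order.append(winner)
    return order

# Made-up parameters whose slopes are deliberately not in index order.
weights = [0.5, -2.0, 3.0]
biases = [1.0, 0.0, -4.0]
order = winning_order(weights, biases)
by_slope = sorted(range(len(weights)), key=lambda i: weights[i])
print(order, by_slope)  # [1, 0, 2] [1, 0, 2]
```

Each category appears only once in the scanned order, which reflects the convexity argument: a line that has lost the top spot never regains it.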

Step 4.5: We messed up (propagate-sum)

And here lies a problem: the scoring model is quite free to decide what order to place the categories in. That’s not good: a score that predicts death at 0, home treatment at 10, and hospitalization at 20 is clearly nonsensical. However, with certain inputs (especially if one feature dominates a category) this can happen even with very simple scoring models like a linear regression.

There is a way to protect against this though. Keras allows adding a kernel constraint to a dense layer to force all weights to be non-negative. We could take this code and implement a kernel constraint that forces the weights to be in increasing order (w1 ≤ w2 ≤ w3), but it is simpler if we stick to the available tools. Fortunately, Keras tensors support slicing and concatenation, so we can split the outputs of the dense layer into components (say, d1, d2, d3) and use the following as the input into the softmax:

- o1 = d1

- o2 = d1 + d2

- o3 = d1 + d2 + d3

In the code, this is called “propagate sum.”

Substituting the weights and biases into the above we get

- o1 = w1*score + b1

- o2 = (w1+w2)*score + b1+b2

- o3 = (w1+w2+w3)*score + b1+b2+b3

Since the non-negativity constraint guarantees that w1, w2, w3 are all non-negative, we have now ensured that the effective weights used to decide the winning category are in increasing order.
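The effect of the running sums can be illustrated without Keras. In this sketch (hypothetical helper names, made-up parameters), the dense-layer outputs are accumulated, and the effective weights seen by the softmax are the cumulative sums of the raw weights, which cannot decrease when the raw weights are non-negative.

```python
from itertools import accumulate

def propagate_sum(dense_outputs):
    """o1 = d1, o2 = d1 + d2, o3 = d1 + d2 + d3, ..."""
    return list(accumulate(dense_outputs))

def effective_parameters(weights, biases):
    """Weights and biases of the lines the softmax sees after the sums."""
    return list(accumulate(weights)), list(accumulate(biases))

# Non-negative raw weights in arbitrary order, as the constraint allows.
weights, biases = [0.5, 2.0, 0.25], [1.0, -3.0, -2.0]
eff_w, eff_b = effective_parameters(weights, biases)
print(eff_w, eff_b)

# Both routes give the same softmax inputs for a given score.
score = 1.5
dense = [w * score + b for w, b in zip(weights, biases)]
direct = [w * score + b for w, b in zip(eff_w, eff_b)]
print(propagate_sum(dense), direct)
```

Note that only the increments (w2, w3, ...) need to be non-negative for the order to hold; the sign of w1 merely shifts all slopes together.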

Step 5: Training and evaluating

All the components are now in place to train the linear regression. The model is implemented in ScoringModel.build_linear_bottleneck_model() and can be trained by running train.py --linear-bottleneck. The code also automatically extracts the thresholds and the weights of the linear combination after each epoch. Note that as a final calculation, we need to shift each threshold by the bias in the encoder layer.

Epoch #4 completed. Logs: {'accuracy': 0.7988250255584717, 'loss': 0.4569114148616791, 'val_accuracy': 0.7993124723434448, 'val_loss': 0.4509878158569336}

----- Evaluating the bottleneck model -----

Prev infection A weight: -0.22322197258472443

Prev infection B weight: -0.1420486718416214

Acute infection B weight: 0.43141448497772217

Cancer diagnosis weight: 0.48094701766967773

Weight deviation weight: 1.1893583536148071

Age weight: 1.4411307573318481

Blood pressure dev weight: 0.8644841313362122

Smoked years weight: 1.1094108819961548

Threshold: -1.754680637036648

Threshold: 0.2920824065597968

The linear regression can approximate the toy example with an accuracy of 80%, which is pretty good. Naturally, the maximum achievable accuracy depends on whether the system to be modeled is close to linear or not. If not, one can consider using a more capable network as the encoder; for example, a few dense layers with nonlinear activations. The network should still not have enough capacity to condense the projected score too much.
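To show how interpretable the result is, the logged weights and thresholds can be applied by hand. The sketch below rounds the values from the log above and makes two assumptions that the log itself does not confirm: that the reported thresholds, already shifted by the encoder bias, apply directly to the bias-free weighted sum of the normalized inputs, and that features left out of the input sit at their mean (0 after normalization). The dictionary keys and function names are hypothetical.

```python
from bisect import bisect_right

# Weights and thresholds copied (rounded) from the training log.
WEIGHTS = {
    "prev_infection_a": -0.2232,
    "prev_infection_b": -0.1420,
    "acute_infection_b": 0.4314,
    "cancer_diagnosis": 0.4809,
    "weight_deviation": 1.1894,
    "age": 1.4411,
    "blood_pressure_dev": 0.8645,
    "smoked_years": 1.1094,
}
THRESHOLDS = [-1.7547, 0.2921]
OUTCOMES = ["home", "hospital", "death"]

def score_patient(normalized_features):
    """Weighted sum of the normalized inputs (assumed bias-free, see above)."""
    return sum(WEIGHTS[name] * value for name, value in normalized_features.items())

def predict_outcome(normalized_features):
    """Map the score to an outcome using the logged thresholds."""
    return OUTCOMES[bisect_right(THRESHOLDS, score_patient(normalized_features))]

# Hypothetical patients, described in normalized units (standard deviations).
young_non_smoker = {"age": -1.5, "smoked_years": -1.0}
elderly = {"age": 1.5}
for patient in (young_non_smoker, {}, elderly):
    print(round(score_patient(patient), 2), predict_outcome(patient))
```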

It is also worth noting that with the linear combination, the dimensionality of the weight space the training happens in is minuscule compared to regular neural networks (just N where N is the number of input features, compared to millions, billions or more). There is a frequently described intuition that on high-dimensional error surfaces, genuine local minima and maxima are very rare: there is almost always a direction in which training can continue to reduce loss. That is, most areas of zero gradient are saddle points. We do not have this luxury in our 8-dimensional weight space, and indeed, training can get stuck in local extrema even with optimizers like Adam. Training is extremely fast though, and running multiple training sessions can solve this problem.
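The restart strategy can be illustrated on a deliberately tiny problem. The sketch below is a one-dimensional analogy rather than the scoring model itself: plain gradient descent on a made-up loss with one local and one global minimum, where a single run can get stuck but keeping the best of several runs finds the better solution. All names and the loss function are invented for the example.

```python
def loss(x):
    """A toy one-dimensional loss with one local and one global minimum."""
    return x**4 - 3 * x**2 + x

def gradient(x):
    """Derivative of the toy loss."""
    return 4 * x**3 - 6 * x + 1

def train(start, learning_rate=0.01, steps=2000):
    """Plain gradient descent from a given starting point."""
    x = start
    for _ in range(steps):
        x -= learning_rate * gradient(x)
    return x, loss(x)

def best_of_restarts(starts):
    """Run training from every start and keep the run with the lowest loss."""
    return min((train(s) for s in starts), key=lambda run: run[1])

single = train(1.0)  # ends in the local minimum near x = 1.13
best = best_of_restarts([-2.0, -1.0, 0.0, 1.0, 2.0])  # finds the one near x = -1.30
print(single, best)
```

In practice the starting points would be random weight initializations, and the runs would be compared on validation loss.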

To illustrate how the learnt linear model functions, ScoringModel.try_linear_model() tries it on a set of random inputs. In the output, the target and predicted outcomes are noted by their index number (0: treatment at home, 1: hospitalized, 2: death):

Sample #0: target=1 score=-1.18 predicted=1 okay

Sample #1: target=2 score=+4.57 predicted=2 okay

Sample #2: target=0 score=-1.47 predicted=1 x

Sample #3: target=2 score=+0.89 predicted=2 okay

Sample #4: target=0 score=-5.68 predicted=0 okay

Sample #5: target=2 score=+4.01 predicted=2 okay

Sample #6: target=2 score=+1.65 predicted=2 okay

Sample #7: target=2 score=+4.63 predicted=2 okay

Sample #8: target=2 score=+7.33 predicted=2 okay

Sample #9: target=2 score=+0.57 predicted=2 okay

And ScoringModel.visualize_linear_model() generates a histogram of the score from a batch of random inputs. As above, “.” denotes home treatment, “o” stands for hospitalization, and “+” for death. For example:

+

+

+

+ +

+ +

. o + + + +

.. .. . o oo ooo o+ + + ++ + + + +

.. .. . o oo ooo o+ + + ++ + + + +

.. .. . . .... . o oo oooooo+ ++ + ++ + + + + + + +

.. .. . . .... . o oo oooooo+ ++ + ++ + + + + + + +

The histogram is spiky due to the boolean inputs, which (before normalization) are either 0 or 1 in the linear combination, but the overall histogram is still much smoother than the results we got with the 2-layer neural network above. Many input vectors are mapped to scores that are near the thresholds between the outcomes, allowing us to predict if a patient is dangerously close to getting hospitalized, or should be admitted to intensive care as a precaution.

Conclusion

Simple models like linear regressions and other low-capacity networks have interesting properties in a wide range of applications. They are highly interpretable and verifiable by humans; for example, from the results of the toy example above we can clearly see that previous infections protect patients from worse outcomes, and that age is the most important factor in determining the severity of an ongoing infection.

Another property of linear regressions is that their output moves roughly in line with their inputs. It is this feature that we used to acquire a relatively smooth, continuous score from just a few anchor points offered by the limited information available in the training data. Moreover, we did so based on well-known network components available in major frameworks including Keras. Finally, we used a bit of math to extract the information we need from the trainable parameters in the model, and to ensure that the score learnt is meaningful, that is, that it covers the outcomes (categories) in the desired order.

Small, low-capacity models are still powerful tools to solve the right problems. With quick and cheap training, they can also be implemented, tested and iterated over extremely quickly, fitting well into agile approaches to development and engineering.