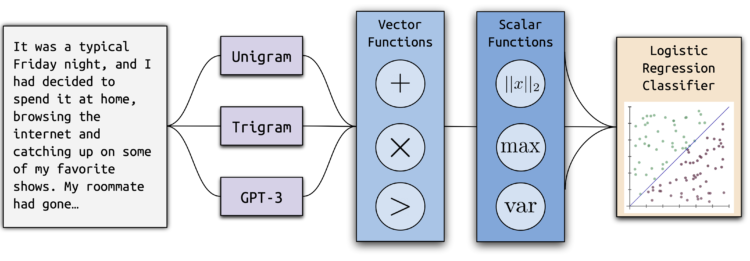

The structure of Ghostbuster, our new state-of-the-art method for detecting AI-generated text.

Large language models like ChatGPT write impressively well; so well, in fact, that they have become a problem. Students have begun using these models to ghostwrite assignments, leading some schools to ban ChatGPT. These models are also prone to producing text with factual errors, so wary readers may want to know whether generative AI tools were used to ghostwrite news articles or other sources before trusting them.

What can teachers and consumers do? Existing tools for detecting AI-generated text sometimes do poorly on data that differs from what they were trained on. In addition, if these models falsely classify real human writing as AI-generated, they can jeopardize students whose genuine work is called into question.

Our recent paper introduces Ghostbuster, a state-of-the-art method for detecting AI-generated text. Ghostbuster works by finding the probability of generating each token in a document under several weaker language models, then combining functions based on these probabilities as input to a final classifier. Ghostbuster doesn't need to know what model was used to generate a document, nor the probability of generating the document under that specific model. This property makes Ghostbuster particularly useful for detecting text potentially generated by an unknown model or a black-box model, such as the popular commercial models ChatGPT and Claude, for which probabilities aren't available. We're particularly interested in ensuring that Ghostbuster generalizes well, so we evaluated across a range of ways that text could be generated, including different domains (using newly collected datasets of essays, news, and stories), language models, and prompts.

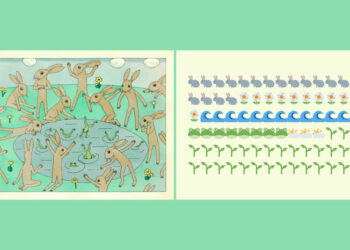

Examples of human-authored and AI-generated text from our datasets.

Why this Approach?

Many current AI-generated text detection systems are brittle when classifying different types of text (e.g., different writing styles, or different text generation models or prompts). Simpler models that use perplexity alone typically can't capture more complex features and do especially poorly on new writing domains. In fact, we found that a perplexity-only baseline was worse than random on some domains, including non-native English speaker data. Meanwhile, classifiers based on large language models like RoBERTa easily capture complex features, but overfit to the training data and generalize poorly: we found that a RoBERTa baseline had catastrophic worst-case generalization performance, sometimes even worse than a perplexity-only baseline. Zero-shot methods that classify text without training on labeled data, by calculating the probability that the text was generated by a specific model, also tend to do poorly when a different model was actually used to generate the text.
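To make the perplexity-only baseline concrete, here is a minimal sketch of how perplexity can be computed from per-token probabilities and turned into a detector. The function names and the threshold are hypothetical and chosen for illustration; they are not taken from the paper or its code.

```python
import math


def perplexity(token_probs):
    """Perplexity of a sequence, given the probability of each token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    # Average negative log-probability per token, then exponentiate.
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def perplexity_baseline(token_probs, threshold):
    """Hypothetical detector: text the model finds predictable is flagged."""
    return "ai" if perplexity(token_probs) < threshold else "human"


# Two tokens, each with probability 0.5, give a perplexity of exactly 2.
example = perplexity([0.5, 0.5])
```

A single scalar like this discards most of the information in the per-token probabilities, which is one way to see why such a baseline struggles on unfamiliar domains.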

How Ghostbuster Works

Ghostbuster uses a three-stage training process: computing probabilities, selecting features, and classifier training.

Computing probabilities: We converted each document into a series of vectors by computing the probability of generating each word in the document under a series of weaker language models (a unigram model, a trigram model, and two non-instruction-tuned GPT-3 models, ada and davinci).
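As a rough illustration of this stage, the sketch below builds one probability vector per model for a document. It covers only a unigram model with add-one smoothing, which is an assumption made for the example; the helper names are hypothetical, and a trigram or neural model would also need the preceding tokens as context.

```python
from collections import Counter


def train_unigram(corpus_tokens):
    """Return a function giving an add-one-smoothed unigram probability."""
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # one extra slot for unseen tokens

    def prob(token):
        return (counts[token] + 1) / (total + vocab_size)

    return prob


def token_probabilities(doc_tokens, models):
    """Map each model name to a vector with one probability per token."""
    return {name: [prob(t) for t in doc_tokens] for name, prob in models.items()}


corpus = "the cat sat on the mat".split()
models = {"unigram": train_unigram(corpus)}
vectors = token_probabilities(["the", "dog"], models)
# P("the") = (2 + 1) / (6 + 6) = 0.25; the unseen "dog" gets 1 / 12.
```

Each additional model adds another vector of the same length, so a document ends up represented as several aligned probability sequences.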

Selecting features: We used a structured search procedure to select features, which works by (1) defining a set of vector and scalar operations that combine the probabilities, and (2) searching for useful combinations of these operations using forward feature selection, repeatedly adding the best remaining feature.
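The search can be sketched roughly as follows. The operation sets, the nearest-centroid scoring function, and the toy data are all assumptions made for illustration; the source does not list the specific operations or the scoring criterion used.

```python
from itertools import combinations
from statistics import mean

# Hypothetical operation sets; the source names no specific operations.
VECTOR_OPS = {
    "sub": lambda a, b: [x - y for x, y in zip(a, b)],
    "max": lambda a, b: [max(x, y) for x, y in zip(a, b)],
}
SCALAR_OPS = {"mean": mean, "min": min, "max": max}


def candidate_features(model_names):
    """Build name -> function(doc) -> float for every op combination."""
    feats = {}
    for s_name, s_op in SCALAR_OPS.items():
        for m in model_names:
            feats[f"{s_name}({m})"] = lambda doc, s=s_op, m=m: s(doc[m])
        for a, b in combinations(model_names, 2):
            for v_name, v_op in VECTOR_OPS.items():
                name = f"{s_name}({v_name}({a},{b}))"
                feats[name] = (
                    lambda doc, s=s_op, v=v_op, a=a, b=b: s(v(doc[a], doc[b]))
                )
    return feats


def centroid_accuracy(rows, labels):
    """Assumed scoring function: training accuracy of a nearest-centroid rule."""
    def centroid(label):
        pts = [r for r, y in zip(rows, labels) if y == label]
        return [mean(col) for col in zip(*pts)]

    c0, c1 = centroid(0), centroid(1)

    def dist(r, c):
        return sum((x - y) ** 2 for x, y in zip(r, c))

    preds = [int(dist(r, c1) < dist(r, c0)) for r in rows]
    return sum(int(p == y) for p, y in zip(preds, labels)) / len(labels)


def forward_select(feats, docs, labels, score, max_features=3):
    """Greedily add the feature that most improves the score."""
    chosen, best = [], float("-inf")
    while len(chosen) < max_features:
        trials = []
        for name in feats:
            if name in chosen:
                continue
            rows = [[feats[n](d) for n in chosen + [name]] for d in docs]
            trials.append((score(rows, labels), name))
        if not trials:
            break
        top_score, top_name = max(trials)
        if top_score <= best:  # stop when no remaining feature helps
            break
        chosen.append(top_name)
        best = top_score
    return chosen, best


# Toy documents: per-token probabilities under two hypothetical models.
docs = [
    {"uni": [0.1, 0.2, 0.1], "tri": [0.2, 0.1, 0.3]},  # human
    {"uni": [0.2, 0.1, 0.2], "tri": [0.1, 0.3, 0.2]},  # human
    {"uni": [0.1, 0.2, 0.1], "tri": [0.7, 0.8, 0.9]},  # AI
    {"uni": [0.2, 0.1, 0.2], "tri": [0.8, 0.9, 0.7]},  # AI
]
labels = [0, 0, 1, 1]
feats = candidate_features(["uni", "tri"])
chosen, best = forward_select(feats, docs, labels, centroid_accuracy)
```

On this toy data a single feature already separates the two classes, so the search stops after one round. A realistic setup would score candidates on held-out data rather than on the training set.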

Classifier training: We trained a linear classifier on the best probability-based features and some additional manually selected features.
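The source says only that the final classifier is linear. As one possible instance, the sketch below trains a logistic regression with plain stochastic gradient descent on a single toy feature. The choice of logistic regression, the hyperparameters, and the data are assumptions for illustration.

```python
import math


def train_logistic(rows, labels, lr=0.5, epochs=1000):
    """Fit weights and bias of a logistic regression with per-sample updates."""
    w = [0.0] * len(rows[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            z = b + sum(wi * xi for wi, xi in zip(w, x))
            p = 1.0 / (1.0 + math.exp(-z))
            err = p - y  # gradient of the log loss with respect to z
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w, b, x):
    """Return 1 (AI-generated) if the linear score is positive, else 0."""
    return int(b + sum(wi * xi for wi, xi in zip(w, x)) > 0)


# One toy feature per document, e.g. a mean token probability.
rows = [[0.2], [0.25], [0.8], [0.75]]
labels = [0, 0, 1, 1]
w, b = train_logistic(rows, labels)
```

Because the model is linear in the selected features, each weight indicates how strongly a feature pushes a document toward one class.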

Results

When trained and tested on the same domain, Ghostbuster achieved 99.0 F1 across all three datasets, outperforming GPTZero by a margin of 5.9 F1 and DetectGPT by 41.6 F1. Out of domain, Ghostbuster achieved 97.0 F1 averaged across all conditions, outperforming DetectGPT by 39.6 F1 and GPTZero by 7.5 F1. Our RoBERTa baseline achieved 98.1 F1 when evaluated in-domain on all datasets, but its generalization performance was inconsistent. Ghostbuster outperformed the RoBERTa baseline on all domains except creative writing out-of-domain, and had much better out-of-domain performance than RoBERTa on average (13.8 F1 margin).

Results on Ghostbuster's in-domain and out-of-domain performance.
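For readers less familiar with the metric, the F1 scores above are the harmonic mean of precision and recall, reported on a 0 to 100 scale. The sketch below computes F1 on a 0 to 1 scale for a binary detector; the function name and the toy labels are illustrative and not taken from the paper's evaluation code.

```python
def f1_score(y_true, y_pred, positive=1):
    """F1 for the positive class: 2*TP / (2*TP + FP + FN)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0
    return 2 * tp / (2 * tp + fp + fn)


# 2 true positives, 1 false positive, 1 false negative: F1 = 4 / 6.
score = f1_score([1, 1, 1, 0, 0], [1, 1, 0, 1, 0])
```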

To ensure that Ghostbuster is robust to the range of ways that a user might prompt a model, such as requesting different writing styles or reading levels, we evaluated Ghostbuster's robustness to several prompt variants. Ghostbuster outperformed all other tested approaches on these prompt variants with 99.5 F1. To test generalization across models, we evaluated performance on text generated by Claude, where Ghostbuster also outperformed all other tested approaches with 92.2 F1.

AI-generated text detectors have been fooled by lightly editing the generated text. We examined Ghostbuster's robustness to edits, such as swapping sentences or paragraphs, reordering characters, or replacing words with synonyms. Most changes at the sentence or paragraph level didn't significantly affect performance, though performance decreased smoothly if the text was edited through repeated paraphrasing, using commercial detection evaders such as Undetectable AI, or making numerous word- or character-level changes. Performance was also best on longer documents.

Since AI-generated text detectors may misclassify non-native English speakers' text as AI-generated, we evaluated Ghostbuster's performance on non-native English speakers' writing. All tested models had over 95% accuracy on two of three tested datasets, but did worse on the third set of shorter essays. However, document length may be the main factor here, since Ghostbuster does nearly as well on these documents (74.7 F1) as it does on other out-of-domain documents of similar length (75.6 to 93.1 F1).

Users who wish to apply Ghostbuster to real-world cases of potential off-limits usage of text generation (e.g., ChatGPT-written student essays) should note that errors are more likely for shorter text, domains far from those Ghostbuster was trained on (e.g., different varieties of English), text by non-native speakers of English, human-edited model generations, or text generated by prompting an AI model to modify a human-authored input. To avoid perpetuating algorithmic harms, we strongly discourage automatically penalizing alleged usage of text generation without human supervision. Instead, we recommend cautious, human-in-the-loop use of Ghostbuster if classifying someone's writing as AI-generated could harm them. Ghostbuster can also help with a variety of lower-risk applications, including filtering AI-generated text out of language model training data and checking whether online sources of information are AI-generated.

Conclusion

Ghostbuster is a state-of-the-art AI-generated text detection model, with 99.0 F1 performance across tested domains, representing substantial progress over existing models. It generalizes well to different domains, prompts, and models, and it's well-suited to identifying text from black-box or unknown models because it doesn't require access to probabilities from the specific model used to generate the document.

Future directions for Ghostbuster include providing explanations for model decisions and improving robustness to attacks that specifically try to fool detectors. AI-generated text detection approaches can also be used alongside alternatives such as watermarking. We also hope that Ghostbuster can help across a variety of applications, such as filtering language model training data or flagging AI-generated content on the web.

Try Ghostbuster here: ghostbuster.app

Learn more about Ghostbuster here: [ paper ] [ code ]

Try guessing if text is AI-generated yourself here: ghostbuster.app/experiment