Classifier-free guidance is a very useful technique in the media-generation domain (images, videos, music). The majority of scientific papers about media generation models and approaches mention CFG. I find this paper to be the fundamental research on classifier-free guidance; CFG originated in the image generation domain. The following is mentioned in the paper:

…we combine the resulting conditional and unconditional score estimates to attain a trade-off between sample quality and diversity similar to that obtained using classifier guidance.

So classifier-free guidance is based on conditional and unconditional score estimates and follows the earlier approach of classifier guidance. Simply speaking, classifier guidance updates the predicted scores in the direction of some predefined class by applying gradient-based updates.

An abstract example of classifier guidance: let's say we have a predicted image Y and a classifier that predicts whether the image has a positive or negative meaning. We want to generate positive images, so we want the prediction Y to be aligned with the positive class of the classifier. To do that, we can calculate how Y should change so that our classifier labels it as positive: calculate the gradient and update Y accordingly.
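A minimal sketch of one classifier-guidance step, under assumptions not taken from the paper: the "image" Y is a toy 3-element vector, and the classifier is a hypothetical logistic model p(positive | Y) = sigmoid(w·Y + b), for which the gradient of log p with respect to Y is (1 - p)·w. All names and values are illustrative; real classifier guidance applies this kind of gradient inside every diffusion sampling step, while here it is a single step on a fixed vector.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def classifier_prob(y, w, b):
    """Hypothetical logistic classifier: probability that y is 'positive'."""
    return sigmoid(sum(wi * yi for wi, yi in zip(w, y)) + b)


def guidance_step(y, w, b, step_size):
    """One gradient-ascent step on log p(positive | y).

    For a logistic classifier, d/dy log sigmoid(w.y + b) = (1 - p) * w.
    """
    p = classifier_prob(y, w, b)
    grad = [(1.0 - p) * wi for wi in w]
    return [yi + step_size * gi for yi, gi in zip(y, grad)]


# Toy prediction Y and classifier parameters (illustrative values only)
y = [0.2, -0.5, 0.1]
w = [1.0, 2.0, -1.0]
b = 0.0

p_before = classifier_prob(y, w, b)
y_guided = guidance_step(y, w, b, step_size=0.5)
p_after = classifier_prob(y_guided, w, b)
print(f"p(positive) before: {p_before:.3f}, after: {p_after:.3f}")
```

The step moves Y along the classifier's gradient, so the classifier becomes more confident that the updated Y is positive; this gradient computation is exactly what classifier-free guidance avoids.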

Classifier-free guidance was created with the same goal, but it does not do any gradient-based updates. In my opinion, classifier-free guidance is much simpler to understand from its implementation formula for diffusion-based image generation:

The formula can be rewritten in the following way:
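The formula images did not survive in this version of the text. As a sketch, assuming the standard form from the image-CFG paper (where CFG_coefficient corresponds to 1 + w in that paper's notation) and consistent with the Transformers code quoted later, the original formula (first line) and its rewritten form (second line) are:

$$\text{updated\_prediction} = \text{unconditional\_prediction} + \text{CFG\_coefficient}\,\bigl(\text{conditional\_prediction} - \text{unconditional\_prediction}\bigr)$$

$$\text{updated\_prediction} = \text{conditional\_prediction} + (\text{CFG\_coefficient} - 1)\,\bigl(\text{conditional\_prediction} - \text{unconditional\_prediction}\bigr)$$

Expanding both right-hand sides gives CFG_coefficient · conditional_prediction − (CFG_coefficient − 1) · unconditional_prediction, so the two lines are the same formula.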

Several things are clear from the rewritten formula:

- When CFG_coefficient equals 1, the updated prediction equals the conditional prediction (so no CFG is actually applied);

- When CFG_coefficient > 1, the scores that are higher in the conditional prediction than in the unconditional prediction become even higher in the updated prediction, while those that are lower become even lower.
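A minimal sketch of the formula on toy numbers, in plain Python so it stays self-contained; `cfg_update`, `cond` and `uncond` are illustrative names, not from any library. It checks both observations: with a coefficient of 1 the conditional prediction comes back unchanged, and with a coefficient above 1 the entries where the conditional score exceeds the unconditional one grow, while the others shrink.

```python
def cfg_update(cond, uncond, cfg_coefficient):
    """Apply the CFG formula element-wise to two lists of predicted scores."""
    return [u + cfg_coefficient * (c - u) for c, u in zip(cond, uncond)]


# Toy conditional and unconditional predictions (illustrative values)
cond = [0.8, 0.3, -0.2]
uncond = [0.5, 0.4, -0.2]

print(cfg_update(cond, uncond, 1.0))  # same values as cond (up to float rounding)
print(cfg_update(cond, uncond, 3.0))  # first entry grows, second shrinks, third unchanged
```

Replacing `uncond` with a prediction made under a negative condition gives the negative-prompting variant, with no change to the function itself.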

The formula has no gradients; it works directly with the predicted scores. The unconditional prediction is the prediction of the same conditional generation model with an empty (null) condition. This unconditional prediction can also be replaced by a negative-conditional prediction: we replace the null condition with some negative condition and expect "negation" of this condition when the CFG formula is applied to update the final scores.

Classifier-free guidance for LLM text generation was described in this paper. Following the formulation from the paper, CFG for text models was implemented in HuggingFace Transformers. In the latest transformers version at the time of writing, 4.47.1, the docstring of the "UnbatchedClassifierFreeGuidanceLogitsProcessor" class says the following:

The processors computes a weighted average across scores from prompt conditional and prompt unconditional (or negative) logits, parameterized by the `guidance_scale`.

The unconditional scores are computed internally by prompting `model` with the `unconditional_ids` branch. See [the paper](https://arxiv.org/abs/2306.17806) for more information.

The formula for sampling the next token according to the paper is:
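The formula image is missing here, so this is a reconstruction in the notation commonly used for this method: c is the prompt (condition), w_i is the next token, w_{j<i} are the tokens generated so far, and γ plays the role of `guidance_scale` in the Transformers code below.

$$\log \widehat{P}_\theta(w_i \mid w_{j<i}, c) = \log P_\theta(w_i \mid w_{j<i}) + \gamma\,\bigl(\log P_\theta(w_i \mid w_{j<i}, c) - \log P_\theta(w_i \mid w_{j<i})\bigr)$$

It has the same shape as the diffusion formula, unconditional + γ · (conditional − unconditional), but it is applied to log-probabilities instead of raw predicted scores.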

It can be seen that this formula differs from the one we had before: it has a logarithm component. The authors also mention that the "formulation can be extended to accommodate 'negative prompting'". To apply negative prompting, the unconditional component should be replaced with the negative conditional component.

The code implementation in HuggingFace Transformers is:

```python
def __call__(self, input_ids, scores):
    scores = torch.nn.functional.log_softmax(scores, dim=-1)
    if self.guidance_scale == 1:
        return scores

    logits = self.get_unconditional_logits(input_ids)

    unconditional_logits = torch.nn.functional.log_softmax(logits[:, -1], dim=-1)
    scores_processed = self.guidance_scale * (scores - unconditional_logits) + unconditional_logits
    return scores_processed
```

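"scores" is the output of the LM head (next-token logits) for the conditional prompt, while the negative (or unconditional) logits are obtained inside `get_unconditional_logits(input_ids)` by running the model on the negative (or unconditional) input ids. From the code we can see that it follows the formula with the logarithm component: it applies "log_softmax", which equals the logarithm of the softmax probabilities.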
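For readers without PyTorch at hand, here is a plain-Python mirror of the `__call__` logic above, working on a single list of logits instead of a batched tensor. It is a sketch for illustration, not the library code; `log_softmax` and `cfg_log_space` are hypothetical helper names and the logits are made-up toy values. It makes explicit that guidance is applied to normalized log-probabilities rather than to raw logits.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_norm = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_norm for x in logits]


def cfg_log_space(cond_logits, uncond_logits, guidance_scale):
    """Plain-Python mirror of the log_softmax-based CFG step shown above."""
    scores = log_softmax(cond_logits)
    if guidance_scale == 1:
        return scores
    uncond = log_softmax(uncond_logits)
    return [guidance_scale * (s - u) + u for s, u in zip(scores, uncond)]


# Toy next-token logits for a 4-token vocabulary (illustrative values)
cond_logits = [2.0, 1.0, 0.5, -1.0]
uncond_logits = [1.5, 1.5, 0.0, -0.5]

guided = cfg_log_space(cond_logits, uncond_logits, guidance_scale=1.5)
greedy_token = max(range(len(guided)), key=guided.__getitem__)
print(greedy_token)  # index of the token greedy decoding would pick
```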

A classic text generation model (LLM) has a somewhat different nature compared to an image generation one: in a classic diffusion (image generation) model we predict a continuous feature map, whereas in text generation we do class prediction (categorical feature prediction) for each new token. What do we expect from CFG in general? We want to adjust the scores, but we don't want to change the probability distribution a lot; for example, we don't want some very low-probability tokens from the conditional generation to become the most probable ones. But that is exactly what can happen with the described CFG formula.

1. Weird model behaviour noticed with CFG

My LLM Safety solution, which was awarded second prize in the NeurIPS 2024 competition track, was based on using CFG to prevent LLMs from generating personal data. I tuned an LLM to follow two system prompts that were used in a CFG manner during inference: "You should share personal data in the answers" and "Do not provide any personal data". The two system prompts are opposite, and I used the tokenized first one as the negative input ids during text generation.

For more details, check my arXiv paper.

I noticed that when I used a CFG coefficient of 3 or higher, the quality of the generated samples degraded severely. This degradation was noticeable only during manual checks; none of the automatic scores showed it. The automatic tests were based on the number of personal data phrases generated in the answers and the accuracy on the MMLU-Pro dataset evaluated with an LLM judge. The LLM followed the requirement to avoid personal data and the MMLU answers were generally correct, but many artefacts appeared in the text. For example, the model generated the following answer for an input like "Hello, what is your name?":

"Hello! you don't have personal name. you're an interface to provide language understanding"

The artefacts are lowercase letters at the start of sentences and user-assistant confusion (the model talks about itself as "you").

2. Reproduce with GPT2 and check the details

The behaviour described above was noticed during inference of a custom fine-tuned Llama3.1-8B-Instruct model, so before analyzing the reasons, let's check whether something similar shows up during inference of GPT2, which is not even an instruction-following model.

Step 1. Download the GPT2 model (transformers==4.47.1)

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("openai-community/gpt2")
tokenizer = AutoTokenizer.from_pretrained("openai-community/gpt2")
```

Step 2. Prepare the inputs

```python
import torch

# For simplicity let's use CPU, GPT2 is small enough for that
device = torch.device('cpu')

# Let's set the positive and negative inputs,
# the model is not instruction-following, it just completes text
positive_text = 'Extremely polite and friendly answers to the question "How are you doing?" are: 1.'
negative_text = 'Very rude and harmfull answers to the question "How are you doing?" are: 1.'

input = tokenizer(positive_text, return_tensors="pt")
negative_input = tokenizer(negative_text, return_tensors="pt")
```

Step 3. Test different CFG coefficients during inference

Let's try CFG coefficients of 1.5, 3.0 and 5.0; all of them are low compared to those commonly used in the image generation domain.

```python
guidance_scale = 1.5

out_positive = model.generate(**input.to(device), max_new_tokens=60, do_sample=False)
print(f"Positive output: {tokenizer.decode(out_positive[0])}")

out_negative = model.generate(**negative_input.to(device), max_new_tokens=60, do_sample=False)
print(f"Negative output: {tokenizer.decode(out_negative[0])}")

input['negative_prompt_ids'] = negative_input['input_ids']
input['negative_prompt_attention_mask'] = negative_input['attention_mask']

out = model.generate(**input.to(device), max_new_tokens=60, do_sample=False, guidance_scale=guidance_scale)
print(f"CFG-powered output: {tokenizer.decode(out[0])}")
```

The output:

```
Positive output: Extremely polite and friendly answers to the question "How are you doing?" are: 1. You are doing well, 2. You are doing well, 3. You are doing well, 4. You are doing well, 5. You are doing well, 6. You are doing well, 7. You are doing well, 8. You are doing well, 9. You are doing well

Negative output: Very rude and harmfull answers to the question "How are you doing?" are: 1. You are not doing anything wrong. 2. You are doing what you are supposed to do. 3. You are doing what you are supposed to do. 4. You are doing what you are supposed to do. 5. You are doing what you are supposed to do. 6. You are doing

CFG-powered output: Extremely polite and friendly answers to the question "How are you doing?" are: 1. You are doing well. 2. You are doing well at school. 3. You are doing well at school. 4. You are doing well at school. 5. You are doing well at school. 6. You are doing well at school. 7. You are doing well at school. 8
```

The output looks okay-ish; don't forget that this is just GPT2, so don't expect too much. Let's try a CFG coefficient of 3 this time:

```python
guidance_scale = 3.0

out_positive = model.generate(**input.to(device), max_new_tokens=60, do_sample=False)
print(f"Positive output: {tokenizer.decode(out_positive[0])}")

out_negative = model.generate(**negative_input.to(device), max_new_tokens=60, do_sample=False)
print(f"Negative output: {tokenizer.decode(out_negative[0])}")

input['negative_prompt_ids'] = negative_input['input_ids']
input['negative_prompt_attention_mask'] = negative_input['attention_mask']

out = model.generate(**input.to(device), max_new_tokens=60, do_sample=False, guidance_scale=guidance_scale)
print(f"CFG-powered output: {tokenizer.decode(out[0])}")
```

And the outputs this time are:

```
Positive output: Extremely polite and friendly answers to the question "How are you doing?" are: 1. You are doing well, 2. You are doing well, 3. You are doing well, 4. You are doing well, 5. You are doing well, 6. You are doing well, 7. You are doing well, 8. You are doing well, 9. You are doing well

Negative output: Very rude and harmfull answers to the question "How are you doing?" are: 1. You are not doing anything wrong. 2. You are doing what you are supposed to do. 3. You are doing what you are supposed to do. 4. You are doing what you are supposed to do. 5. You are doing what you are supposed to do. 6. You are doing

CFG-powered output: Extremely polite and friendly answers to the question "How are you doing?" are: 1. Have you ever been to a movie show? 2. Have you ever been to a live performance? 3. Have you ever been to a live performance? 4. Have you ever been to a live performance? 5. Have you ever been to a live performance? 6. Have you ever been to a live performance? 7
```

The positive and negative outputs look the same as before, but something happened to the CFG-powered output: it now starts with "Have you ever been to a movie show?", a question rather than a polite answer.

If we use a CFG coefficient of 5.0, the CFG-powered output becomes just:

```
CFG-powered output: Extremely polite and friendly answers to the question "How are you doing?" are: 1. smile, 2. smile, 3. smile, 4. smile, 5. smile, 6. smile, 7. smile, 8. smile, 9. smile, 10. smile, 11. smile, 12. smile, 13. smile, 14. smile exting.
```

Step 4. Analyze the case with artefacts

I've tried different ways to understand and explain this artefact, but let me describe it in the way I find simplest. We know that the CFG-powered completion with a CFG coefficient of 5.0 starts with the token "_smile" ("_" represents the space). If we inspect "out[0]" instead of decoding it with the tokenizer, we can see that the "_smile" token has id 8212. Now let's just run the model's forward pass and check whether this token was probable without CFG applied:

```python
positive_text = 'Extremely polite and friendly answers to the question "How are you doing?" are: 1.'
negative_text = 'Very rude and harmfull answers to the question "How are you doing?" are: 1.'

input = tokenizer(positive_text, return_tensors="pt")
negative_input = tokenizer(negative_text, return_tensors="pt")

with torch.no_grad():
    out_positive = model(**input.to(device))
    out_negative = model(**negative_input.to(device))

# take the last position of each input: the distribution of the first generated token
first_generated_probabilities_positive = torch.nn.functional.softmax(out_positive.logits[0, -1, :], dim=-1)
first_generated_probabilities_negative = torch.nn.functional.softmax(out_negative.logits[0, -1, :], dim=-1)

# sort positive (ascending order, so the most probable token comes last)
sorted_first_generated_probabilities_positive = torch.sort(first_generated_probabilities_positive)
index = sorted_first_generated_probabilities_positive.indices.tolist().index(8212)
print(sorted_first_generated_probabilities_positive.values[index], index)

# sort negative (ascending order as well)
sorted_first_generated_probabilities_negative = torch.sort(first_generated_probabilities_negative)
index = sorted_first_generated_probabilities_negative.indices.tolist().index(8212)
print(sorted_first_generated_probabilities_negative.values[index], index)

# check the tokenizer length
print(len(tokenizer))
```

The outputs are:

```
tensor(0.0004) 49937  # probability and sorted index of the "_smile" token for the positive condition
tensor(2.4907e-05) 47573  # probability and sorted index of the "_smile" token for the negative condition
50257  # total number of tokens in the tokenizer
```

An important thing to mention: I'm using greedy decoding, so at each step the most probable token is generated. So what does the printed data mean in this case? It means that after applying CFG with a coefficient of 5.0, the most probable token was one whose probability was only about 0.04% under the positive condition and about 0.0025% under the negative condition (it was not even in the top-300 tokens for either).
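Because `torch.sort` sorts in ascending order by default, the printed index counts how many tokens are less probable, so the rank from the top can be recovered with one line of arithmetic. The helper below is a hypothetical illustration and assumes there are no ties in probability:

```python
def rank_from_top(ascending_index: int, vocab_size: int) -> int:
    """1-based rank of a token counted from the most probable one,
    given its position in an ascending sort (assumes no ties)."""
    return vocab_size - ascending_index


vocab_size = 50257  # len(tokenizer) printed above
print(rank_from_top(49937, vocab_size))  # positive condition -> 320
print(rank_from_top(47573, vocab_size))  # negative condition -> 2684
```

So "_smile" was only the 320th most probable token under the positive prompt and the 2684th under the negative one.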

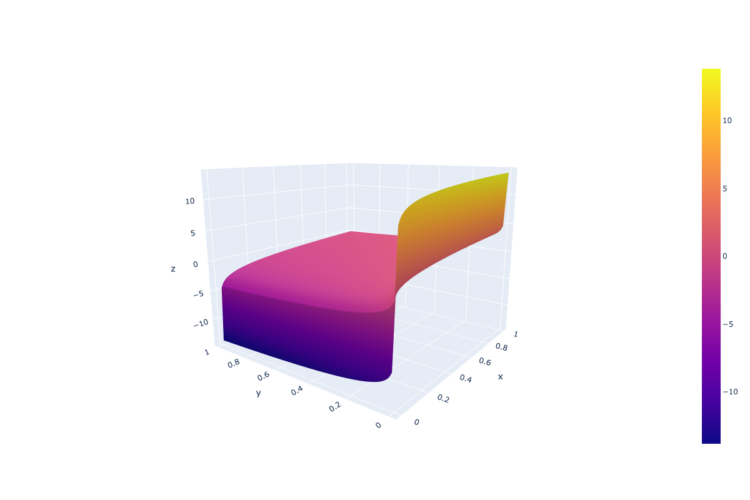

Why does that actually happen? Imagine a token that has a low probability under both conditions: under the positive condition its probability is very low, for example P < 1e-5, while under the negative condition it is even lower, P → 0. In this case, the logarithm of the first probability is a large negative number, while the logarithm of the second tends to minus infinity. Their difference is therefore a large positive number, so the token receives a high score after applying a CFG coefficient (guidance scale) greater than 1. This follows from the range of the "guidance_scale * (scores - unconditional_logits)" component, where "scores" and "unconditional_logits" are obtained via log_softmax: the difference of two log-probabilities is unbounded.
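The effect can be reproduced on a made-up 3-token vocabulary (these are toy probabilities, not GPT2 values). Token 2 is rare under both conditions but far rarer under the negative one. The sketch compares the log_softmax-based formula with the same formula applied to plain softmax probabilities (the fix proposed later in this article); the function names are illustrative.

```python
import math


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def cfg_log_space(p_cond, p_uncond, s):
    """CFG on log-probabilities, as in the log_softmax-based code above."""
    return [math.log(q) + s * (math.log(p) - math.log(q)) for p, q in zip(p_cond, p_uncond)]


def cfg_prob_space(p_cond, p_uncond, s):
    """The same formula applied to softmax probabilities instead."""
    return [q + s * (p - q) for p, q in zip(p_cond, p_uncond)]


# Toy distributions: token 0 is the most probable under both conditions
p_cond = [0.6, 0.3999, 1e-4]
p_uncond = [0.5, 0.5 - 1e-9, 1e-9]

s = 5.0
print("log-space winner:", argmax(cfg_log_space(p_cond, p_uncond, s)))    # 2
print("prob-space winner:", argmax(cfg_prob_space(p_cond, p_uncond, s)))  # 0
```

In log space, token 2 scores about 36.8 because log(1e-4) - log(1e-9) ≈ 11.5 gets multiplied by 5, while token 0 scores about 0.22; in probability space the difference for token 2 is below 1e-4, so token 0 stays on top.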

From the image above we can see that such CFG doesn't treat probabilities equally: very low probabilities can get unexpectedly high scores because of the logarithm component.

In general, what the artefacts look like depends on the model, tuning, prompts and other factors, but their nature is always the same: a low-probability token gets a high score after applying CFG.

The solution to the issue can be very simple: as mentioned before, the cause is the logarithm component, so let's just remove it. Doing that, we align text CFG with diffusion-model CFG, which operates on plain model-predicted scores (not gradients, in fact, as described in section 3.2 of the original image-CFG paper), while preserving the probability formulation of the text-CFG paper.

The updated implementation requires only tiny changes to the "UnbatchedClassifierFreeGuidanceLogitsProcessor" class, which can be applied where the model is initialized, in the following way:

```python
import torch
from transformers.generation.logits_process import UnbatchedClassifierFreeGuidanceLogitsProcessor


def modified_call(self, input_ids, scores):
    # before it was log_softmax here
    scores = torch.nn.functional.softmax(scores, dim=-1)
    if self.guidance_scale == 1:
        return scores

    logits = self.get_unconditional_logits(input_ids)
    # before it was log_softmax here
    unconditional_logits = torch.nn.functional.softmax(logits[:, -1], dim=-1)
    scores_processed = self.guidance_scale * (scores - unconditional_logits) + unconditional_logits
    return scores_processed


# Replace the original method on the class (monkey patching)
UnbatchedClassifierFreeGuidanceLogitsProcessor.__call__ = modified_call
```

The new range of the "guidance_scale * (scores - unconditional_logits)" component, where "scores" and "unconditional_logits" are obtained via plain softmax:
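Since the plot is not reproduced here, the bounds can be sketched directly. Let p be the conditional softmax probability of a token, q its unconditional (or negative) probability, and γ = guidance_scale ≥ 1:

$$p, q \in [0, 1] \;\Rightarrow\; \gamma\,(p - q) \in [-\gamma, \gamma], \qquad q + \gamma\,(p - q) = \gamma p - (\gamma - 1)\,q \in [1 - \gamma,\ \gamma]$$

$$p, q \in (0, 1] \;\Rightarrow\; \gamma\,(\log p - \log q) \in (-\infty, +\infty)$$

With softmax outputs the correction for any single token is bounded by γ, whereas with log_softmax outputs a token with q → 0 can receive an arbitrarily large score.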

To show that this update works, let's repeat the previous experiments with the updated "UnbatchedClassifierFreeGuidanceLogitsProcessor". With CFG coefficients of 3.0 and 5.0, the GPT2 model returns the outputs below (I'm printing only the old and new CFG-powered outputs, because the "Positive" and "Negative" outputs stay the same as before: the change has no effect on text generation without CFG):

```
# Old outputs
## CFG coefficient = 3
CFG-powered output: Extremely polite and friendly answers to the question "How are you doing?" are: 1. Have you ever been to a movie show? 2. Have you ever been to a live performance? 3. Have you ever been to a live performance? 4. Have you ever been to a live performance? 5. Have you ever been to a live performance? 6. Have you ever been to a live performance? 7

## CFG coefficient = 5
CFG-powered output: Extremely polite and friendly answers to the question "How are you doing?" are: 1. smile, 2. smile, 3. smile, 4. smile, 5. smile, 6. smile, 7. smile, 8. smile, 9. smile, 10. smile, 11. smile, 12. smile, 13. smile, 14. smile exting.

# New outputs (after updating the CFG formula)
## CFG coefficient = 3
CFG-powered output: Extremely polite and friendly answers to the question "How are you doing?" are: 1. "I am doing nice," 2. "I am doing nice," 3. "I am doing nice."

## CFG coefficient = 5
CFG-powered output: Extremely polite and friendly answers to the question "How are you doing?" are: 1. "Good, I am feeling fairly good." 2. "I am feeling fairly good." 3. "You feel fairly good." 4. "I am feeling fairly good." 5. "I am feeling fairly good." 6. "I am feeling fairly good." 7. "I am feeling
```

The same positive changes were observed during inference of the custom fine-tuned Llama3.1-8B-Instruct model I mentioned earlier:

Before (CFG, guidance scale=3):

"Hello! you don't have personal name. you're an interface to provide language understanding"

After (CFG, guidance scale=3):

"Hello! I don't have a personal name, but you can call me Assistant. How can I help you today?"

Separately, I tested the model's performance on the benchmarks and on the automatic tests I used during the NeurIPS 2024 Privacy Challenge, and the performance was good in both (in fact, the results I reported in the previous post were obtained with the updated CFG formula; more information is in my arXiv paper). As mentioned before, the automatic tests were based on the number of personal data phrases generated in the answers and the accuracy on the MMLU-Pro dataset evaluated with an LLM judge.

The performance didn't deteriorate on the tests, while the text quality improved according to the manual checks: none of the described artefacts were found.

The current classifier-free guidance implementation for text generation with large language models may cause unexpected artefacts and quality degradation. I say "may" because the artefacts depend on the model, the prompts and other factors. In this article I described my experience and the issues I faced with CFG-enhanced inference. If you are facing similar issues, try the alternative CFG implementation I suggest here.