Hands on When it comes to AI inferencing, the faster you can generate a response, the better – and over the past few weeks, we've seen a number of announcements from chip upstarts claiming mind-bogglingly high numbers.

Most recently, Cerebras claimed it had achieved an inference milestone, generating 969 tokens/sec in Meta's 405 billion parameter behemoth – 539 tokens/sec at the model's full 128K context window.

In the smaller Llama 3.1 70B model, Cerebras reported even higher performance, topping 2,100 tokens/sec. Not far behind at 1,665 tokens/sec is AI chip startup Groq.

These numbers far exceed anything that's possible with GPUs alone. Artificial Analysis's Llama 3.1 70B API leaderboard shows even the fastest GPU-based offerings top out at around 120 tokens/sec, with conventional IaaS providers closer to 30.

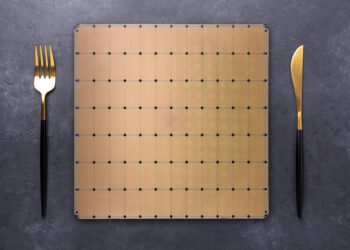

Some of this is down to the fact that neither Cerebras' nor Groq's chips are GPUs. They're purpose-built AI accelerators that take advantage of large banks of SRAM to overcome the bandwidth bottlenecks normally associated with inference.

However, that doesn't account for such a large jump. Cerebras and Groq have previously shown Llama 3.1 70B performance of around 450 and 250 tokens/sec, respectively.

Instead, the leap in performance is possible thanks to a technique called speculative decoding.

A cheat code for performance

If you're not familiar with the concept of speculative decoding, don't worry. The technique is actually quite simple and involves using a smaller draft model – say Llama 3.1 8B – to generate the initial output, while a larger model – like Llama 3.1 70B or 405B – acts as a fact checker in order to preserve accuracy.

When successful, research suggests the technique can speed up token generation by anywhere from 2x to 3x, while real-world applications have shown upwards of a 6x improvement.

You can think of this draft model a bit like a personal assistant who's an expert typist. They can respond to emails a lot faster, and so long as their prediction is accurate, all you – in this analogy the big model – have to do is click send. If they don't get it right on the odd email, you can step in and correct it.

The result of using speculative decoding is, at least on average, higher throughput because the draft model requires fewer resources – both in terms of TOPS or FLOPS and memory bandwidth. What's more, because the big model is still checking the results, the benchmarkers at Artificial Analysis claim there's effectively no loss in accuracy compared to just running the full model.
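To make the draft-and-verify loop concrete, here is a minimal Python sketch. It assumes greedy decoding and uses toy stand-in "models" that map a context to the next token. The names speculative_generate, plain_generate, target_model and draft_model are illustrative and do not come from Llama.cpp or any vendor's stack. Real runners check all drafted tokens in a single batched pass of the big model and use a sampling-aware acceptance rule, neither of which is modelled here.

```python
from typing import Callable, List, Tuple

Token = int
NextToken = Callable[[List[Token]], Token]  # greedy "model": context -> next token


def plain_generate(target: NextToken, prompt: List[Token], n: int) -> List[Token]:
    """Baseline: the big model produces every token itself."""
    out = list(prompt)
    for _ in range(n):
        out.append(target(out))
    return out[len(prompt):]


def speculative_generate(
    target: NextToken,
    draft: NextToken,
    prompt: List[Token],
    max_new_tokens: int,
    draft_len: int = 4,
) -> Tuple[List[Token], int, int]:
    """Greedy speculative decoding; returns (new_tokens, n_drafted, n_accepted)."""
    out = list(prompt)
    n_drafted = n_accepted = 0
    while len(out) - len(prompt) < max_new_tokens:
        # 1. The cheap draft model guesses a short run of tokens.
        guesses: List[Token] = []
        for _ in range(draft_len):
            guesses.append(draft(out + guesses))
        n_drafted += len(guesses)

        # 2. The big model checks the guesses in order (looped here for clarity).
        for guess in guesses:
            expected = target(out)
            if guess == expected:
                out.append(guess)      # accepted draft token
                n_accepted += 1
            else:
                out.append(expected)   # rejected: keep the big model's token
                break                  # discard the rest of this draft
        else:
            # Every guess was accepted; the check also yields one extra token.
            out.append(target(out))
    return out[len(prompt):len(prompt) + max_new_tokens], n_drafted, n_accepted


# Toy stand-ins: the target counts upward modulo 10, the draft slips after a 6.
def target_model(ctx: List[Token]) -> Token:
    return (ctx[-1] + 1) % 10


def draft_model(ctx: List[Token]) -> Token:
    return 0 if ctx[-1] == 6 else (ctx[-1] + 1) % 10


tokens, n_drafted, n_accept = speculative_generate(target_model, draft_model, [0], 20)
print(tokens == plain_generate(target_model, [0], 20))
print(f"drafted={n_drafted} accepted={n_accept}")
```

Because the big model has the final say on every token, the output in this sketch is identical to what it would have produced alone. Only the amount of work handed to the draft model changes.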

Try it for yourself

With all of that out of the way, we can move on to testing speculative decoding for ourselves. Speculative decoding is supported in a number of popular model runners, but for the purposes of this hands on we'll be using Llama.cpp.

This is not intended to be a guide for installing and configuring Llama.cpp. The good news is getting it running is relatively straightforward and there are even some pre-built packages available for macOS, Windows, and Linux – which you can find here.

That said, for best performance with your specific hardware, we always recommend compiling the latest release manually. You can find more information on compiling Llama.cpp here.

Once you've got Llama.cpp deployed, we can spin up a new server using speculative decoding. Start by locating the llama-server executable in your preferred terminal emulator.

Next we'll pull down our models. We'll be using a pair of 8-bit quantized GGUF models from Hugging Face to keep things simple. For our draft model, we'll use Llama 3.2 1B and for our main model, we'll use Llama 3.1 8B – which will require a little under 12GB of vRAM or system memory to run.

If you're on macOS or Linux you can use wget to pull down the models.

wget https://huggingface.co/bartowski/Meta-Llama-3.1-8B-Instruct-GGUF/resolve/main/Meta-Llama-3.1-8B-Instruct-Q8_0.gguf
wget https://huggingface.co/bartowski/Llama-3.2-1B-Instruct-GGUF/resolve/main/Llama-3.2-1B-Instruct-Q8_0.gguf

Next, we can test out speculative decoding by running the following command. Don't worry, we'll go over each parameter in detail in a minute.

./llama-speculative -m Meta-Llama-3.1-8B-Instruct-Q8_0.gguf -md Llama-3.2-1B-Instruct-Q8_0.gguf -c 4096 -cd 4096 -ngl 99 -ngld 99 --draft-max 16 --draft-min 4 -n 128 -p "Who was the first prime minister of Britain?"

Note: Windows users will want to replace ./llama-speculative with llama-speculative.exe. If you aren't using GPU acceleration, you'll also want to remove -ngl 99 and -ngld 99.

A few seconds after entering our prompt, our answer will appear, along with a readout showing the generation rate and how many tokens were drafted by the small model versus how many were accepted by the big one.

encoded 9 tokens in 0.033 seconds, speed: 269.574 t/s
decoded 139 tokens in 0.762 seconds, speed: 182.501 t/s
...
n_draft = 16
n_predict = 139
n_drafted = 208
n_accept = 125
accept = 60.096%

The higher the acceptance rate, the higher the generation rate will be. In this case, we're using fairly low parameter count models – particularly for the draft model – which may explain why the accept rate is so low.

However, even with an acceptance rate of 60 percent, we're still seeing a pretty sizable uplift in performance at 182 tokens/sec. Using Llama 3.1 8B without speculative decoding enabled, we saw performance closer to 90–100 tokens/sec.
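As a quick sanity check on those figures, the acceptance rate and the rough speedup can be recomputed from the readout. This is a small sketch using only the numbers reported above. The variable names are ours, and the 90 to 100 tokens/sec baseline is the approximate range quoted in the text rather than a precise measurement.

```python
# Figures taken from the readout and the baseline range quoted in the text.
n_drafted = 208        # tokens proposed by the draft model
n_accept = 125         # drafted tokens the main model accepted
spec_rate = 182.501    # tokens/sec with speculative decoding
baseline_low, baseline_high = 90.0, 100.0  # tokens/sec without it (approximate)

accept_rate = n_accept / n_drafted
speedup_min = spec_rate / baseline_high
speedup_max = spec_rate / baseline_low

print(f"acceptance rate: {accept_rate:.3%}")                 # acceptance rate: 60.096%
print(f"speedup: {speedup_min:.2f}x to {speedup_max:.2f}x")  # speedup: 1.83x to 2.03x
```

In other words, on this hardware the small draft model roughly doubled the generation rate even though four in ten of its guesses were thrown away.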

So what's going on in this command?

- ./llama-speculative specifies that we want to use speculative decoding.

- -m and -md set the path to the main (big) and draft (small) models, respectively.

- -c and -cd set the context window for the main and draft models, respectively.

- -ngl 99 and -ngld 99 tell Llama.cpp to offload all the layers of our main and draft models to the GPU.

- --draft-max and --draft-min set the maximum and minimum number of tokens the draft model should generate at a time.

- --draft-p-min sets the minimum probability of speculative decoding taking place.

- -n sets the maximum number of tokens to output.

- -p is where we enter our prompt in quotes.
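If you'd rather script these runs than retype the flags, the parameters above map onto an argument list fairly directly. The following is a hedged sketch: speculative_args is a hypothetical helper of our own, not part of Llama.cpp, and it only uses the flags already described. Passing gpu_layers=None drops -ngl and -ngld for CPU-only runs.

```python
import shlex
from typing import List, Optional


def speculative_args(
    main_model: str,
    draft_model: str,
    prompt: str,
    ctx: int = 4096,
    gpu_layers: Optional[int] = 99,   # None means no GPU offload flags
    draft_max: int = 16,
    draft_min: int = 4,
    n_predict: int = 128,
    binary: str = "./llama-speculative",  # llama-speculative.exe on Windows
) -> List[str]:
    """Build the argument list for the llama-speculative command shown above."""
    args = [binary, "-m", main_model, "-md", draft_model,
            "-c", str(ctx), "-cd", str(ctx)]
    if gpu_layers is not None:
        args += ["-ngl", str(gpu_layers), "-ngld", str(gpu_layers)]
    args += ["--draft-max", str(draft_max), "--draft-min", str(draft_min),
             "-n", str(n_predict), "-p", prompt]
    return args


args = speculative_args(
    "Meta-Llama-3.1-8B-Instruct-Q8_0.gguf",
    "Llama-3.2-1B-Instruct-Q8_0.gguf",
    "Who was the first prime minister of Britain?",
)
print(shlex.join(args))  # shell-quoted form of the command
```

The resulting list can be handed to subprocess.run(args, check=True) once the binary and both model files are in the working directory.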

You can find a full breakdown of available parameters by running:

./llama-speculative --help

If you'd like to use speculative decoding in a project, you can also spin up an OpenAI-compatible API server using the following:

./llama-server -m Meta-Llama-3.1-8B-Instruct-Q8_0.gguf -md Llama-3.2-1B-Instruct-Q8_0.gguf -c 4096 -cd 4096 -ngl 99 -ngld 99 --draft-max 8 --draft-min 4 --draft-p-min 0.9 --host 0.0.0.0 --port 8087

This will expose your API server on port 8087, where you can interact with it just like any other OpenAI-compatible API server. This example is provided as a proof of concept. In a production setting you'll likely want to set an API key and limit access via your firewall.

As a side note here, we also saw a modest performance uplift when including --sampling-seq k to prioritize Top-K sampling, but your mileage may vary.

A full list of llama-server parameters can be found by running:

./llama-server --help

With the server up and running, you can now point your application or a front-end like Open WebUI to interact with the server. For more information on setting up the latter, check out our guide on retrieval augmented generation here.
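For a sense of what pointing an application at the server looks like, here is a minimal client sketch using only Python's standard library. It assumes the server from the command above is reachable on localhost port 8087 and answers on the usual OpenAI-style /v1/chat/completions route. The build_chat_request and ask helpers and the "local" model name are placeholders of our own, and no API key is sent because the example server doesn't set one.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8087"  # assumed host; port matches --port 8087


def build_chat_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": "local",  # placeholder; the server runs the model it was started with
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the text of the first choice."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, calling ask() with a prompt should return the model's reply as a string. The speculative decoding itself happens entirely server-side, so the client code is the same as for any other OpenAI-compatible endpoint.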

Why speculate?

Speculative decoding is by no means new. The technique was discussed at least as far back as November 2022 – not long after ChatGPT triggered the AI arms race.

However, with monolithic models growing ever larger, speculative decode offers a means to run large monolithic models like Llama 3.1 405B more efficiently without compromising on accuracy.

While Meta's 405B foundation model might be tiny compared to OpenAI's GPT4 – which is said to be roughly 1.7 trillion parameters in size – it's still an incredibly difficult model to run at high throughputs.

At full resolution, achieving a generation rate of 25 tokens a second would require in excess of 810GB of vRAM and more than 20 TB/sec of memory bandwidth. Achieving higher performance would require additional levels of parallelism, which means more GPUs or accelerators.
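Those figures can be reproduced with some back-of-the-envelope arithmetic. The sketch below rests on two assumptions of ours: that "full resolution" means 16-bit weights (2 bytes per parameter), and that generating each token requires streaming every weight from memory once, ignoring the key-value cache and any batching. The function names are illustrative.

```python
def weights_gb(params_billion: float, bytes_per_param: int = 2) -> float:
    """Memory needed for the weights alone, in GB (assumes 16-bit weights)."""
    return params_billion * bytes_per_param


def bandwidth_tb_per_s(params_billion: float, tokens_per_sec: float) -> float:
    """Memory bandwidth needed if every token reads all the weights once."""
    return weights_gb(params_billion) * tokens_per_sec / 1000


print(weights_gb(405))              # 810 (GB of weights)
print(bandwidth_tb_per_s(405, 25))  # 20.25 (TB/sec)
```

The same assumption explains why throughput is so closely tied to memory bandwidth: doubling the token rate doubles the amount of weight data that has to be moved every second.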

Using speculative decoding with something like Llama 3.1 70B as the draft model, you'd need another 140GB of memory on top of the 810, but in theory could achieve generation rates well over 100 tokens/sec – until a mispredict happens, at which point your throughput will crater.

And this is one of the challenges associated with speculative decoding: It's tremendously effective at bolstering throughput, but in our testing, latency can be sporadic and inconsistent.

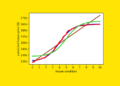

We can actually see this in Cerebras' previously published results for Llama 3.1 70B when using speculative decode. We don't know what the draft model is, but we're fairly certain it's the 8B variant based on previous benchmarks. As you can see, there's a huge spike in performance when speculative decode is implemented, but the variation in latency is still huge – jumping up and down by 400 or more tokens.

To be perfectly clear, at 1,665 to 2,100 tokens/sec for 70B and up to 969 tokens/sec for 405B, there's a good chance the output will finish generating before you ever notice the hiccup.

As for why you'd need an inference engine capable of generating hundreds or thousands of tokens in the blink of an eye, Cerebras actually does a nice job of illustrating the problem.

In this slide, Cerebras makes its case for why faster inference and lower latency are important for supporting CoT and agentic AI applications going forward

If you've tried out OpenAI's o1, you may have noticed it's a lot slower than previous models. This is because the model employs a chain of thought (CoT) tree to break down the task into individual steps, evaluate the responses, identify errors or gaps in logic, and correct them before presenting an answer to the user.

Using CoT as part of the generative AI process is believed to improve the accuracy and reliability of answers and mitigate errant behavior or hallucinations. However, the consequence of the approach is it's a lot slower.

The next evolution of this is to combine CoT methods with multiple domain-specific models in agentic workflows. According to Cerebras, such approaches require on the order of 100x as many steps and computational power. So, the faster you can churn out tokens, the better you can hide the added latency – or that's the idea anyway. ®

Editor's Note: The Register was provided an RTX 6000 Ada Generation graphics card by Nvidia, an Arc A770 GPU by Intel, and a Radeon Pro W7900 DS by AMD to support stories like this. None of these vendors had any input as to the content of this or other articles.
