Batching your inputs together can lead to substantial savings without compromising on performance

If you use LLMs to annotate or process larger datasets, chances are that you don’t even realize you are wasting a lot of input tokens. As you repeatedly call an LLM to process text snippets or entire documents, your task instructions and static few-shot examples are repeated for every input example. Just like neatly stacking dishes saves space, batching inputs together can result in substantial savings.

Assume you want to tag a smaller document corpus of 1000 single-page documents, with instructions and few-shot examples that are about half a page long. Annotating each document separately would cost you about 1M input tokens. However, if you annotated ten documents in the same call, you’d save about 300K input tokens (or 30%) because the instructions don’t have to be repeated for every document! As we’ll show in the example below, this can often happen with minimal performance loss (or even a performance gain), especially when you optimize your prompt alongside.
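The arithmetic behind that estimate can be checked with a few lines of Python. This is a minimal sketch: the per-page token counts (667 for a document, 333 for the shared instructions) are assumed round numbers chosen to match the "about 1M tokens" scenario, and `input_tokens` is a hypothetical helper, not part of any library.

```python
import math

def input_tokens(n_docs: int, doc_tokens: int, shared_tokens: int, batch_size: int = 1) -> int:
    """Total input tokens when `batch_size` documents share one copy of the instructions."""
    n_calls = math.ceil(n_docs / batch_size)  # one copy of the instructions per call
    return n_docs * doc_tokens + n_calls * shared_tokens

# Assumed sizes: roughly 667 tokens per page, instructions half as long.
single = input_tokens(1000, 667, 333, batch_size=1)
batched = input_tokens(1000, 667, 333, batch_size=10)
saved = single - batched

print(f"one document per call:  {single:,} tokens")
print(f"ten documents per call: {batched:,} tokens")
print(f"saved: {saved:,} tokens ({saved / single:.0%})")
```

With these assumed sizes, the savings come out at just under 300K tokens, in line with the estimate above.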

Below I’ve plotted the savings, assuming that our average document length is D tokens and our instructions and few-shot examples have r*D tokens. The example scenario from the previous paragraph, where the instructions are half the length of the document (r = 0.5), appears in blue below. For longer shared instructions, our savings can be even higher:

The main takeaways are:

- Even with relatively short instructions (blue line), there is value in minibatching.

- It’s not necessary to use really large minibatch sizes. Most savings can be obtained with even moderate minibatch sizes (B ≤ 10).
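Both takeaways follow from a short calculation. As a sketch, assume N documents of D tokens each, shared instructions of r*D tokens, and a minibatch size B that divides N evenly. C(B) below denotes total input tokens and S(B) the fraction saved relative to one document per call; both symbols are introduced here for illustration only.

```latex
\begin{aligned}
C(B) &= N D + \frac{N}{B}\, r D \\
S(B) &= 1 - \frac{C(B)}{C(1)} = \frac{r}{1 + r}\left(1 - \frac{1}{B}\right) \\
\lim_{B \to \infty} S(B) &= \frac{r}{1 + r}
\end{aligned}
```

For r = 0.5, this gives S(10) = 0.30 against an upper bound of 1/3. The factor (1 - 1/B) does not depend on r, so B = 10 already captures 90% of the achievable savings regardless of how long the instructions are.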

Let’s turn practical with a task where we want to categorize pieces of text for further analysis. We’ll use a fun task from the Natural-Instructions benchmark where we need to annotate sentences in debates with one of four categories (value, fact, testimony or policy).

Looking at an example, we see that we get the current topic for context and then need to categorize the sentence in question.

    {
      "input": {
        "topic": "the battle for justice,equality,peaceand love is futile",
        "sentence": "What matters is what I'm personally doing to make sure that I'm filling the cup!"
      },
      "output": "Value"
    }

One question we haven’t answered yet:

How do we pick the right minibatch size?

Previous work has shown that the best minibatch size depends on the task as well as the model. We essentially have two options:

- We pick a reasonable minibatch size, let’s say 5, and hope that we don’t see any drops.

- We optimize the minibatch size along with other choices, e.g., the number of few-shot examples.

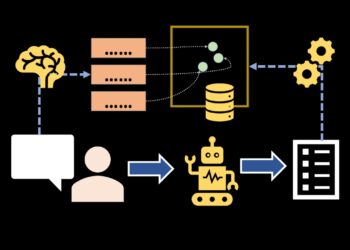

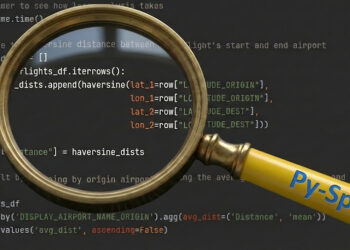

As you might have guessed, we’ll pursue option 2 here. To run our experiments, we’ll use SAMMO, a framework for LLM calling and prompt optimization.

Prompts are coded up in SAMMO as prompt programs (which are simply nested Python classes that will be called with input data). We’ll structure our task into three sections and format our minibatches as JSON.

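    # Assumed import paths; check them against your installed SAMMO version.
    from sammo.components import Output
    from sammo.dataformatters import JSONDataFormatter
    from sammo.instructions import FewshotExamples, InputData, MetaPrompt, Section

    # `task` is the loaded Natural-Instructions task dictionary (defined elsewhere).
    def prompt_program(fewshot_data, n_fewshot_examples=5, minibatch_size=1):
        return Output(
            MetaPrompt(
                [
                    # Section 1: the task definition, shared by every input.
                    Section("Instructions", task["Definition"]),
                    # Section 2: static few-shot examples, also shared.
                    Section(
                        "Examples",
                        FewshotExamples(
                            fewshot_data, n_fewshot_examples
                        ),
                    ),
                    # Section 3: the inputs of the current minibatch.
                    Section("Output in same format as above", InputData()),
                ],
                data_formatter=JSONDataFormatter(),
                render_as="markdown",
            ).with_extractor(on_error="empty_result"),
            minibatch_size=minibatch_size,
            on_error="empty_result",
        )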

Running this without minibatching and using five few-shot examples, we get an accuracy of 0.76 and have to pay for 58255 input tokens.
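To make concrete what minibatching does to a prompt, here is a hand-rolled sketch in plain Python. It is not how SAMMO implements it: `build_minibatch_prompt` and `parse_minibatch_response` are hypothetical helpers, and the `id` field used to match outputs back to inputs is an assumption of this sketch.

```python
import json

def build_minibatch_prompt(instructions, fewshot, batch):
    """Render one prompt that covers every input in `batch`."""
    examples = [
        {"id": i, "input": ex["input"], "output": ex["output"]}
        for i, ex in enumerate(fewshot)
    ]
    inputs = [{"id": i, "input": item} for i, item in enumerate(batch)]
    return "\n\n".join([
        "# Instructions\n" + instructions,
        "# Examples\n" + json.dumps(examples, indent=2),
        "# Output in same format as above\n" + json.dumps(inputs, indent=2),
    ])

def parse_minibatch_response(response_text, batch_size):
    """Map a JSON list of {"id", "output"} records back to input order.

    Positions the model skipped or garbled stay None, so a partial failure
    does not shift the remaining labels.
    """
    labels = [None] * batch_size
    try:
        records = json.loads(response_text)
    except json.JSONDecodeError:
        return labels
    if not isinstance(records, list):
        return labels
    for rec in records:
        if isinstance(rec, dict) and isinstance(rec.get("id"), int) and 0 <= rec["id"] < batch_size:
            labels[rec["id"]] = rec.get("output")
    return labels

fewshot = [{"input": {"topic": "t", "sentence": "s"}, "output": "Value"}]
batch = [{"topic": "t1", "sentence": "s1"}, {"topic": "t2", "sentence": "s2"}]
prompt = build_minibatch_prompt("Label each sentence.", fewshot, batch)
```

The instructions and examples appear once per prompt no matter how many inputs are in `batch`, which is where the savings come from. Matching by `id` rather than by position is a defensive choice, since models occasionally drop or reorder items in longer batches.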

Let’s now explore how minibatching affects costs and performance. Since minibatching reduces the total input costs, we can now use some of those savings to add more few-shot examples! We can study these trade-offs by setting up a search space in SAMMO:

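    # Assumed import path; check it against your installed SAMMO version.
    from sammo import search_op

    def search_space(fewshot_data):
        # Candidate values for each choice; every combination is one configuration.
        minibatch_size = search_op.one_of([1, 5, 10], name="minibatch_size")
        n_fewshot_examples = search_op.one_of([5, 20], name="n_fewshot")
        return prompt_program(fewshot_data, n_fewshot_examples, minibatch_size)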

Running this shows us the full gamut of trade-offs:

    setting                                    objective  costs                              parse_errors
    -----------------------------------------  ---------  ---------------------------------  ------------
    * {'minibatch_size': 1, 'n_fewshot': 5}    0.76       {'input': 58255, 'output': 5817}   0.0
      {'minibatch_size': 1, 'n_fewshot': 20}   0.76       {'input': 133355, 'output': 6234}  0.0
      {'minibatch_size': 5, 'n_fewshot': 5}    0.75       {'input': 15297, 'output': 5695}   0.0
      {'minibatch_size': 5, 'n_fewshot': 20}   0.77       {'input': 30317, 'output': 5524}   0.0
      {'minibatch_size': 10, 'n_fewshot': 5}   0.73       {'input': 9928, 'output': 5633}    0.0
    * {'minibatch_size': 10, 'n_fewshot': 20}  0.77       {'input': 17438, 'output': 5432}   0.0

So, even with 20 few-shot examples, we save about 70% of input costs ((58255 - 17438) / 58255), all while maintaining overall accuracy! As an exercise, you can implement your own objective to automatically factor in costs, or include different ways of formatting the data in the search space.
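One simple way to factor in costs is to pick, after the fact, the cheapest configuration whose accuracy stays within a tolerance of the best one. The sketch below does this in plain Python over the numbers from the table above. It is a post-hoc selection, not a SAMMO objective, and `pick_cheapest_within` and its `tolerance` parameter are hypothetical names.

```python
# Accuracy and input-token counts copied from the results table above.
results = [
    {"minibatch_size": 1, "n_fewshot": 5, "accuracy": 0.76, "input_tokens": 58255},
    {"minibatch_size": 1, "n_fewshot": 20, "accuracy": 0.76, "input_tokens": 133355},
    {"minibatch_size": 5, "n_fewshot": 5, "accuracy": 0.75, "input_tokens": 15297},
    {"minibatch_size": 5, "n_fewshot": 20, "accuracy": 0.77, "input_tokens": 30317},
    {"minibatch_size": 10, "n_fewshot": 5, "accuracy": 0.73, "input_tokens": 9928},
    {"minibatch_size": 10, "n_fewshot": 20, "accuracy": 0.77, "input_tokens": 17438},
]

def pick_cheapest_within(results, tolerance=0.01):
    """Return the cheapest configuration within `tolerance` of the best accuracy."""
    best = max(r["accuracy"] for r in results)
    eligible = [r for r in results if r["accuracy"] >= best - tolerance]
    return min(eligible, key=lambda r: r["input_tokens"])

baseline = results[0]  # no minibatching, five few-shot examples
choice = pick_cheapest_within(results)
savings = 1 - choice["input_tokens"] / baseline["input_tokens"]

print(choice)
print(f"input tokens saved vs. baseline: {savings:.1%}")
```

With the default tolerance, this selects the minibatch size of 10 with 20 few-shot examples. Loosening the tolerance to 0.05 would instead pick the cheapest row overall (minibatch size 10, five examples), trading four points of accuracy for fewer tokens.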

Implicit in all of this is that (i) we have enough input examples that use the shared instructions and (ii) we have some flexibility regarding latency. The first assumption is met in many annotation scenarios, but obviously doesn’t hold for one-off queries. In annotation or other offline processing tasks, latency is also not super critical, as throughput matters most. However, if your task is to provide a user with the answer as quickly as possible, it might make more sense to issue B parallel calls than one call with B input examples.
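For the latency-sensitive case, issuing the B calls concurrently can be sketched with Python’s standard thread pool. Here `call_llm` is a hypothetical stand-in for whatever client you use, and `annotate_in_parallel` is likewise an illustrative name. Every call repeats the full instructions, so you pay the unbatched token cost in exchange for lower wall-clock time.

```python
from concurrent.futures import ThreadPoolExecutor

def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real (network-bound) LLM client call."""
    return f"label for: {prompt}"

def annotate_in_parallel(prompts, max_workers=8):
    """Issue one call per input concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order even if calls finish out of order.
        return list(pool.map(call_llm, prompts))

answers = annotate_in_parallel(["doc 1", "doc 2", "doc 3"])
print(answers)
```

Threads are a reasonable fit here because the work is dominated by waiting on the network. Provider rate limits may cap how large `max_workers` can usefully be.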