Image by Author | ChatGPT

The Data Quality Bottleneck Every Data Scientist Knows

You’ve just received a new dataset. Before diving into analysis, you need to understand what you’re working with: How many missing values? Which columns are problematic? What’s the overall data quality score?

Most data scientists spend 15-30 minutes manually exploring each new dataset: loading it into pandas, running .info(), .describe(), and .isnull().sum(), then creating visualizations to understand missing data patterns. This routine gets tedious when you’re evaluating multiple datasets daily.

What if you could paste any CSV URL and get a professional data quality report in under 30 seconds? No Python environment setup, no manual coding, no switching between tools.

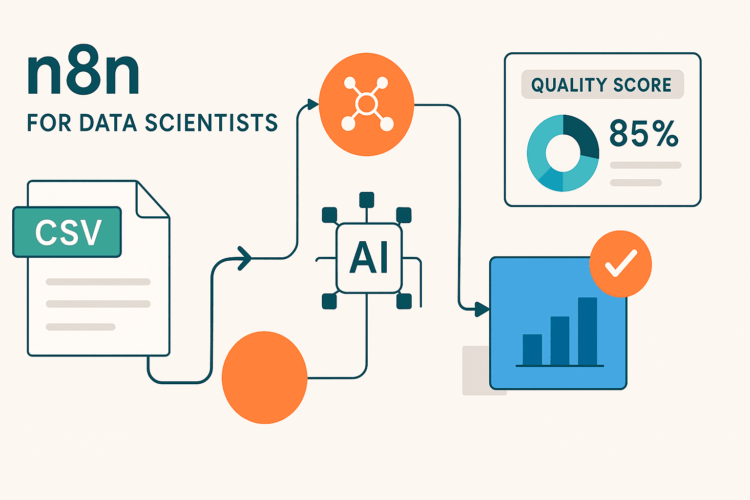

The Solution: A 4-Node n8n Workflow

n8n (pronounced “n-eight-n”) is an open-source workflow automation platform that connects different services, APIs, and tools through a visual, drag-and-drop interface. While most people associate workflow automation with business processes like email marketing or customer support, n8n can also help automate data science tasks that traditionally require custom scripting.

Unlike standalone Python scripts, n8n workflows are visual, reusable, and easy to modify. You can connect data sources, perform transformations, run analyses, and deliver results, all without switching between different tools or environments. Each workflow consists of “nodes” that represent different actions, connected together to create an automated pipeline.

Our automated data quality analyzer consists of four connected nodes:

- Manual Trigger – Starts the workflow when you click “Execute”

- HTTP Request – Fetches any CSV file from a URL

- Code Node – Analyzes the data and generates quality metrics

- HTML Node – Creates a beautiful, professional report

Building the Workflow: Step-by-Step Implementation

Prerequisites

- n8n account (free 14-day trial at n8n.io)

- Our pre-built workflow template (JSON file provided)

- Any CSV dataset accessible via public URL (we’ll provide test examples)

Step 1: Import the Workflow Template

Rather than building from scratch, we’ll use a pre-configured template that includes all the analysis logic:

- Download the workflow file

- Open n8n and click “Import from File”

- Select the downloaded JSON file – all four nodes will appear automatically

- Save the workflow with your preferred name

The imported workflow contains four connected nodes with all the complex parsing and analysis code already configured.
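Before importing, it can be reassuring to confirm that the downloaded file really lists the four expected nodes. The following is a minimal sketch, assuming the export is a JSON object with a top-level `nodes` list whose entries carry `name` and `type` keys. The function name `summarize_workflow` and the sample document are illustrative stand-ins, not the contents of the actual template.

```python
import json


def summarize_workflow(workflow_json: str) -> list[tuple[str, str]]:
    """Return (name, type) for every node in an exported workflow.

    Assumes a top-level "nodes" list; entries missing a key get "?".
    """
    workflow = json.loads(workflow_json)
    return [
        (node.get("name", "?"), node.get("type", "?"))
        for node in workflow.get("nodes", [])
    ]


# Hypothetical stand-in for the downloaded template file.
sample = json.dumps({
    "name": "Data Quality Analyzer",
    "nodes": [
        {"name": "Manual Trigger", "type": "n8n-nodes-base.manualTrigger"},
        {"name": "HTTP Request", "type": "n8n-nodes-base.httpRequest"},
        {"name": "Code", "type": "n8n-nodes-base.code"},
        {"name": "HTML", "type": "n8n-nodes-base.html"},
    ],
})

for name, node_type in summarize_workflow(sample):
    print(f"{name}: {node_type}")
```

To check a real file, read it with `open(path, encoding="utf-8").read()` and pass the text to `summarize_workflow`.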

Step 2: Understanding Your Workflow

Let’s walk through what each node does:

Manual Trigger Node: Starts the analysis when you click “Execute Workflow.” Perfect for on-demand data quality checks.

HTTP Request Node: Fetches CSV data from any public URL. Pre-configured to handle most standard CSV formats and return the raw text data needed for analysis.

Code Node: The analysis engine, which includes robust CSV parsing logic to handle common variations in delimiter usage, quoted fields, and missing value formats. It automatically:

- Parses CSV data with intelligent field detection

- Identifies missing values in multiple formats (null, empty, “N/A”, etc.)

- Calculates quality scores and severity ratings

- Generates specific, actionable recommendations

HTML Node: Transforms the analysis results into a beautiful, professional report with color-coded quality scores and clean formatting.
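To make the Code node’s job more concrete, here is a rough sketch of missing-value detection over CSV text. It is written in Python for illustration, while the template’s Code node uses JavaScript, so treat it as an approximation of the idea rather than the template’s actual logic. The token list in `MISSING_TOKENS` and both function names are assumptions.

```python
import csv
import io
from typing import Optional

# Assumed set of tokens treated as missing; the real template may use a different list.
MISSING_TOKENS = {"", "null", "na", "n/a", "nan", "none"}


def is_missing(value: Optional[str]) -> bool:
    """True when a cell is absent, empty, or a common missing-value token."""
    return value is None or value.strip().lower() in MISSING_TOKENS


def count_missing(csv_text: str) -> tuple[int, dict[str, int]]:
    """Return (row_count, {column: missing_count}) for CSV text with a header row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = {name: 0 for name in (reader.fieldnames or [])}
    rows = 0
    for row in reader:
        rows += 1
        for name in counts:
            # Short rows yield None for absent cells, which also counts as missing.
            if is_missing(row.get(name)):
                counts[name] += 1
    return rows, counts


# Quoted field with an embedded comma, plus three styles of missing value.
sample = 'name,age,city\n"Doe, Jane",34,Oslo\nBob,N/A,\nEve,,null\n'
rows, counts = count_missing(sample)
print(rows, counts)
```

Using the standard `csv` module handles quoted fields with embedded commas, which a naive `split(",")` would break on.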

Step 3: Customizing for Your Data

To analyze your own dataset:

- Click on the HTTP Request node

- Replace the URL with your CSV dataset URL:

- Current: https://raw.githubusercontent.com/fivethirtyeight/data/master/college-majors/recent-grads.csv

- Your data: https://your-domain.com/your-dataset.csv

- Save the workflow

That’s it! The analysis logic automatically adapts to different CSV structures, column names, and data types.
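If you want to confirm that a URL returns plain CSV text before pasting it into the node, a small check outside n8n can help. This is a minimal sketch using only the Python standard library. The function name `fetch_csv_text`, the 30-second timeout, and the UTF-8 fallback are assumptions, not settings taken from the template.

```python
import urllib.request


def fetch_csv_text(url: str, timeout: float = 30.0) -> str:
    """Download a CSV and return it as text, roughly what the HTTP Request node passes on."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        raw = response.read()
        # Fall back to UTF-8 when the server does not declare a charset.
        charset = response.headers.get_content_charset() or "utf-8"
    # Replace undecodable bytes rather than failing on a stray character.
    return raw.decode(charset, errors="replace")
```

For example, `fetch_csv_text("https://your-domain.com/your-dataset.csv").splitlines()[0]` should print the header row if the URL points at a raw CSV file rather than an HTML page.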

Step 4: Execute and View Results

- Click “Execute Workflow” in the top toolbar

- Watch the nodes process – each will show a green checkmark when complete

- Click on the HTML node and select the “HTML” tab to view your report

- Copy the report or take screenshots to share with your team

The entire process takes under 30 seconds once your workflow is set up.

Understanding the Results

The color-coded quality score gives you an immediate assessment of your dataset:

- 95-100%: Perfect (or near perfect) data quality, ready for immediate analysis

- 85-94%: Excellent quality with minimal cleaning needed

- 75-84%: Good quality, some preprocessing required

- 60-74%: Fair quality, moderate cleaning needed

- Below 60%: Poor quality, significant data work required

Note: This implementation uses a straightforward missing-data-based scoring system. Advanced quality metrics like data consistency, outlier detection, or schema validation could be added in future versions.
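The bands above translate naturally into a small lookup. The sketch below pairs them with one plausible missing-data score, the percentage of populated cells. That formula is an assumption: the article does not spell out the template’s exact calculation, so this version may not reproduce the scores the workflow reports. Treating each band’s lower bound as inclusive is likewise a choice made here.

```python
def completeness_score(total_cells: int, missing_cells: int) -> float:
    """Percentage of cells that are populated (assumed scoring rule)."""
    if total_cells <= 0:
        raise ValueError("total_cells must be positive")
    return 100.0 * (total_cells - missing_cells) / total_cells


def quality_band(score: float) -> str:
    """Map a percentage score to the quality bands, lower bounds inclusive."""
    if score >= 95:
        return "Perfect (or near perfect)"
    if score >= 85:
        return "Excellent"
    if score >= 75:
        return "Good"
    if score >= 60:
        return "Fair"
    return "Poor"


# Illustrative numbers only: 200 cells, 30 of them missing.
score = completeness_score(total_cells=200, missing_cells=30)
print(f"{score:.1f}% -> {quality_band(score)}")
```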

Here’s what the final report looks like:

Our example analysis shows a 99.42% quality score, indicating the dataset is largely complete and ready for analysis with minimal preprocessing.

Dataset Overview:

- 173 Total Records: A small but sufficient sample size ideal for quick exploratory analysis

- 21 Total Columns: A manageable number of features that allows focused insights

- 4 Columns with Missing Data: A few select fields contain gaps

- 17 Complete Columns: The majority of fields are fully populated
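The overview figures can all be derived from a row count and a per-column tally of missing values. Here is a short sketch of that derivation. The function name `dataset_overview`, the dictionary keys, and the placeholder column names are hypothetical, and the per-column counts are made up to match the shape of the example report, not taken from the real dataset.

```python
def dataset_overview(row_count: int, missing_by_column: dict[str, int]) -> dict[str, int]:
    """Summarize record, column, and completeness counts for the report header."""
    incomplete = sum(1 for count in missing_by_column.values() if count > 0)
    return {
        "total_records": row_count,
        "total_columns": len(missing_by_column),
        "columns_with_missing": incomplete,
        "complete_columns": len(missing_by_column) - incomplete,
    }


# Placeholder column names and counts shaped like the example report
# (21 columns, 4 of them with gaps); these are not the real dataset's values.
missing_by_column = {f"col_{i}": (1 if i < 4 else 0) for i in range(21)}
print(dataset_overview(173, missing_by_column))
```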

Testing with Different Datasets

To see how the workflow handles varying data quality patterns, try these example datasets:

- Iris Dataset (https://raw.githubusercontent.com/uiuc-cse/data-fa14/gh-pages/data/iris.csv) typically shows a perfect score (100%) with no missing values.

- Titanic Dataset (https://raw.githubusercontent.com/datasciencedojo/datasets/master/titanic.csv) demonstrates a more realistic 67.6% score due to strategic missing data in columns like Age and Cabin.

- Your Own Data: Upload to GitHub and use the raw file URL, or use any public CSV URL
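When your data lives on GitHub, the workflow needs the raw file URL rather than the repository page URL. The helper below is a small sketch of that conversion, assuming the common `github.com/<owner>/<repo>/blob/<branch>/<path>` page layout. The function name `to_raw_github_url` is hypothetical, and any URL that does not match that layout is returned unchanged.

```python
from urllib.parse import urlsplit, urlunsplit


def to_raw_github_url(url: str) -> str:
    """Convert a github.com 'blob' page URL to its raw.githubusercontent.com form.

    URLs that are not GitHub blob pages are returned unchanged.
    """
    parts = urlsplit(url)
    segments = parts.path.strip("/").split("/")
    # Expected shape: <owner>/<repo>/blob/<branch>/<path...>
    if parts.netloc != "github.com" or len(segments) < 5 or segments[2] != "blob":
        return url
    owner, repo, _, *rest = segments
    raw_path = "/" + "/".join([owner, repo, *rest])
    return urlunsplit(("https", "raw.githubusercontent.com", raw_path, "", ""))


print(to_raw_github_url(
    "https://github.com/datasciencedojo/datasets/blob/master/titanic.csv"
))
```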

Based on your quality score, you can determine next steps: 95% or above means proceed directly to exploratory data analysis, 85-94% suggests minimal cleaning of the identified problematic columns, 75-84% indicates moderate preprocessing work is needed, 60-74% requires planning targeted cleaning strategies for multiple columns, and below 60% suggests evaluating whether the dataset is suitable for your analysis goals or whether significant data work is justified. The workflow adapts automatically to any CSV structure, allowing you to quickly assess multiple datasets and prioritize your data preparation efforts.

Next Steps

1. Email Integration

Add a Send Email node to automatically deliver reports to stakeholders by connecting it after the HTML node. This transforms your workflow into a distribution system where quality reports are automatically sent to project managers, data engineers, or clients whenever you analyze a new dataset. You can customize the email template to include executive summaries or specific recommendations based on the quality score.

2. Scheduled Analysis

Replace the Manual Trigger with a Schedule Trigger to automatically analyze datasets at regular intervals, ideal for monitoring data sources that update frequently. Set up daily, weekly, or monthly checks on your key datasets to catch quality degradation early. This proactive approach helps you identify data pipeline issues before they impact downstream analysis or model performance.

3. Multiple Dataset Analysis

Modify the workflow to accept a list of CSV URLs and generate a comparative quality report across multiple datasets simultaneously. This batch processing approach is invaluable when evaluating data sources for a new project or conducting regular audits across your organization’s data inventory. You can create summary dashboards that rank datasets by quality score, helping prioritize which data sources need immediate attention versus those ready for analysis.
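The ranking step of such a comparative report is simple once each dataset has a score. Here is a minimal sketch that sorts the lowest-scoring datasets to the top, using the three scores reported earlier in this article. The function name `rank_by_quality` and the dictionary keys are illustrative.

```python
def rank_by_quality(scores: dict[str, float]) -> list[tuple[str, float]]:
    """Sort datasets from lowest to highest score so the worst surface first."""
    return sorted(scores.items(), key=lambda item: item[1])


# Scores as reported in this article; the labels are just dictionary keys.
scores = {"recent-grads": 99.42, "iris": 100.0, "titanic": 67.6}
for name, score in rank_by_quality(scores):
    print(f"{name:<14}{score:6.2f}%")
```

Sorting ascending puts the datasets that need attention first, which suits a triage-style dashboard.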

4. Different File Formats

Extend the workflow to handle other data formats beyond CSV by modifying the parsing logic in the Code node. For JSON data, adapt the data extraction to handle nested structures and arrays, while Excel files can be processed by adding a preprocessing step to convert XLSX to CSV format. Supporting multiple formats makes your quality analyzer a universal tool for any data source in your organization, regardless of how the data is stored or delivered.
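For JSON input, one way to reuse a column-based missing-value analysis is to flatten each nested record into a single-level row first. The sketch below shows that idea in Python for illustration. The dotted key naming, the decision to serialize lists as JSON strings, and the treatment of empty lists as missing are all assumptions you may want to change.

```python
import json


def flatten(record: dict, prefix: str = "") -> dict:
    """Flatten nested dicts into dotted keys; lists are kept as JSON strings."""
    flat = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        elif isinstance(value, list):
            # Empty lists become None so they can count as missing downstream.
            flat[name] = json.dumps(value) if value else None
        else:
            flat[name] = value
    return flat


records = json.loads('[{"id": 1, "user": {"name": "Ann", "email": null}, "tags": []}]')
print([flatten(r) for r in records])
```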

Conclusion

This n8n workflow demonstrates how visual automation can streamline routine data science tasks while maintaining the technical depth that data scientists require. By leveraging your existing coding background, you can customize the JavaScript analysis logic, extend the HTML reporting templates, and integrate with your preferred data infrastructure, all within an intuitive visual interface.

The workflow’s modular design makes it particularly valuable for data scientists who understand both the technical requirements and business context of data quality assessment. Unlike rigid no-code tools, n8n allows you to modify the underlying analysis logic while providing visual clarity that makes workflows easy to share, debug, and maintain. You can start with this foundation and gradually add sophisticated features like statistical anomaly detection, custom quality metrics, or integration with your existing MLOps pipeline.

Most importantly, this approach bridges the gap between data science expertise and organizational accessibility. Your technical colleagues can modify the code while non-technical stakeholders can execute workflows and interpret results immediately. This combination of technical sophistication and user-friendly execution makes n8n ideal for data scientists who want to scale their impact beyond individual analysis.

Born in India and raised in Japan, Vinod brings a global perspective to data science and machine learning education. He bridges the gap between emerging AI technologies and practical implementation for working professionals. Vinod focuses on creating accessible learning pathways for complex topics like agentic AI, performance optimization, and AI engineering. He focuses on practical machine learning implementations and mentoring the next generation of data professionals through live sessions and personalized guidance.
