AI is evolving quickly, and software engineers no longer have to memorize syntax. However, thinking like an architect and understanding the technology that lets systems run securely at scale is becoming increasingly valuable.

I also want to reflect on a year in my role as an AI Solutions Engineer at Cisco. I work with customers every day across different verticals (healthcare, financial services, manufacturing, law firms), and they’re all trying to answer mostly the same set of questions:

- What’s our AI strategy?

- What use cases actually fit our data?

- Cloud vs. on-prem vs. hybrid?

- How much will it cost, not just today, but at scale?

- How do we secure it?

These are the real, practical constraints that show up immediately when you try to operationalize AI beyond a POC.

Recently, we added a Cisco UCS C845A to one of our labs. It has 2x NVIDIA RTX PRO 6000 Blackwell GPUs, 3.1TB of NVMe, ~127 allocatable CPU cores, and 754GB of RAM. I decided to build a shared internal platform on top of it, giving teams a consistent, self-service environment to run experiments, validate ideas, and build hands-on GPU experience.

I deployed the platform as a Single Node OpenShift (SNO) cluster and layered a multi-tenant GPUaaS experience on top. Users reserve capacity through a calendar UI, and the system provisions an isolated ML environment prebuilt with PyTorch/CUDA, JupyterLab, VS Code, and more. Inside that environment, users can run on-demand inference, iterate on model training and fine-tuning, and prototype production-grade microservices.

This post walks through the architecture: how scheduling decisions are made, how tenants are isolated, and how the platform manages itself. The decisions that went into this lab platform are the same ones any organization faces once it’s serious about AI in production.

This is the foundation for enterprise AI at scale. Multi-agent architectures, self-service experimentation, secure multi-tenancy, cost-predictable GPU compute: all of it starts with getting the platform layer right.

Initial Setup

Before there’s a platform, there’s a bare-metal server and a blank screen.

Bootstrapping the Node

The node ships with no operating system. When you power it on, you’re dropped into a UEFI shell. For OpenShift, installation typically starts in the Red Hat Hybrid Cloud Console via the Assisted Installer. The Assisted Installer handles cluster configuration through a guided setup flow and, once complete, generates a discovery ISO: a bootable RHEL CoreOS image preconfigured for your environment. Map the ISO to the server as virtual media through the Cisco IMC, set the boot order, and power on. The node phones home to the console, and you can kick off the installation. The node writes RHCOS to NVMe and bootstraps. Within a few hours you have a running cluster.

This workflow assumes internet connectivity, pulling images from Red Hat’s registries during installation. That’s not always an option. Many of the customers I work with operate in air-gapped environments where nothing touches the public internet. The process there is different: generate ignition configs locally, download the OpenShift release images and operator bundles ahead of time, mirror everything into a local Quay registry, and point the installation at that. Both paths get you to the same place. The assisted installation is much easier; the air-gapped path is what production looks like in regulated industries.

Configuring GPUs with the NVIDIA GPU Operator

Once the GPU Operator is installed (this happens automatically with the Assisted Installer), I configured how the two RTX PRO 6000 Blackwell GPUs are presented to workloads through two ConfigMaps in the nvidia-gpu-operator namespace.

The first, custom-mig-config, defines physical partitioning. Here it’s a mixed strategy: GPU 0 is partitioned into four 1g.24gb MIG slices (~24GB of dedicated memory each), while GPU 1 stays whole for workloads that need the full ~96GB. MIG partitioning is real hardware isolation: you get dedicated memory, compute units, and L2 cache per slice. Workloads see MIG instances as separate physical devices.
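To make the mixed layout concrete, here is a minimal sketch of the first ConfigMap built as a Python dict. It assumes the NVIDIA mig-parted schema (`version`, `mig-configs`, `devices`, `mig-enabled`, `mig-devices`) consumed by the GPU Operator’s MIG manager and a `config.yaml` data key; the profile name `custom-mixed` is hypothetical. Check the schema and the exact `1g.24gb` profile name against your GPU Operator version before applying anything.

```python
import json

# Hypothetical profile name. In the GPU Operator workflow the node is
# typically labeled nvidia.com/mig.config=<profile> to select it.
PROFILE = "custom-mixed"

mig_parted_config = {
    "version": "v1",
    "mig-configs": {
        PROFILE: [
            # GPU 0: four 1g.24gb slices, each with dedicated memory and compute.
            {"devices": [0], "mig-enabled": True, "mig-devices": {"1g.24gb": 4}},
            # GPU 1: MIG disabled, exposed as a whole ~96GB device.
            {"devices": [1], "mig-enabled": False},
        ]
    },
}


def slice_count(config: dict, profile: str, mig_profile: str) -> int:
    """Total MIG devices of one profile across all MIG-enabled GPU entries."""
    return sum(
        entry.get("mig-devices", {}).get(mig_profile, 0)
        for entry in config["mig-configs"][profile]
        if entry.get("mig-enabled")
    )


# The ConfigMap carries the config as text; a JSON dump is also valid YAML.
configmap = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "custom-mig-config", "namespace": "nvidia-gpu-operator"},
    "data": {"config.yaml": json.dumps(mig_parted_config, indent=2)},
}

print(slice_count(mig_parted_config, PROFILE, "1g.24gb"))  # 4
```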

The second, device-plugin-config, configures time-slicing, which lets multiple pods share the same GPU or MIG slice through rapid context switching. I set 4 replicas per whole GPU and 2 per MIG slice. This is what enables running multiple inference containers side by side within a single session.
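A matching sketch for the second ConfigMap, assuming the NVIDIA device plugin’s `sharing.timeSlicing.resources` format. The data key `mixed-timeslice` is a hypothetical name; the device plugin settings in the operator’s ClusterPolicy are what point it at this ConfigMap and key.

```python
import json


def time_slicing_config(replicas_by_resource: dict[str, int]) -> dict:
    """Build a device-plugin sharing config with one entry per resource name."""
    return {
        "version": "v1",
        "sharing": {
            "timeSlicing": {
                "resources": [
                    {"name": name, "replicas": n}
                    for name, n in replicas_by_resource.items()
                ]
            }
        },
    }


cfg = time_slicing_config({
    "nvidia.com/gpu": 4,          # each whole GPU is advertised 4 times
    "nvidia.com/mig-1g.24gb": 2,  # each MIG slice is advertised 2 times
})

# Hypothetical data key; serialized as JSON, which YAML parsers also accept.
device_plugin_configmap = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "device-plugin-config", "namespace": "nvidia-gpu-operator"},
    "data": {"mixed-timeslice": json.dumps(cfg, indent=2)},
}
```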

Foundational Storage

The 3.1TB NVMe is managed by the LVM Storage Operator (lvms-vg1 StorageClass). I created two PVCs as part of the initial provisioning: a volume backing PostgreSQL and persistent storage for OpenShift’s internal image registry.

With the OS installed, network prerequisites met (DNS, IP allocation, and all required A records, which this article doesn’t cover), GPUs partitioned, and storage provisioned, the cluster is ready for the application layer.

System Architecture

This brings us to the main topic: the system architecture. The platform separates into three planes (scheduling, control, and runtime), with the PostgreSQL database as the single source of truth.

In the platform management namespace, there are four always-on deployments:

- Portal app: a single container running the React UI and FastAPI backend

- Reconciler (controller): the control loop that continuously converges cluster state to match the database

- PostgreSQL: persistent state for users, reservations, tokens, and audit history

- Cache daemon: a node-local service that pre-stages large model artifacts and inference engines so users can start quickly (pulling a 20GB vLLM image over a corporate proxy can take hours)

A quick note on the development lifecycle, because it’s easy to overcomplicate shipping Kubernetes systems. I write and test code locally, but the images are built in the cluster using OpenShift builds (BuildConfigs) and pushed to the internal registry. The deployments themselves just point at those images.

The first time a component is deployed, I apply the manifests to create the Deployment, Service, and RBAC. After that, most changes are just building a new image in-cluster, then triggering a restart so the Deployment pulls the updated image and rolls forward:

`oc rollout restart deployment/<deployment-name> -n <namespace>`

That’s the loop: commit → in-cluster build → internal registry → restart/rollout.
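The loop can be scripted end to end. This is a minimal sketch, assuming `oc` is already logged in and that the BuildConfig, Deployment, and namespace names (`portal`, `gpuaas-platform`) are placeholders for your own. It also assumes the Deployment re-pulls its image on restart, for example via `imagePullPolicy: Always` on a moving tag. With `dry_run=True` it only prints the commands.

```python
import shlex
import subprocess


def release(build_config: str, deployment: str, namespace: str,
            dry_run: bool = True) -> list[list[str]]:
    """Print (and optionally run) the in-cluster build and rollout steps in order."""
    steps = [
        # Build a new image from the BuildConfig; stream logs and wait for completion.
        ["oc", "start-build", build_config, "-n", namespace, "--follow", "--wait"],
        # Restart so the Deployment picks up the freshly pushed image.
        ["oc", "rollout", "restart", f"deployment/{deployment}", "-n", namespace],
        # Block until the new pods are available.
        ["oc", "rollout", "status", f"deployment/{deployment}", "-n", namespace],
    ]
    for cmd in steps:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step
    return steps


# Hypothetical names; prints the three commands without executing them.
release("portal", "portal", "gpuaas-platform")
```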

The Scheduling Plane

This is the user-facing entry point. Users see the resource pool (GPUs, CPU, memory), pick a time window, choose their GPU allocation mode (more on this later), and submit a reservation.

GPUs are expensive hardware with a real cost per hour whether they’re in use or not. The reservation system treats calendar time and physical capacity as a combined constraint, the same way you’d book a conference room, except this room has 96GB of VRAM and costs considerably more per hour.

Under the hood, the system queries overlapping reservations against pool capacity, using advisory locks to prevent double booking. Essentially, it adds up reserved capacity and subtracts it from total capacity. Each reservation moves through a lifecycle: APPROVED → ACTIVE → COMPLETED, with CANCELED and FAILED as terminal states.
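A minimal sketch of that capacity arithmetic, assuming whole-GPU units only and an in-memory list instead of a PostgreSQL query. The transition table is an assumption about which moves are legal, based on the lifecycle named above. In a database version, the same check would run inside a transaction after taking a PostgreSQL advisory lock (for example `pg_advisory_xact_lock`), so two concurrent bookings cannot both pass the check.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Reservation:
    start: datetime
    end: datetime
    gpus: int
    status: str  # APPROVED, ACTIVE, COMPLETED, CANCELED, FAILED


# Statuses that still hold capacity on the calendar.
HOLDING = {"APPROVED", "ACTIVE"}

# Assumed legal transitions; COMPLETED, CANCELED, and FAILED are end states.
TRANSITIONS = {
    "APPROVED": {"ACTIVE", "CANCELED", "FAILED"},
    "ACTIVE": {"COMPLETED", "CANCELED", "FAILED"},
    "COMPLETED": set(),
    "CANCELED": set(),
    "FAILED": set(),
}


def overlaps(a_start, a_end, b_start, b_end) -> bool:
    # Half-open intervals [start, end): back-to-back bookings don't collide.
    return a_start < b_end and b_start < a_end


def fits(existing, start, end, gpus, pool_gpus) -> bool:
    """Add up capacity of overlapping holding reservations, compare to the pool."""
    reserved = sum(
        r.gpus for r in existing
        if r.status in HOLDING and overlaps(r.start, r.end, start, end)
    )
    return reserved + gpus <= pool_gpus


t0 = datetime(2025, 1, 1, 9)
booked = [Reservation(t0, t0 + timedelta(hours=2), gpus=1, status="APPROVED")]
print(fits(booked, t0 + timedelta(hours=1), t0 + timedelta(hours=3), 1, pool_gpus=1))  # False
print(fits(booked, t0 + timedelta(hours=2), t0 + timedelta(hours=4), 1, pool_gpus=1))  # True
```

Summing every overlapping reservation is conservative: two bookings that each overlap the new window but not each other are still counted together. A sweep over start and end points would compute true peak usage if that ever matters.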

The FastAPI server itself is intentionally thin. It validates input, persists the reservation, and returns. It never talks to the Kubernetes API.

The Control Plane

At the heart of the platform is the controller. It’s Python-based and runs in a continuous loop on a 30-second cadence. You can think of it like a cron job in terms of timing, but architecturally it’s a Kubernetes-style controller responsible for driving the system toward a desired state.

The database holds the desired state (reservations with time windows and resource requirements). The reconciler reads that state, compares it against what actually exists in the Kubernetes cluster, and converges the two. There are no concurrent API calls racing to mutate cluster state, just one deterministic loop making the minimal set of changes needed to reach the desired state. If the reconciler crashes, it restarts and continues exactly where it left off, because the source of truth (the desired state) remains intact in the database.
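The converge step reduces to a set difference. This sketch assumes session namespaces are the unit of desired state and injects the read and write functions so it runs without a cluster; all function names are hypothetical.

```python
import time


def plan(desired: set[str], actual: set[str]) -> dict[str, list[str]]:
    """Minimal changes that make the cluster match the database.

    desired: session namespaces that should exist (read from PostgreSQL)
    actual:  session namespaces that currently exist (read from Kubernetes)
    """
    return {
        "create": sorted(desired - actual),  # active reservation, namespace missing
        "delete": sorted(actual - desired),  # namespace present, no active reservation
    }


def reconcile(read_desired, read_actual, create_ns, delete_ns) -> dict[str, list[str]]:
    # Both sides are re-read every cycle and nothing is cached between runs,
    # so a crash-and-restart simply recomputes the same plan.
    changes = plan(read_desired(), read_actual())
    for ns in changes["delete"]:
        delete_ns(ns)
    for ns in changes["create"]:
        create_ns(ns)
    return changes


def run_forever(cycle, interval_s: float = 30.0) -> None:
    """Fixed-cadence loop; a failed cycle is logged and retried next tick."""
    while True:
        try:
            cycle()
        except Exception as exc:
            print(f"reconcile failed: {exc}")
        time.sleep(interval_s)
```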

Each reconciliation cycle evaluates four concerns, in order:

- Stop expired or canceled sessions and delete the namespace (which cascades cleanup of all resources inside it).

- Repair failed sessions and remove orphaned resources left behind by partially completed provisioning.

- Start eligible sessions when their reservation window arrives: provision, configure, and hand the workspace to the user.

- Maintain the database by expiring old tokens and enforcing audit log retention.
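One way to express the fixed ordering, sketched with hypothetical phase names. Here a failing phase is logged and the cycle moves on to the next one; that is a design choice this sketch assumes, not necessarily what the platform does.

```python
from typing import Callable


def reconcile_cycle(phases: list[tuple[str, Callable[[], None]]]) -> list[str]:
    """Run each concern in a fixed order and return the names of failed phases.

    A failure is recorded but does not stop later phases this cycle; the next
    30-second tick retries everything from the top.
    """
    failed = []
    for name, phase in phases:
        try:
            phase()
        except Exception as exc:
            failed.append(name)
            print(f"{name} failed: {exc}")
    return failed


order = []
phases = [
    ("stop_expired", lambda: order.append("stop")),        # free capacity first
    ("repair_failed", lambda: order.append("repair")),
    ("start_eligible", lambda: order.append("start")),
    ("maintain_db", lambda: order.append("maintain")),
]
print(reconcile_cycle(phases), order)  # [] ['stop', 'repair', 'start', 'maintain']
```

Putting stops before starts means a session ending at 10:00 releases its namespace in the same cycle that a 10:00 reservation is provisioned.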

Starting a session is a multi-step provisioning sequence, and every step is idempotent, meaning it’s designed to be safely re-run if interrupted midway.
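A minimal sketch of the idempotent pattern, using a dict as a stand-in for the cluster. The step list and object names are hypothetical; against a real cluster the same effect usually comes from create-or-patch logic or Kubernetes server-side apply.

```python
def ensure(store: dict, kind: str, name: str, spec: dict) -> str:
    """Create or update one object; calling it repeatedly is harmless."""
    key = (kind, name)
    current = store.get(key)
    if current == spec:
        return "unchanged"
    store[key] = dict(spec)
    return "created" if current is None else "updated"


def provision_session(store: dict, namespace: str) -> list[str]:
    # Hypothetical steps. Each converges instead of blindly creating, so a
    # re-run after a crash midway skips what already exists and finishes the rest.
    steps = [
        ("Namespace", namespace, {"labels": {"app.kubernetes.io/managed-by": "gpuaas"}}),
        ("ResourceQuota", f"{namespace}/quota", {"hard": {"requests.nvidia.com/gpu": "4"}}),
        ("RoleBinding", f"{namespace}/admin", {"role": "admin"}),
        ("Deployment", f"{namespace}/workspace", {"image": "workspace:latest"}),
    ]
    return [ensure(store, kind, name, spec) for kind, name, spec in steps]


cluster = {}
print(provision_session(cluster, "sess-1"))  # all 'created'
print(provision_session(cluster, "sess-1"))  # all 'unchanged'
```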

The reconciler is the only component that talks to the Kubernetes API.

Garbage collection is also baked into the same loop. At a slower cadence (~5 minutes), the reconciler sweeps for cross-namespace orphans such as stale RBAC bindings, leftover OpenShift security context entries, namespaces stuck in Terminating, or namespaces that exist in the cluster but have no matching database record.
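A sketch of the orphan check and the slower cadence. It assumes the ~5-minute sweep is implemented as every tenth 30-second cycle, and that platform namespaces carry a label (`app.kubernetes.io/managed-by: gpuaas` is hypothetical) so system namespaces are never candidates. Detecting namespaces stuck in Terminating would additionally need their status and finalizers, which this sketch leaves out.

```python
SWEEP_EVERY = 10  # 10 cycles x 30 s = ~5 minutes (assumed tick-based cadence)
PLATFORM_LABEL = ("app.kubernetes.io/managed-by", "gpuaas")  # hypothetical


def should_sweep(cycle: int) -> bool:
    return cycle % SWEEP_EVERY == 0


def find_orphans(cluster_namespaces: dict[str, dict], db_namespaces: set[str]) -> list[str]:
    """Platform-labeled namespaces with no matching database record.

    cluster_namespaces maps namespace name -> labels.
    """
    key, value = PLATFORM_LABEL
    return sorted(
        name for name, labels in cluster_namespaces.items()
        if labels.get(key) == value and name not in db_namespaces
    )


namespaces = {
    "sess-a": {"app.kubernetes.io/managed-by": "gpuaas"},
    "sess-b": {"app.kubernetes.io/managed-by": "gpuaas"},
    "openshift-etcd": {},  # unlabeled system namespace, never touched
}
print(find_orphans(namespaces, {"sess-a"}))  # ['sess-b']
```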

The design assumption throughout is that failure is normal. For example, a power supply failure on the node took the cluster down mid-session. When it came back, the reconciler resumed its loop, detected the state discrepancies, and self-healed without manual intervention.

The Runtime Plane

When a reservation window begins, the user opens a browser and lands in a full VS Code workspace (code-server) preloaded with the entire AI/ML stack, plus kubectl access within their session namespace.

Popular inference engines such as vLLM, Ollama, TGI, and Triton are already cached on the node, so deploying a model server is a one-liner that starts in seconds. Each session gets 600GB of persistent NVMe-backed storage, including a 20GB home directory for notebooks and scripts and a 300GB model cache.

Each session is a fully isolated Kubernetes namespace: its own blast-radius boundary, with dedicated resources and zero visibility into any other tenant’s environment. The reconciler provisions namespace-scoped RBAC granting full admin rights within that boundary, so users can create and delete pods, deployments, services, routes, secrets, or whatever the workload requires. But there’s no cluster-level access. Users can read their own ResourceQuota to see their remaining budget, but they can’t modify it.

ResourceQuota enforces a hard ceiling on everything. A runaway training job can’t OOM the node. A rogue container can’t fill the NVMe. LimitRange injects sane defaults into every container automatically, so users can kubectl run without specifying resource requests. A proxy ConfigMap is injected into the namespace so user-deployed containers get corporate network egress without manual configuration.
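The two guardrail objects can be sketched as plain manifests. The CPU, memory, and default values are hypothetical; `600G` echoes the per-session storage figure above.

```python
def session_guardrails(namespace: str, cpu: str, memory: str, storage: str,
                       default_cpu: str = "500m", default_memory: str = "1Gi"):
    """Return (ResourceQuota, LimitRange) manifests for one session namespace."""
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "session-quota", "namespace": namespace},
        "spec": {"hard": {
            "requests.cpu": cpu, "limits.cpu": cpu,
            "requests.memory": memory, "limits.memory": memory,
            "requests.storage": storage,  # caps the sum of PVC requests
        }},
    }
    limit_range = {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "session-defaults", "namespace": namespace},
        "spec": {"limits": [{
            "type": "Container",
            # Applied to any container that omits its own requests/limits.
            "defaultRequest": {"cpu": default_cpu, "memory": default_memory},
            "default": {"cpu": default_cpu, "memory": default_memory},
        }]},
    }
    return quota, limit_range


quota, limit_range = session_guardrails("sess-42", cpu="16", memory="64Gi", storage="600G")
```

The LimitRange matters for more than convenience: once a ResourceQuota tracks CPU and memory, the API server rejects pods that don’t specify those values, so the injected defaults are what let a bare `kubectl run` through.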

Users deploy what they want (inference servers, databases, custom services), and the platform handles the guardrails.

When the reservation window ends, the reconciler deletes the namespace and everything inside it.

GPU Scheduling

Now the fun part: GPU scheduling, and actually running hardware-accelerated workloads in a multi-tenant environment.

MIG & Time-slicing

We covered the MIG configuration in the initial setup, but it’s worth revisiting from a scheduling perspective. GPU 0 is partitioned into four 1g.24gb MIG slices, each with ~24GB of dedicated memory, enough for most 7B–14B parameter models. GPU 1 stays whole for workloads that need the full ~96GB of VRAM: model training, inference on 70B+ models (quantized, since 70B parameters at FP16 alone need ~140GB), or anything that simply doesn’t fit in a slice.

The reservation system tracks these as distinct resource types. Users book either nvidia.com/gpu (whole) or nvidia.com/mig-1g.24gb (up to four slices). The ResourceQuota for each session hard-denies the other type: if you reserved a MIG slice, you can’t request a whole GPU, even if one is sitting idle. In a mixed MIG environment, letting a session accidentally consume the wrong resource type would break the capacity math for every other reservation on the calendar.
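A sketch of the per-session GPU quota, assuming quota is counted in time-sliced slots (4 per whole GPU, 2 per MIG slice, matching the device plugin settings described earlier). The reserved type gets its slot count and the other type is pinned to `0`, which is how a quota hard-denies it. Kubernetes quotas extended resources such as GPUs only through the `requests.` prefix.

```python
WHOLE = "nvidia.com/gpu"
MIG = "nvidia.com/mig-1g.24gb"
REPLICAS = {WHOLE: 4, MIG: 2}   # time-sliced slots per physical unit
MAX_UNITS = {WHOLE: 1, MIG: 4}  # one whole GPU (GPU 1), four slices (GPU 0)


def gpu_quota(reserved_type: str, units: int) -> dict[str, str]:
    """ResourceQuota `hard` entries for GPU resources in one session."""
    if reserved_type not in REPLICAS:
        raise ValueError(f"unknown GPU resource: {reserved_type}")
    if not 1 <= units <= MAX_UNITS[reserved_type]:
        raise ValueError(f"{reserved_type} allows 1-{MAX_UNITS[reserved_type]} units")
    # Deny every GPU type by default, then open up only the reserved one.
    hard = {f"requests.{name}": "0" for name in REPLICAS}
    hard[f"requests.{reserved_type}"] = str(units * REPLICAS[reserved_type])
    return hard


print(gpu_quota(MIG, 2))
# {'requests.nvidia.com/gpu': '0', 'requests.nvidia.com/mig-1g.24gb': '4'}
```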

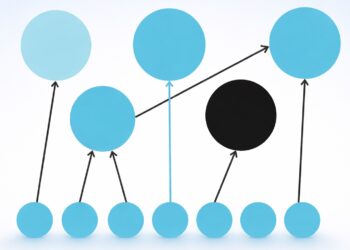

Time-slicing allows multiple pods to share the same physical GPU or MIG slice through rapid context switching. The NVIDIA device plugin advertises N “virtual” GPUs per physical device.

In our configuration, one whole GPU appears as four schedulable resources, and each MIG slice appears as two.
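Worked through for this node as a short sketch: the schedulable count per resource is physical units times time-slice replicas.

```python
def advertised(physical: dict[str, int], replicas: dict[str, int]) -> dict[str, int]:
    """Schedulable count per resource = physical units x time-slice replicas."""
    return {name: count * replicas.get(name, 1) for name, count in physical.items()}


node = advertised(
    physical={"nvidia.com/gpu": 1, "nvidia.com/mig-1g.24gb": 4},  # GPU 1 whole, GPU 0 sliced
    replicas={"nvidia.com/gpu": 4, "nvidia.com/mig-1g.24gb": 2},
)
print(node)  # {'nvidia.com/gpu': 4, 'nvidia.com/mig-1g.24gb': 8}
```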

In practice, a user reserves one physical GPU and can run up to four concurrent GPU-accelerated containers within their session: a vLLM instance serving gpt-oss, an Ollama instance with Mistral, a TGI server running a reranker, and a custom service orchestrating across all three.

Two Allocation Modes

At reservation time, users choose how their GPU budget is initially split between the workspace and user-deployed containers.

Interactive ML: The workspace pod gets a GPU (or MIG slice) attached directly. The user opens Jupyter, imports PyTorch, and has immediate CUDA access for training, fine-tuning, or debugging. Additional GPU pods can still be spawned via time-slicing, but the workspace occupies one of the virtual slots.

Inference Containers: The workspace is lightweight, with no GPU attached. All time-sliced capacity is available for user-deployed containers. With a whole-GPU reservation, that’s four full slots for inference workloads.
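The two modes can be sketched as a split of the session’s slot budget. The mode strings are hypothetical; the GPU is attached to the workspace through container `limits`, which is how Kubernetes requests extended resources.

```python
def split_slots(slots: int, mode: str) -> dict[str, int]:
    """Divide a session's time-sliced GPU slots between workspace and containers."""
    if mode == "interactive":
        # The workspace pod holds one slot for direct CUDA access in Jupyter.
        return {"workspace": 1, "containers": slots - 1}
    if mode == "inference":
        # Lightweight workspace; every slot stays free for user-deployed containers.
        return {"workspace": 0, "containers": slots}
    raise ValueError(f"unknown mode: {mode}")


def workspace_gpu_limits(mode: str, gpu_resource: str) -> dict[str, int]:
    """Container `limits` entry for the workspace pod's GPU, if any."""
    return {gpu_resource: 1} if mode == "interactive" else {}


print(split_slots(4, "interactive"))  # {'workspace': 1, 'containers': 3}
print(split_slots(4, "inference"))    # {'workspace': 0, 'containers': 4}
```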

There’s a real throughput trade-off with time-slicing: workloads share VRAM and compute bandwidth. For development, testing, and validating multi-service architectures, which is exactly what this platform is for, it’s the right trade-off. For latency-sensitive production inference where every millisecond of p99 matters, you’d use dedicated slices 1:1 or whole GPUs.

GPU “Tokenomics”

One of the first questions in the introduction was: how much will it cost, not just today, but at scale? To answer that, you have to start with what the workload actually looks like in production.

What Real Deployments Look Like

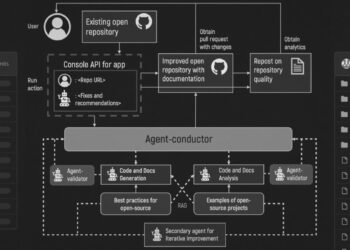

When I work with customers on their inference architecture, nobody is running a single model behind a single endpoint. The pattern that keeps emerging is a fleet of models sized to the task: a 7B parameter model handling simple classification and extraction, running comfortably on a MIG slice; a 14B model doing summarization and general-purpose chat; a 70B model for complex reasoning and multi-step tasks; and maybe a 400B model for the hardest problems, where quality is non-negotiable. Requests get routed to the appropriate model based on complexity, latency requirements, or cost constraints. You’re not paying for 70B-class compute on a task a 7B can handle.
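A toy version of that routing, assuming a static task-to-tier table plus an optional cap for cost or latency. Model names, task labels, and the fallback rule are all hypothetical.

```python
from typing import Optional

TIERS = ["llm-7b", "llm-14b", "llm-70b", "llm-400b"]  # smallest to largest

ROUTES = {
    "classification": "llm-7b",
    "extraction": "llm-7b",
    "summarization": "llm-14b",
    "chat": "llm-14b",
    "reasoning": "llm-70b",
    "multi_step": "llm-70b",
}
FALLBACK = "llm-400b"  # hardest or unrecognized requests go to the largest model


def route(task: str, max_model: Optional[str] = None) -> str:
    """Pick the default tier for a task, clamped to an optional ceiling."""
    model = ROUTES.get(task, FALLBACK)
    if max_model is not None and TIERS.index(model) > TIERS.index(max_model):
        model = max_model  # a cost or latency cap wins over the default tier
    return model


print(route("extraction"))                     # llm-7b
print(route("reasoning", max_model="llm-14b"))  # llm-14b
```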

In multi-agent systems, this gets more interesting. Agents subscribe to a message bus and sit idle until called upon: a pub-sub pattern where context is passed to the agent at invocation time and the pod is already warm. There’s no cold-start penalty, because the model is loaded and the container is running. An orchestrator agent evaluates the inbound request, routes it to specialist agents (retrieval, code generation, summarization, validation), collects the results, and synthesizes a response. Four or five models collaborate on a single user request, each running in its own container within the same namespace and communicating over the internal Kubernetes network.
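A minimal in-process sketch of the orchestrator pattern, using `asyncio` queues as stand-ins for message bus topics and coroutines as stand-ins for agent containers. Agent names and handlers are hypothetical, and the synthesis step is just a join.

```python
import asyncio


async def agent(name: str, inbox: asyncio.Queue, handler) -> None:
    """A warm specialist: idles on its queue until a task arrives."""
    while True:
        context, reply = await inbox.get()
        reply.set_result(f"{name}: {handler(context)}")
        inbox.task_done()


async def orchestrate(request: str, inboxes: dict[str, asyncio.Queue], plan: list[str]) -> str:
    loop = asyncio.get_running_loop()
    futures = []
    for specialist in plan:
        fut = loop.create_future()
        # Context is handed over at invocation time, not preloaded into the agent.
        await inboxes[specialist].put((request, fut))
        futures.append(fut)
    results = await asyncio.gather(*futures)  # preserves plan order
    return " | ".join(results)  # stand-in for the synthesis step


async def main() -> str:
    handlers = {
        "retrieval": lambda c: f"docs for '{c}'",
        "summarization": lambda c: f"summary of '{c}'",
        "validation": lambda c: "ok",
    }
    inboxes = {name: asyncio.Queue() for name in handlers}
    workers = [asyncio.create_task(agent(n, inboxes[n], h)) for n, h in handlers.items()]
    answer = await orchestrate("q3 revenue", inboxes, ["retrieval", "summarization", "validation"])
    for w in workers:
        w.cancel()  # shut down the idle agents
    return answer


print(asyncio.run(main()))
```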

Network policies add another dimension. Not every agent should have access to every tool. Your retrieval agent can talk to the vector database. Your code execution agent can reach a sandboxed runtime. But the summarization agent has no business touching either; it receives context from the orchestrator and returns text. Network policies enforce these boundaries at the cluster level, so tool access is governed by infrastructure, not application logic.
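A sketch of such a policy as a manifest builder. The pod labels (`app: retrieval-agent`, `app: vector-db`, `app: summarizer`) are hypothetical. An empty egress list with `policyTypes: [Egress]` denies all outbound connections, while replies to inbound traffic are still allowed.

```python
def allow_egress_only_to(namespace: str, agent: str, targets: list[str]) -> dict:
    """NetworkPolicy letting pods labeled app=<agent> reach only app=<target> pods."""
    egress = [{"to": [{"podSelector": {"matchLabels": {"app": t}}}]} for t in targets]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{agent}-egress", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": agent}},
            "policyTypes": ["Egress"],
            "egress": egress,  # [] means no outbound connections at all
        },
    }


retrieval = allow_egress_only_to("sess-1", "retrieval-agent", ["vector-db"])
summarizer = allow_egress_only_to("sess-1", "summarizer", [])
```

A real policy usually also needs an egress rule for cluster DNS; check which port your DNS pods listen on before writing it.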

This is the workload profile the platform was designed for. MIG slicing lets you right-size GPU allocation per model; a 7B doesn’t need 96GB of VRAM. Time-slicing lets multiple agents share the same physical device. Namespace isolation keeps tenants separated while agents within a session communicate freely. The architecture directly supports these patterns.

Quantifying It

To move from architecture to business case, I developed a tokenomics framework that reduces infrastructure cost to a single comparable unit: cost per million tokens. Each token carries its amortized share of hardware capital (including workload mix and redundancy), maintenance, power, and cooling. The numerator is your total annual cost. The denominator is how many tokens you actually process, which, for fixed hardware, is entirely a function of utilization.

Utilization is the most powerful lever on per-token cost. It doesn’t reduce what you spend; the hardware and power bills are fixed. What it does is spread those fixed costs across more processed tokens. A platform running at 80% utilization produces tokens at roughly half the unit cost of one at 40%. Same infrastructure, dramatically different economics. This is why the reservation system, MIG partitioning, and time-slicing matter beyond UX: they exist to keep expensive GPUs processing tokens for as many available hours as possible.
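The core arithmetic fits in a few lines. This sketch assumes straight-line amortization and a single blended peak-throughput figure, and it leaves out the workload-mix and redundancy factors the full framework includes. All input numbers are hypothetical.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def annual_cost(capex: float, years: float, maintenance: float, power_cooling: float) -> float:
    """Straight-line amortized hardware plus yearly operating costs."""
    return capex / years + maintenance + power_cooling


def cost_per_million_tokens(total_annual_cost: float, peak_tokens_per_s: float,
                            utilization: float) -> float:
    """C / (T * S * u / 1e6): fixed yearly cost spread over tokens actually processed."""
    tokens_per_year = peak_tokens_per_s * SECONDS_PER_YEAR * utilization
    return total_annual_cost / (tokens_per_year / 1e6)


c = annual_cost(capex=300_000, years=4, maintenance=20_000, power_cooling=15_000)
for u in (0.4, 0.8):
    print(f"utilization {u:.0%}: ${cost_per_million_tokens(c, 5_000, u):.3f} per 1M tokens")
```

Because the numerator doesn’t move, doubling utilization halves the unit cost in this model; in practice power draw rises somewhat with load, which is why the real-world ratio is only roughly half.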

Because the framework is algebraic, you can also solve it in the other direction. Given a known token demand and a budget, solve for the infrastructure required and immediately see whether you’re over-provisioned (burning money on idle GPUs), under-provisioned (queuing requests and degrading latency), or right-sized.
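Solving in the other direction, sketched under the same simplified assumptions: the units needed to serve a demand at a target utilization, the units a budget can pay for, and the resulting verdict. Names and figures are hypothetical.

```python
import math

SECONDS_PER_YEAR = 365 * 24 * 3600


def required_units(demand_tokens_per_year: float, tokens_per_s_per_unit: float,
                   target_utilization: float) -> int:
    """GPUs (or slices) needed to serve demand without exceeding target utilization."""
    capacity_per_unit = tokens_per_s_per_unit * SECONDS_PER_YEAR * target_utilization
    return math.ceil(demand_tokens_per_year / capacity_per_unit)


def affordable_units(budget_per_year: float, annual_cost_per_unit: float) -> int:
    return math.floor(budget_per_year / annual_cost_per_unit)


def provisioning_status(provisioned: int, required: int) -> str:
    if provisioned > required:
        return "over-provisioned"   # idle GPUs burning money
    if provisioned < required:
        return "under-provisioned"  # queued requests, degraded latency
    return "right-sized"


need = required_units(2 * 1000 * SECONDS_PER_YEAR * 0.5, tokens_per_s_per_unit=1000,
                      target_utilization=0.5)
print(need, provisioning_status(3, need))  # 2 over-provisioned
```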

For the cloud comparison, providers have already baked their utilization, redundancy, and overhead into per-token API pricing. The question becomes: at what utilization does your on-prem unit cost drop below that price? For consistent enterprise GPU demand, the kind of steady-state inference traffic these multi-agent architectures generate, on-prem wins.
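The break-even question has a closed form under the same simplified model: set the on-prem cost per million tokens equal to the cloud price and solve for utilization. The cloud price is assumed to be a single blended per-million-token rate, and the inputs are hypothetical.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def breakeven_utilization(total_annual_cost: float, peak_tokens_per_s: float,
                          cloud_price_per_million: float) -> float:
    """Solve C / (T * S * u / 1e6) = P for u."""
    full_capacity_millions = peak_tokens_per_s * SECONDS_PER_YEAR / 1e6
    return total_annual_cost / (full_capacity_millions * cloud_price_per_million)


u = breakeven_utilization(total_annual_cost=15_768, peak_tokens_per_s=1_000,
                          cloud_price_per_million=2.0)
print(f"on-prem beats the cloud price above {u:.0%} utilization")  # 25%
```

A result above 1.0 means on-prem cannot match that price even at full utilization; a result well below your expected steady-state utilization is the situation the paragraph above describes.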

Cloud token costs in multi-agent environments grow much faster than user traffic, because every user request fans out into several model calls, each billed per token.

For testing, demos, and POCs, however, cloud is cheaper.

Engineering teams often have to justify spend to finance with clear, defensible numbers. The tokenomics framework bridges that gap.

Conclusion

At the start of this post, I listed the questions I hear from customers constantly: AI strategy, use cases, cloud vs. on-prem, cost, security. All of them eventually require the same thing: a platform layer that can schedule GPU resources, isolate tenants, and give teams a self-service path from experiment to production without waiting on infrastructure.

That’s what this post walked through. Not a product and not a managed service, but an architecture built on Kubernetes, PostgreSQL, Python, and the NVIDIA GPU Operator, running on a single Cisco UCS C845A with two NVIDIA RTX PRO 6000 Blackwell GPUs in our lab. It’s a practical starting point that addresses scheduling, multi-tenancy, cost modeling, and the day-2 operational realities of keeping GPU infrastructure reliable.

This isn’t as intimidating as it looks. The tooling is mature, and you can assemble a cloud-like workflow from familiar building blocks: reserve GPU capacity from a browser, drop into a fully loaded ML workspace, and spin up inference services in seconds. The difference is where it runs: on infrastructure you own, under your operational control, with data that never leaves your four walls. In practice, the barrier to entry is often lower than leaders expect.

Scale this to multiple Cisco AI Pods and the scheduling plane, reconciler pattern, and isolation model carry over directly. The foundation is the same.

If you’re working through these same decisions (how to schedule GPUs, how to isolate tenants, how to build the business case for on-prem AI infrastructure), I’d welcome the conversation.

I’m an AI Solutions Engineer at Cisco, focused on enterprise AI infrastructure. Reach out at [email protected].